🧠 ARMIE - A Surgical Robot Arm and MR Simulator for the Real World

Mixed Reality Surgical Training with Snap Spectacles + Physical Robot Arm + Arduino Uno Q

What ARMIE Is

ARMIE is a mixed-reality surgical training system that allows students to practice procedures safely, affordably, and repeatedly.

Students operate a real robotic arm while visualizing a virtual patient through AR. All movements are captured, logged, and compared against expert demonstrations.

ARMIE is designed to augment, not replace, cadaveric training.

It provides a pre-clinical simulation layer that enables repetition, standardization, and objective skill measurement before live tissue exposure.

ARMIE combines:

- Snap Spectacles (see-through mixed reality)

- A physical robotic arm THAT WE BUILT AND DESIGNED IN A DAY (embodied motor training)

- On-device, low-latency control via Arduino

Why use a real robot arm? Why not a controller in VR?

Why a real robotic arm? We got this question a lot! The short answer: to mimic the real surgical experience. Our target users need to practice precise movements.

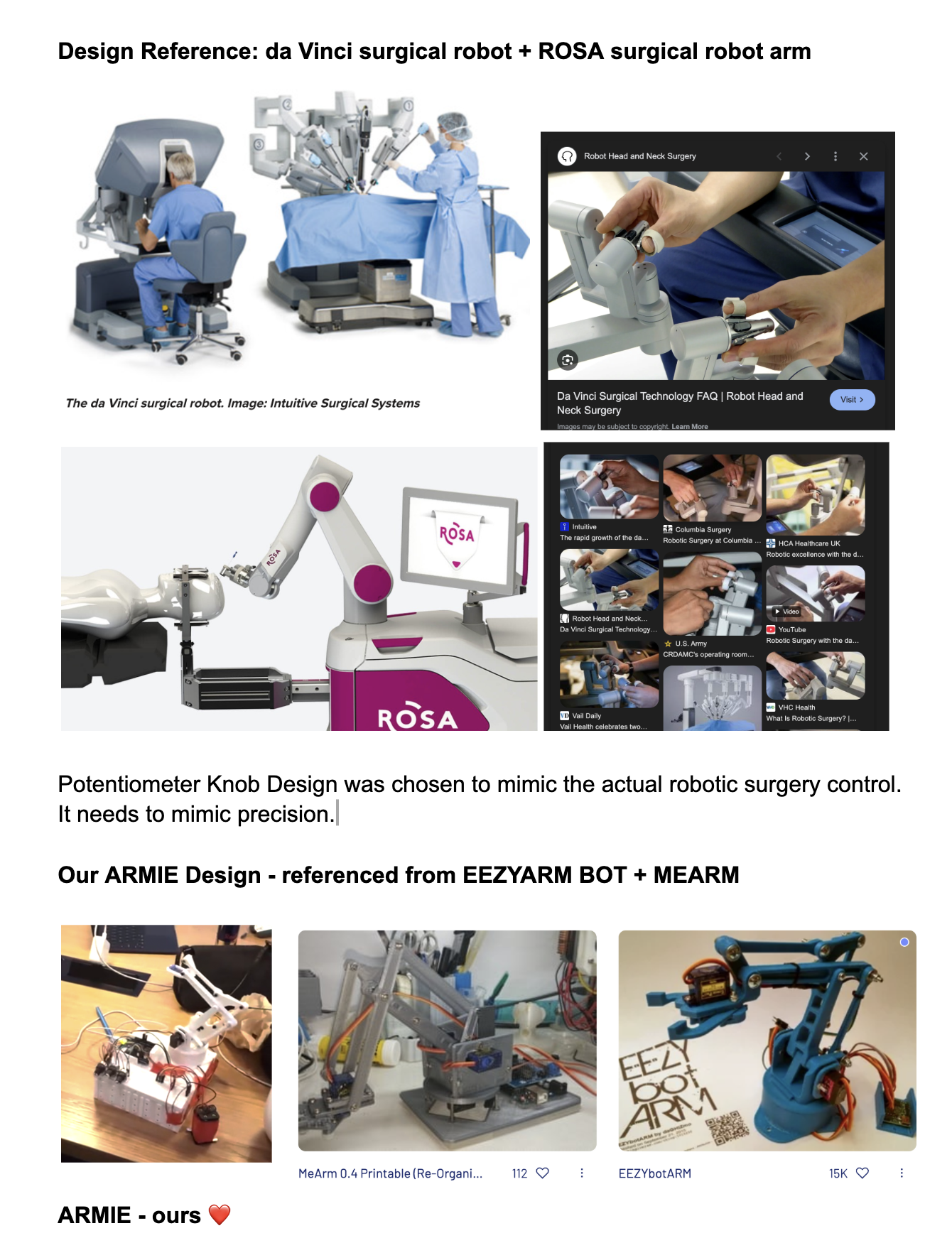

The real surgical systems we referenced are the da Vinci surgical arm and ROSA ONE. Doctors are trained using knob-like controllers.

FYI: How much does a human head cost?

A complete human head/brain for surgical practice may cost between $500 and $3,000, with prices varying by location and preparation method.

Cadaver-based dissection remains a cornerstone of anatomical education and is essential for understanding human anatomy. At the same time, medical schools are increasingly adopting complementary training modalities to address cost, access, and scalability constraints.

Key drivers:

- High cost — Longitudinal analyses in Anatomical Sciences Education show that cadaver dissection is the most expensive teaching modality per student per year when compared with simulation, virtual dissection tables, and computer-assisted instruction.

- Limited supply — Thengal et al. report that 80% of medical students would not choose body donation, while 41% of Western medical schools rely primarily or exclusively on voluntary donor programs, raising sustainability concerns.

- Rising demand — Medical school admissions have increased by ~30% over the past decade, with projections suggesting intake could double by 2031, intensifying pressure on anatomical training infrastructure.

From an educator and SME perspective, these pressures indicate the need for scalable, repeatable pre-clinical training layers that prepare students before scarce, high-value hands-on experiences.

Simulation has long complemented real-world training in safety-critical fields like aviation. Surgical education is beginning a similar shift.

🎥 Core Capabilities

Performing Surgery in Mixed Reality

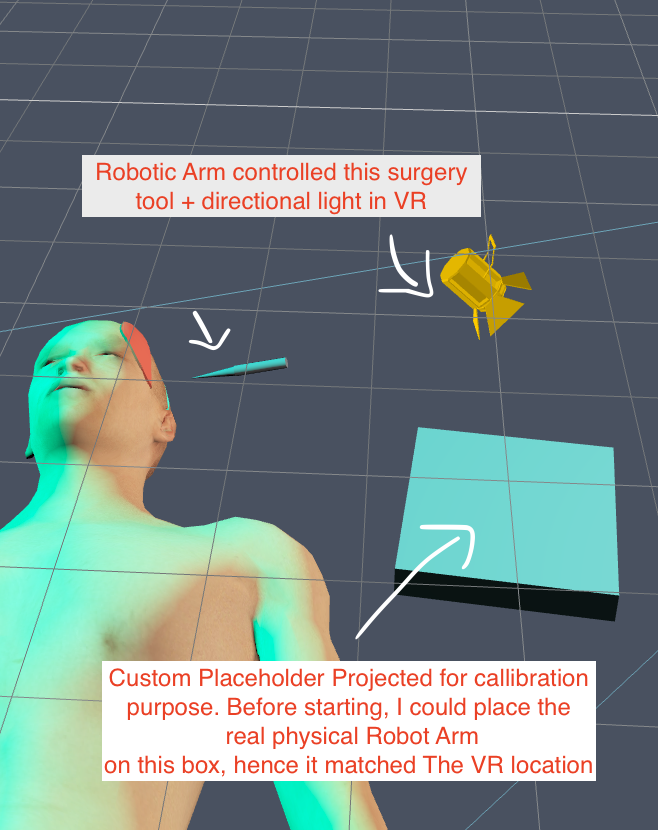

Physical robotic arm controlled via Arduino Uno Q (Qualcomm), synchronized with a virtual brain tumor in Snap Spectacles.

Mapping real-world 3D positions into VR was challenging. I also added a directional light to mimic the lights on a brain surgery machine. Turning the knob moves the arm.
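The knob-to-arm behavior can be pictured as a linear mapping from a potentiometer reading to a servo angle. The following is a minimal Python sketch, not the project's firmware: the 10-bit reading range (0 to 1023) and the 0 to 180 degree servo travel are assumptions, and `knob_to_angle` is a hypothetical helper name.

```python
def knob_to_angle(raw, raw_max=1023, angle_min=0.0, angle_max=180.0):
    """Linearly map a raw potentiometer reading to a servo angle in degrees.

    raw_max=1023 assumes a 10-bit ADC and the 0-180 range assumes a
    standard hobby servo; both are illustrative assumptions.
    """
    # Clamp noisy or out-of-range readings before scaling.
    raw = max(0, min(raw, raw_max))
    return angle_min + (angle_max - angle_min) * raw / raw_max


if __name__ == "__main__":
    for reading in (0, 256, 512, 1023):
        print(reading, round(knob_to_angle(reading), 1))
```

Clamping first keeps a noisy reading from driving the servo past its mechanical limits.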

Learning Mode - Step-by-Step Guidance

Students receive expert-derived procedural steps overlaid directly in AR.

Multi-BLE Connection (Live Medical Signals)

Supports multiple simultaneous BLE devices, demonstrated with real-time heart-rate streaming representing patient vitals and future biomedical sensors.

For demo purposes, I connected to my Whoop, which advertises my heart-rate data in real time. Interestingly, I was able to connect to any Whoop band (I found out this is a security vulnerability on Whoop's part!).

Teleoperation & Imitation Learning

Snap Spectacles write BLE commands back to Arduino, enabling:

- Teleoperation, expert demonstrations, motion logging, and imitation learning (expert → student)

Expert movements are stored as ground-truth trajectories.
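As a rough illustration of motion logging, the sketch below records timestamped joint angles into a trajectory object. It is an assumption-laden Python sketch: the `Sample` and `Trajectory` names, the three-angle layout (one per servo), and the units are hypothetical and not taken from the project code.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Sample:
    t: float                             # seconds since recording started
    angles: Tuple[float, float, float]   # one angle per servo, in degrees (assumed layout)


@dataclass
class Trajectory:
    label: str                           # e.g. "expert" or "student"
    samples: List[Sample] = field(default_factory=list)

    def record(self, t: float, angles) -> None:
        """Append one sample, rejecting timestamps that go backwards."""
        if self.samples and t < self.samples[-1].t:
            raise ValueError("timestamps must be non-decreasing")
        self.samples.append(Sample(t, tuple(angles)))

    def duration(self) -> float:
        """Elapsed time between the first and last sample."""
        if not self.samples:
            return 0.0
        return self.samples[-1].t - self.samples[0].t


if __name__ == "__main__":
    expert = Trajectory("expert")
    expert.record(0.0, (90, 45, 10))
    expert.record(0.5, (92, 47, 12))
    print(expert.label, len(expert.samples), expert.duration())
```

A recorded expert run in this shape can later serve as the reference that a student run is compared against.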

The How?? Secret Sauce?!

I/O on Spectacles.

We used the open-source BLE Playground project from the Spectacles demos for the BLE connection. It lets us scan for Bluetooth signals and make multiple BLE connections.

By default it auto-connects to my surgicalroboticarm Bluetooth peripheral, since I set the expected UUID and service, and to my Whoop to demonstrate reading a heart-rate/medical signal.

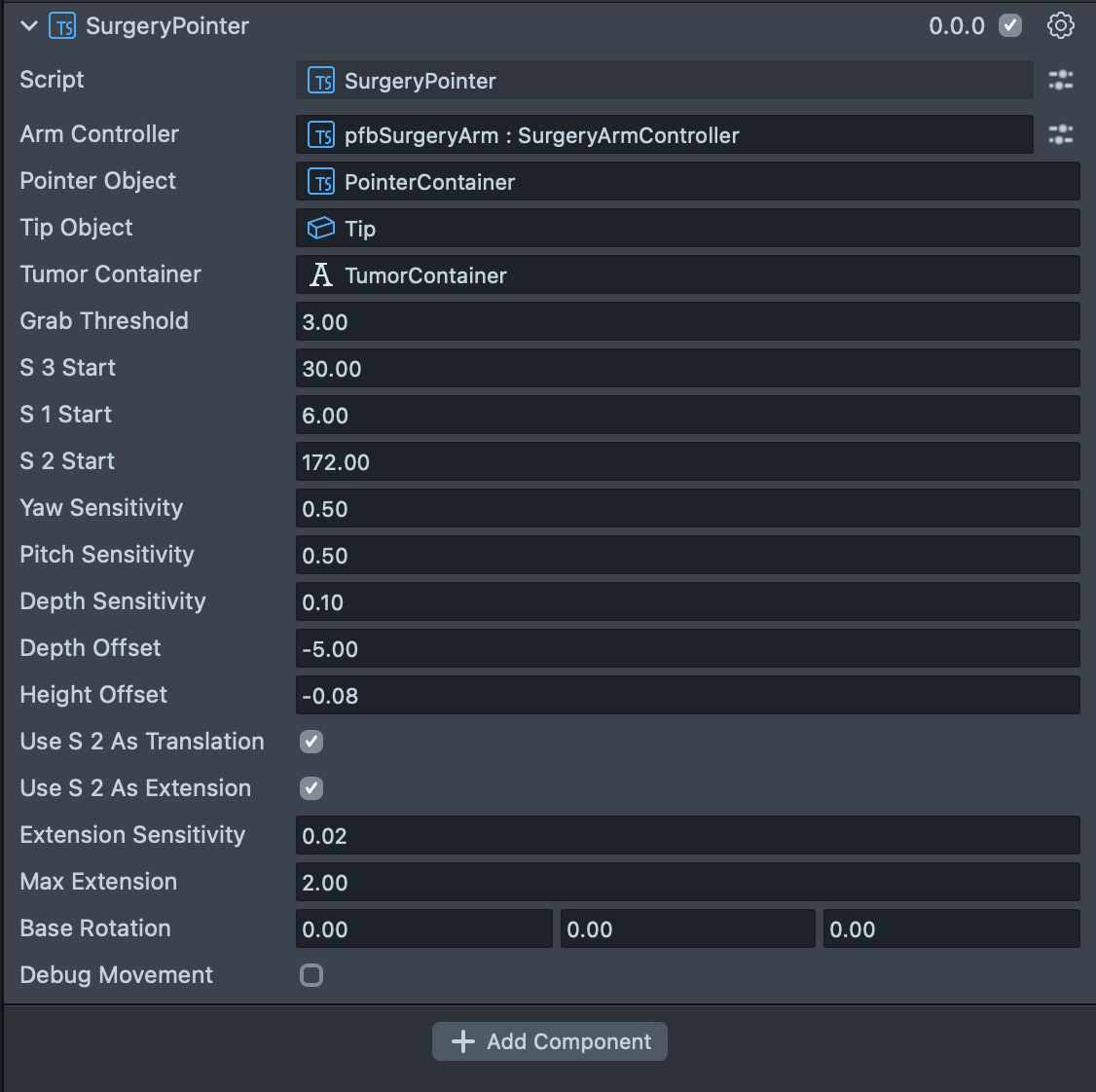

I also built a custom TypeScript component that adds an offset on multiple axes, since the real-world location can affect the rotation or direction of the robotic arm, depending on the size of the physical arm.
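The per-axis offset idea can be sketched as adding a fixed correction to each rotation axis and wrapping the result. This is written in Python for brevity rather than the TypeScript used on Spectacles, and `AxisOffset` and `apply_offset` are hypothetical names, not the component's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AxisOffset:
    """Calibration offset in degrees for each rotation axis (hypothetical type)."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def _wrap(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def apply_offset(rotation_deg, offset: AxisOffset):
    """Add the per-axis offset to an (x, y, z) Euler rotation in degrees."""
    rx, ry, rz = rotation_deg
    return (_wrap(rx + offset.x), _wrap(ry + offset.y), _wrap(rz + offset.z))


if __name__ == "__main__":
    # Example: the physical arm sits rotated 20 degrees about the y axis.
    print(apply_offset((10, 170, -30), AxisOffset(y=20)))
```

Keeping the offset as a separate value means the same virtual arm logic can be reused when the physical arm is moved or resized; only the offset changes.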

How do we CALIBRATE real-world position to VR?

Honestly, it took a lot of testing, but what inspired me was projection mapping. I project a 2D plane at the real size of the robotic arm's base, and set a default starting position for the arm's hand on the 3 servo motors.

That way the robotic arm can match the VR tip. I also added a real directional light to mimic the real tools.

I/O on Arduino Uno Q

In the Arduino .ino sketch, we had to create multiple services and characteristics:

- One for I/O preference: taking input from BLE write or the potentiometer

- Another to advertise and stream heart-rate data
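For the heart-rate stream, one plausible payload layout is the standard Bluetooth Heart Rate Measurement format, where the first byte is a flags field and bit 0 selects an 8-bit or 16-bit little-endian value. The Python sketch below assumes that layout; the project's actual characteristic format may differ, and both function names are hypothetical.

```python
import struct


def encode_heart_rate(bpm: int) -> bytes:
    """Pack a heart rate into a flags byte plus an 8-bit or 16-bit value.

    Assumes the standard Heart Rate Measurement layout (flags bit 0 = 0 for
    uint8, 1 for uint16 little-endian); this is an assumption about the demo.
    """
    if not 0 <= bpm <= 0xFFFF:
        raise ValueError("bpm out of range")
    if bpm <= 0xFF:
        return bytes([0x00, bpm])
    return struct.pack("<BH", 0x01, bpm)


def decode_heart_rate(payload: bytes) -> int:
    """Read the heart rate back out of a payload produced as above."""
    if len(payload) < 2:
        raise ValueError("payload too short")
    flags = payload[0]
    if flags & 0x01:
        if len(payload) < 3:
            raise ValueError("16-bit value truncated")
        return struct.unpack_from("<H", payload, 1)[0]
    return payload[1]


if __name__ == "__main__":
    packet = encode_heart_rate(72)
    print(packet.hex(), decode_heart_rate(packet))
```

Using a widely recognized layout would let generic BLE tools read the value without custom decoding, which is one reason to prefer it over an ad hoc format.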

See script here: https://github.com/liviaellen/ble-mithack/tree/master/Arduino/TumourControl

I set up a script to scan all Bluetooth peripheral devices and decode the data packets coming from them (hex to decimal to alphabet). This simple webpage is built with JavaScript + HTML, so I could check that the robotic arm + Arduino acts as a Bluetooth peripheral before plugging it into Spectacles. https://github.com/liviaellen/ble-mithack/blob/master/web-test/index.html
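The hex to decimal to alphabet decoding step can be sketched as follows. The original test page is JavaScript; this Python version only illustrates the idea, and `decode_packet` is a hypothetical helper, not a function from the linked repository.

```python
def decode_packet(hex_string: str):
    """Decode a hex dump into (decimal byte values, printable ASCII text)."""
    # Accept common dump styles such as "41 52 4d" or "41:52:4d".
    cleaned = hex_string.replace(" ", "").replace(":", "")
    raw = bytes.fromhex(cleaned)
    decimals = list(raw)
    # Show non-printable bytes as "." so binary payloads stay readable.
    text = "".join(chr(b) if 32 <= b < 127 else "." for b in raw)
    return decimals, text


if __name__ == "__main__":
    print(decode_packet("41 52 4d 49 45"))
```

Printing both the decimal values and the text makes it easy to tell whether a characteristic carries numeric data (such as an angle) or a human-readable string.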

Custom Physical Robot Arm

We 3D-printed this custom arm and built it IN A DAY. Thanks to Jonathan Hardware Hack.

Based on EEZYbotARM, heavily modified for this surgical demo purpose:

- Custom 3D-printed

- Rebalanced for precision and micro-movements

- Modified to fit the hardware parts available at the MIT Reality Hack hardware buffet.

🧑‍⚕️ Training Modes

Professor Mode

- Expert performs procedure

- All motion captured

- Generates reusable ground-truth datasets

Student Mode

- Learner performs the same task

- Our logic will compare the motion data with the expert data

- Deviations and offsets computed for feedback
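One simple way to compute deviations between a student run and an expert run is a per-sample distance in joint space plus a summary score. The Python sketch below assumes both trajectories are already resampled to the same length; the function names and the choice of RMSE are illustrative assumptions, not the project's actual scoring logic.

```python
import math


def joint_deviations(expert, student):
    """Per-sample Euclidean distance between two equally sampled trajectories.

    Each trajectory is a list of joint-angle tuples in degrees. Equal length
    (same sampling) is an assumption of this sketch.
    """
    if len(expert) != len(student):
        raise ValueError("trajectories must have the same number of samples")
    return [math.dist(e, s) for e, s in zip(expert, student)]


def summarize(deviations):
    """Return the worst-case deviation and the root-mean-square error."""
    if not deviations:
        raise ValueError("no samples to summarize")
    rmse = math.sqrt(sum(d * d for d in deviations) / len(deviations))
    return {"max": max(deviations), "rmse": rmse}


if __name__ == "__main__":
    expert = [(0, 0, 0), (10, 20, 30)]
    student = [(3, 4, 0), (10, 20, 30)]
    devs = joint_deviations(expert, student)
    print(devs, summarize(devs))
```

The maximum highlights the single worst moment in a run, while the RMSE gives an overall score that is easier to track across repeated attempts.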

🧰 Hardware Stack

- Arduino Uno Q (Qualcomm-powered)

- Servo motors + potentiometers (joint sensing)

- Custom 3D-printed robotic arm

- Heart-rate sensor (vitals demo for testing); Snap Spectacles allow the user to connect to multiple devices

- Snap Spectacles (AR visualization + BLE control)

🧠 What We Learned

- How to synchronize hardware and XR in real time

- Low-latency BLE read/write from AR to physical devices

- Running calibration on-device

- Why simulation is more effective with weight, friction, and resistance

🚧 Challenges We Faced

- Mapping real-world coordinates into Snap Spectacles with minimal drift

- Unpacking/decoding BLE packets (hex to decimal to alphabet)

- Training the correct path for the robotic arm on the Qualcomm-powered Arduino Uno Q

- Servo calibration and mechanical stability

- Keeping the Arduino running with lightweight functions while streaming data over BLE and controlling the robot arm

- Synchronizing physical and virtual arms

- Debugging across the embedded Arduino and the Spectacles AR glasses simultaneously

🌍 Vision

ARMIE is a prototype, but the direction is clear:

- scalable surgical training

- imitation learning training data: think ScaleAI for embodied robotic AI in healthcare

Sources & References

Cost Comparison of Anatomy Teaching Modalities. Anatomical Sciences Education, Wiley. https://anatomypubs.onlinelibrary.wiley.com/doi/10.1002/ase.2008

Cadaver Availability, Student Attitudes, and Medical Education Demand. Thengal et al., National Library of Medicine (PMC). https://pmc.ncbi.nlm.nih.gov/articles/PMC12492534/

EEZYbotARM: Open-Source Robotic Arm Platform. https://www.instructables.com/EEZYbotARM/

🧑‍🤝‍🧑 Contributors

Built With

- arduino

- python

- spectacles

- typescript
