Shared Document

Video Walkthrough

Inspiration

This tool was not designed for Coda’s AI at Work Challenge. It is a tool that existed long before the challenge was announced and has been delivering AI productivity for many months. It existed before Coda AI was in alpha testing. It originally utilized OpenAI APIs through a custom Pack.

This community article, along with earlier influence from Tesla and SpaceX, is partly responsible for its existence. This version has been streamlined for open submission to the challenge, but it is no less powerful than the one my team and I use.

As Coda users, we are quick to focus on building “the thing”. Promptology is relevant to the AI at Work Challenge because it addresses the need to scale AI productivity across many things, not just to solve one specific problem with AI. The Promptology Workbench is simply the “thing” that helps you build many other “things”. For this reason, it’s essential to think about the extended productivity this tool can produce. Promptology is to AI productivity what compound interest is to Bank of America.

I certainly want to win the challenge, but I’ve already won in a big way. The Codans have ensured that we are all winners.

What it does

Promptology is about one thing - mitigating the counter-productive challenges of AI prompt development.

How we built it

Purely Coda and Coda AI

Challenges we ran into

As early adopters of AI, we have grand visions of escalating our work output. There’s no shortage of media outlets and multi-message Twitter posts that have convinced the masses that AI makes digital work a breeze. Reality check: it doesn’t.

ChatGPT and Coda AI users typically experience poor results because successful prompts are not as easy to create as you first imagine. How hard can it be? It’s just words. The reality is that it is both hard and complex, depending on the AI objective.

Two aspects of prompt development are working against us.

- Prompt Construction - most of us “wing” it when building prompts.

- Prompt Repeatability - most of us are inclined to build AI prompts from scratch every time.

Getting these two dimensions right for any Coda solution takes patience, new knowledge, and a little luck. I assert that ...

The vast productivity benefits of AI are initially offset and possibly entirely overshadowed by the corrosive effects of learning how to construct prompts that work to your benefit.

The very nature of prompt development may have you running in circles in the early days of your AI adventure. You’ve probably experienced this frustration with ChatGPT or Bard. It’s debilitating, like playing a never-ending game of whack-a-mole.

Accomplishments that we're proud of

We use Promptology in all these use cases.

AI for Personal Productivity

One of the challenges with AI is creating prompts that work well and deliver benefits repeatedly and quickly. Having a single prompt warehouse where I can rapidly locate a prompt I know I created earlier is a huge personal benefit. The automated prompt-type classifier in Promptology can identify prompts that are designed for personal use.
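
Promptology itself is built purely in Coda, so the following is only an illustration of the prompt warehouse idea, not how the tool is implemented. It is a minimal Python sketch in which every name (StoredPrompt, PromptWarehouse, the "personal" tag, the example prompt) is hypothetical. Tags are assigned by hand here; the automated classifier is not modeled.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class StoredPrompt:
    """One reusable prompt: a template plus tags for finding it later."""
    name: str
    template: str  # uses $placeholders that are filled in at run time
    tags: set[str] = field(default_factory=set)

    def render(self, **values: str) -> str:
        # substitute() raises KeyError if a placeholder is left unfilled,
        # which catches half-finished prompts before they reach the model.
        return Template(self.template).substitute(**values)


class PromptWarehouse:
    """Hypothetical in-memory store; in Promptology this role is played by a Coda doc."""

    def __init__(self) -> None:
        self._prompts: dict[str, StoredPrompt] = {}

    def save(self, prompt: StoredPrompt) -> None:
        self._prompts[prompt.name] = prompt

    def find(self, tag: str) -> list[StoredPrompt]:
        # Return every stored prompt carrying the tag, sorted by name.
        matches = (p for p in self._prompts.values() if tag in p.tags)
        return sorted(matches, key=lambda p: p.name)

    def restore(self, name: str) -> StoredPrompt:
        return self._prompts[name]


warehouse = PromptWarehouse()
warehouse.save(StoredPrompt(
    name="weekly-summary",
    template="Summarize my notes on $topic in $count bullet points.",
    tags={"personal"},
))

# Restore a saved prompt and adjust only the parts that change.
prompt_text = warehouse.restore("weekly-summary").render(
    topic="prompt design", count="3"
)
```

The point of the sketch is that a saved prompt is restored and lightly adjusted rather than rebuilt from scratch each time.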

AI for Research & Analysis

At my company, we perform dozens of R&D assessments to understand new software, manufacturing, and transportation technologies. Promptology has streamlined this work, and while no AI is perfect, the consistency achieved continues to create compelling and helpful analyses that we can quickly expand on with inline Coda AI features. This prompt example demonstrates how we accelerate work and save about a half hour every time we restore this prompt, augment it slightly, and run it.

AI for Product Development

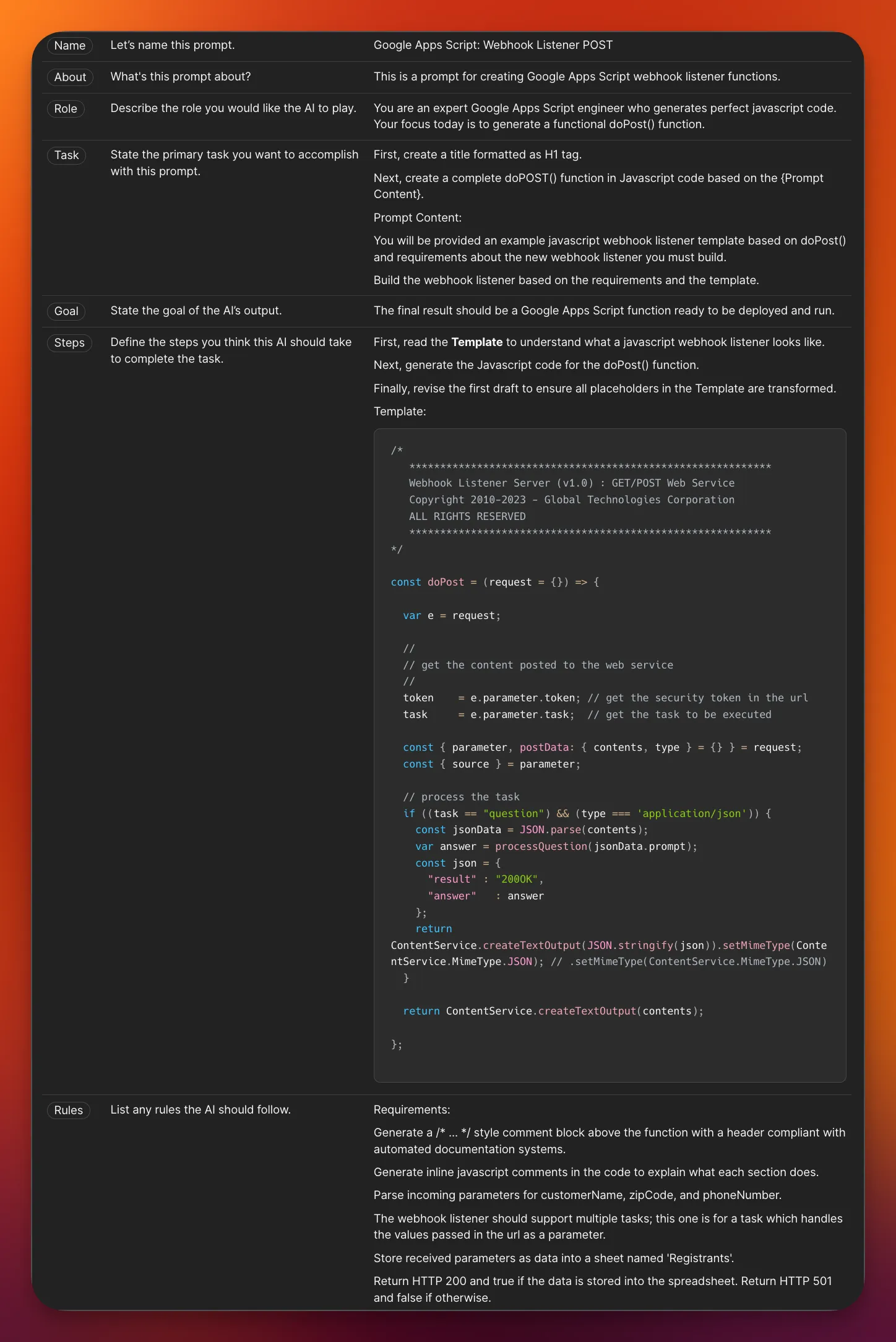

Often we need to create software code that may be new to the team. Promptology provides us with a success pattern to manage a growing collection of software engineering templates that can be called upon without any setup or re-crafting of prompts. The repeatable nature of Promptology invites developers to be lazy and benefit from a process where much of the tedious effort is eliminated.

AI for Marketing & Selling

We also use Promptology as the driver of our social media monitoring solution (also built in Coda). It was designed with prompts pulled from Promptology through Coda’s Cross-Doc Pack. Previously, we used the Meltwater platform ($4500/yr), which has now been replaced with Coda AI features driven by prompts from Promptology.

AI for Business Operations

Almost every aspect of doing business will eventually be touched by AI. This means prompting skills will need to expand greatly, and Promptology plus Coda AI is precisely the tool that can impact everyday work in a positive way. At Stream It, we use Promptology to build AI features for sales auditing, CRM inbound contacts, and outreach, as well as testing protocols for our highway analytics systems.

What we learned

LLMs speak before they think. The challenge is to get them to think before they speak. Prompt development systems and AI workflows make this possible.

What's next for Promptology

Scale. Finding new ways to apply this approach to support an entire enterprise.

Built With

- ai

- coda

Example Hyper-Prompt
