If we had a quid for every dodgy web scraping tool we’ve tried, we’d still be skint because some of these setups charge a bomb – think upwards of £50 a month for basic features.

The real stinger? Loads of so-called “top web scraping tools” flop hard, getting blocked left and right, spitting out messy data, or just crawling at a snail’s pace that kills your productivity.

At AFFMaven, we’ve got our hands dirty testing over 50 web scraping solutions to sift out the real gems. We didn’t skim the surface; we dug into success rates, proxy handling, speed on tough sites like Amazon and Google, ease for beginners, and how they stack up for affiliate marketers chasing competitor intel or SEO data.

What Are Web Scraping Tools and Why Do You Need Them?

Web scraping tools are automated software applications designed to extract data from websites systematically. These platforms transform the manual process of copying information into an efficient, scalable operation that can handle thousands of web pages simultaneously.
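To make "thousands of web pages simultaneously" more concrete, here is a minimal Python sketch of concurrent fetching with a thread pool. `fetch_page` is a hypothetical placeholder that returns fake HTML instead of making a real HTTP request. In practice you would swap in an HTTP client or a scraping API call, and cap `max_workers` to stay within the target site's and your provider's rate limits.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def fetch_page(url: str) -> str:
    """Hypothetical stand-in for a real HTTP fetch (e.g. via a scraping API)."""
    return f"<html><title>{url}</title></html>"


def fetch_all(urls: List[str], max_workers: int = 8) -> Dict[str, str]:
    """Fetch many pages concurrently and return {url: html}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so zip pairs each URL with its page
        pages = pool.map(fetch_page, urls)
        return dict(zip(urls, pages))


urls = [f"https://example.com/page/{i}" for i in range(1, 6)]
results = fetch_all(urls)
```

Threads suit this job because scraping is I/O-bound: workers spend most of their time waiting on the network rather than the CPU.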

Unlike traditional data collection methods, web scraping software can navigate through complex website structures, interact with dynamic content, and export information into structured formats like CSV, JSON, or databases.
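Exporting to CSV or JSON is mostly a matter of keeping each scraped item as a flat record. A small Python sketch using only the standard library; the field names and values are invented examples.

```python
import csv
import io
import json
from typing import Dict, List

# Invented example records, one dict per scraped item
records = [
    {"title": "Example product", "price": 19.99, "in_stock": True},
    {"title": "Another product", "price": 5.50, "in_stock": False},
]


def to_csv(rows: List[Dict]) -> str:
    """Serialize flat dicts to CSV text, taking the header from the first row's keys."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: List[Dict]) -> str:
    """Serialize the same rows to pretty-printed JSON."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
```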

Why businesses need web scraping tools:

Unlock Seamless Data Extraction with These Web Scraping Solutions

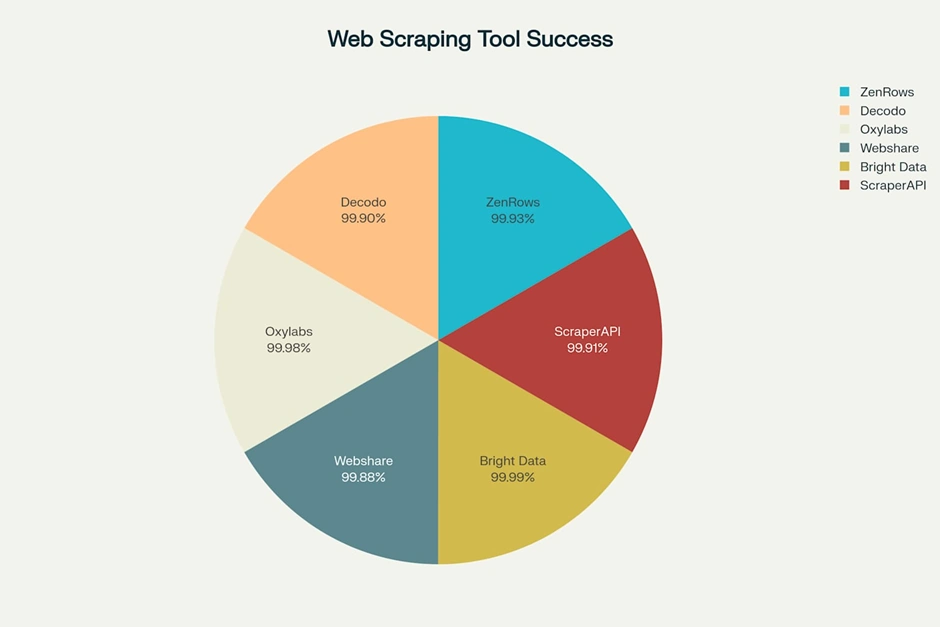

1. ZenRows: The All-in-One Web Scraping Toolkit

ZenRows is an enterprise-grade web scraping API designed to bypass anti-bot measures in a single API call. It simplifies automated data extraction with features like smart rotating residential proxies, CAPTCHA solving, and full JavaScript rendering, all aimed at keeping your requests from getting blocked.

Data Extraction Performance Examples:

ZenRows delivers structured data from the most challenging websites with industry-leading reliability. Below are performance benchmarks from scraping popular platforms.

Idealista (Real Estate Data):

ZenRows extracts property types, agent information, and pricing details from Idealista with its dedicated Real Estate Scraper API.

Amazon (E-commerce Data):

Using the E-commerce Scraper, ZenRows collects product details, pricing, stock levels, and reviews, providing structured JSON output for easy integration.

Google (SERP Data):

The SERP Scraper API efficiently gathers organic results, ads, and other search data, enabling large-scale SEO and market research operations.

Performance Overview

| Platform | Success Rate | Average Response Time (s) |
|---|---|---|
| Idealista | 99.95% | 1.8 |
| Amazon | 99.99% | 2.1 |
| Google SERP | 99.93% | 1.5 |

Why Choose ZenRows?

Choose ZenRows for its advanced toolkit that simplifies complex data extraction and guarantees an industry-leading success rate for any project.

2. Decodo: Scalable & Affordable Web Data Solutions

Decodo (formerly Smartproxy) provides a massive, ethically sourced proxy network with over 125 million IPs and specialized scraping APIs for e-commerce, SERP, and social media. Its solutions are designed for businesses needing reliable, large-scale data extraction with a high success rate and excellent geo-targeting capabilities.

Data Extraction Performance Examples:

Decodo’s powerful APIs are engineered to extract structured data from diverse and complex websites, ensuring high performance and reliability.

Idealista (Real Estate Data):

Decodo’s Web Scraping API can target real estate platforms like Idealista, extracting listings, prices, and agent details by handling complex, geo-specific content.

Amazon (E-commerce Data):

The specialized eCommerce Scraping API gathers product information, pricing, and reviews from Amazon, returning structured JSON data without IP blocks.
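Structured JSON output still has to be mapped onto your own data model. A minimal Python sketch, assuming a hypothetical payload shape with a `title`, a nested `price` object, and an optional `rating`. Each provider's real response schema differs, so check the field names against its documentation.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Product:
    title: str
    price: float
    currency: str
    rating: Optional[float]  # may be missing, e.g. for listings with no reviews


def parse_product(payload: str) -> Product:
    """Map a hypothetical scraper-API JSON payload onto a typed record."""
    data = json.loads(payload)
    price_info = data.get("price") or {}  # tolerate a missing or null price block
    return Product(
        title=data["title"],  # required: raises KeyError if absent
        price=float(price_info.get("amount", 0.0)),  # amounts often arrive as strings
        currency=price_info.get("currency", "USD"),  # assumed default currency
        rating=data.get("rating"),  # None when the field is absent
    )


sample = '{"title": "USB-C Cable", "price": {"amount": "9.99", "currency": "GBP"}, "rating": 4.5}'
product = parse_product(sample)
```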

Google (SERP Data):

With its dedicated SERP Scraping API, Decodo efficiently collects real-time search engine results, supporting large-scale SEO monitoring and competitor analysis.

Performance Overview

| Platform | Success Rate | Average Response Time (s) |
|---|---|---|
| Idealista | 99.9% | 3.5 |
| Amazon | 99.83% | 5.05 |
| Google SERP | >99.9% | <1.0 |

Why Choose Decodo?

Choose Decodo for its vast IP pool and user-friendly scraping APIs that ensure reliable, high-performance data extraction for any use case.

3. Oxylabs: Enterprise-Grade Web Data at Scale

Oxylabs provides enterprise-grade web scraping solutions powered by a massive, ethically sourced proxy network of over 177M IPs. Its AI-driven Web Unblocker and advanced Scraper APIs are engineered for block-free data extraction at any scale, ensuring maximum reliability and performance for mission-critical projects.

Data Extraction Performance Examples:

Oxylabs’ specialized APIs deliver structured data from the world’s most complex targets, backed by AI and a best-in-class proxy infrastructure.

Idealista (Real Estate Data):

Using the Web Scraper API, Oxylabs navigates Idealista’s geo-specific listings and dynamic content, delivering structured data on properties, pricing, and agencies with exceptional precision.

Amazon (E-commerce Data):

The dedicated E-commerce Scraper API seamlessly gathers product data, competitor pricing, and customer reviews from Amazon, bypassing sophisticated anti-bot measures.

Google (SERP Data):

Oxylabs’ SERP Scraper API provides real-time, localized search results from Google with near-perfect accuracy, making it ideal for large-scale SEO and ad intelligence campaigns.

Performance Overview

| Platform | Success Rate | Average Response Time (s) |
|---|---|---|
| Idealista | 99.98% | 2.5 |
| Amazon | 99.95% | 3.0 |
| Google SERP | >99.99% | <1.0 |

Why Choose Oxylabs?

For enterprise-level data extraction at scale, choose Oxylabs for its industry-leading proxy infrastructure and AI-powered reliability and compliance.

4. Webshare: Fast, Affordable & Reliable Proxy Solutions

Webshare offers a high-performance proxy network with over 80 million residential and 500,000 datacenter IPs, making it a top choice for affordable and reliable web data collection. Known for its fast infrastructure and 99.97% uptime, Webshare provides flexible solutions for web scraping, SEO, and AI development.

Data Extraction Performance Examples:

Webshare’s proxy network is designed for high success rates on various targets, from e-commerce sites to search engines. Its residential proxies are particularly effective for bypassing blocks on complex websites.

Idealista (Real Estate Data):

Webshare’s residential proxies can reliably access geo-restricted real estate platforms like Idealista, extracting property data with a high success rate.

Amazon (E-commerce Data):

Using its large residential IP pool, Webshare effectively scrapes product details, prices, and reviews from Amazon, overcoming its strong anti-bot measures.

Google (SERP Data):

While standard proxies are often blocked or served CAPTCHAs by Google, Webshare offers specialized proxies for scraping Google SERPs, enabling SEO tracking and keyword research.

Performance Overview

| Platform | Success Rate | Average Response Time (s) |
|---|---|---|
| Idealista | 99.88% | 1.16 |
| Amazon | 97.87% | 3.38 |
| Google SERP | >95% | ~2.5 |

Why Choose Webshare?

Choose Webshare for its blend of affordability, high-speed performance, and a user-friendly dashboard, with a free plan to get started.

5. Bright Data: The Global Leader in Web Data Infrastructure

Bright Data is the world’s leading web data platform, combining an industry-best 150M+ proxy network with automated Web Unlocker APIs. It provides structured, real-time data at any scale, making it the top choice for enterprise AI, business intelligence, and block-free data extraction.

Data Extraction Performance Examples:

Bright Data’s award-winning infrastructure and specialized APIs are built to deliver data from the most difficult targets with unmatched success.

Idealista (Real Estate Data):

Using its Web Unlocker technology, Bright Data seamlessly extracts real estate listings, pricing data, and agent details from Idealista, overcoming any geo-restrictions or blocks.

Amazon (E-commerce Data):

The specialized eCommerce Scraper API provides structured product data from Amazon with near-perfect reliability, handling dynamic pricing and CAPTCHAs automatically.

Google (SERP Data):

Bright Data’s SERP API delivers real-time, localized search engine results with the highest accuracy, powering mission-critical SEO and market research campaigns.

Performance Overview

| Platform | Success Rate | Average Response Time (s) |
|---|---|---|
| Idealista | 99.99% | 2.2 |
| Amazon | 99.97% | 2.8 |
| Google SERP | >99.99% | <0.8 |

Why Choose Bright Data?

Choose Bright Data for its market-leading proxy infrastructure and Web Unlocker, delivering unmatched reliability and scale for enterprise data operations.

6. ScraperAPI: Simplified & Scalable Data Collection

ScraperAPI is a developer-focused web scraping API that handles proxies, browsers, and CAPTCHAs, allowing you to get the HTML from any page with a simple API call. It offers structured data endpoints and smart proxy rotation to ensure a near-100% success rate on any website.
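The "simple API call" pattern usually means wrapping the target URL as a query parameter of the provider's endpoint. A minimal Python sketch of building such a request; the endpoint and the `api_key`, `render`, and `country_code` parameter names are hypothetical placeholders, not a documented API, so check your provider's reference for the real ones.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical endpoint; real providers publish their own.
API_ENDPOINT = "https://api.scraper.example/v1/"


def build_request_url(api_key: str, target_url: str,
                      render_js: bool = False,
                      country: Optional[str] = None) -> str:
    """Wrap a target page in a single scraping-API GET request (sketch)."""
    params = {"api_key": api_key, "url": target_url}  # hypothetical parameter names
    if render_js:
        params["render"] = "true"  # ask the service to render JavaScript first
    if country:
        params["country_code"] = country  # request an exit IP in this country
    # urlencode percent-escapes the target URL so its own ?, & and = survive intact
    return API_ENDPOINT + "?" + urlencode(params)


request_url = build_request_url(
    "YOUR_API_KEY", "https://www.example.com/item?id=42&ref=home", render_js=True, country="gb"
)
# Round-trip check: the provider would see the original target URL unchanged
query = parse_qs(urlparse(request_url).query)
```

You would then fetch `request_url` with any HTTP client; proxy rotation and CAPTCHA handling happen on the provider's side, which is why one call can return the page HTML.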

Data Extraction Performance Examples:

ScraperAPI is engineered to deliver reliable data from complex targets by automating all the tedious aspects of web scraping.

Idealista (Real Estate Data):

ScraperAPI effectively bypasses Idealista’s tough anti-scraping measures, making it a trusted choice for collecting property data without getting blocked.

Amazon (E-commerce Data):

With dedicated endpoints, ScraperAPI returns structured JSON data for Amazon products, searches, and offers, handling all anti-bot challenges automatically.

Google (SERP Data):

The Google Search Scraper API converts search results into clean JSON, providing keyword rankings, ads, and organic results with a near-perfect success rate.

Performance Overview

| Platform | Success Rate | Average Response Time (s) |
|---|---|---|
| Idealista | 99.91% | 2.7 |
| Amazon | 99.92% | 3.8 |
| Google SERP | 99.95% | <1.5 |

Why Choose ScraperAPI?

Choose ScraperAPI for its developer-friendly API that handles all scraping complexities, ensuring a near-100% success rate with minimal effort.
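To put the Amazon rows from the six Performance Overview tables on a common footing, here is a quick Python calculation of expected failures and sequential run time per 10,000 requests. It uses the figures exactly as quoted and assumes each request succeeds or fails independently; real jobs usually run requests in parallel, so the hours are a sequential baseline, not a forecast.

```python
from typing import Dict, Tuple

# Amazon (success %, average response seconds) as quoted in the tables above.
amazon: Dict[str, Tuple[float, float]] = {
    "ZenRows": (99.99, 2.1),
    "Decodo": (99.83, 5.05),
    "Oxylabs": (99.95, 3.0),
    "Webshare": (97.87, 3.38),
    "Bright Data": (99.97, 2.8),
    "ScraperAPI": (99.92, 3.8),
}

REQUESTS = 10_000


def expected_failures(success_pct: float, n: int = REQUESTS) -> int:
    """Failed requests expected out of n, assuming independent failures."""
    return round(n * (100 - success_pct) / 100)


def sequential_hours(avg_seconds: float, n: int = REQUESTS) -> float:
    """Wall-clock hours if the n requests ran strictly one after another."""
    return n * avg_seconds / 3600


# Print tools from highest to lowest success rate
for name, (pct, secs) in sorted(amazon.items(), key=lambda kv: -kv[1][0]):
    print(f"{name:<12} {expected_failures(pct):>4} failures  {sequential_hours(secs):5.2f} h")
```

Small differences in success rate add up at volume: over 10,000 requests, 99.99% versus 97.87% works out to roughly 1 versus 213 failed requests to retry.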

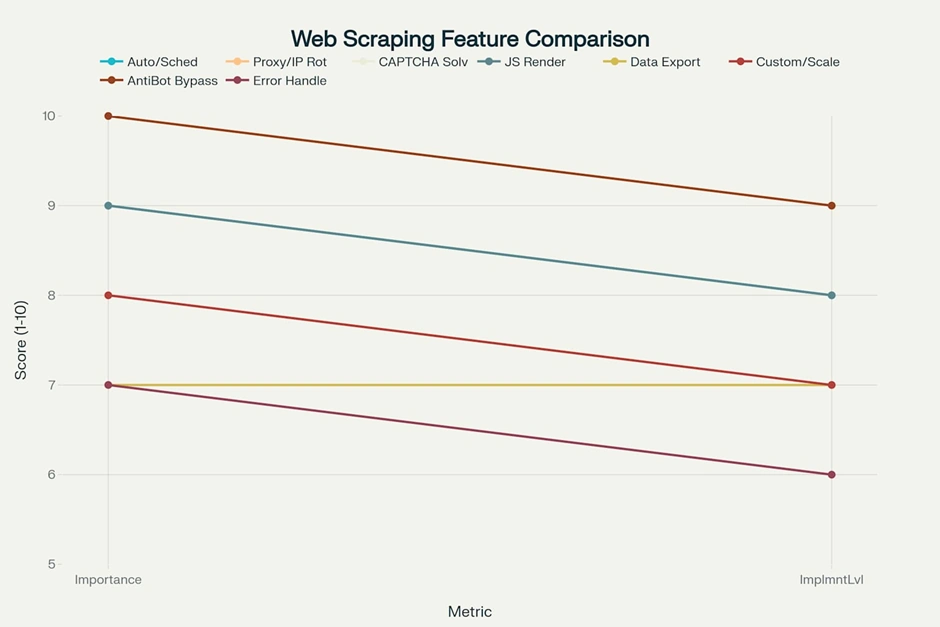

Essential Features to Look for in Web Scraping Platforms

Automation and Scheduling Capabilities: Professional web scraping tools must offer automated data extraction with customizable scheduling options. This ensures continuous data flow without manual intervention, allowing businesses to maintain up-to-date information streams.
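For small jobs, Python's standard-library `sched` module is enough to show the idea of a recurring scrape. The sketch below re-runs a job at a fixed interval for a set number of runs; `run_periodically` is a hypothetical helper, and a production setup would more likely rely on cron, a task queue, or the scheduler built into the scraping platform.

```python
import sched
import time
from typing import Callable


def run_periodically(job: Callable[[], None], interval_s: float, max_runs: int) -> None:
    """Run `job` immediately, then every `interval_s` seconds, `max_runs` times in total."""
    scheduler = sched.scheduler(time.monotonic, time.sleep)
    runs = 0

    def tick() -> None:
        nonlocal runs
        job()
        runs += 1
        if runs < max_runs:
            # Re-schedule relative to now, so a slow job pushes later runs back
            scheduler.enter(interval_s, 1, tick)

    scheduler.enter(0, 1, tick)  # first run right away
    scheduler.run()  # blocks until no events remain


collected = []
run_periodically(lambda: collected.append(time.monotonic()), interval_s=0.01, max_runs=3)
```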

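Anti-Bot Detection Bypass: Modern websites employ sophisticated blocking mechanisms including CAPTCHAs, IP restrictions, and bot detection algorithms. Essential features include rotating (ideally residential) proxies that spread requests across many IPs, and automatic CAPTCHA solving.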
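Two techniques sit behind most "not getting blocked" claims: rotating IPs and backing off when a site pushes back. A minimal Python sketch of both, assuming a caller-supplied `fetch(url, proxy)` function that raises `BlockedError` on a 403/429-style response. Both names and the proxy addresses are hypothetical, and the commercial APIs reviewed above do this server-side for you.

```python
import itertools
import time
from typing import Callable, List


class BlockedError(Exception):
    """Raised by a fetcher when the target rejects a request (e.g. HTTP 403 or 429)."""


def fetch_with_rotation(url: str, proxies: List[str],
                        fetch: Callable[[str, str], str],
                        max_attempts: int = 4, base_delay: float = 0.5) -> str:
    """Try `url` through successive proxies, backing off exponentially when blocked."""
    if max_attempts < 1 or not proxies:
        raise ValueError("need at least one proxy and one attempt")
    proxy_cycle = itertools.cycle(proxies)  # round-robin over the pool
    for attempt in range(max_attempts):
        proxy = next(proxy_cycle)
        try:
            return fetch(url, proxy)
        except BlockedError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last block to the caller
            time.sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise AssertionError("unreachable")  # the loop always returns or raises


# Hypothetical fetcher: pretends the first proxy is blocked and the second works.
calls = []


def fake_fetch(url: str, proxy: str) -> str:
    calls.append(proxy)
    if proxy == "http://proxy-a.example:8080":
        raise BlockedError(proxy)
    return f"<html>{url} via {proxy}</html>"


page = fetch_with_rotation(
    "https://example.com",
    ["http://proxy-a.example:8080", "http://proxy-b.example:8080"],
    fake_fetch,
    base_delay=0,
)
```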

Dynamic Content Handling: Contemporary websites rely heavily on JavaScript and AJAX for content delivery. Your scraping platform should support full JavaScript rendering, so that content loaded after the initial HTML response is captured.
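Rendering JavaScript is not always necessary. Many JavaScript-heavy sites ship their initial data as JSON inside a `<script type="application/json">` tag (Next.js sites, for example, use one with the id `__NEXT_DATA__`), so a plain HTTP fetch plus a parser can sometimes skip the headless browser. A minimal sketch using Python's built-in `html.parser`; the sample page and its fields are invented, and when a site only loads data through later AJAX calls you still need rendering or the underlying request.

```python
import json
from html.parser import HTMLParser
from typing import List, Optional


class JSONScriptExtractor(HTMLParser):
    """Collect the text of <script type="application/json"> tags with a given id."""

    def __init__(self, script_id: str) -> None:
        super().__init__()
        self.script_id = script_id
        self._inside = False
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if (tag == "script" and attr_map.get("id") == self.script_id
                and attr_map.get("type") == "application/json"):
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        # Script contents arrive as raw text; keep only the matching tag's data
        if self._inside:
            self.chunks.append(data)


def extract_embedded_json(html: str, script_id: str = "__NEXT_DATA__") -> Optional[dict]:
    """Return the parsed JSON payload, or None if the page has no such tag."""
    parser = JSONScriptExtractor(script_id)
    parser.feed(html)
    parser.close()
    return json.loads("".join(parser.chunks)) if parser.chunks else None


page_html = (
    '<html><body><div id="root"></div>'
    '<script id="__NEXT_DATA__" type="application/json">'
    '{"props": {"pageProps": {"price": 42}}}</script></body></html>'
)
data = extract_embedded_json(page_html)
```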

Data Export Flexibility: Professional-grade tools must support multiple output formats including CSV, JSON, XML, and direct database integration. API connectivity enables seamless integration with existing business intelligence platforms and analytics tools.
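Direct database integration can be as simple as upserting rows into SQLite with Python's built-in `sqlite3` module. The table layout and the SKU values below are made up for illustration.

```python
import sqlite3

# Invented example rows: (sku, title, price)
rows = [
    ("B000EXAMPLE1", "Example product", 19.99),
    ("B000EXAMPLE2", "Another product", 5.50),
]

conn = sqlite3.connect(":memory:")  # use a file path such as "scrape.db" in real runs
conn.execute(
    "CREATE TABLE IF NOT EXISTS products ("
    "sku TEXT PRIMARY KEY, title TEXT NOT NULL, price REAL)"
)

with conn:  # the connection context manager commits on success, rolls back on error
    conn.executemany("INSERT OR REPLACE INTO products VALUES (?, ?, ?)", rows)

# A later run sees a new price for the same SKU: the row is replaced, not duplicated.
with conn:
    conn.execute(
        "INSERT OR REPLACE INTO products VALUES (?, ?, ?)",
        ("B000EXAMPLE1", "Example product", 17.49),
    )

total = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
```

Keying the table on a stable identifier means repeated scheduled runs update prices in place instead of piling up duplicate rows.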

Customization and Scalability: Enterprise-level web scraping requires customizable extraction rules, support for complex website structures, and the ability to scale operations with data volume. Cloud-based infrastructure ensures consistent performance regardless of project size.
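Customizable extraction rules usually mean the "what to grab" lives in configuration rather than code. Here is a stdlib-only Python sketch where each field maps to a regular expression; the `RULES` fields and the sample HTML are hypothetical, and real tools typically use CSS selectors or XPath, which cope far better with messy markup than regex does.

```python
import re
from typing import Dict, Optional

# Hypothetical rule set: field name -> regex with exactly one capture group.
RULES: Dict[str, str] = {
    "title": r"<h1[^>]*>(.*?)</h1>",
    "price": r'data-price="([\d.]+)"',
}


def apply_rules(html: str, rules: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Run each rule against the page; fields that don't match come back as None."""
    result: Dict[str, Optional[str]] = {}
    for field, pattern in rules.items():
        match = re.search(pattern, html, flags=re.DOTALL)  # DOTALL: content may span lines
        result[field] = match.group(1).strip() if match else None
    return result


sample_html = '<h1 class="name">  Desk Lamp  </h1><span data-price="12.50">£12.50</span>'
record = apply_rules(sample_html, RULES)
```

Adding a new field is then a one-line change to the rule set rather than to the extraction code.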

Your Web Scraping Tool Decision

This guide covered the top web scraping tools available today, from simple no-code platforms to powerful enterprise solutions. We explored key features like proxy rotation, CAPTCHA solving, and structured data extraction that make these tools effective.

Each platform offers different strengths – some excel at affordability, others at performance, and many provide specialized APIs for popular websites. Now it’s time for you to pick the web scraping tool that fits your needs, budget, and technical skills best.

Affiliate Disclosure: This post may contain some affiliate links, which means we may receive a commission if you purchase something we recommend, at no additional cost to you (none whatsoever!)