Tracking query progress with Live Query Statistics

October 30, 2015

How frustrating is it to run a query on a database, only for it to seem “stuck”? We’ve all seen that happen, right? But how can you tell what the problem is without letting the query complete (which could take a long time)? Microsoft (or rather the SQL Server team) gave us a new toy to play with that can help in situations like this: “Live Query Statistics”.

Creating resources

To show you how Live Query Statistics works, let’s create a sample table first, and insert 1,000 rows:

    CREATE TABLE LiveQueryStats
    (ID INT IDENTITY(1,1),
    VALUE VARCHAR(10))
    GO

    INSERT INTO LiveQueryStats
    (VALUE)
    VALUES
    ('X')
    GO 1000

Because we want to actually see something happen, let’s multiply the number of rows coming from the single table by using the query below:

SELECT * FROM LiveQueryStats T1 CROSS APPLY LiveQueryStats T2 CROSS APPLY LiveQueryStats T3

The query returns 1 billion rows (1,000 × 1,000 × 1,000 = 1,000,000,000) and will run for a while. This gives you the opportunity to look at the different features without rushing or losing your running query.
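If you want to double-check that number before kicking off the long-running query, a quick sanity check along these lines should do. This is just a sketch of the arithmetic; the column aliases are my own.

```sql
-- Expected size of the three-way cross product: (rows in table)^3.
-- COUNT_BIG returns BIGINT, so POWER also returns BIGINT.
SELECT COUNT_BIG(*)           AS RowsInTable,
       POWER(COUNT_BIG(*), 3) AS ExpectedResultRows
FROM LiveQueryStats;
```

With the 1,000 rows inserted above, this returns 1000 and 1000000000.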

Live execution plan

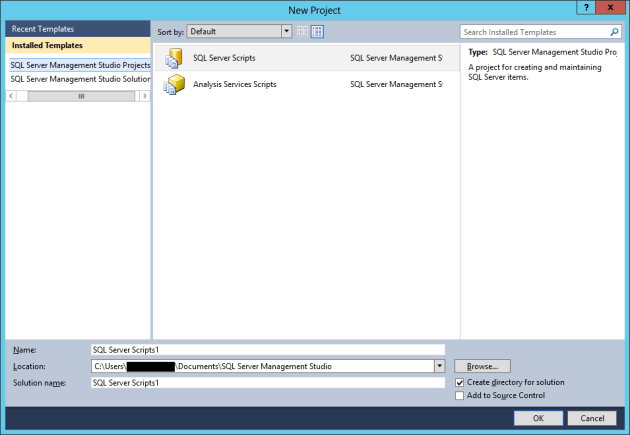

Before running the query above, you need to enable Live Query Statistics, just like you would enable the actual execution plan. This is an extra button added to the SSMS that ships with SQL Server 2016 (and to the separately downloadable version, of course). When that’s enabled, you can execute the query, and SQL Server Management Studio (SSMS) will automatically switch to the live execution plan.

In this execution plan, you’ll see the data flow through the operators, so you can actually see what SQL Server is doing at a specific moment.
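The SSMS button is not the only way to get this kind of live data. As far as I know, the same per-operator counters are exposed through the sys.dm_exec_query_profiles DMV, which is populated for sessions that run with SET STATISTICS PROFILE ON (or SET STATISTICS XML ON). The sketch below assumes that behavior and simply switches profiling on for the session before running the sample query; it is not necessarily what SSMS does under the hood.

```sql
-- Session 1: turn on profiling for this session, then run the long query.
-- Assumption: this makes the session visible in sys.dm_exec_query_profiles.
SET STATISTICS PROFILE ON;
GO

SELECT @@SPID AS MySessionId;  -- note this number; you need it in a second session
GO

SELECT * FROM LiveQueryStats T1 CROSS APPLY LiveQueryStats T2 CROSS APPLY LiveQueryStats T3;
GO

SET STATISTICS PROFILE OFF;
GO
```

While the query runs, a second session can read the DMV for that session id. Keep in mind that this has the same performance cost as the SSMS button, so treat it as a troubleshooting tool.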

Query completion percentage

Another great addition is the overall completion percentage, which you can find at the bottom of the SSMS window. It can help you estimate the time until completion.
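I don’t know exactly how SSMS calculates this percentage, but you can get a rough approximation yourself by comparing the rows processed so far with the optimizer’s estimates in sys.dm_exec_query_profiles. The sketch below assumes the query runs with profiling enabled (Live Query Statistics or SET STATISTICS PROFILE ON), and the session id 53 is a made-up value you need to replace with your own.

```sql
-- Hypothetical session id; replace with the SPID of the running query.
DECLARE @SessionId INT = 53;

-- Rough progress estimate: actual rows so far versus estimated rows,
-- summed over all operators and threads of the plan.
SELECT session_id,
       SUM(row_count)          AS RowsSoFar,
       SUM(estimate_row_count) AS EstimatedRows,
       100.0 * SUM(row_count) / NULLIF(SUM(estimate_row_count), 0) AS ApproxPercentDone
FROM sys.dm_exec_query_profiles
WHERE session_id = @SessionId
GROUP BY session_id;
```

Because this relies on estimates, the number is only as good as the optimizer’s cardinality estimates; with bad estimates it can easily go past 100.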

Live rowcount

The properties window normally shows you the in-depth information of your query after completion (number of threads, memory grant, etc.). With the live execution plan enabled, it shows real-time statistics as well.
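If you prefer a query window over the properties window, the per-operator numbers can also be read from sys.dm_exec_query_profiles. This is a minimal sketch, assuming the query runs with profiling enabled; the session id 53 is a placeholder, and the aliases are my own.

```sql
-- Hypothetical session id; replace with the SPID of the running query.
DECLARE @SessionId INT = 53;

-- One row per plan operator, aggregated over all threads.
SELECT node_id,
       physical_operator_name,
       SUM(row_count)          AS RowsSoFar,
       SUM(estimate_row_count) AS EstimatedRows,
       MAX(elapsed_time_ms)    AS ElapsedMs
FROM sys.dm_exec_query_profiles
WHERE session_id = @SessionId
GROUP BY node_id, physical_operator_name
ORDER BY node_id;
```

Run it a few times in a row and you’ll see the row counts climb, operator by operator.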

Live execution plan from Activity Monitor

From the Activity Monitor you can also open the live execution plan of queries running on your instance. In SQL Server 2016, the Activity Monitor has an additional tab called “Active Expensive Queries”. In this tab you can right-click a running query and click “Show Live Execution Plan”. This opens a new tab in SSMS with the execution plan.

Unfortunately this only works for queries that have the live statistics enabled before execution. I don’t expect this to change in the final product, mainly because of the negative performance impact this feature can have on your queries and instance.
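To see up front which running queries can show a live plan, you could list the active requests and flag the sessions that appear in sys.dm_exec_query_profiles. This is my own sketch, not the query Activity Monitor runs, and it assumes you have VIEW SERVER STATE permission to read these DMVs.

```sql
-- Active requests from other sessions, most CPU-hungry first.
-- HasLiveStats = 1 means the session exposes live per-operator statistics.
SELECT r.session_id,
       r.status,
       r.cpu_time,
       r.total_elapsed_time,
       t.text AS QueryText,
       CASE WHEN EXISTS (SELECT 1
                         FROM sys.dm_exec_query_profiles AS p
                         WHERE p.session_id = r.session_id)
            THEN 1 ELSE 0 END AS HasLiveStats
FROM sys.dm_exec_requests AS r
CROSS APPLY sys.dm_exec_sql_text(r.sql_handle) AS t
WHERE r.session_id <> @@SPID
ORDER BY r.cpu_time DESC;
```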

Drawbacks, Limitations and Bugs

Because this is only a CTP version of SQL Server 2016, we can expect some bugs and limitations, so I’ll be the last one to judge. And I don’t think these limitations are a big drawback of the feature.

One of these bugs is a crashing SSMS: if you open a live execution plan from the Activity Monitor and then close that tab, SSMS crashes every now and then.

Another weird thing: if you exit SSMS with the close button (top right) while the live execution plan is open, the list of recently used SQL Servers (the list you see when connecting Object Explorer, for example) is cleared for some reason.

This behavior is reproducible, so I think it is either a small bug in SSMS or a side effect of upgrading this instance from the first CTP version to the current one. But I’m sure this will be fixed by the SQL Server team in the next releases.

The biggest pitfall (in my opinion) of this feature is also mentioned in the documentation. This feature is “primarily intended for troubleshooting purposes” and “can slow the overall query performance”. And I know, it looks SO COOL to have this on all the queries you’re running, but please be careful with it. Don’t enable this on every running query; only use it to debug issues!

Another limitation of this feature (at least at the moment I’m writing this) is that it can’t be used in combination with columnstore indexes, memory-optimized tables and natively compiled stored procedures. You can read more about this here.

And I shouldn’t even have to mention this, but remember: you can only use Live Execution Plans when you have SHOWPLAN permissions on the database (same permissions you need to view normal execution plans).
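If you’re not sure whether you have that permission, a check like the one below should tell you. HAS_PERMS_BY_NAME is a built-in function; the user name in the commented-out GRANT is purely hypothetical.

```sql
-- Returns 1 if the current login has SHOWPLAN on the current database, 0 if not.
SELECT HAS_PERMS_BY_NAME(DB_NAME(), 'DATABASE', 'SHOWPLAN') AS HasShowplan;

-- A sufficiently privileged user could grant it like this
-- ([SomeUser] is a hypothetical database user):
-- GRANT SHOWPLAN TO [SomeUser];
```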

Alternatives

One of the questions I had when I read about this: are there any alternatives? One of the only things that comes to mind is the “Track My Query” tool, written by Matan Yungman (@MatanYungman). This tool allows you to monitor your query, and it shows you which part of your query is currently running. For more in-depth information, I recommend the SQLBits session where he explains the inner workings of this tool. I’ve seen this session in person, and it was an interesting one.

Conclusion

With all these awesome new features that will be shipped in SQL Server 2016, I think there’s a whole new way of looking at SQL Server as a product. I’ve always liked working with SQL Server, but this version is taking it to the next level.

With all these new features, debugging issues is going to be a bit easier, developing new stuff is going to be faster, and the overall usability is going to skyrocket. I can’t wait to get my hands on the finished product!