Inspiration

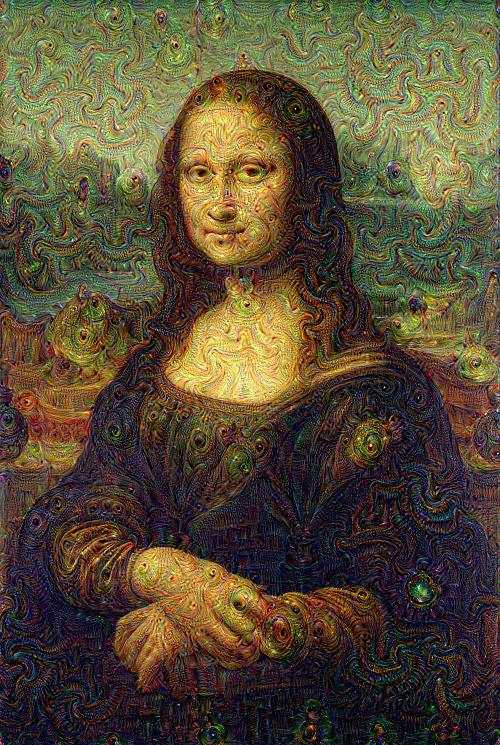

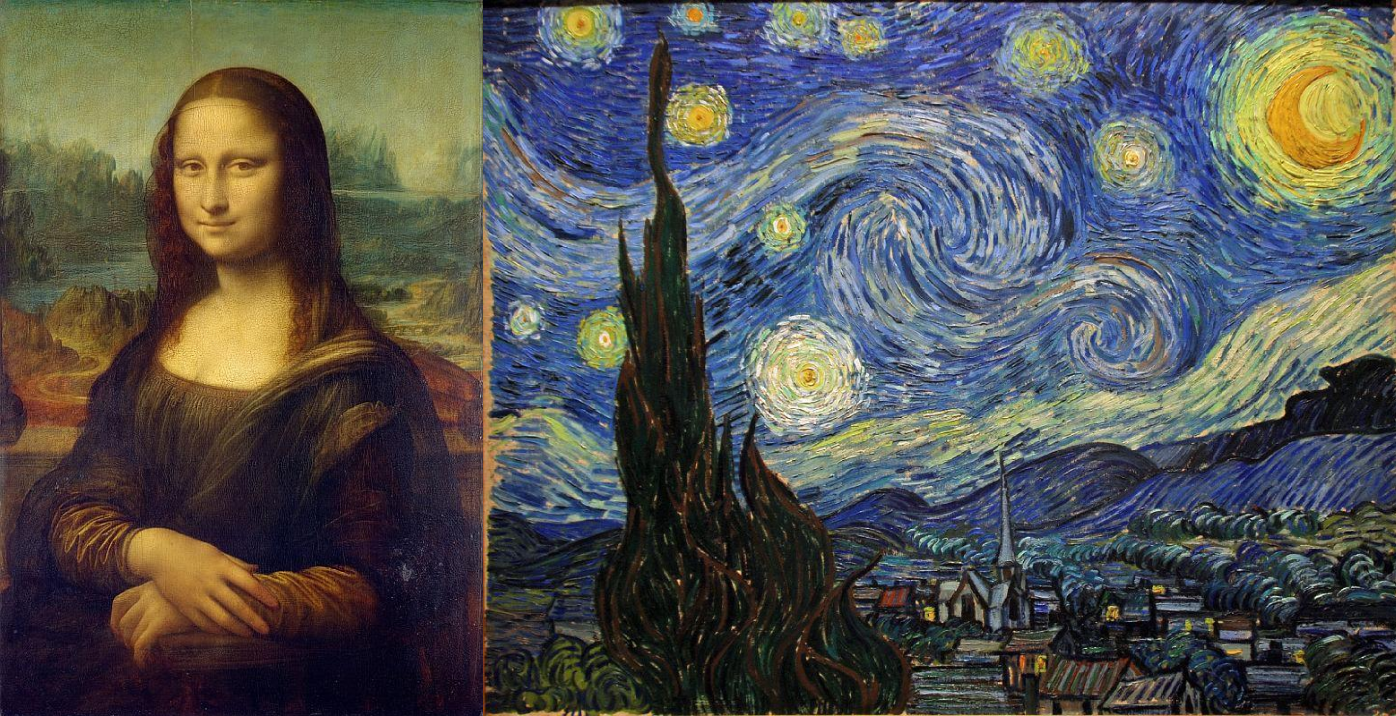

What happens when the Mona Lisa meets The Starry Night? We used DeepDream, a computer vision program from Google, to create wonderful new fusions of famous artworks. To present these "dreams", we built an interactive, immersive virtual reality art gallery.

How it works

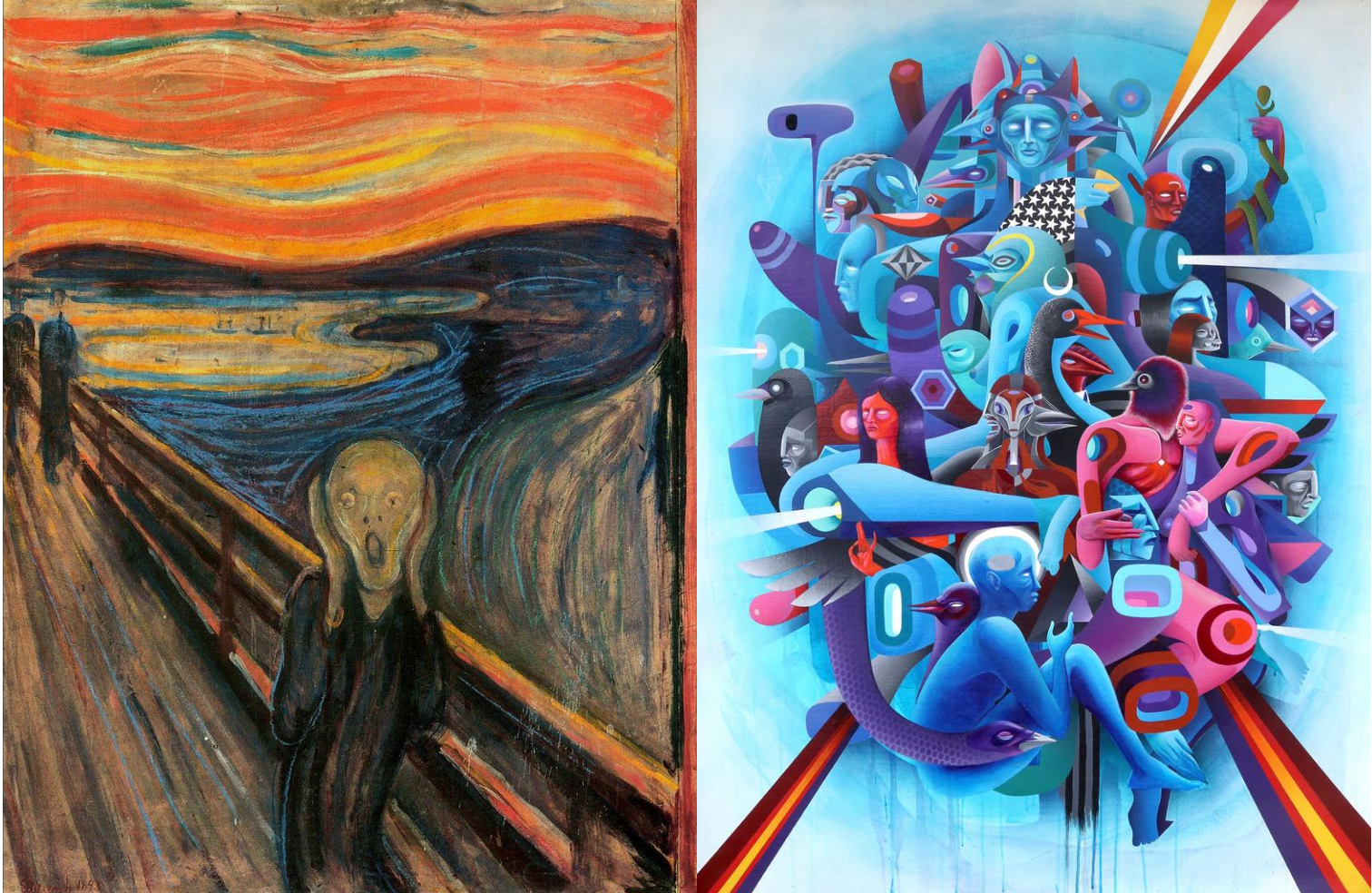

Our code is based on a deep neural network that takes two inputs: a "base" image (e.g. the Mona Lisa) and a "guide" image (e.g. The Starry Night).

In short, the network looks for patterns in the base image that resemble patterns in the guide image, then amplifies them, projecting the guide image's patterns onto the matching regions of the base image.
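The matching step described above can be sketched in a few lines. This is a minimal NumPy sketch modeled on the "guide" objective in Google's original DeepDream notebook, not this project's actual code. As a loud simplification, it treats raw RGB pixel vectors as the "features"; real DeepDream takes features from an intermediate layer of a pretrained CNN such as GoogLeNet and backpropagates the gradient to the pixels. The names `guide_gradient`, `dream_step`, and `step_size` are hypothetical.

```python
import numpy as np


def guide_gradient(x_feats, y_feats):
    """For every position in the base features, return the guide feature
    vector with the largest dot product against it.

    x_feats: (C, H, W) features of the current (base) image.
    y_feats: (C, H2, W2) features of the guide image.
    """
    c = x_feats.shape[0]
    x = x_feats.reshape(c, -1)       # (C, H*W): one column per base position
    y = y_feats.reshape(c, -1)       # (C, H2*W2): one column per guide position
    scores = x.T @ y                 # (H*W, H2*W2) matrix of dot products
    best = scores.argmax(axis=1)     # best-matching guide position for each base position
    return y[:, best].reshape(x_feats.shape)


def dream_step(base, guide, step_size=1.5):
    """One gradient-ascent step on the image.

    Toy assumption: the features ARE the pixels, so the gradient of the
    objective sum(x * matched_guide) with respect to the image is simply
    the matched guide vectors.
    """
    g = guide_gradient(base, guide)
    # Scale the step by the mean gradient magnitude, as the DeepDream notebook does.
    return base + step_size / (np.abs(g).mean() + 1e-8) * g


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base = rng.random((3, 8, 8))     # stand-in for the "base" image
    guide = rng.random((3, 4, 4))    # stand-in for the "guide" image
    dreamed = dream_step(base, guide)
    print(dreamed.shape)             # (3, 8, 8)
```

Repeating `dream_step` pulls each region of the base image further toward the guide pattern it already most resembles, which is why the result keeps the base image's layout while taking on the guide's texture.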

For the actual gallery, we embedded 12 works of art into a Unity3D world.

We coded hand interaction with LeapVR so that a user can select two pieces of art for DeepDream to fuse in real time.

Challenges

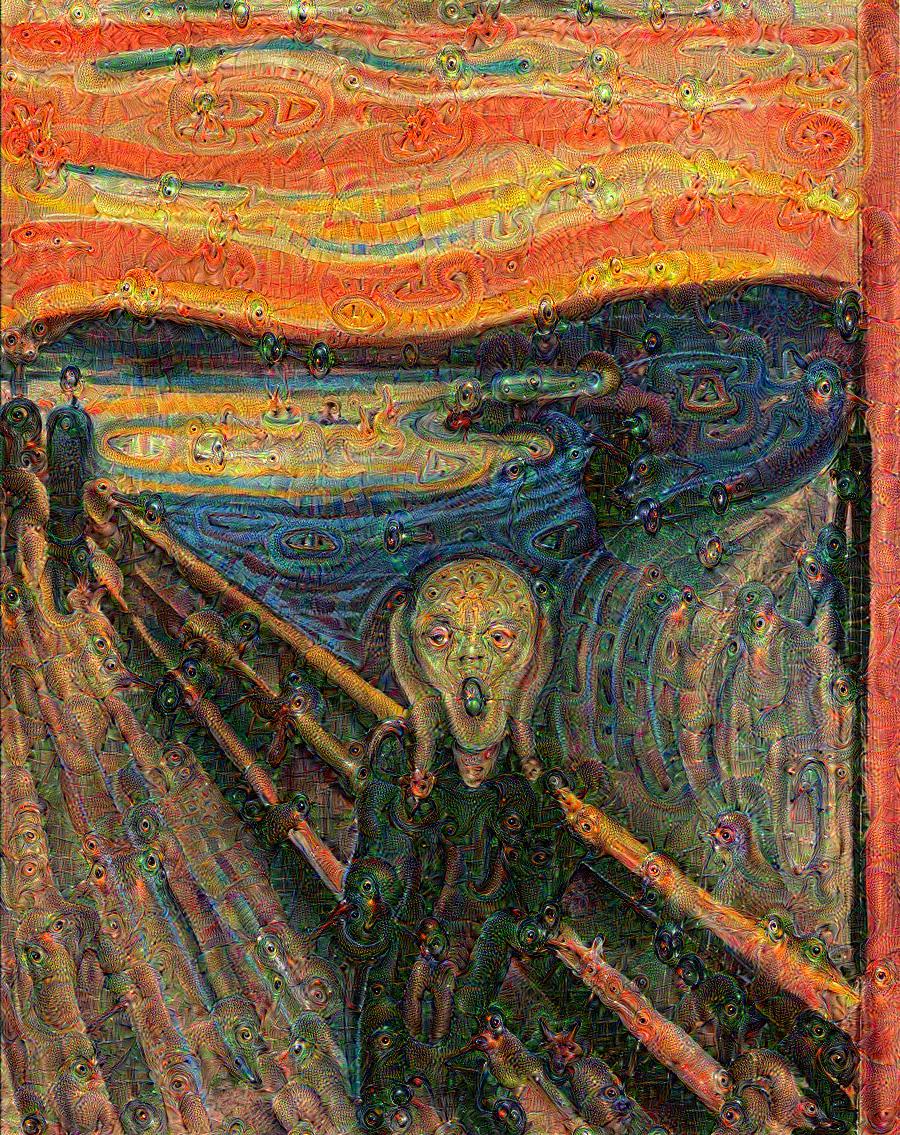

We made movies of the art fusions (e.g. "Starry Lisa", "Disintegration of Screams", "Surrounded by Artificial Houses"), but we didn't have time during the 48-hour hackathon to embed them into the Unity3D world with LeapVR interaction.

Highlights

Projecting work by modern artist Doze Green onto The Scream makes it all the more frightening.

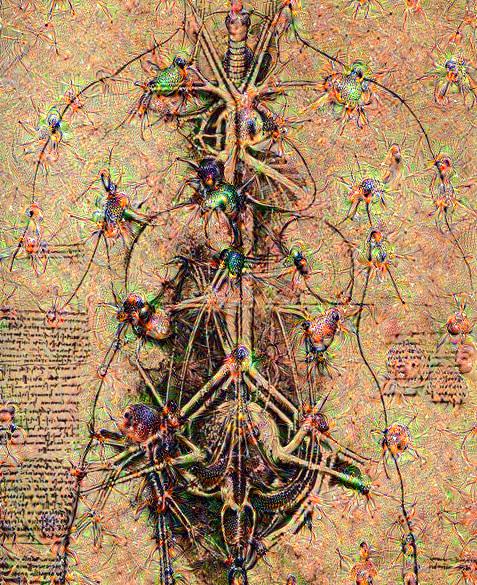

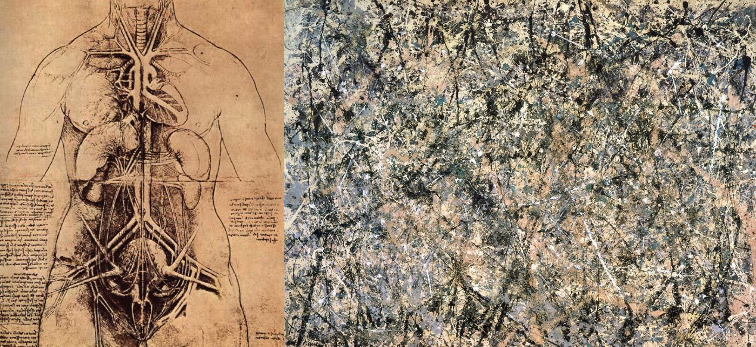

Leonardo da Vinci's sketches take on new abstractions after being infused with work by Jackson Pollock.

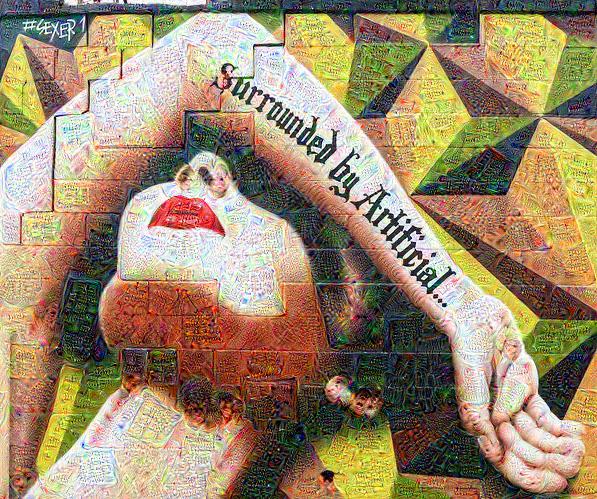

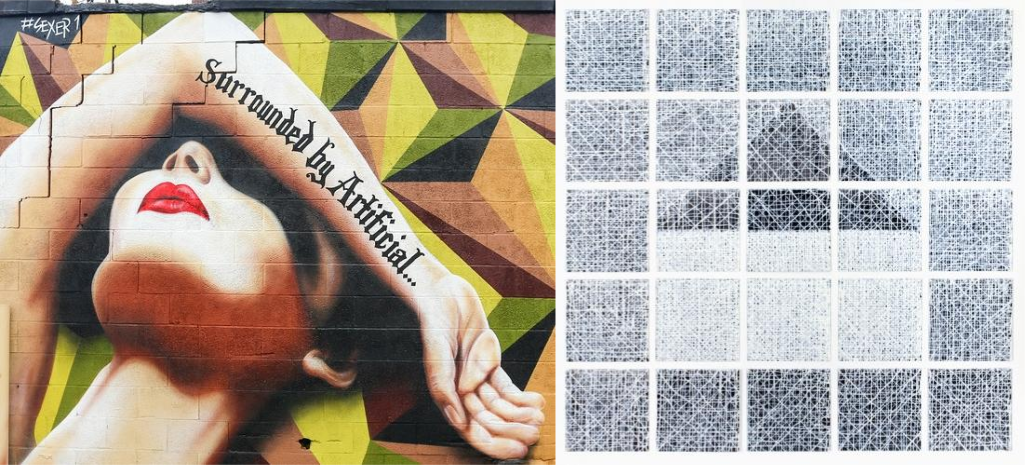

Work by New York City street artist Sexer is made even more "artificial" with help from MoMA artist Jennifer Bartlett.

Conclusion

We used artificial intelligence to create art for a virtual gallery. Sweet dreams.

Built With

- deepdream

- leapvr

- unity
