Focura

A body-aware study companion

Focura is a privacy-first focus app that monitors your posture and phone-gaze in real time, sending gentle nudges whenever you slouch, drift to your screen, or need a break—helping you stay comfortable, distraction-free, and locked into productive Pomodoro sessions.

💡 Inspiration

Hours of exam prep left us stiff-necked and scatter-brained. Posture gadgets felt clunky, and “study-with-me” timers ignored biomechanics. We envisioned a software-only coach—one tab, one camera, zero data leaks—that guards both productivity and wellness.

🎯 What It Does

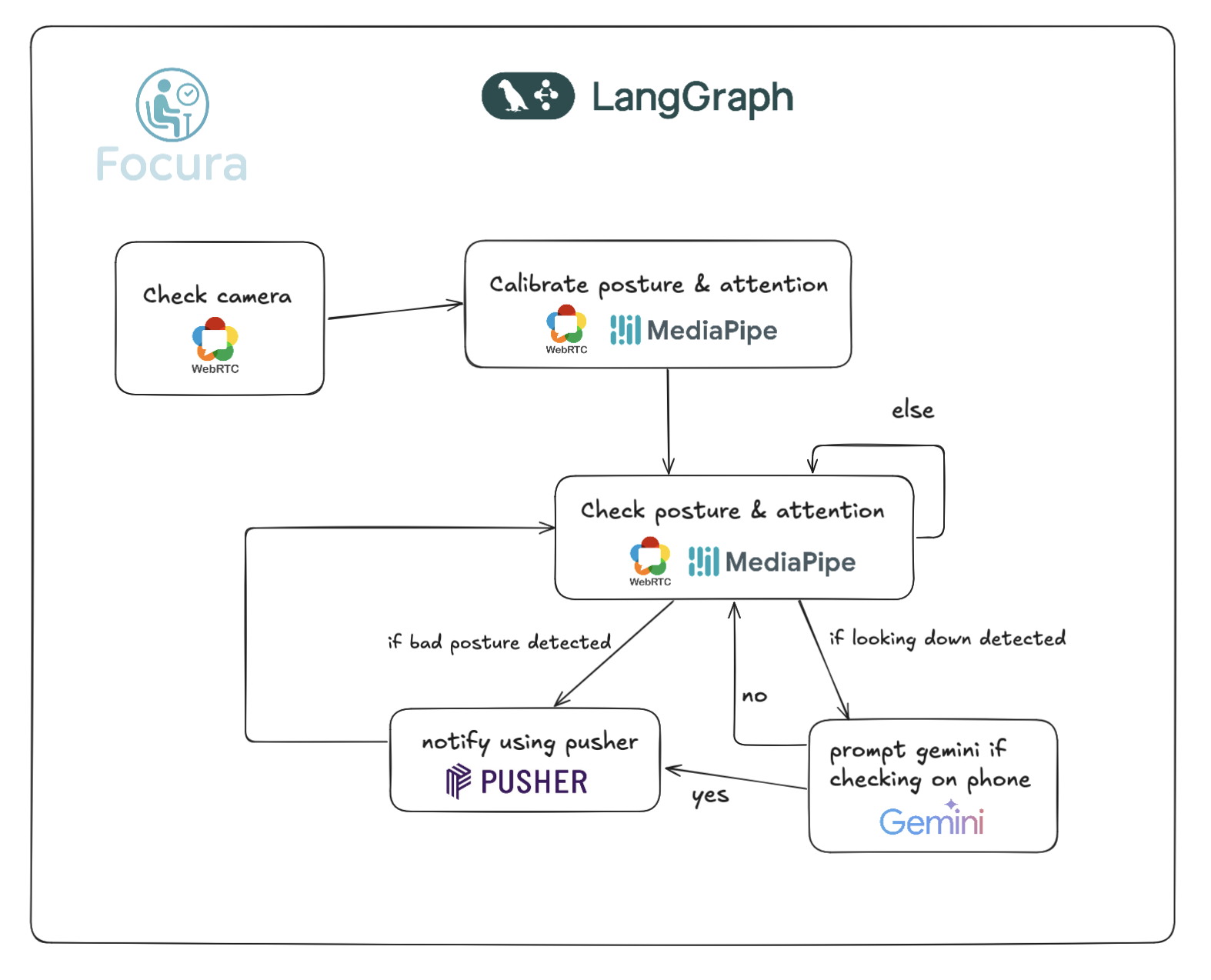

- Posture Sentinel – MediaPipe Pose flags ≥ 15° shoulder-ear drift and issues toast alerts.

- Phone-Gaze Detector – A downward head angle triggers “looking-down” events to curb doom-scrolling.

- Pomodoro & Hydration Coach – Classic 25/5-minute work/break cycles with streak badges.

- Pair-Focus Mode – WebRTC mirrors posture tiles with friends for mutual accountability, signalled via Pusher Channels.

- Privacy First – All computer-vision inference runs locally; only lightweight JSON deltas leave the machine.
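The posture check comes down to a small geometric test. The sketch below is an illustration under stated assumptions, not Focura's code. It treats "shoulder-ear drift" as the angle between the shoulder-to-ear segment and the vertical, and the helper names (`to_pixels`, `ear_shoulder_angle`, `is_slouching`) are hypothetical. MediaPipe Pose reports landmarks normalized to the frame (ears at indices 7/8, shoulders at 11/12), so the sketch scales them to pixels first so that the frame's aspect ratio does not distort the angle.

```python
import math
from typing import Tuple

# (x, y) in pixels; y grows downward, as in image coordinates.
Point = Tuple[float, float]

DRIFT_THRESHOLD_DEG = 15.0  # from the write-up: flag drift of 15 degrees or more


def to_pixels(norm_xy: Point, width: int, height: int) -> Point:
    """Scale a normalized MediaPipe-style (x, y) landmark to pixel coordinates."""
    return (norm_xy[0] * width, norm_xy[1] * height)


def ear_shoulder_angle(ear: Point, shoulder: Point) -> float:
    """Degrees between the shoulder-to-ear segment and the vertical axis.

    0 means the ear sits directly above the shoulder; larger values mean the
    head has drifted away from that upright line (hypothetical definition).
    """
    dx = abs(ear[0] - shoulder[0])
    dy = shoulder[1] - ear[1]  # positive when the ear is above the shoulder
    return math.degrees(math.atan2(dx, dy))


def is_slouching(ear: Point, shoulder: Point,
                 threshold: float = DRIFT_THRESHOLD_DEG) -> bool:
    """True when the drift angle meets or exceeds the threshold."""
    return ear_shoulder_angle(ear, shoulder) >= threshold


if __name__ == "__main__":
    # Ear 30 px ahead of the shoulder and 100 px above it: about 16.7 degrees.
    print(round(ear_shoulder_angle((130, 0), (100, 100)), 1))
    print(is_slouching((130, 0), (100, 100)))
```

Using `atan2` instead of a plain slope avoids dividing by zero when the ear sits level with the shoulder. It also keeps the angle meaningful if the head drops below shoulder height.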

🏗️ How We Built It

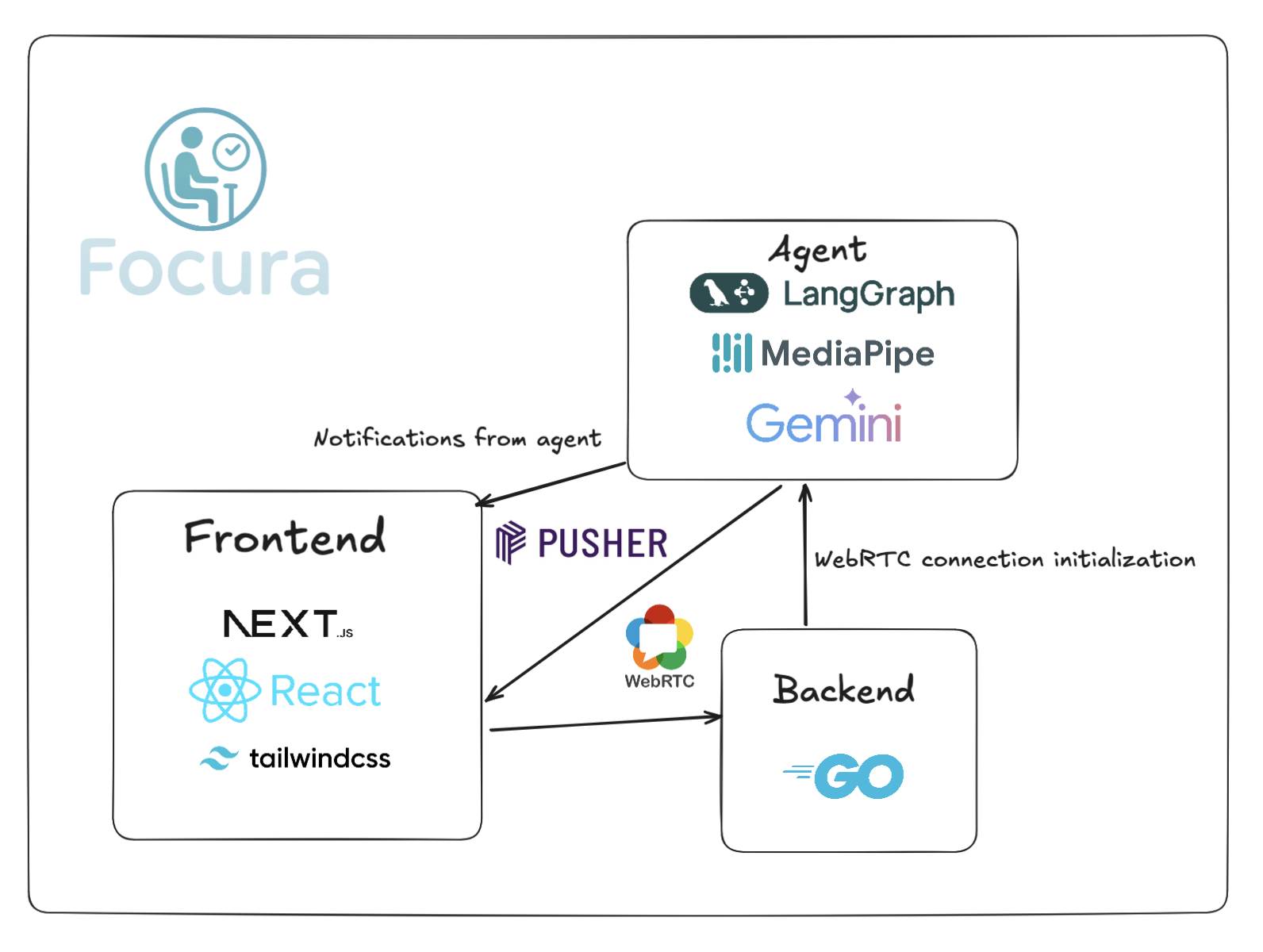

- LangChain agent – A Python 3.11 agent, orchestrated by LangGraph, continuously captures webcam frames with OpenCV, runs MediaPipe Pose to extract body landmarks, and converts posture or phone-gaze deviations into compact events.

- Realtime transport – Events publish to Pusher Channels; tiny Go serverless endpoints on Vercel act as authenticated triggers and also relay WebRTC SDP/candidates for optional peer video.

- Web dashboard – A Next.js 14 front-end, styled with Tailwind CSS and shadcn/ui, subscribes to Pusher streams, animates toast notifications, runs a Pomodoro timer, and embeds the WebRTC `<video>` element, all written in TypeScript and React.
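One way to picture the "compact events" the agent publishes is as JSON deltas that are emitted only when something changes. The sketch below assumes that each frame is reduced to a few categorical flags. `DeltaEmitter` and the `posture`/`gaze` field names are hypothetical and do not represent Focura's actual event schema. Publishing the payload to Pusher, for example through the Go trigger endpoint, is left out.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class DeltaEmitter:
    """Hypothetical helper: turns per-frame state into compact JSON deltas.

    Only keys whose value changed since the last emitted event are sent, so a
    user who sits still produces no traffic at all.
    """
    last: Dict[str, str] = field(default_factory=dict)

    def update(self, state: Dict[str, str], ts: Optional[float] = None) -> Optional[str]:
        changed = {k: v for k, v in state.items() if self.last.get(k) != v}
        if not changed:
            return None  # nothing new, nothing leaves the machine
        self.last.update(changed)
        stamp = time.time() if ts is None else ts
        event = {"t": round(stamp, 3), **changed}
        # Compact separators keep each payload to a few dozen bytes.
        return json.dumps(event, separators=(",", ":"))


if __name__ == "__main__":
    emitter = DeltaEmitter()
    print(emitter.update({"posture": "ok", "gaze": "screen"}, ts=1.0))
    print(emitter.update({"posture": "ok", "gaze": "screen"}, ts=1.1))
    print(emitter.update({"posture": "slouch", "gaze": "screen"}, ts=2.5))
```

In this run, the first call sends the full state and the second sends nothing (`None`). The third sends only `{"t":2.5,"posture":"slouch"}`. Because raw frames never leave the process, this design keeps the "JSON-only" privacy property.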

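🧰 Tech Stack & Tools

- Vision agent – Python 3.11, OpenCV, MediaPipe Pose, LangChain, LangGraph

- Realtime – Pusher Channels, WebRTC, Go serverless endpoints on Vercel

- Front-end – Next.js 14, React, TypeScript, Tailwind CSS, shadcn/ui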

🧗‍♂️ Challenges

- Camera permissions – On macOS, a three-retry safety wrapper was needed to avoid false negatives.

- Threshold tuning – Balancing user comfort against nag frequency meant averaging five calibration frames into a baseline and applying adaptive ±15° bands.

- Serverless signalling – During a 24-hour hackathon, writing minimal Go handlers was faster than spinning up Socket.IO infrastructure.
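The calibration step can be sketched as follows. Five per-frame posture angles (however they are computed) are averaged into a personal baseline, and later readings are flagged when they drift 15° or more from it. Reading "adaptive" as "centred on each user's own baseline" is an assumption. `PostureBaseline` and its methods are hypothetical names, not Focura's code.

```python
from statistics import fmean
from typing import List, Optional

CALIBRATION_FRAMES = 5  # from the write-up: average five calibration frames
BAND_DEG = 15.0         # from the write-up: ±15° band


class PostureBaseline:
    """Hypothetical calibration helper (not Focura's actual class)."""

    def __init__(self, frames: int = CALIBRATION_FRAMES, band: float = BAND_DEG) -> None:
        self.frames = frames
        self.band = band
        self.samples: List[float] = []
        self.baseline: Optional[float] = None

    def calibrate(self, angle: float) -> bool:
        """Record one calibration angle; return True once the baseline is set."""
        if self.baseline is None:
            self.samples.append(angle)
            if len(self.samples) >= self.frames:
                # The user's own resting angle becomes the centre of the band.
                self.baseline = fmean(self.samples)
        return self.baseline is not None

    def out_of_band(self, angle: float) -> bool:
        """True when a reading drifts at least `band` degrees from the baseline."""
        if self.baseline is None:
            raise RuntimeError("baseline not calibrated yet")
        return abs(angle - self.baseline) >= self.band


if __name__ == "__main__":
    cal = PostureBaseline()
    for reading in [4.0, 5.0, 6.0, 5.0, 5.0]:
        ready = cal.calibrate(reading)
    print(cal.baseline, ready)      # 5.0 True
    print(cal.out_of_band(19.0))    # 14 degrees away: False
    print(cal.out_of_band(20.0))    # 15 degrees away: True
```

Averaging several frames smooths out single-frame landmark jitter. Centring the band on the user's own resting angle means that someone who naturally sits with a slight lean is not nagged constantly.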

🏆 Accomplishments

- Autonomous computer-vision agent – Designed and implemented a self-calibrating posture agent that maintains a rolling baseline, recovers from camera errors, streams JSON-only events, and stays cross-platform.

- Sub-200 ms feedback loop – Achieved end-to-end alert latency under 200 ms, fast enough to correct posture before discomfort sets in.

- Inclusive UI design – Delivered a colour-blind-safe interface without sacrificing clarity, aided by utility-first styling.

🔮 What’s Next

- Guided stretch routines after repeated bad-posture events.

- React Native companion app leveraging on-device Pose for mobile study sessions.

- Leaderboards and streaks to gamify consistency and build a posture-proud community.
