Scale Diffusion: The City Never Sleeps. Neither Does the Data.

The best AI models are only as good as their data. We're building the machine that feeds itself.

The Problem

Training the next generation of AI video and autonomous driving models requires massive amounts of high-quality, paired data — frame sequences tied to human decisions, captured across diverse scenarios.

The current approaches to collecting this data are relics of an older era:

- Manual annotation is slow, expensive, and soul-crushingly boring. Labelers burn out in windowless towers, clicking boxes until their eyes blur. Quality drops. Costs scale linearly. It's assembly-line work for the information age — humans as cogs in a data machine.

- Synthetic data from simulation engines looks sterile — too clean, too perfect, like a city with no people in it. Models trained on it struggle when they hit the chaos of real streets. The domain gap is a canyon.

- Dashcam scraping gives you millions of hours of empty highways stretching to vanishing points and almost nothing interesting — no near-misses at rain-slicked intersections, no complex merges, no edge cases where AI actually fails.

- Fleet data from megacorps like Waymo and Tesla is locked in corporate vaults, inaccessible to the broader research community. The data exists behind reinforced walls. You just can't touch it.

The result? AI models that cruise perfectly on empty highways and seize up at their first crowded intersection.

The fundamental bottleneck isn't compute. It isn't model architecture. It's data — specifically, diverse urban scenario data with paired human inputs that captures the long tail of real-world driving through dense, living cities.

We need a way to generate high-quality training data at scale, across thousands of edge cases, with real human decision-making attached to every frame.

But nobody wants to sit in a labeling tower for $12/hour clicking bounding boxes under humming fluorescents.

So we asked: what if the workers didn't know they were working?

The Solution

Scale Diffusion transforms AI training data collection into something people actually want to do. Users navigate a towering 3D metropolis rendered in Three.js, threading through complex traffic scenarios while watching their world transform in real-time through AI diffusion models — and every frame pair, every input they generate, feeds the machine. Connect a Solana wallet, and the machine pays you back.

Here's what a session looks like:

0:00 — You open Scale Diffusion. A retro terminal flickers to life — monochrome text cascading down the screen, system diagnostics scrolling like factory readouts.

0:15 — You enter your Solana wallet address. The terminal validates it, then the screen fractures and rebuilds itself — fullscreen terminal collapsing into a 480x480 window, the city loading behind it.

0:20 — A 3D Manhattan rises before you. Towers rendered from real NYC geometry data fill the frame, streets cutting canyons between them. You grab the mouse, lock the pointer, and descend into the grid.

0:22 — Decart Mirage activates. Through WebRTC, your raw Three.js canvas streams to an AI diffusion model at 30fps. The city transforms — flat polygons become weathered concrete and glass, streets gain grime and texture, the sky fills with haze and distant lights. You're driving through a machine-hallucinated metropolis in real time.

0:30 — A scenario triggers: Rush Hour Intersection. Nine entities spawn from the urban flow — four cross-traffic vehicles, a cyclist weaving between lanes, three pedestrians, a jaywalker cutting against the signal. You have 30 seconds to navigate without collision.

1:00 — Scenario complete. Every frame pair (before/after AI transformation), every keyboard press, every mouse movement, your collision data, your success or failure — all logged. The machine has been fed.

1:01 — Next scenario loads. You keep driving. The data keeps flowing. The city never stops.

What Makes This Work

1. Real-Time AI Texture Generation (Decart Mirage)

Your game canvas streams at 30fps over WebRTC to Decart's Mirage model. The AI transforms raw Three.js geometry into a living cityscape, overlaid on your game at z-index 100. This creates paired data — the geometric "ground truth" canvas and the AI-rendered metropolis — synchronized frame-by-frame.

The pipeline:

- Canvas captures at 30fps via

captureStream() - WebSocket connects to Decart's streaming endpoint

RTCPeerConnectionexchanges SDP offers with VP8 codec- ICE candidates negotiate the fastest path through the network

- AI-transformed video streams back in real time

- Prompts update mid-stream as the city shifts around you

Latency under 100ms. Interactive framerates. The player never waits. The machine never rests.

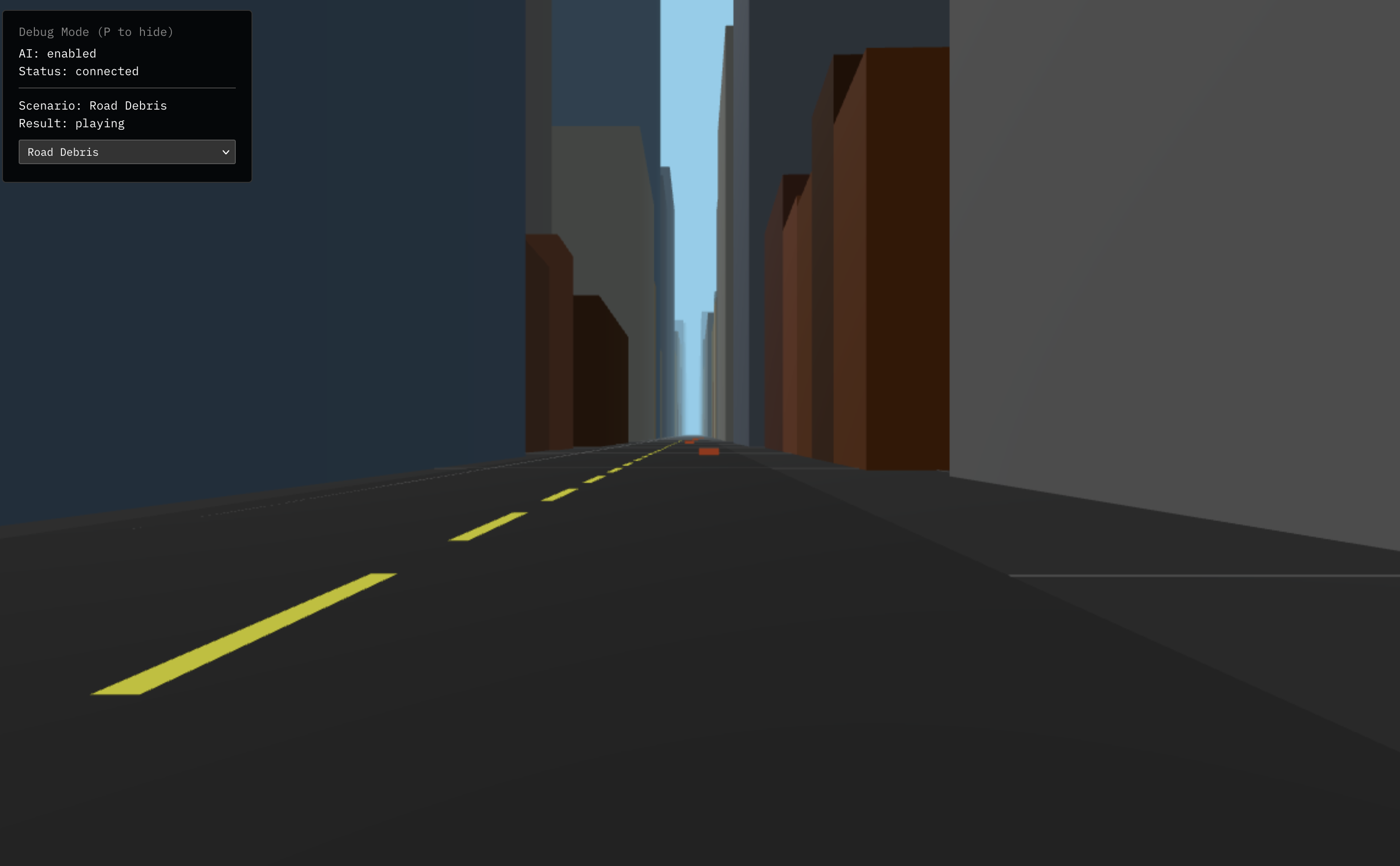

2. Scenario-Driven Edge Case Generation

11 hand-crafted urban scenarios systematically cover the situations where AI fails most — the chaos that clean simulations can't replicate:

| Scenario | Entities | What It Tests |

|---|---|---|

| Oncoming Traffic | Head-on vehicle | Basic collision avoidance |

| Highway Cut-In | Lane merger | Reaction time to lateral threats |

| Busy Intersection | Cross traffic + pedestrians | Multi-agent awareness |

| Road Debris | 4 obstacles | Static hazard navigation |

| Lead Vehicle Braking | Sudden stop ahead | Following distance judgment |

| Heavy Traffic | 3+ vehicles + oncoming | Multi-vehicle coordination |

| Rush Hour Intersection | 9 entities | Complex multi-wave traffic |

| Highway Pile-Up | 7 entities | Emergency scene navigation |

| School Zone Chaos | 8 entities | Unpredictable pedestrian behavior |

| Construction Zone | 7 entities | Narrow corridor maneuvering |

| Double Intersection Gauntlet | 9 entities | Chained decision-making under pressure |

Each scenario uses Bezier-interpolated entity trajectories, staged wave-based spawning, and success conditions (no_collision, reach_position, survive_duration). Entity types include vehicles, pedestrians, bicycles, obstacles, and emergency workers — all moving through the city with independent purpose.

This isn't random noise. It's orchestrated urban chaos — the exact edge-case data AI models starve for.

3. Streaming Video Diffusion Backend (StreamDiffusionV2)

For offline processing and model training, StreamDiffusionV2 runs causal video-to-video diffusion — the engine room powering the transformation:

- Wan 2.1 models (1.3B for real-time, 14B for quality)

- Causal DiT architecture with rolling KV cache — no full history reprocessing

- Adaptive noise scheduling based on frame-to-frame content changes

- Multi-GPU distributed inference via torchrun for production scale

- 2-step denoising at 480x832 achieving ~16fps on single GPU

The architecture processes video as a stream, not a batch — a continuous flow of frames through the machine, enabling real-time generation that scales from a single GPU to an entire cluster.

4. Blockchain-Native Rewards (Solana)

Every session is tied to a validated Solana wallet. The payout pipeline runs like clockwork:

Game Session → Frame Capture → S3 Raw Storage → SQS Queue

→ LangGraph Validation (blur, consistency, hallucination checks)

→ S3 Clean Storage → Token Payout to Wallet

Only quality-validated data triggers rewards. Drive well, generate clear data from diverse angles, and the machine compensates you. The blockchain makes every payout transparent — visible on-chain for anyone to verify. No corporate vaults. No hidden ledgers.

5. Physics-Based Collision Detection

Point-in-polygon testing with circle-polygon intersection for precise building boundary collision from real Manhattan geometry. Eight perimeter sample points plus center testing ensure no clipping through walls — the city's architecture is solid, unyielding. Collision events feed directly into scenario success/failure conditions and data quality signals.

Built With

- amazon-web-services

- decart

- langgraph

- langsmith

- solana

- three

- webrtc

- websockets

Log in or sign up for Devpost to join the conversation.