Anyone can be a DJ!


Cindy Li · Cindy Yang · Elise Zhu

Inspiration

Most DJ tools are locked behind expensive hardware, steep learning curves, or clunky software. We wanted to create something more intuitive, immersive, and fun — where anyone, regardless of their experience or equipment, could feel like they were in control of the music.

The idea for wubwub was born out of a simple question: What if we could DJ with just our hands and a browser?

That question turned into an exploration of gesture control, real-time audio manipulation, and bringing physical feedback (LEDs, sound effects) to life in a web-first, cross-platform experience.

What it Does

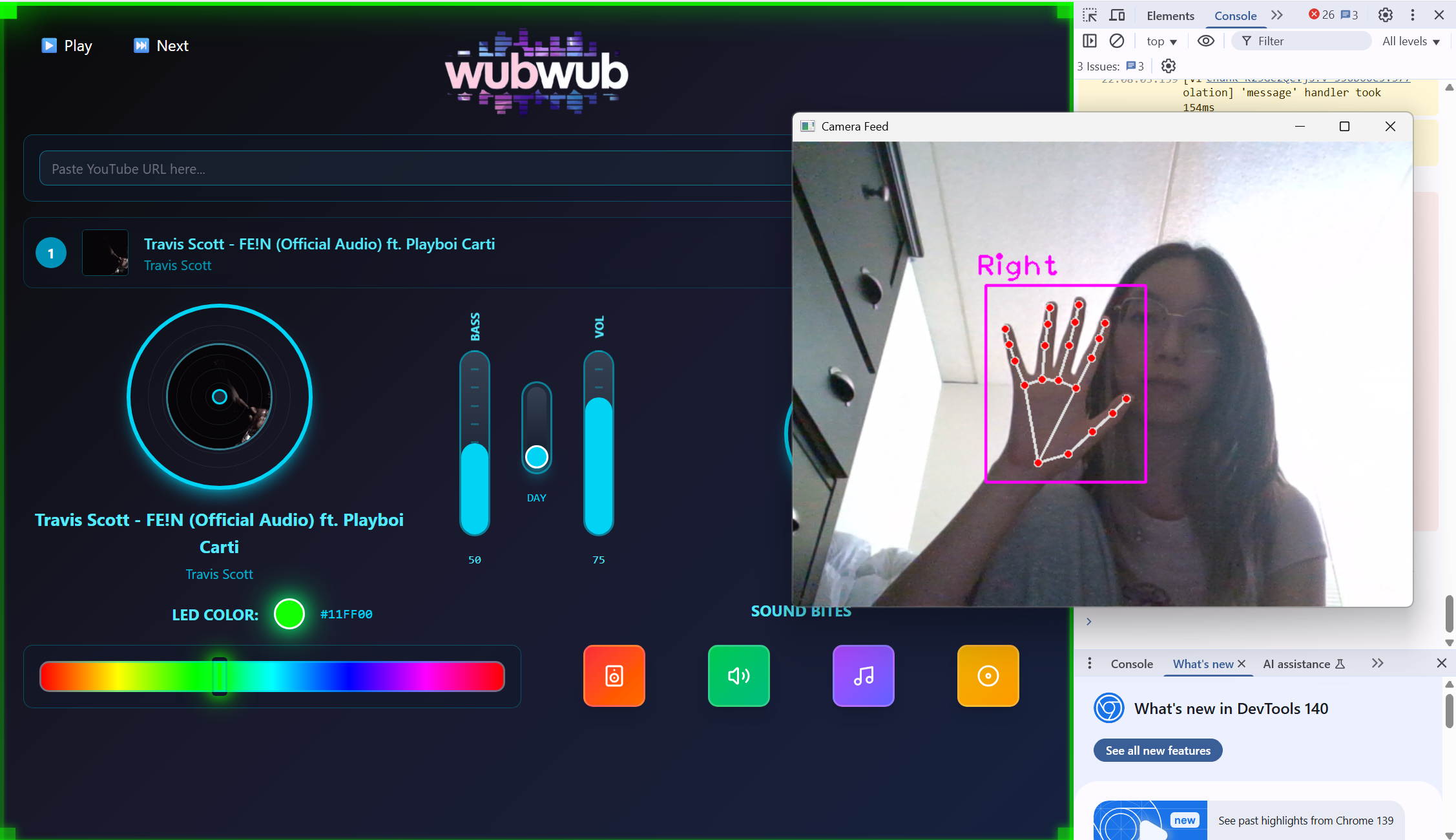

wubwub is a web-based, gesture-controlled music mixing interface that brings the energy of a DJ setup straight into the browser. Because it is built on computer vision, no keyboard or mouse is required: every control responds to hand movements!

Features

- 👋 Uses your hand gestures (via webcam) to control volume, bass, and effects

- 💿 Dual spinning discs display album art for current and queued tracks

- 🌌 “Nightcore mode”, triggered by a gesture, alters playback speed and visuals in real time

- 🔗 Add songs directly via YouTube links, no account required

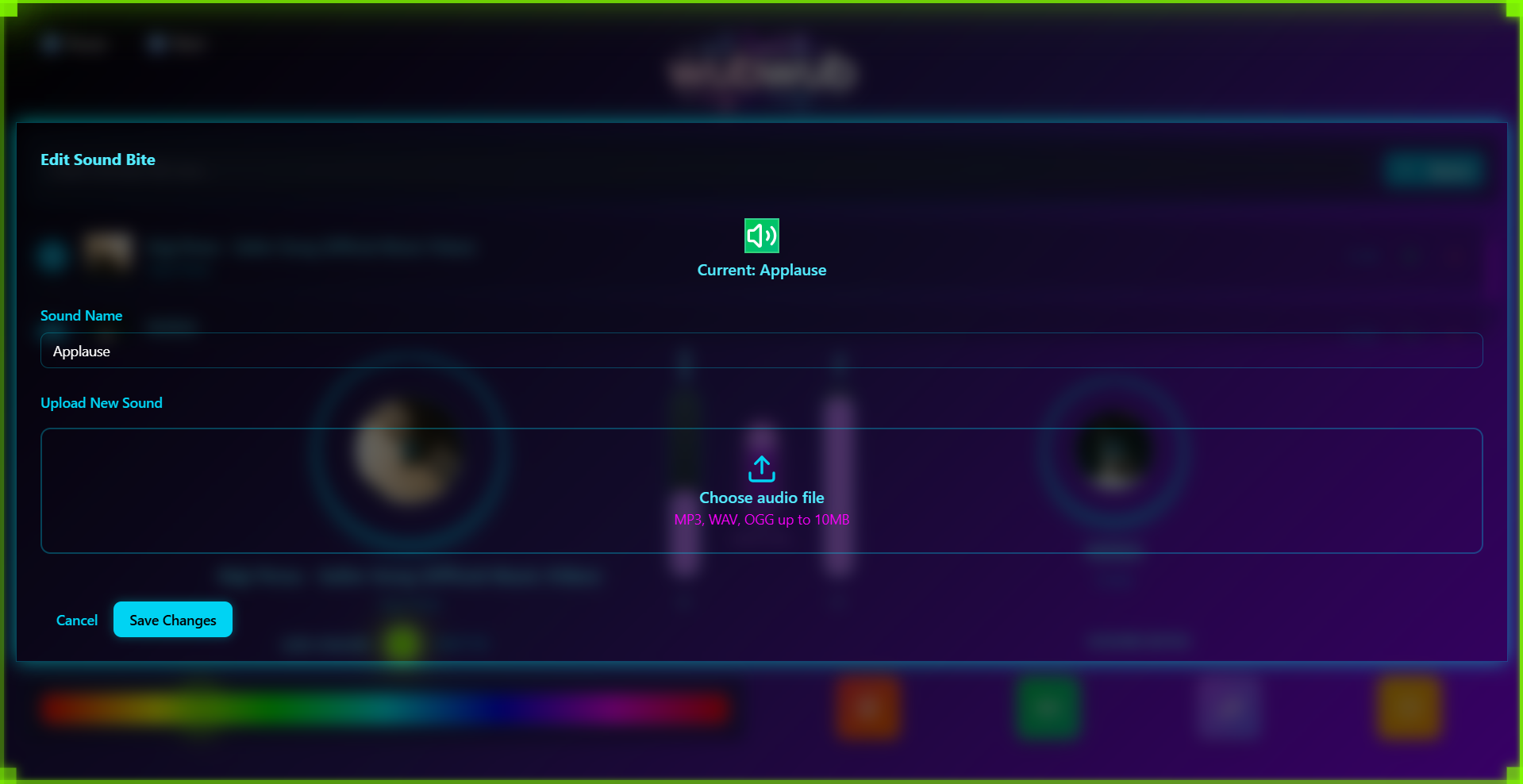

- 🎛️ Customizable sound bites for on-the-fly remixing, editable through a pop-up menu

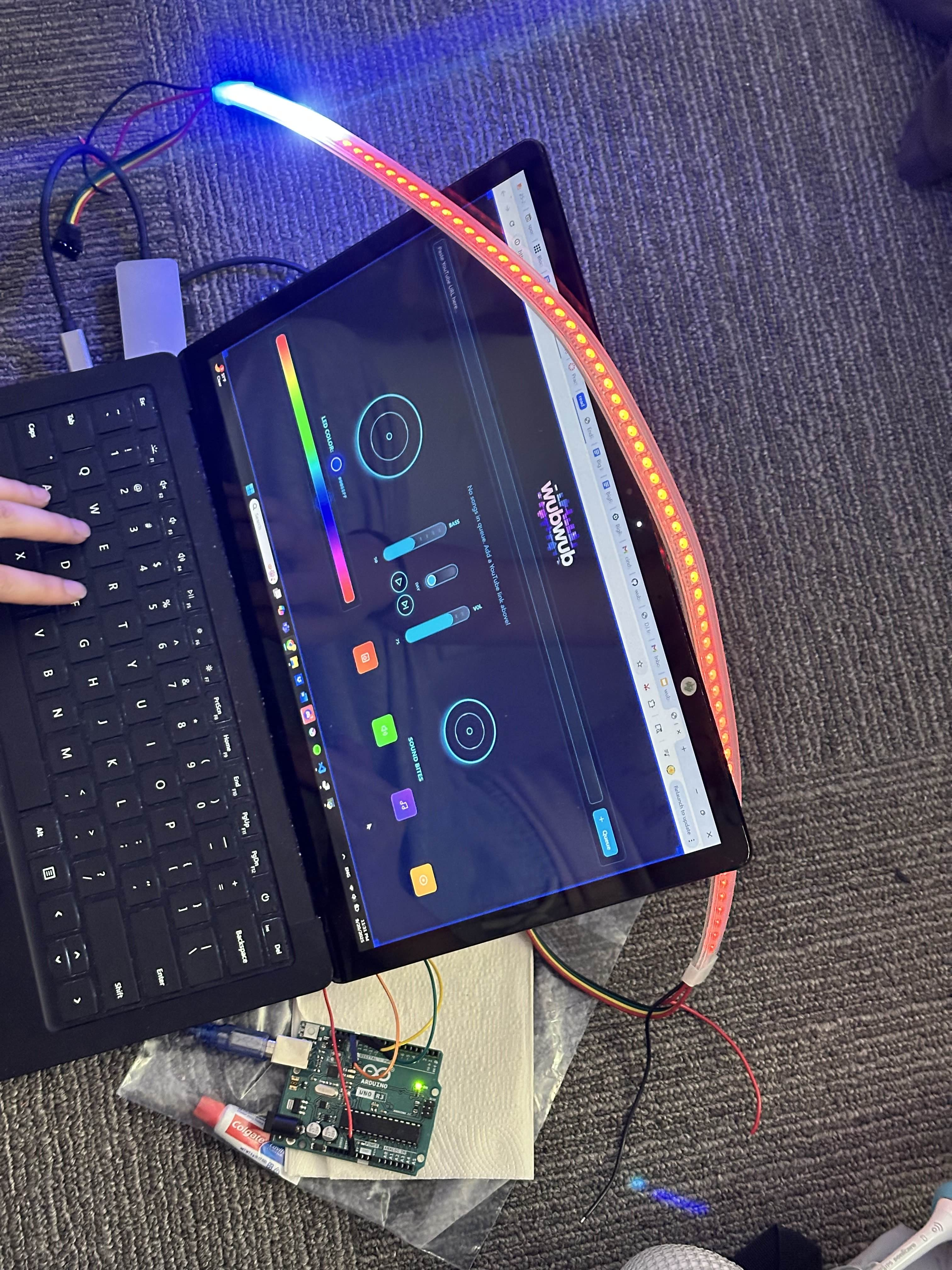

- 🎨 LED lighting synced to the mood and beat of the song, with visuals along the border of the interface
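Nightcore mode speeds playback up. If the rate is applied through an AudioBufferSourceNode's playbackRate, which resamples the audio rather than time-stretching it, speed and pitch rise together. The short Python sketch below shows that arithmetic; the rate of 1.25 is a hypothetical value, since the write-up does not state the one wubwub uses.

```python
import math

NIGHTCORE_RATE = 1.25  # hypothetical rate; wubwub's actual value is not stated


def pitch_shift_semitones(rate: float) -> float:
    """Pitch change caused by resampling playback at `rate` (12 semitones per octave)."""
    return 12 * math.log2(rate)


def playback_duration(seconds: float, rate: float) -> float:
    """Wall-clock length of a track played back at `rate`."""
    return seconds / rate


if __name__ == "__main__":
    print(f"{pitch_shift_semitones(NIGHTCORE_RATE):.2f} semitones up")
    print(f"a 3:00 track now lasts {playback_duration(180, NIGHTCORE_RATE):.0f} s")
```

Doubling the rate raises the pitch by exactly one octave, which is why even modest rate changes are clearly audible.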

How We Built It

Technologies

MongoDB

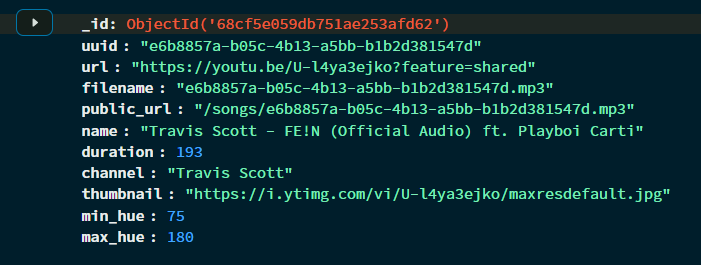

MongoDB stores the active song queue, sound bite configurations, and user session data. It allows the system to persist state across reloads and ensures seamless queue management when multiple tracks are added.
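The write-up does not describe the queue schema, so the following is only a minimal sketch of how a persistent FIFO queue could look with pymongo collection methods (insert_one and find_one_and_delete with a sort). The queued_at field and the timestamp-based ordering are assumptions made for illustration.

```python
import time


def add_to_queue(queue, track: dict) -> None:
    """Append a track document; a nanosecond timestamp (hypothetical field) records order."""
    queue.insert_one({**track, "queued_at": time.time_ns()})


def pop_next(queue):
    """Atomically remove and return the oldest queued track, or None if the queue is empty."""
    # 1 is pymongo.ASCENDING: oldest timestamp first
    return queue.find_one_and_delete({}, sort=[("queued_at", 1)])
```

Against a real server, queue could be MongoClient("mongodb://localhost:27017")["wubwub"]["queue"]; the database and collection names here are placeholders. Because find_one_and_delete is a single server-side operation, two clients popping at once cannot receive the same track.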

Gemini

Gemini enriches the music experience by generating color hues that match the mood of each song. These hues are displayed on both the website and the LED strip, and they flash to the beat!
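How the Gemini output is turned into light is not specified. The sketch below assumes, hypothetically, that Gemini's answer has already been parsed into a hue in degrees. It converts that hue to an 8-bit RGB color with Python's colorsys module and uses a simple linear fade as a stand-in for the "flash to the beat" effect; the decay time and brightness floor are illustrative values.

```python
import colorsys


def hue_to_rgb(hue_degrees: float, brightness: float = 1.0) -> tuple[int, int, int]:
    """Convert a mood hue (0-360 degrees) into an 8-bit RGB triple at the given brightness."""
    value = max(0.0, min(1.0, brightness))
    r, g, b = colorsys.hsv_to_rgb((hue_degrees % 360) / 360, 1.0, value)
    return round(r * 255), round(g * 255), round(b * 255)


def beat_brightness(seconds_since_beat: float, decay: float = 0.25, floor: float = 0.3) -> float:
    """Full brightness on the beat, fading linearly down to `floor` over `decay` seconds."""
    fade = max(0.0, 1.0 - seconds_since_beat / decay)
    return floor + (1.0 - floor) * fade
```

Calling hue_to_rgb(hue, beat_brightness(t)) every frame yields a color that spikes on each beat and settles back to a dimmer version of the song's mood color.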

Web Audio API

The Web Audio API is a low-level audio processing interface built into modern browsers. Instead of just playing audio files, it exposes a graph-based system of audio nodes that can be connected, modified, and rerouted in real time.

In our project, audio streams resolved by YouTube-DLP are fed into nodes created from an AudioContext, where we apply a biquad filter for bass boosting, playback-rate changes for Nightcore mode, and a gain node for volume control. Because the API runs natively in the browser's audio engine, these transformations are fast and sample-accurate, giving us fine-grained, real-time control over the sound without external software.
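The Web Audio specification defines its biquad filter types using formulas from Robert Bristow-Johnson's Audio EQ Cookbook. To show what a bass-boost node actually computes, here is a Python sketch of the cookbook's low-shelf coefficients with shelf slope S = 1, together with a frequency-response check. The 200 Hz corner and +6 dB boost are hypothetical settings, not values taken from wubwub.

```python
import cmath
import math


def lowshelf_coefficients(f0: float, gain_db: float, fs: float = 48000.0):
    """Normalized (b0, b1, b2, a1, a2) for a low-shelf biquad, Audio EQ Cookbook form, S = 1."""
    A = 10 ** (gain_db / 40)  # A**2 is the linear gain applied below the corner
    w0 = 2 * math.pi * f0 / fs  # corner frequency in radians per sample
    cos_w0 = math.cos(w0)
    # General form: sin(w0)/2 * sqrt((A + 1/A) * (1/S - 1) + 2), which is sqrt(2) when S = 1
    alpha = math.sin(w0) / 2 * math.sqrt(2)
    k = 2 * math.sqrt(A) * alpha

    b0 = A * ((A + 1) - (A - 1) * cos_w0 + k)
    b1 = 2 * A * ((A - 1) - (A + 1) * cos_w0)
    b2 = A * ((A + 1) - (A - 1) * cos_w0 - k)
    a0 = (A + 1) + (A - 1) * cos_w0 + k
    a1 = -2 * ((A - 1) + (A + 1) * cos_w0)
    a2 = (A + 1) + (A - 1) * cos_w0 - k
    return b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0


def magnitude(coeffs, f: float, fs: float = 48000.0) -> float:
    """Linear magnitude response |H(e^{jw})| of the biquad at frequency f."""
    b0, b1, b2, a1, a2 = coeffs
    z_inv = cmath.exp(-1j * 2 * math.pi * f / fs)
    num = b0 + b1 * z_inv + b2 * z_inv ** 2
    den = 1 + a1 * z_inv + a2 * z_inv ** 2
    return abs(num / den)


if __name__ == "__main__":
    bass_boost = lowshelf_coefficients(200.0, 6.0)
    for f in (50, 200, 1000, 5000):
        print(f"{f:>5} Hz: {20 * math.log10(magnitude(bass_boost, f)):+.2f} dB")
```

Well below the corner the response approaches the full boost, while high frequencies pass through at unity gain. In the browser, the equivalent node comes from ctx.createBiquadFilter() with type set to "lowshelf" and the corner and boost set through its frequency and gain AudioParams.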

MediaPipe and OpenCV

MediaPipe provides a pretrained, GPU-optimized pipeline for tracking hand landmarks in real time (21 key points per hand). Under the hood, it uses deep learning models to estimate the 3D positions of these landmarks from a webcam feed. We use these points to detect gestures: for example, vertical hand movement maps to volume, and a toggle gesture flips the Nightcore switch.
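Here is a sketch of the landmark-to-control mapping described above. It assumes the 21 landmarks have already been converted to (x, y, z) tuples, with x and y normalized to [0, 1] as MediaPipe reports them and y growing downward. The indices 0, 4, and 8 are MediaPipe's wrist, thumb tip, and index fingertip. The active band for volume and the pinch used as the toggle are hypothetical choices, not wubwub's confirmed gestures.

```python
import math
from typing import Sequence

Landmark = tuple[float, float, float]  # (x, y, z), x and y normalized to [0, 1]

WRIST, THUMB_TIP, INDEX_FINGER_TIP = 0, 4, 8  # MediaPipe hand landmark indices


def volume_from_hand(landmarks: Sequence[Landmark], top: float = 0.2, bottom: float = 0.8) -> float:
    """Map the wrist's height in the frame to a 0-1 volume (higher hand means louder)."""
    y = landmarks[WRIST][1]
    level = (bottom - y) / (bottom - top)  # image y grows downward, so invert it
    return max(0.0, min(1.0, level))


def is_pinching(landmarks: Sequence[Landmark], threshold: float = 0.05) -> bool:
    """Hypothetical toggle gesture: thumb tip and index fingertip nearly touching."""
    tx, ty, _ = landmarks[THUMB_TIP]
    ix, iy, _ = landmarks[INDEX_FINGER_TIP]
    return math.hypot(tx - ix, ty - iy) < threshold
```

Using a band narrower than the full frame (top and bottom) means users can reach 0% and 100% volume without moving their hand to the very edge of the camera's view.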

Meanwhile, OpenCV handles lower-level video preprocessing (frame capture, smoothing, thresholding). MediaPipe alone can track landmarks, but OpenCV gives us finer control to stabilize and clean the input data. Together, they provide fast, accurate gesture recognition that runs on ordinary consumer webcams.
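Smoothing always involves a tradeoff between jitter and lag. One common way to handle it is an exponential moving average over landmark coordinates. The sketch below is offered as an illustration and is not necessarily the filter wubwub uses; the single alpha parameter sets where the balance falls.

```python
class LandmarkSmoother:
    """Exponential moving average over landmark coordinates.

    alpha near 1 follows the hand closely (responsive but jittery);
    alpha near 0 suppresses jitter but lags behind fast movements.
    """

    def __init__(self, alpha: float = 0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state = None  # last smoothed frame, one tuple per landmark

    def update(self, landmarks):
        """Blend a new frame of landmarks into the running average and return it."""
        points = [tuple(p) for p in landmarks]
        if self._state is None:
            self._state = points  # first frame: nothing to blend with yet
        else:
            a = self.alpha
            self._state = [
                tuple(a * new + (1 - a) * old for new, old in zip(p, s))
                for p, s in zip(points, self._state)
            ]
        return self._state
```

Each new frame moves the smoothed position a fraction alpha of the way toward the raw reading, so a single noisy frame is damped while a sustained movement still comes through within a few frames.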

YouTube-DLP

YouTube-DLP (yt-dlp) is a Python-based command-line tool and library that bypasses YouTube’s standard playback UI to fetch direct media streams and metadata. Under the hood, it parses YouTube’s page structure, extracts the dynamic streaming manifests (DASH/HLS), and resolves the highest-quality audio link.

We use it to retrieve audio in a format the Web Audio API can consume directly, along with thumbnails and metadata for the spinning CD visuals. Because fetching and parsing manifests can take a while, we run this step in a background thread so the UI doesn’t freeze while new tracks are added.
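Here is a sketch of the background lookup, assuming yt-dlp's embedding API (YoutubeDL(...).extract_info(url, download=False) with a "bestaudio/best" format selector) and a thread pool from the standard library. The enqueue_track helper, the shape of the returned dictionary, and the injectable fetch parameter are hypothetical and included for illustration and testing.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable

_executor = ThreadPoolExecutor(max_workers=2)  # background workers for slow lookups


def fetch_audio_info(url: str) -> dict:
    """Resolve a direct audio stream URL plus metadata with yt-dlp, without downloading."""
    from yt_dlp import YoutubeDL  # imported lazily so this module loads without yt-dlp

    opts = {"format": "bestaudio/best", "quiet": True, "noplaylist": True}
    with YoutubeDL(opts) as ydl:
        info = ydl.extract_info(url, download=False)
    return {"title": info.get("title"), "audio_url": info.get("url"), "thumbnail": info.get("thumbnail")}


def enqueue_track(url: str, on_ready: Callable[[dict], None], fetch=fetch_audio_info) -> Future:
    """Start the lookup in a worker thread and return immediately."""

    def _deliver(future: Future) -> None:
        # Runs in the worker thread once the lookup finishes; failures stay on the future
        if future.exception() is None:
            on_ready(future.result())

    future = _executor.submit(fetch, url)
    future.add_done_callback(_deliver)
    return future
```

The request handler that receives a YouTube link can return as soon as enqueue_track is called, and on_ready can then add the track to the queue and notify the front end.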

Arduino and Adafruit

Arduino drives the connected Adafruit hardware to sync the digital experience with the physical world. The LED strips update in real time with the color visualizer, extending the board’s energy into a live glowing border. The Python server sends these color updates to the Arduino as instructions over a serial connection.
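The serial protocol itself is not documented in the write-up. The sketch below assumes a hypothetical format of one ASCII line "R,G,B\n" per update, which an Arduino sketch could read with a line-based parser. The port argument is any object with a write(bytes) method, such as an open pyserial Serial.

```python
def encode_color(r: int, g: int, b: int) -> bytes:
    """Hypothetical wire format: one ASCII line "R,G,B\\n" per color update."""
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError(f"channel out of range: {channel}")
    return f"{r},{g},{b}\n".encode("ascii")


def send_color(port, r: int, g: int, b: int) -> None:
    """Write one color update to an already-open serial port."""
    port.write(encode_color(r, g, b))
```

With pyserial, port could be serial.Serial("/dev/ttyUSB0", 115200, timeout=1); the device path and baud rate are placeholders that must match the board and the rate configured in its firmware.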

Sockets and Multithreading

Instead of having the front end constantly poll a REST API for updates (which is inefficient and introduces latency), we used WebSockets to push gesture recognition results directly from the CV backend to the React interface. This gives near real-time responsiveness — the moment a hand moves, the slider updates.
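Pushing on every camera frame can flood the socket with updates that make no visible difference. The sketch below sends a value only when it changes by more than a small threshold. The emit callable is injected; with Flask-SocketIO it could be socketio.emit, although the write-up does not confirm which socket library is used. The event name, payload shape, and threshold are hypothetical.

```python
from typing import Callable, Dict


class GesturePublisher:
    """Push slider values to the front end only when they actually change."""

    def __init__(self, emit: Callable[[str, dict], None], min_delta: float = 0.01):
        self._emit = emit  # e.g. socketio.emit with Flask-SocketIO (an assumption)
        self._min_delta = min_delta
        self._last: Dict[str, float] = {}  # last value sent, per control

    def publish(self, control: str, value: float) -> bool:
        """Send `value` for `control` if it moved enough; return whether a message went out."""
        last = self._last.get(control)
        if last is not None and abs(value - last) < self._min_delta:
            return False  # change too small to be worth a message
        self._last[control] = value
        self._emit("gesture_update", {"control": control, "value": round(value, 3)})
        return True
```

Because the last sent value is stored for each control, a steady hand produces almost no traffic, while an actual movement still reaches the slider on the very next frame.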

We also applied multithreading in the backend: YouTube-DLP runs in its own thread, so video metadata extraction and audio URL resolution don’t block the Flask server from handling other requests. This keeps gesture control and LED updates smooth while new songs load in parallel.

Figma and Tailwind

Figma was used to design the UI with an emphasis on neon, futuristic DJ vibes. Tailwind CSS translated those designs into a clean, responsive, and customizable interface that feels both modern and immersive.

Vite & React

React powers the interactive UI — from the dual spinning CDs to the live queue and sound bite grid. Vite makes development fast and efficient with hot reloading and optimized builds for smooth browser performance.

Flask

Flask serves as the project’s backend framework, connecting all the pieces together. It manages the API endpoints, handles YouTube-DLP calls, talks to MongoDB, and coordinates real-time communication with the front end and hardware.

Challenges We Ran Into

- Gesture Drift: Early versions had noisy hand data. We had to balance smoothing with responsiveness so that sliders didn’t lag behind real gestures.

- YouTube Latency: YouTube-DLP fetches can take time. We used multithreading to avoid blocking the Flask server and keep the UI smooth.

- LED Timing: Coordinating LED color flashes with music mood changes over serial required careful integration.

- Gesture Ambiguity: Distinguishing between different hand poses with similar motions required fine-tuned landmark logic.
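One simple landmark rule for telling poses apart is to treat a finger as extended when its tip sits above its middle (PIP) joint in the image. The sketch below applies this rule and assumes an upright hand, MediaPipe's landmark indices, and normalized (x, y, z) tuples. The pose names are hypothetical, and the thumb is skipped because it bends sideways and would need a different test.

```python
# (PIP joint, fingertip) MediaPipe landmark indices for the four non-thumb fingers
FINGERS = {
    "index": (6, 8),
    "middle": (10, 12),
    "ring": (14, 16),
    "pinky": (18, 20),
}


def extended_fingers(landmarks) -> set:
    """Names of fingers whose tip is above its PIP joint (image y grows downward)."""
    return {name for name, (pip, tip) in FINGERS.items() if landmarks[tip][1] < landmarks[pip][1]}


def classify_pose(landmarks) -> str:
    """Map the set of extended fingers to a coarse, hypothetical pose label."""
    up = extended_fingers(landmarks)
    if len(up) == 4:
        return "open_palm"
    if not up:
        return "fist"
    if up == {"index", "middle"}:
        return "peace"
    return "unknown"
```

Classifying on the set of extended fingers, rather than on the direction of motion, means two gestures that move the same way can still be told apart.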

What We Learned

- Latency matters: When building reactive experiences, every frame counts. We gained a deep appreciation for concurrency, socket design, and non-blocking architectures.

- Web Audio is underrated: It’s a powerful but underused API — learning how to harness it made a huge difference in audio quality and control.

- Hardware + software fusion (LEDs + UI) is incredibly rewarding, but requires careful timing to feel seamless.

- The power of threads and event-driven design in Flask/Python was key to keeping everything reactive and non-blocking.

What's Next

- Multiplayer mode — mix with friends live in the same session, with shared queues

- Visualizations — add beat-synced particle effects or waveform displays

- Cloud DJ sets — save and share your mixes as downloadable playlists

- Enhanced LED syncing — use FFT data to pulse LEDs not just by mood, but by beat and frequency
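For the FFT idea, one possible starting point is to measure what fraction of a short audio frame's energy falls in a bass band and drive LED intensity from that value. The NumPy sketch below uses a Hann-windowed real FFT. The 20 to 150 Hz band is an illustrative choice, and this is a sketch of the planned feature, not something wubwub already does.

```python
import numpy as np


def band_energy(samples: np.ndarray, sample_rate: int, low_hz: float, high_hz: float) -> float:
    """Sum of squared FFT magnitudes for bins between low_hz and high_hz (inclusive)."""
    windowed = samples * np.hanning(len(samples))  # taper edges to reduce spectral leakage
    spectrum = np.fft.rfft(windowed)
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)
    mask = (freqs >= low_hz) & (freqs <= high_hz)
    return float(np.sum(np.abs(spectrum[mask]) ** 2))


def bass_level(samples: np.ndarray, sample_rate: int, bass=(20.0, 150.0)) -> float:
    """Fraction (0-1) of the frame's energy that falls in the bass band."""
    total = band_energy(samples, sample_rate, 0.0, sample_rate / 2)
    return band_energy(samples, sample_rate, *bass) / total if total > 0 else 0.0
```

Computing this for each frame of roughly 2048 samples and tracking sudden jumps in the bass fraction would give a rough beat signal that could pulse the LEDs independently of the mood color.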

Contact

Cindy Li (audio processing, hardware, YouTube extraction) - cl2674@cornell.edu

Cindy Yang (design, MongoDB, hardware, Gemini, threading) - cwyang@umich.edu

Elise Zhu (gesture recognition, sockets, sound bites) - eyz7@georgetown.edu
