Inspiration

When we got to Boston for this hackathon, we were pretty lost on where to eat. We really wanted to experience Boston's food culture, but we kept getting lost scrolling through Google search results that just surfaced the big chain restaurants. Eventually we gave up and walked to the nearest place we had already heard of. It was fine, but we knew we were missing out on what makes Boston food special. That's when we realized that with AI agents, Google data processing, and sentiment analysis, we could find the right places to eat every time, places that fit all of our preferences. No more going to the same boring place. No more missing out on real cultural experiences. You, Me, and Food. Together we make Yumi.

What it does

Yumi is an agentic social network that actually solves the group dining problem. You write reviews in natural language like you're texting a friend, and Gemini extracts what you actually care about: flavor profiles, atmosphere, price sensitivity, specific cuisines. Over time, it builds a taste profile for you.

When you want recommendations, just ask in natural language, or even speak to it. The AI retrieves your preferences and uses function calling to search and rank restaurants that match your taste. You mention your friends with '@', like on Discord, and the system merges everyone's preferences intelligently. It finds restaurants that work for the whole group, not just the majority. No more endless group chats trying to decide where to eat.
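To make the '@' mention flow concrete, here is a minimal sketch of pulling friend handles out of a request so their taste profiles can be loaded. The function name, the handle character set, and the case-insensitive de-duplication are all assumptions for illustration, not a description of how Yumi parses mentions.

```python
import re
from typing import List

# Hypothetical rule: a handle is letters, digits, or underscores after '@'.
# The lookbehind skips '@' inside words, e.g. email addresses.
MENTION_RE = re.compile(r"(?<!\w)@([A-Za-z0-9_]+)")


def extract_mentions(message: str) -> List[str]:
    """Return unique mentioned handles, lowercased, in order of first appearance."""
    seen: List[str] = []
    for raw in MENTION_RE.findall(message):
        handle = raw.lower()
        if handle not in seen:
            seen.append(handle)
    return seen
```

Each returned handle would then be looked up to fetch that friend's profile before the group preferences are merged.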

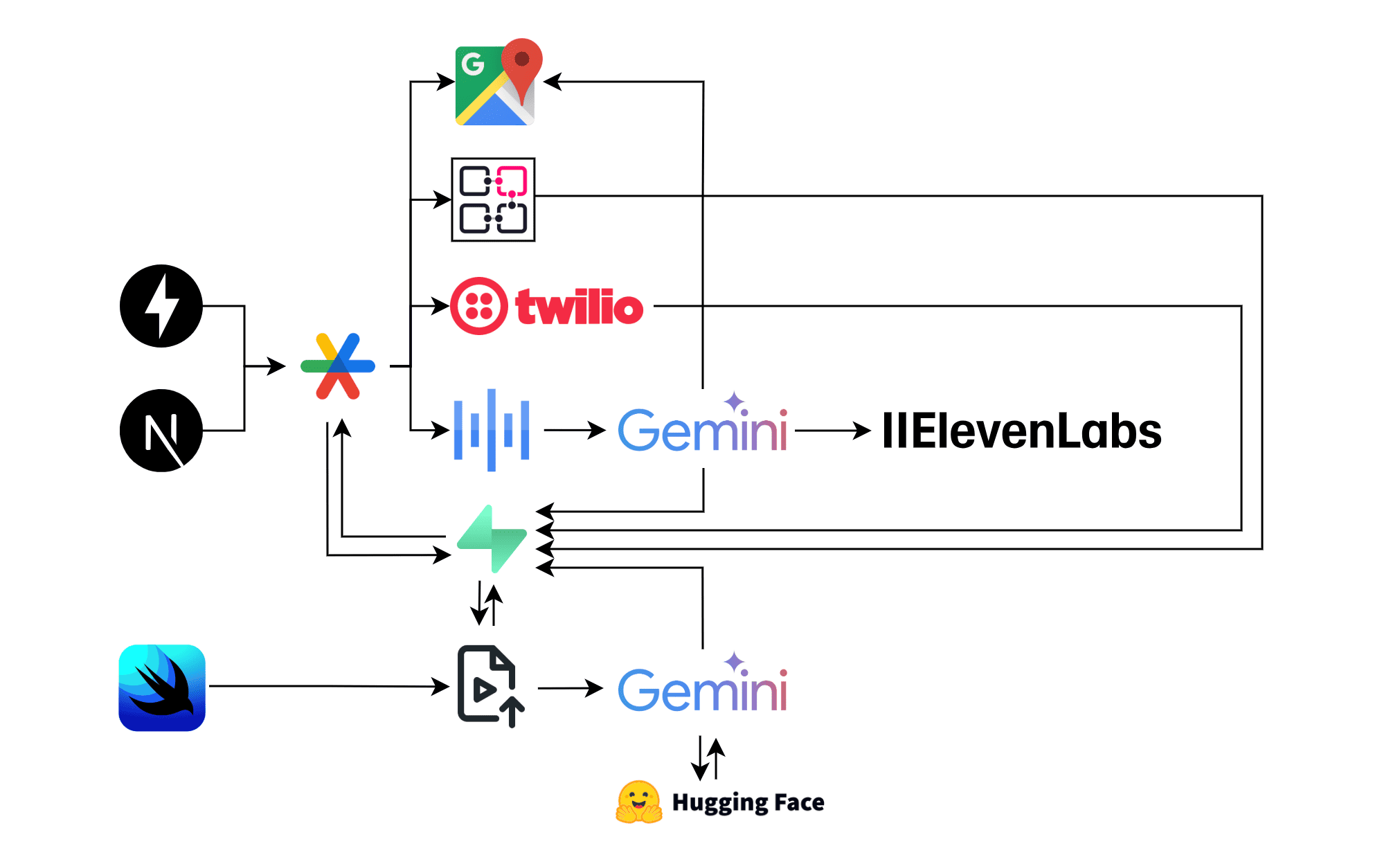

How we built it

In 36 hours, we built an iOS app in SwiftUI, a Python FastAPI backend, and a Next.js web dashboard. The iOS app handles food photos with optimized image resizing that cuts upload time by 70%. The backend uses Gemini Flash with function calling, where we defined custom tools like get_user_preferences and get_nearby_restaurants. Gemini decides when to call them and orchestrates the entire search.
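As a rough illustration of the function calling pattern, the sketch below shows a tool registry and a dispatcher that runs whichever tool the model asks for. Only the tool names get_user_preferences and get_nearby_restaurants come from our project; the argument names, the in-memory data, and the shape of the call dictionary are assumptions, and the Gemini SDK wiring is deliberately left out.

```python
from typing import Any, Callable, Dict, List

# Hypothetical in-memory stand-ins for the real database tables.
_PREFERENCES = {"alice": "loves spicy ramen, cozy spots, mid-range prices"}
_RESTAURANTS = [
    {"name": "Noodle Bar", "lat": 42.3601, "lng": -71.0589},
    {"name": "Harbor Grill", "lat": 42.3900, "lng": -71.0200},
]


def get_user_preferences(user_id: str) -> Dict[str, str]:
    """Return the stored taste summary for a user (empty if unknown)."""
    return {"user_id": user_id, "summary": _PREFERENCES.get(user_id, "")}


def get_nearby_restaurants(lat: float, lng: float, max_delta: float = 0.01) -> List[dict]:
    """Return restaurants inside a small lat/lng box around the point."""
    return [
        r for r in _RESTAURANTS
        if abs(r["lat"] - lat) <= max_delta and abs(r["lng"] - lng) <= max_delta
    ]


# Registry mapping tool names (as declared to the model) to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_user_preferences": get_user_preferences,
    "get_nearby_restaurants": get_nearby_restaurants,
}


def dispatch_tool_call(call: Dict[str, Any]) -> Any:
    """Run one tool call of the assumed form {"name": ..., "args": {...}}."""
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("args", {}))
```

In a loop like this, the model's requested call is dispatched, the result is sent back to the model, and the cycle repeats until the model answers in plain text.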

We built a custom Google Places scraper that divided Boston into overlapping grid cells and populated thousands of restaurants into our Supabase PostgreSQL database, which uses PostGIS for spatial queries. The friend mentions use a custom React hook that watches for @ symbols and queries the backend in real time. Everything deploys to production: Render for the backend, Netlify for the frontend, and Supabase for the database and auth.
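The overlapping grid idea can be sketched as follows. This is a simplified version under stated assumptions: cells are square in degrees, the overlap is a fixed fraction of the cell size, and the function name and parameters are hypothetical rather than taken from our scraper.

```python
import math
from typing import List, Tuple

Cell = Tuple[float, float, float, float]  # (south, west, north, east)


def overlapping_grid(
    south: float,
    west: float,
    north: float,
    east: float,
    cell_deg: float,
    overlap: float = 0.2,
) -> List[Cell]:
    """Tile a bounding box with square cells that overlap their neighbors.

    `overlap` is the fraction of a cell shared with the next cell, so the
    step between cell origins is cell_deg * (1 - overlap).
    """
    if cell_deg <= 0:
        raise ValueError("cell_deg must be positive")
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")

    step = cell_deg * (1 - overlap)
    rows = math.ceil((north - south) / step)
    cols = math.ceil((east - west) / step)

    cells: List[Cell] = []
    for i in range(rows):
        for j in range(cols):
            cell_south = south + i * step
            cell_west = west + j * step
            # Cells on the far edges may extend past the bounding box.
            cells.append((cell_south, cell_west, cell_south + cell_deg, cell_west + cell_deg))
    return cells
```

Because neighboring cells overlap, the same restaurant can show up in more than one cell, so results need to be de-duplicated on a stable place identifier before they are written to the database.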

Challenges we ran into

Making AI search fast enough was difficult. Early versions took 15-20 seconds. We fixed it by using Gemini Flash, implementing function calling so the model uses tools instead of guessing, and caching taste profiles. We also tried extracting structured JSON from reviews but it kept breaking on edge cases. Switching to natural language summaries made everything way more robust.
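For the caching part, a minimal sketch of a time-limited taste profile cache might look like this. The class name, the five-minute default, and the loader callback are assumptions for illustration; our actual cache may differ.

```python
import time
from typing import Callable, Dict, Tuple


class TasteProfileCache:
    """Keep recently loaded taste profiles in memory for a limited time."""

    def __init__(
        self,
        loader: Callable[[str], str],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader  # slow path, e.g. a database read
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, user_id: str) -> str:
        """Return the cached profile, reloading it if missing or expired."""
        now = self._clock()
        hit = self._entries.get(user_id)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]
        profile = self._loader(user_id)
        self._entries[user_id] = (now, profile)
        return profile

    def invalidate(self, user_id: str) -> None:
        """Drop a cached profile, e.g. after the user posts a new review."""
        self._entries.pop(user_id, None)
```

Invalidating on new reviews keeps a profile from going stale, while repeated searches by the same user skip the slow load.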

Merging preferences across multiple users was tricky. If one person loves spicy food and another hates it, what do you recommend? We implemented a smart union strategy that accommodates everyone, then told the LLM explicitly to find places that work for the whole group. Getting an iOS app, web dashboard, and backend to work together seamlessly in 36 hours required optimistic UI updates and a lot of background processing.
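One way a union-style merge could work is sketched below. It assumes each profile has already been reduced to sets of liked and disliked tags, which is a simplification of our natural language summaries, and the treatment of conflicts as a separate "contested" bucket is an illustrative choice rather than our exact strategy.

```python
from typing import Dict, List, Set

Profile = Dict[str, Set[str]]  # {"likes": {...}, "dislikes": {...}}


def merge_group_preferences(profiles: Dict[str, Profile]) -> Dict[str, List[str]]:
    """Union everyone's likes and dislikes, and flag tags the group disagrees on."""
    likes: Set[str] = set()
    dislikes: Set[str] = set()
    for profile in profiles.values():
        likes |= profile.get("likes", set())
        dislikes |= profile.get("dislikes", set())

    # Tags that one person likes and another dislikes, e.g. "spicy".
    contested = likes & dislikes

    return {
        "likes": sorted(likes - contested),
        "avoid": sorted(dislikes - contested),
        # For contested tags, the prompt can ask for places with options
        # on both sides instead of picking a winner.
        "contested": sorted(contested),
    }
```

With one person who loves spicy food and another who hates it, "spicy" lands in the contested bucket, which is where the explicit instruction to find places that work for the whole group does its job.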

Accomplishments that we're proud of

We built a fully functional agentic system in 36 hours that solves a real cultural problem. We came to Boston to experience the food scene and ended up building the tool we needed to actually find it. The taste profile system learns from natural language and the group preference merging is pretty accurate. Overall, we shipped an iOS app, a web dashboard, and a backend with AI orchestration and spatial database queries!

Most importantly, we proved that AI agents can do more than chat. They can learn, coordinate across multiple users, and make decisions that solve real coordination problems. The function calling implementation lets Gemini orchestrate complex searches. The natural language approach is way more flexible than traditional filters. And we did all this while scraping thousands of Boston restaurants.

What we learned

Building with LLMs in a hackathon taught us that the hard part isn't the AI. It's the caching, the error handling, and making it fast enough to feel real. We learned that natural language is a better interface than forms. People want to just say what they liked, and the AI can extract structure when needed.

We also learned that social features need to be functional, not decorative. The friend graph isn't for likes and comments; it's for solving the group coordination problem. This shift from social network to agentic coordination platform changed everything about how we designed features. We learned that the best hackathon projects come from problems you experience yourself. We built Yumi because we needed it.

What's next for Yumi

We want the system to proactively suggest meetups based on overlapping preferences, automatically call restaurants for reservations using voice AI, and learn from actual dining outcomes to improve recommendations. We're building a reputation system where the AI learns which friends have similar taste to you and weights their preferences accordingly.

We also want to expand beyond Boston to help people discover local food culture in any city, integrate with calendars for automatic scheduling, and handle dietary restrictions across entire groups. The vision is simple: AI agents do the coordination work, so humans can focus on experiencing local culture and spending time together over good food.

Built With

- elevenlabs

- fastapi

- gemini

- google-gemini-ai

- google-places

- netlify

- next.js

- python

- react

- render

- sentence-transformers

- supabase

- swift

- swiftui

- tailwind

- tailwind-css

- twilio

- typescript
