alpha launch trailer

3D AI POWERED OPEN-WORLD

GAMING EXPERIENCE

12K Generated Models

2 Person Team

30 Days to Alpha

100+ AI-generated Sounds

4 Km² Map

2 Custom Typefaces

Explore an immersive

future metropolis

directly in your browser

WHAT

The Dream is a fully playable 3D open-world experience created by a two-person team in 30 days, leveraging over 12,000 AI-generated assets. The project demonstrates a new paradigm in game development, where small teams can produce expansive, high-fidelity worlds previously achievable only through large AAA pipelines.

By embedding 3D AI assets directly into worldbuilding, The Dream challenges conventional assumptions around scale, cost, and production time, proving that massive interactive environments no longer require large studios or months of modeling props.

The initial goal was deliberately ambitious: to build the alpha of Chapter One in time for submission to the Chroma Awards, where the project went on to win in its category.

HOW

The world was created entirely in Unreal Engine 5.5. All 3D assets were generated and modeled in Blender, then refined and exported into Unreal to build a cohesive open-world environment.

Sound design explored AI as a creative tool: the voiceover was AI-generated, and all environmental sounds — including the foundational musical elements present in the world — were generated with AI and further shaped and composed in Ableton.

The online version is streamed via Streampixel, with a Steam release scheduled for February. From the outset, the goal was to make the experience accessible to everyone — not only those with the latest graphics cards. While AI significantly reduced time spent on asset modeling, texture work and visual refinement were largely done by hand.

WHY

Many still perceive 3D AI as experimental, unreliable, or disconnected from real production workflows. As a result, adoption remains hesitant — not due to lack of interest, but due to the scarcity of real-world examples showing the quality, scalability, and creative potential AI workflows can deliver.

This project was made to close that gap. By presenting a real-time 3D world built mostly with AI-generated assets, The Dream turns promises into tangible proof. Rather than asking creators to imagine the benefits, it lets them step inside the result.

By reducing time spent on assets, the team was able to focus more deeply on storytelling, world logic, and meaningful narrative outcomes, while maintaining strong artistic control and visual cohesion across the experience.

An interactive online narrative experience

Playable on any computer with a decent internet connection

APPROACH

Early testing focused on defining the visual language and understanding the practical boundaries of the technology. The mesh-based asset approach enabled a fast, stable real-time experience, making performance a core design constraint from the outset.

A key focus was identifying a style where the technology could truly excel. This led to a tight feedback loop between AI generation, human curation, and creative direction — clarifying where current AI workflows provide the most value and where manual intervention remains essential.

Most of the world was modeled within the first week, allowing the majority of the remaining time to be dedicated to environmental storytelling, atmosphere, and detail. The final week was reserved almost entirely for testing, optimisation, and performance validation.

TECH

The world was built entirely in Unreal Engine 5.5. All 3D assets were generated using 404—GEN — a decentralized 3D generator running on Bittensor Subnet 17, accessed through its Blender plugin, then refined and exported into Unreal.

This streamlined production and enabled stable, consistent frame rates across the entire experience. It also lets players explore the latest graphical possibilities without needing access to the latest graphics cards.

Sound design also explored AI-native workflows. The voiceover was AI-generated, and all environmental sounds, including the foundational musical layers within the world, were generated with AI and further shaped and mixed in Ableton. The online version is powered by Streampixel, with a Steam release scheduled for February.

STORY

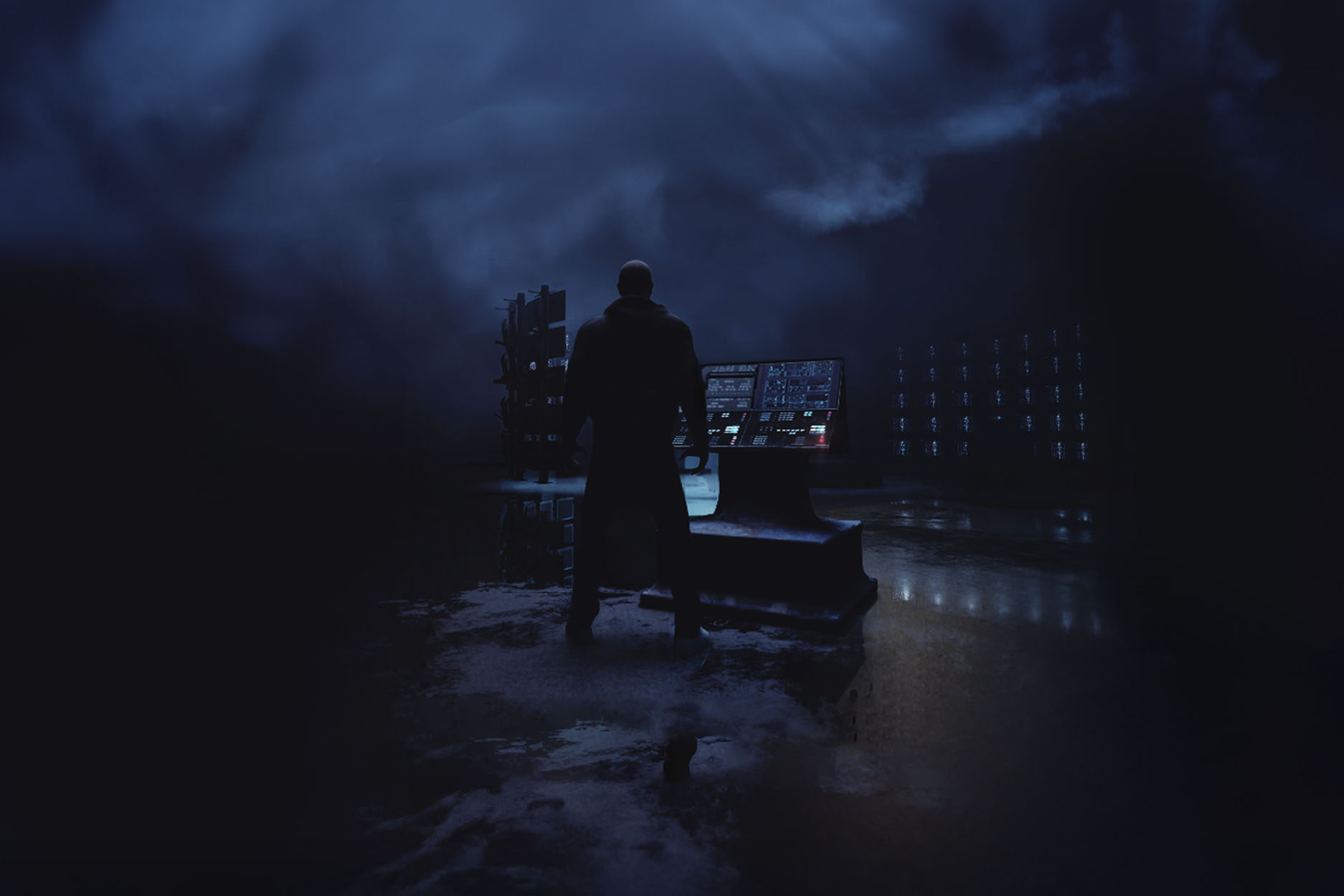

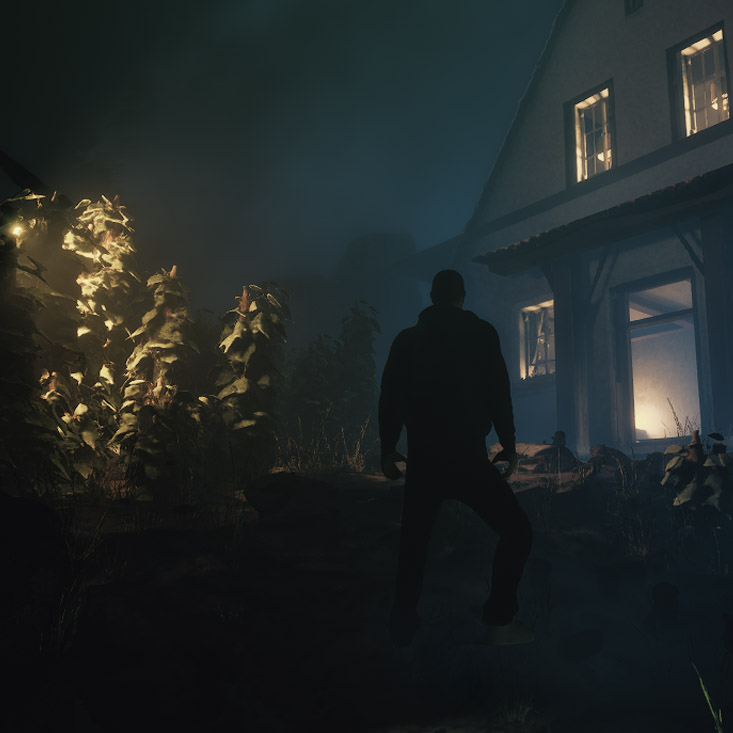

Set in a future metropolis suspended in mist, familiar architectural cues are subtly disrupted, reflecting a world shaped by dreamlike moments unfolding deep into the night. The player searches for Xavia, who disappears without explanation. This search pulls the player into a fragmented layer of the city, where distinctions between reality, simulation, and recollection begin to collapse.

Narrative progression is embedded in space, sound, and interaction. The world itself becomes the storyteller, expressing tension through glitches and environmental shifts rather than explicit exposition.

This chapter introduces the world and its narrative foundation; Chapter Two, releasing in February, expands the mechanics and pushes the experience deeper.

INDIVIDUALLY GENERATED ASSETS COMBINE INTO LARGER STRUCTURES THAT BECOME THE WORLD

WORKFLOW

By offloading large portions of asset generation, the production emphasis shifted away from repetitive modeling tasks toward composition, lighting, and narrative pacing. Instead of searching for ill-fitting ready-made or marketplace assets and adapting them to the project’s visual language, environments could be generated to fit the world from the outset. Manually modeling rocks adds little value to the final experience, yet repeating the same assets too often breaks immersion. Generative workflows made almost endless variations possible.

Time was spent deciding what should exist and why, rather than how long it would take to produce each element. This enabled denser scenes and a more intentional visual rhythm without increasing production overhead. AI was treated as a generative material, replacing ill-fitting ready-made and marketplace assets rather than acting as an automation shortcut.

stylistic consistency

Across both image and sound, the material was shaped and edited through traditional artistic tools, maintaining authorship, intention, and qualitative control while allowing the work to operate at a larger scale.

Earlier approaches, including available Gaussian splat models, proved conceptually promising but technically limiting, unable to perform in real time within current constraints. Recent iterations enabled stable, detailed mesh results aligned with the project’s visual ambitions.

Narratively, the work reflects on a widening divide between those with access to emerging technologies and those increasingly alienated from them. This tension shaped the environmental storytelling, which unfolds through stark contrasts: grimy, graffiti-filled post-industrial streets against late-19th-century houses rendered in chiaroscuro-style light.

RELEASES

The Dream is currently expanding into Chapter Two, with active gameplay development underway this spring. This next chapter deepens the world through more advanced mechanics and systemic interactions, including dynamic time-of-day changes, more direct and responsive interactions with NPCs, and a greater sense of agency in how the player affects the environment.

These additions are designed not only to broaden the playable space but also to deepen the character of the protagonist and the player’s emotional relationship to the world.

Due to the constraints of the original 30-day development timeline, not all aspects of the project’s full vision could be realized in the initial alpha. Ongoing development is focused on bridging this gap by expanding depth and adding further layers of environmental storytelling.

Small elements (e.g. stairs) were generated, then refined in Blender and brought into UE.

NO DOWNLOAD NEEDED,

STREAM NOW

SCREENSHOTS

CHROMA AWARD

WINNER

Interactive Narrative Category

DELIVERABLES:

ONLINE STREAMABLE ALPHA VERSION

IN 30 DAYS AND THE WEBSITE

CREATED OCT 2025

TEAM:

huo—WU

(ERNO FORSSTRÖM),

Ivan LEHUSHA

MADE WITH

404—GEN ASSETS

Generate your own