For January 2026, we welcome Omar Abou Mrad as our DSF member of the month! ⭐

Omar is a helper in the Django Discord server, he has helped and continuously help folks around the world in their Django journey! He is part of the Discord Staff Team. He has been a DSF member since June 2024.

You can learn more about Omar by visiting Omar's website and his GitHub Profile.

Let’s spend some time getting to know Omar better!

Hello! My name is Omar Abou Mrad, a 47-year-old husband to a beautiful wife and father of three teenage boys. I’m from Lebanon (Middle East), have a Computer Science background, and currently work as a Technical Lead on a day-to-day basis. I’m mostly high on life and quite enthusiastic about technology, sports, food, and much more!

I love learning new things and I love helping people. Most of my friends, acquaintances, and generally people online know me as Xterm.

xterm is simply the terminal emulator for the X Window System. I first encountered it back in the mid to late 90s when I started using Redhat 2.0 operating system. things weren’t easy to set up back then, and the terminal was where you spent most of your time.

Nevertheless, I had to wait months (or was it years?) on end for the nickname "Xterm" to expire on Freenode back in mid 2000s, before I snatched and registered it.

Alas, I did! Xterm, c'est moi! >:-]

We landed on Django (~1.1) fairly early at work, as we wanted to use Python with an ORM while building websites for different clients. The real challenge came when we took on a project responsible for managing operations, traceability, and reporting at a pipe-manufacturing company.

By that time, most of the team was already well-versed in Django (~1.6), and we went head-on into building one of the most complicated applications we had done to date, everything from the back office to operators’ devices connected to a Django-powered system.

Since then, most of our projects have been built with Django at the core.

We love Django.

I've used a multitude of frameworks professionally before Django, primarily in Java (EE, SeamFramework, ...) and .NET (ASP.NET, ASP.NET MVC) as well as sampling different frameworks for educational purposes.

I suppose if I could snap my fingers and get things to exist in django it wouldn't be something new as much as it is official support of:

But since we're finger-snapping things to existence, it would be awesome if every component of django (core, orm, templates, forms, "all") could be installed separately in such a way that you could cherry pick what you want to install, so we could dismiss those pesky (cough) arguments (cough) about Django being bulky.

I'm involved in numerous projects currently at work, most of which are based on Django, but the one I'm working right now consists of doing integrations and synchronizations with SAP HANA for different modules, in different applications.

It's quite the challenge, which makes it twice the fun.

I would like to mention that I'm extremely thankful for any and all core and 3rd Party libraries out there!

In no particular order:

First and foremost, I want to highlight what an excellent staff team we have on the Official Django Discord. While I don’t feel I hold a candle to what the rest of the team does daily, we complement each other very well.

To me, being a good helper means:

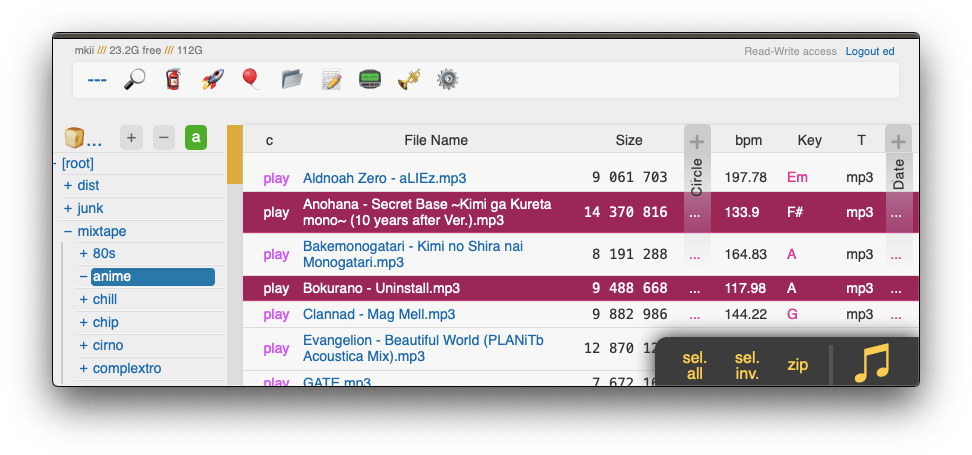

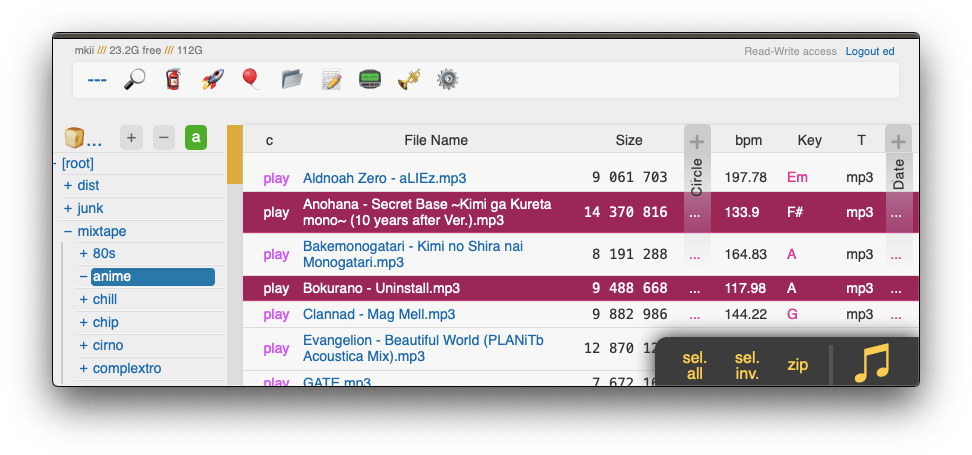

Imagine you're having a discussion with a djangonaut friend or colleague about some data modeling, or answering some question or concern they have, or reviewing some ORM code in a repository on github, or helping someone on IRC, Slack, Discord, the forums... or simply you want to do some quick ORM experiment but not disturb your current project.

The most common ways people deal with this, is by having a throw-away project that they add models to, generate migrations, open the shell, run the queries they want, reset the db if needed, copy the models and the shell code into some code sharing site, then send the link to the recipient. Not to mention needing to store the code they experiment with in either separate scripts or management commands so they can have them as references for later.

I loved what DDT gave me with the queries transparency, I loved experimenting in the shell with shell_plus --print-sql and I needed to share things online. All of this was cumbersome and that’s when DryORM came into existence, simplifying the entire process into a single code snippet.

The need grew massively when I became a helper on Official Django Discord and noticed we (Staff) could greatly benefit from having this tool not only to assist others, but share knowledge among ourselves. While I never truly wanted to go public with it, I was encouraged by my peers on Discord to share it and since then, they've been extremely supportive and assisted in its evolution.

The unexpected thing however, was for DryORM to be used in the official code tracker, or the forums, or even in Github PRs! Ever since, I've decided to put a lot of focus and effort on having features that can support the django contributors in their quest evolve Django.

So here's a shout-out to everyone that use DryORM!

Yes, I am and thank you! I think the application has reached a point where new feature releases will slow down, so it’s entering more of a maintenance phase now, which I can manage.

Hopefully soon we'll have the discord bot executing ORM snippet :-]

Oh wow, not working, what's that like! :-]

Early mornings are usually reserved for weight training.\ Followed by a long, full workday.\ Then escorting and watching the kids at practice.\ Evenings are spent with my wife.\ Late nights are either light gaming or some tech-related reading and prototyping.\

Weekends look very similar, just with many more kids sports matches!

I want to thank everyone who helped make Django what it is today.

If you’re reading this and aren’t yet part of the Discord community, I invite you to join us! You’ll find many like-minded people to discuss your interests with. Whether you’re there to help, get help, or just hang around, it’s a fun place to be.

Thank you for doing the interview, Omar!

Today we've issued the 5.2.10 and 6.0.1 bugfix releases.

The release packages and checksums are available from our downloads page, as well as from the Python Package Index.

The PGP key ID used for these releases is Jacob Walls: 131403F4D16D8DC7

For December 2025, we welcome Clifford Gama as our DSF member of the month! ⭐

Clifford contributed to Django core with more than 5 PRs merged in few months! He is part of the Triage and Review Team. He has been a DSF member since October 2024.

You can learn more about Clifford by visiting Clifford's website and his GitHub Profile.

Let’s spend some time getting to know Clifford better!

I'm Clifford. I hold a Bachelor's degree in Mechanical Engineering from the University of Zimbabwe.

During my first year in college, I was also exploring open online courses on EDx and I came across CS50's introduction to web development. After watching the introductory lecture -- which introduced me to git and GitHub -- I discovered Django's excellent documentation and got started on the polls tutorial. The docs were so comprehensive and helpful I never felt the need to return to CS50. (I generally prefer comprehensive first-hand, written learning material over summaries and videos.)

At the time, I had already experimented with flask, but I guess mainly because I didn't know SQL and because flask didn't have an ORM, I never quite picked it up. With Django I felt like I was taking a learning fast-track where I'd learn everything I needed in one go!

And that's how I started using Django.

At the moment, I’ve been focusing on improving my core skills in preparation for remote work, so I haven’t been starting new projects because of that.

That said, I’ve been working on a client project involving generating large, image-heavy PDFs with WeasyPrint, where I’ve been investigating performance bottlenecks and ways to speed up generation time, which was previously around 30 minutes 😱.

I’ve been reading Boost Your Git DX by Adam Johnson and learning how to boost my Git and shell developer experience, which has been a great read. Aside from that, inspired by some blogs and talks by Haki Benita, I am also learning about software design and performance. Additionally, I am working on improving my general fluency in Python.

I am not familiar with any other frameworks, but if I had magic powers I'd add production-grade static-file serving in Django.

The ORM, Wagtail and Django's admin.

I started contributing to Django in August last year, which is when I discovered the community, which was a real game changer for me. Python was my first course at university, and I loved it because it was creative and there was no limit to what I could build with it.

Whenever I saw a problem in another course that could be solved programmatically, I jumped at it. My proudest project from that time was building an NxN matrix determinant calculator after learning about recursion and spotting the opportunity in an algebra class.

After COVID lockdown, I gave programming up for a while. With more time on my hands, I found myself prioritizing programming over core courses, so I took a break. Last year, I returned to it when I faced a problem that I could only solve with Django. My goal was simply to build an app quickly and go back to being a non-programmer, but along the way I thought I found a bug in Django, filed a ticket, and ended up writing a documentation PR. That’s when I really discovered the Django community.

What attracted me most was that contributions are held to high standards, but experienced developers are always ready to help you reach them. Contributing was collaborative, pushing everyone to do their best. It was a learning opportunity too good to pass up.

About the time after I contributed my first PR, I started looking at open tickets to find more to work on, and keep on learning.

Sometimes a ticket was awaiting triage, in which case the first step was to triage it before assigning it to working on it, and sometimes the ticket I wanted was already taken, in which case I'd look at the PR if available. Reviewing a PR can be a faster way to learn about a particular part of the codebase, because someone has already done most of the investigative part of work, so I reviewed PRs as well.

After a while I got an invitation from Sarah Boyce, one of the fellows, to join the team. I didn't even know that I could join before I got the invitation, so I was thrilled!

It’s been rewarding. I’ve gained familiarity with the Django codebase and real experience collaborating with others, which already exceeds what I expected when I started contributing.

One unexpected highlight was forming a friendship through one of the first PRs I reviewed.

SiHyun Lee and I are now both part of the triage and review team, and I’m grateful for that connection.

My main hobby is storytelling in a broad sense. In fact, it was a key reason I returned to programming after a long break. I enjoy discovering enduring stories from different cultures, times, and media—ranging from the deeply personal and literary to the distant and philosophical. I recently watched two Japanese classics and found I quite love them. I wrote about one of the films on my blog, and I also get to practice my Japanese, which I’ve been learning on Duolingo for about two years. I also enjoy playing speed chess.

If there’s an issue you care about, or one that touches a part of the codebase you’re familiar with or curious about, jump in. Tickets aren’t always available to work on, but reviews always are, and they’re open to everyone. Reviewing helps PRs move faster, including your own if you have any open, sharpens your understanding of a component, and often clarifies the problem itself.

As Simon Charette puts it:

“Triaging issues and spending time understanding them is often more valuable than landing code itself as it strengthen our common understanding of the problem and allow us to build a consistent experience accross the diverse interfaces Django provides.”

And you can put it on your CV!

I’m grateful to everyone who contributes to making every part of Django what it is. I’m particularly thankful to whoever nominated me to be the DSF Member of the month.

I am optimistic about the future of Django. Django 6.1 is already shaping up with new features, and there are new projects like Django Bolt coming up.

Happy new year 🎊!

Thank you for doing the interview, Clifford and happy new year to the Django community 💚!

As we wrap up another strong year for the Django community, we wanted to share an update and a thank you. This year, we raised our fundraising goal from $200,000 to $300,000, and we are excited to say we are now over 88% of the way there. That puts us firmly in the home stretch, and a little more support will help us close the gap and reach 100%.

So why the higher goal this year? We expanded the Django Fellows program to include a third Fellow. In August, we welcomed Jacob Tyler Walls as our newest Django Fellow. That extra capacity gives the team more flexibility and resilience, whether someone is taking parental leave, time off around holidays, or stepping away briefly for other reasons. It also makes it easier for Fellows to attend more Django events and stay connected with the community, all while keeping the project running smoothly without putting too much pressure on any one person.

We are also preparing to raise funds for an executive director role early next year. That work is coming soon, but right now, the priority is finishing this year strong.

We want to say a sincere thank you to our existing sponsors and to everyone who has donated so far. Your support directly funds stable Django releases, security work, community programs, and the long-term health of the framework. If you or your organization have end-of-year matching funds or a giving program, this is a great moment to put them to use and help push us past the finish line.

If you would like to help us reach that final stretch, you can find all the details on our fundraising page

Other ways to support Django:

Thank you for helping support Django and the people who make it possible. We are incredibly grateful for this community and everything you do to keep Django strong.

We extend our gratitude to Thibaud Colas and Sarah Abderemane, who are completing their terms on the board. Their contributions shaped the foundation in meaningful ways, and the following highlights only scratch the surface of their work.

Thibaud served as President in 2025 and Secretary in 2024. He was instrumental in governance improvements, the Django CNA initiative, election administration, and creating our first annual report. He also led our birthday campaign and helped with the creation of several new working groups this year. His thoughtful leadership helped the board navigate complex decisions.

Sarah served as Vice President in 2025 and contributed significantly to our outreach efforts, working group coordination, and membership management. She also served as a point of contact for the Django CNA initiative alongside Thibaud.

Both Thibaud and Sarah did too many things to list here. They were amazing ambassadors for the DSF, representing the board at many conferences and events. They will be deeply missed, and we are happy to have their continued membership and guidance in our many working groups.

On behalf of the board, thank you both for your commitment to Django and the DSF. The community is better for your service.

Thank you to Tom Carrick and Jacob Kaplan-Moss for their service as officers in 2025.

Tom served as Secretary, keeping our meetings organized and our records in order. Jacob served as Treasurer, providing careful stewardship of the foundation's finances. Their dedication helped guide the DSF through another successful year.

We welcome Priya Pahwa and Ryan Cheley to the board, and congratulate Jacob Kaplan-Moss on his re-election.

The board unanimously elected our officers for 2026:

I'm honored to serve as President for 2026. The DSF has important work ahead, and I'm looking forward to building on the foundation that previous boards have established.

Our monthly board meeting minutes may be found at dsf-minutes, and December's minutes are available.

If you have a great idea for the upcoming year or feel something needs our attention, please reach out to us via our Contact the DSF page. We're always open to hearing from you.

The Code of Conduct working group received 4 reports and met 12 times in 2025. This transparency report is a brief account of how those reports were handled. This year’s number is lower than previous years in part because of the formation of the Online Community Working Group which handles moderation on our official spaces and has been able to act directly on smaller scale infractions. In some cases we received additional reporting while investigating initial reports, but have not counted those as separate instances.

This working group conducts business in several ways. It has online meetings, typically once per month. It also discusses issues in a Slack channel, but most cases are handled in the meetings. The group welcomed three new members this year: Ariane Djeupang, Natalia Bidart, and Priya Pahwa. Natalia was selected by the new Online Communities Working Group as their liaison to the Code of Conduct Working group; Ariane and Priya were elected by the working group. The group also saw Jay Miller step down this year. We all want to thank Jay for his continued role in our community and for all the work he did with the Code of Conduct group.

It was the group’s intention to work with a consultant to update our Code of Conduct and processes. We reached out to two consultants to help with that work, but unfortunately we weren’t able to engage either to get that work completed. We hope to progress with that in 2026. In the meantime, we made a few internal process tweaks - creating up a new “ask CoC” channel with key stakeholders to discuss moderation and CoC enforcement, and having our team set up as moderators in GitHub until we find a better model.

Two reports from late 2024 carried into this year. Two reports resulted in suspensions from the relevant platforms. Another was shared with local event organizers.

Finally, this section provides a brief summary of the kinds of cases that were handled:

The Online Community Working Group has introduced a new GitHub repository designed to manage and track ideas, suggestions, and improvements across Django's various online community platforms.

Primarily inspired by the rollout of the New Features repository, the Online Community Working Group has launched their own version that works in conjunction with the Online Community Working Group Ideas GitHub project to provide a mechanism to gather feedback, suggestions, and ideas from across the online community and track their progression.

The primary aim is to help better align Django's presence across multiple online platforms by providing:

If you have an idea or a suggestion for any of Django's online community platforms (such as the Forum, Discord, or elsewhere), the process starts by creating an issue in the new repository.

You'll be asked to summarise the idea, and answer a couple of short questions regarding which platform it applies to and the rationale behind your idea.

The suggestion will be visible on the public board, and people will be able to react to the idea with emoji responses as a quick measure of support, or provide longer-form answers as comments on the issue.

The Online Community Working Group will review, triage, and respond to all suggestions, before deciding whether or how they can be implemented across the community.

Note that we're not asking that you stop using any mechanisms in place within the particular community you're a part of currently—the Discord #suggestions channel is not going away, for example. However, we may ask that a suggestion or idea flagged within a particular platform be raised via this new GitHub repo instead, in order increase its visibility, apply it to multiple communities, or simply better track its resolution.

The Online Community Working Group was relatively recently set up, with the aim of improving the experience for members of all Django's communities online. This new repository takes a first step in that direction. Check out the repository at django/online-community-working-group on GitHub to learn more and start helping shape Django's truly excellent community presence online.

In accordance with our security release policy, the Django team is issuing releases for Django 5.2.9, Django 5.1.15, and Django 4.2.27. These releases address the security issues detailed below. We encourage all users of Django to upgrade as soon as possible.

FilteredRelation was subject to SQL injection in column aliases, using a suitably crafted dictionary, with dictionary expansion, as the **kwargs passed to QuerySet.annotate() or QuerySet.alias() on PostgreSQL.

Thanks to Stackered for the report.

This issue has severity "high" according to the Django security policy.

Algorithmic complexity in django.core.serializers.xml_serializer.getInnerText() allowed a remote attacker to cause a potential denial-of-service triggering CPU and memory exhaustion via specially crafted XML input submitted to a service that invokes XML Deserializer. The vulnerability resulted from repeated string concatenation while recursively collecting text nodes, which produced superlinear computation resulting in service degradation or outage.

Thanks to Seokchan Yoon (https://ch4n3.kr/) for the report.

This issue has severity "moderate" according to the Django security policy.

Patches to resolve the issue have been applied to Django's main, 6.0 (currently at release candidate status), 5.2, 5.1, and 4.2 branches. The patches may be obtained from the following changesets.

The PGP key ID used for this release is Natalia Bidart: 2EE82A8D9470983E

As always, we ask that potential security issues be reported via private email to security@djangoproject.com, and not via Django's Trac instance, nor via the Django Forum. Please see our security policies for further information.

The 2026 DSF Board Election has closed, and the following candidates have been elected:

They will all serve two years for their term.

Directors elected for the 2025 DSF Board - Abigail Gbadago, Jeff Triplett, Paolo Melchiorre, Tom Carrick - are continuing with one year left to serve on the board.

Therefore, the combined 2026 DSF Board of Directors are:

* Elected to a two year term

Congratulations to our winners, and a huge thank you to our departing board members Sarah Abderemane and Thibaud Colas.

Thank you again to everyone who nominated themselves. Even if you were not successful, you gave our community the chance to make their voices heard in who they wanted to represent them.

an exposition on the great utility of using the parent-index method of representing trees in J.

This is similar, but with K and less comprehensive: https://github.com/JohnEarnest/ok/blob/gh-pages/docs/Trees.md

Since the invention of AVL trees in 1962, many kinds of binary search trees have been proposed. Notable are red-black trees, in which bottom-up rebalancing after an insertion or deletion takes O(1) amortized time and O(1) rotations worst-case. But the design space of balanced trees has not been fully explored. We continue the exploration. Our contributions are three. We systematically study the use of ranks and rank differences to define height-based balance in binary trees. Different invariants on rank differences yield AVL trees, red-black trees, and other kinds of balanced trees. By relaxing AVL trees, we obtain a new kind of balanced binary tree, the weak AVL tree, abbreviated wavl tree, whose properties we develop. Bottom-up rebalancing after an insertion or deletion takes O(1) amortized time and at most two rotations, improving the three or more rotations per deletion needed in all other kinds of balanced trees of which we are aware. The height bound of a wavl tree degrades gracefully from that of an AVL tree as the number of deletions increases, and is never worse than that of a red-black tree. Wavl trees also support top-down, fixed look-ahead rebalancing in O(1) amortized time. Finally, we use exponential potential functions to prove that in wavl trees rebalancing steps occur exponentially infrequently in rank. Thus most of the rebalancing is at the bottom of the tree, which is crucial in concurrent applications and in those in which rotations take time that depends on the subtree size.

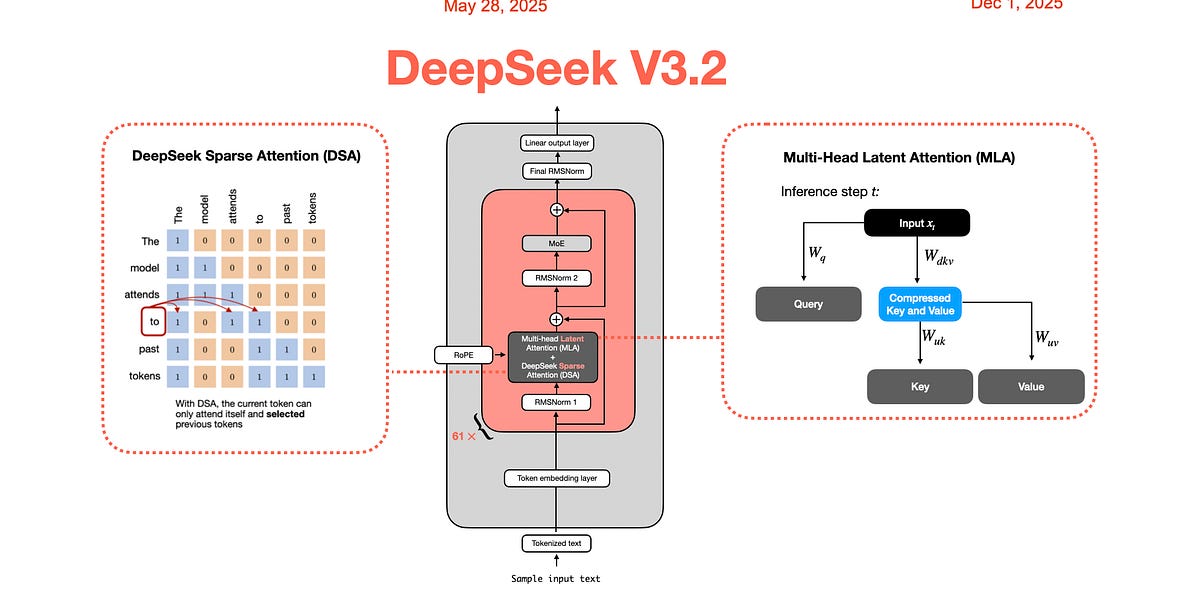

Note that this does assume some prior transformer architecture knowledge, but if you know how attention works then you should at least be able to get the overall idea.

I did an experiment to train Qwen2.5-Coder-7B on a niche diagraming language, and the model was able to generate syntactically correct code 86% of the time.

This post outlined the process and decisions I took during the training process. I think it might be helpful to share it here to get feedback, since I know there is a lot I did wrong along the road and could learn from the feedback of many people here.

Paper Abstract: Large language models (LLMs) are increasingly used as evaluators in lieu of humans. While scalable, their judgments are noisy due to imperfect specificity and sensitivity of LLMs, leading to biased accuracy estimates. Although bias-correction methods exist, they are underutilized in LLM research and typically assume exact knowledge of the model's specificity and sensitivity. Furthermore, in general we only have estimates of these values and it is not well known how to properly construct confidence intervals using only estimates. This work presents a simple plug-in framework that corrects such bias and constructs confidence intervals reflecting uncertainty from both test and calibration dataset, enabling practical and statistically sound LLM-based evaluation. Additionally, to reduce uncertainty in the accuracy estimate, we introduce an adaptive algorithm that efficiently allocates calibration sample sizes.

I built a small Python CLI and library called “elf” based on the workflow I have used for Advent of Code over the past few years. It automates common AoC tasks and provides a typed API for scripting.

Features:

• Cached puzzle input fetching

• Safe answer submission with guardrails and guess history

• Private leaderboard retrieval (table, JSON, or typed models)

• Cross-platform support (macOS, Linux, Windows)

• Built with Typer, Rich, httpx, and Pydantic

PyPI: https://pypi.org/project/elf

Feedback is welcome.

Hi,

I have done some looking around on the Django documentation regarding backporting and it seems like new features are not typically backported. In my particular case, I was looking to backport the recent addition of Haitian Creole to version 5.2, as we are using the Arches (GH archesproject/arches) platform to catalog elements of Haitian cultural heritage. I have created a fork of 5.2 which patches in the linked fix and am using that in our implementation. Of course, it would be ideal to upstream the language support if possible, especially since Django 6.0 was only just released (congrats!).

All this to say, I wanted to confirm that I was reading the policy correctly, and that I should not create a ticket and PR to backport the addition of Haitian Creole to 5.2.

Thanks for your time!

3 posts - 2 participants

Hey Team at Django,

Here I am seeking your support for contribution. How should I start.

Thanks,

Ashutosh

2 posts - 2 participants

Not sure if correct place to post, and this will be an incredible dumb question, but.. how do I know if my async ORM funcs are actually running in async?

Do I need a specific dB backend engine?

Can I log a specific property of the db.connection ?

I ask because it appears as though my ORM funcs like aget() are being called as just get().

Im using a profiler to help debug some performance issues and noticed I never see a Django function call of aget I only ever seen get().

Perhaps this is expected behaviour, but hence I was curious if I could know for sure they’re running in async.

My setup is uvicorn with Django ninja API, and a view marked async with aget() call inside.

2 posts - 2 participants

Hi Django translation team,

I’m Ehsan, and I’d love to help translate Django documentation into Persian (fa). I saw that the Persian translation is currently at 0%, and I’m eager to start contributing.

I’ve created an account on Transifex and requested to join the Persian (fa) translation team.

Could you please add me to the Persian translation team so I can start helping?

Thanks a lot!

Ehsan

1 post - 1 participant

I have this idea for a change to the CSRF protection, but looking for someone to point out the security hole(s) in it I’m failing to find myself:

The tl;dr is that I think requests that don’t send cookies should be automatically exempted from CSRF protection.

In its current incarnation, CsrfMiddleware protects all requests if they’re using one of the unsafe (RFC 9110) methods. This creates some friction when you have a Django project that uses both “browser” type views (cookie-based authentication) and “API” type views (Authorization header based authentication, typically using the Bearer authentication scheme); The API type views have to be explicitly exempted from protection, and it’s impossible for the same view to support both authentication schemes.

But seeing as the absence of cookies would mean there’s nothing to really ‘forge’, couldn’t it make sense to simply accept the request if no cookies are present? That should make supporting things like Bearer tokens for views work out of the box. Is there a security problem to this I’m missing?

I am aware of Implement Modern CSRF Protection · Issue #98 · django/new-features · GitHub, which is a radically different approach; if that ends up being implemented, my suggestion here isn’t relevant, although mine should maintain exactly the same browser support as the current does.

2 posts - 2 participants

Hi everyone, I’m Sneha from India. I’m learning Django as part of my journey to become a Python-based full-stack developer, and I want to start contributing to Django step by step.

I’ve been reading the documentation and exploring the codebase, but I’m not sure which beginner-friendly areas or tickets are good starting points. Any guidance or suggestions would be really helpful. Thank you!

2 posts - 2 participants

Over on the discord server we have had more than a few people excited and then confused about the new tasks framework coming in Django 6.0. The confusion mainly comes from mismatched expectations from those that have not been following the development of the feature and what precisely is available in 6.0. This has resulted in myself and others explaining that the main point of what is being introduced in 6.0 is for third-party packages to adopt the API and any normal devs would need a third-party package (eg django-tasks) to actually have a background task.

My suggestion for the 6.X release cycle (at least) would be to call out the need for a third-party package at the top of the docs page on tasks if a developer wants to have actual background tasks operated by a worker. This is likely a callout to django-tasks, but other packages could then be highlighted as they become available (I’m aware chancy has this as an unreleased feature)

3 posts - 3 participants

Hi everyone, I’m Krishna from India.

I want to start contributing to Django and prepare for GSoC 2026.

Can anyone guide me towards beginner-friendly tickets?

2 posts - 2 participants

I would like to reopen ticket 30515, but according to this. I shouldn’t.

However, I would like to reopen the discussion, to perhaps redecide on closing the ticket as wontfix.

There seems to be some indications that there are use cases where django.shortcuts.redirect is impractical and django.shortcuts.resolve_url is the right tool to use.

For my own part, I am implementing SSO login on multiple products where I work, and for this resolve_url has proven the right tool. That is after I discovered it, after I had already implemented something similar myself.

Using resolve_url has made my code simpler, by making it much easier to get the actual URL behind settings like e.g. LOGIN_REDIRECT_URL, and LOGIN_URL, that may be a path or a name, depending on how the project is setup. In some of my code I have to process resolved URLs, and may not even redirect to it. Also in testing, resolve_url has proven useful to e.g. verify that redirection headers are set to the correct value.

Now review is dragging along long enough that I can write this post, and all because resolve_url is not documented along with the other shortcuts.

1 post - 1 participant

The topic page for Models has a paragraph which says:

Once you have defined your models, you need to tell Django you’re going to use those models. Do this by editing your settings file and changing the

INSTALLED_APPSsetting to add the name of the module that contains yourmodels.py.

While it is technically correct that a package is a special type of module, might it be more helpful to say “… name of the package that contains …”?

If folks agree, I’d be happy to create a ticket and a PR.

1 post - 1 participant

Fine-tuning & Reinforcement Learning for LLMs. 🦥 Train OpenAI gpt-oss, Qwen3, Llama 4, DeepSeek-R1, Gemma 3, TTS 2x faster with 70% less VRAM.

Notebooks are beginner friendly. Read our guide. Add your dataset, click "Run All", and export your finetuned model to GGUF, Ollama, vLLM or Hugging Face.

Unsloth supports Free Notebooks Performance Memory use| gpt-oss (20B) | ▶️ Start for free | 1.5x faster | 70% less |

| Gemma 3n (4B) | ▶️ Start for free | 1.5x faster | 50% less |

| Qwen3 (14B) | ▶️ Start for free | 2x faster | 70% less |

| Qwen3 (4B): GRPO | ▶️ Start for free | 2x faster | 80% less |

| Gemma 3 (4B) | ▶️ Start for free | 1.6x faster | 60% less |

| Phi-4 (14B) | ▶️ Start for free | 2x faster | 70% less |

| Llama 3.2 Vision (11B) | ▶️ Start for free | 2x faster | 50% less |

| Llama 3.1 (8B) | ▶️ Start for free | 2x faster | 70% less |

| Mistral v0.3 (7B) | ▶️ Start for free | 2.2x faster | 75% less |

| Orpheus-TTS (3B) | ▶️ Start for free | 1.5x faster | 50% less |

pip install unsloth

For Windows install instructions, see here.

sesame/csm-1b and STT openai/whisper-large-v3.full_finetuning = True, 8-bit with load_in_8bit = True.📣 Introducing Unsloth Dynamic 4-bit Quantization! We dynamically opt not to quantize certain parameters and this greatly increases accuracy while only using <10% more VRAM than BnB 4-bit. See our collection on Hugging Face here.

📣 Llama 4 by Meta, including Scout & Maverick are now supported.

📣 Phi-4 by Microsoft: We also fixed bugs in Phi-4 and uploaded GGUFs, 4-bit.

📣 Vision models now supported! Llama 3.2 Vision (11B), Qwen 2.5 VL (7B) and Pixtral (12B) 2409

📣 Llama 3.3 (70B), Meta's latest model is supported.

📣 We worked with Apple to add Cut Cross Entropy. Unsloth now supports 89K context for Meta's Llama 3.3 (70B) on a 80GB GPU - 13x longer than HF+FA2. For Llama 3.1 (8B), Unsloth enables 342K context, surpassing its native 128K support.

📣 We found and helped fix a gradient accumulation bug! Please update Unsloth and transformers.

📣 We cut memory usage by a further 30% and now support 4x longer context windows!

| 📚 Documentation & Wiki | Read Our Docs |

| Follow us on X | |

| 💾 Installation | Pip install |

| 🔮 Our Models | Unsloth Releases |

| ✍️ Blog | Read our Blogs |

Reddit Reddit |

Join our Reddit |

You can also see our documentation for more detailed installation and updating instructions here.

Install with pip (recommended) for Linux devices:

pip install unsloth

To update Unsloth:

pip install --upgrade --force-reinstall --no-cache-dir unsloth unsloth_zoo

See here for advanced pip install instructions.

[!warning] Python 3.13 does not support Unsloth. Use 3.12, 3.11 or 3.10

Install NVIDIA Video Driver: You should install the latest version of your GPUs driver. Download drivers here: NVIDIA GPU Drive.

Install Visual Studio C++: You will need Visual Studio, with C++ installed. By default, C++ is not installed with Visual Studio, so make sure you select all of the C++ options. Also select options for Windows 10/11 SDK. For detailed instructions with options, see here.

Install CUDA Toolkit: Follow the instructions to install CUDA Toolkit.

Install PyTorch: You will need the correct version of PyTorch that is compatible with your CUDA drivers, so make sure to select them carefully. Install PyTorch.

Install Unsloth:

pip install unsloth

To run Unsloth directly on Windows:

SFTConfig, set dataset_num_proc=1 to avoid a crashing issue:SFTConfig(

dataset_num_proc=1,

...

)

For advanced installation instructions or if you see weird errors during installations:

torch and triton. Go to https://pytorch.org to install it. For example pip install torch torchvision torchaudio tritonnvcc. If that fails, you need to install cudatoolkit or CUDA drivers.xformers manually. You can try installing vllm and seeing if vllm succeeds. Check if xformers succeeded with python -m xformers.info Go to https://github.com/facebookresearch/xformers. Another option is to install flash-attn for Ampere GPUs.torch, triton, and xformers are compatible with one another. The PyTorch Compatibility Matrix may be useful.bitsandbytes and check it with python -m bitsandbytes⚠️Only use Conda if you have it. If not, use Pip. Select either pytorch-cuda=11.8,12.1 for CUDA 11.8 or CUDA 12.1. We support python=3.10,3.11,3.12.

conda create --name unsloth_env \

python=3.11 \

pytorch-cuda=12.1 \

pytorch cudatoolkit xformers -c pytorch -c nvidia -c xformers \

-y

conda activate unsloth_env

pip install unsloth

mkdir -p ~/miniconda3

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh

bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

rm -rf ~/miniconda3/miniconda.sh

~/miniconda3/bin/conda init bash

~/miniconda3/bin/conda init zsh

⚠️Do **NOT** use this if you have Conda. Pip is a bit more complex since there are dependency issues. The pip command is different for torch 2.2,2.3,2.4,2.5 and CUDA versions.

For other torch versions, we support torch211, torch212, torch220, torch230, torch240 and for CUDA versions, we support cu118 and cu121 and cu124. For Ampere devices (A100, H100, RTX3090) and above, use cu118-ampere or cu121-ampere or cu124-ampere.

For example, if you have torch 2.4 and CUDA 12.1, use:

pip install --upgrade pip

pip install "unsloth[cu121-torch240] @ git+https://github.com/unslothai/unsloth.git"

Another example, if you have torch 2.5 and CUDA 12.4, use:

pip install --upgrade pip

pip install "unsloth[cu124-torch250] @ git+https://github.com/unslothai/unsloth.git"

And other examples:

pip install "unsloth[cu121-ampere-torch240] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu118-ampere-torch240] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu121-torch240] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu118-torch240] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu121-torch230] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu121-ampere-torch230] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu121-torch250] @ git+https://github.com/unslothai/unsloth.git"

pip install "unsloth[cu124-ampere-torch250] @ git+https://github.com/unslothai/unsloth.git"

Or, run the below in a terminal to get the optimal pip installation command:

wget -qO- https://raw.githubusercontent.com/unslothai/unsloth/main/unsloth/_auto_install.py | python -

Or, run the below manually in a Python REPL:

try: import torch

except: raise ImportError('Install torch via `pip install torch`')

from packaging.version import Version as V

v = V(torch.__version__)

cuda = str(torch.version.cuda)

is_ampere = torch.cuda.get_device_capability()[0] >= 8

if cuda != "12.1" and cuda != "11.8" and cuda != "12.4": raise RuntimeError(f"CUDA = {cuda} not supported!")

if v <= V('2.1.0'): raise RuntimeError(f"Torch = {v} too old!")

elif v <= V('2.1.1'): x = 'cu{}{}-torch211'

elif v <= V('2.1.2'): x = 'cu{}{}-torch212'

elif v < V('2.3.0'): x = 'cu{}{}-torch220'

elif v < V('2.4.0'): x = 'cu{}{}-torch230'

elif v < V('2.5.0'): x = 'cu{}{}-torch240'

elif v < V('2.6.0'): x = 'cu{}{}-torch250'

else: raise RuntimeError(f"Torch = {v} too new!")

x = x.format(cuda.replace(".", ""), "-ampere" if is_ampere else "")

print(f'pip install --upgrade pip && pip install "unsloth[{x}] @ git+https://github.com/unslothai/unsloth.git"')

UNSLOTH_USE_MODELSCOPE=1, and install the modelscope library by: pip install modelscope -U.unsloth_cli.py also supports

UNSLOTH_USE_MODELSCOPE=1to download models and datasets. please remember to use the model and dataset id in the ModelScope community.

from unsloth import FastLanguageModel, FastModel

import torch

from trl import SFTTrainer, SFTConfig

from datasets import load_dataset

max_seq_length = 2048 # Supports RoPE Scaling internally, so choose any!

# Get LAION dataset

url = "https://huggingface.co/datasets/laion/OIG/resolve/main/unified_chip2.jsonl"

dataset = load_dataset("json", data_files = {"train" : url}, split = "train")

# 4bit pre quantized models we support for 4x faster downloading + no OOMs.

fourbit_models = [

"unsloth/Meta-Llama-3.1-8B-bnb-4bit", # Llama-3.1 2x faster

"unsloth/Meta-Llama-3.1-8B-Instruct-bnb-4bit",

"unsloth/Meta-Llama-3.1-70B-bnb-4bit",

"unsloth/Meta-Llama-3.1-405B-bnb-4bit", # 4bit for 405b!

"unsloth/Mistral-Small-Instruct-2409", # Mistral 22b 2x faster!

"unsloth/mistral-7b-instruct-v0.3-bnb-4bit",

"unsloth/Phi-3.5-mini-instruct", # Phi-3.5 2x faster!

"unsloth/Phi-3-medium-4k-instruct",

"unsloth/gemma-2-9b-bnb-4bit",

"unsloth/gemma-2-27b-bnb-4bit", # Gemma 2x faster!

"unsloth/Llama-3.2-1B-bnb-4bit", # NEW! Llama 3.2 models

"unsloth/Llama-3.2-1B-Instruct-bnb-4bit",

"unsloth/Llama-3.2-3B-bnb-4bit",

"unsloth/Llama-3.2-3B-Instruct-bnb-4bit",

"unsloth/Llama-3.3-70B-Instruct-bnb-4bit" # NEW! Llama 3.3 70B!

] # More models at https://huggingface.co/unsloth

model, tokenizer = FastModel.from_pretrained(

model_name = "unsloth/gemma-3-4B-it",

max_seq_length = 2048, # Choose any for long context!

load_in_4bit = True, # 4 bit quantization to reduce memory

load_in_8bit = False, # [NEW!] A bit more accurate, uses 2x memory

full_finetuning = False, # [NEW!] We have full finetuning now!

# token = "hf_...", # use one if using gated models

)

# Do model patching and add fast LoRA weights

model = FastLanguageModel.get_peft_model(

model,

r = 16,

target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",

"gate_proj", "up_proj", "down_proj",],

lora_alpha = 16,

lora_dropout = 0, # Supports any, but = 0 is optimized

bias = "none", # Supports any, but = "none" is optimized

# [NEW] "unsloth" uses 30% less VRAM, fits 2x larger batch sizes!

use_gradient_checkpointing = "unsloth", # True or "unsloth" for very long context

random_state = 3407,

max_seq_length = max_seq_length,

use_rslora = False, # We support rank stabilized LoRA

loftq_config = None, # And LoftQ

)

trainer = SFTTrainer(

model = model,

train_dataset = dataset,

tokenizer = tokenizer,

args = SFTConfig(

max_seq_length = max_seq_length,

per_device_train_batch_size = 2,

gradient_accumulation_steps = 4,

warmup_steps = 10,

max_steps = 60,

logging_steps = 1,

output_dir = "outputs",

optim = "adamw_8bit",

seed = 3407,

),

)

trainer.train()

# Go to https://github.com/unslothai/unsloth/wiki for advanced tips like

# (1) Saving to GGUF / merging to 16bit for vLLM

# (2) Continued training from a saved LoRA adapter

# (3) Adding an evaluation loop / OOMs

# (4) Customized chat templates

RL including DPO, GRPO, PPO, Reward Modelling, Online DPO all work with Unsloth. We're in 🤗Hugging Face's official docs! We're on the GRPO docs and the DPO docs! List of RL notebooks:

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0" # Optional set GPU device ID

from unsloth import FastLanguageModel

import torch

from trl import DPOTrainer, DPOConfig

max_seq_length = 2048

model, tokenizer = FastLanguageModel.from_pretrained(

model_name = "unsloth/zephyr-sft-bnb-4bit",

max_seq_length = max_seq_length,

load_in_4bit = True,

)

# Do model patching and add fast LoRA weights

model = FastLanguageModel.get_peft_model(

model,

r = 64,

target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",

"gate_proj", "up_proj", "down_proj",],

lora_alpha = 64,

lora_dropout = 0, # Supports any, but = 0 is optimized

bias = "none", # Supports any, but = "none" is optimized

# [NEW] "unsloth" uses 30% less VRAM, fits 2x larger batch sizes!

use_gradient_checkpointing = "unsloth", # True or "unsloth" for very long context

random_state = 3407,

max_seq_length = max_seq_length,

)

dpo_trainer = DPOTrainer(

model = model,

ref_model = None,

train_dataset = YOUR_DATASET_HERE,

# eval_dataset = YOUR_DATASET_HERE,

tokenizer = tokenizer,

args = DPOConfig(

per_device_train_batch_size = 4,

gradient_accumulation_steps = 8,

warmup_ratio = 0.1,

num_train_epochs = 3,

logging_steps = 1,

optim = "adamw_8bit",

seed = 42,

output_dir = "outputs",

max_length = 1024,

max_prompt_length = 512,

beta = 0.1,

),

)

dpo_trainer.train()

We tested using the Alpaca Dataset, a batch size of 2, gradient accumulation steps of 4, rank = 32, and applied QLoRA on all linear layers (q, k, v, o, gate, up, down):

Model VRAM 🦥 Unsloth speed 🦥 VRAM reduction 🦥 Longer context 😊 Hugging Face + FA2| Llama 3.3 (70B) | 80GB | 2x | >75% | 13x longer | 1x |

| Llama 3.1 (8B) | 80GB | 2x | >70% | 12x longer | 1x |

We tested Llama 3.1 (8B) Instruct and did 4bit QLoRA on all linear layers (Q, K, V, O, gate, up and down) with rank = 32 with a batch size of 1. We padded all sequences to a certain maximum sequence length to mimic long context finetuning workloads.

GPU VRAM 🦥Unsloth context length Hugging Face + FA2| 8 GB | 2,972 | OOM |

| 12 GB | 21,848 | 932 |

| 16 GB | 40,724 | 2,551 |

| 24 GB | 78,475 | 5,789 |

| 40 GB | 153,977 | 12,264 |

| 48 GB | 191,728 | 15,502 |

| 80 GB | 342,733 | 28,454 |

We tested Llama 3.3 (70B) Instruct on a 80GB A100 and did 4bit QLoRA on all linear layers (Q, K, V, O, gate, up and down) with rank = 32 with a batch size of 1. We padded all sequences to a certain maximum sequence length to mimic long context finetuning workloads.

GPU VRAM 🦥Unsloth context length Hugging Face + FA2| 48 GB | 12,106 | OOM |

| 80 GB | 89,389 | 6,916 |

You can cite the Unsloth repo as follows:

@software{unsloth,

author = {Daniel Han, Michael Han and Unsloth team},

title = {Unsloth},

url = {http://github.com/unslothai/unsloth},

year = {2023}

}

Expose your FastAPI endpoints as Model Context Protocol (MCP) tools, with Auth!

Expose your FastAPI endpoints as Model Context Protocol (MCP) tools, with Auth!

Authentication built in, using your existing FastAPI dependencies!

FastAPI-native: Not just another OpenAPI -> MCP converter

Zero/Minimal configuration required - just point it at your FastAPI app and it works

Preserving schemas of your request models and response models

Preserve documentation of all your endpoints, just as it is in Swagger

Flexible deployment - Mount your MCP server to the same app, or deploy separately

ASGI transport - Uses FastAPI's ASGI interface directly for efficient communication

If you prefer a managed hosted solution check out tadata.com.

We recommend using uv, a fast Python package installer:

uv add fastapi-mcp

Alternatively, you can install with pip:

pip install fastapi-mcp

The simplest way to use FastAPI-MCP is to add an MCP server directly to your FastAPI application:

from fastapi import FastAPI

from fastapi_mcp import FastApiMCP

app = FastAPI()

mcp = FastApiMCP(app)

# Mount the MCP server directly to your FastAPI app

mcp.mount()

That's it! Your auto-generated MCP server is now available at https://app.base.url/mcp.

FastAPI-MCP provides comprehensive documentation. Additionaly, check out the examples directory for code samples demonstrating these features in action.

FastAPI-MCP is designed as a native extension of FastAPI, not just a converter that generates MCP tools from your API. This approach offers several key advantages:

Native dependencies: Secure your MCP endpoints using familiar FastAPI Depends() for authentication and authorization

ASGI transport: Communicates directly with your FastAPI app using its ASGI interface, eliminating the need for HTTP calls from the MCP to your API

Unified infrastructure: Your FastAPI app doesn't need to run separately from the MCP server (though separate deployment is also supported)

This design philosophy ensures minimum friction when adding MCP capabilities to your existing FastAPI services.

Thank you for considering contributing to FastAPI-MCP! We encourage the community to post Issues and create Pull Requests.

Before you get started, please see our Contribution Guide.

Join MCParty Slack community to connect with other MCP enthusiasts, ask questions, and share your experiences with FastAPI-MCP.

MIT License. Copyright (c) 2025 Tadata Inc.

OCR, layout analysis, reading order, table recognition in 90+ languages

Surya is a document OCR toolkit that does:

It works on a range of documents (see usage and benchmarks for more details).

Detection OCR |

|

|

|

|

|

Surya is named for the Hindu sun god, who has universal vision.

Discord is where we discuss future development.

| Japanese | Image | Image | Image | Image | Image |

| Chinese | Image | Image | Image | Image | |

| Hindi | Image | Image | Image | Image | |

| Arabic | Image | Image | Image | Image | |

| Chinese + Hindi | Image | Image | Image | Image | |

| Presentation | Image | Image | Image | Image | Image |

| Scientific Paper | Image | Image | Image | Image | Image |

| Scanned Document | Image | Image | Image | Image | Image |

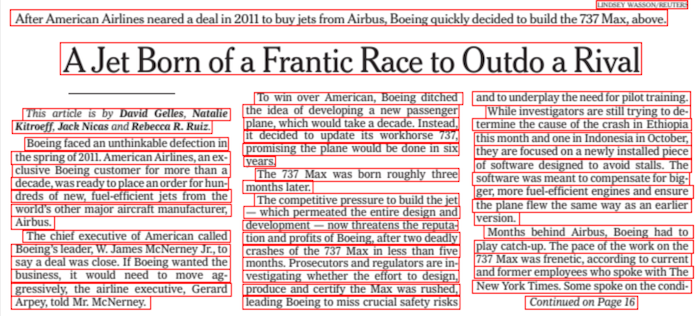

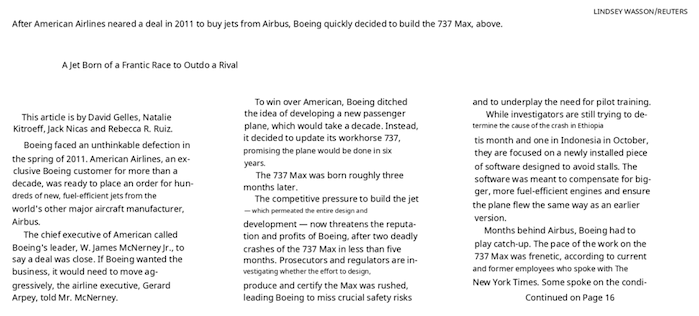

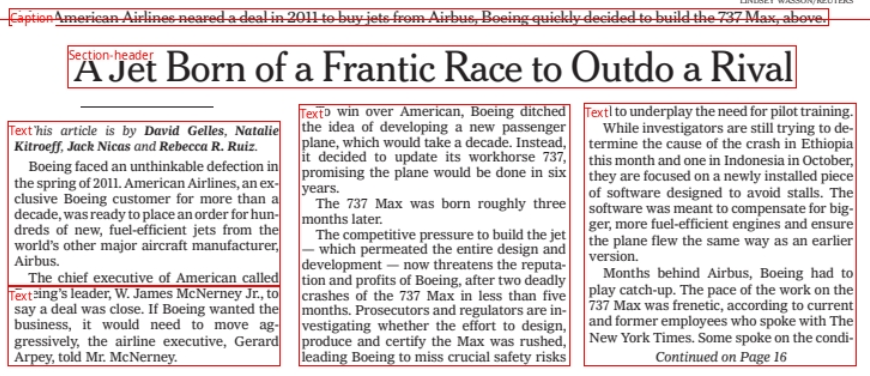

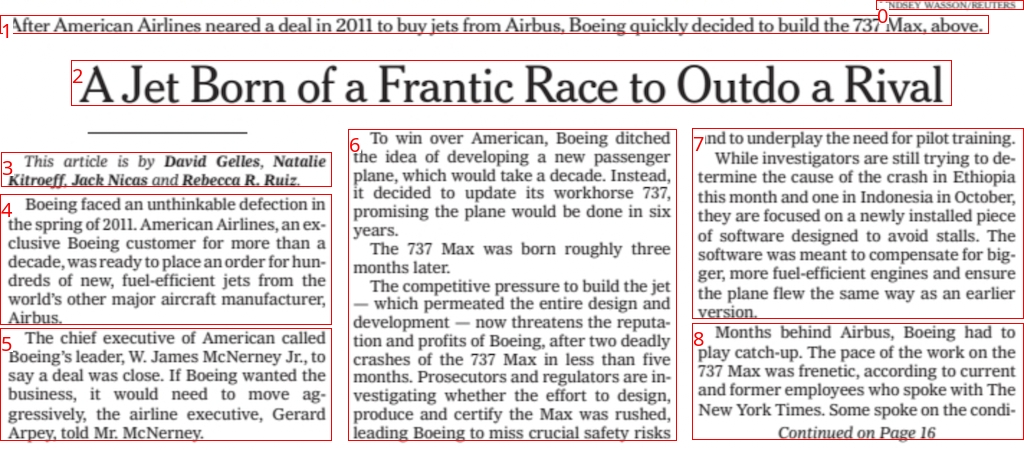

| New York Times | Image | Image | Image | Image | |

| Scanned Form | Image | Image | Image | Image | Image |

| Textbook | Image | Image | Image | Image |

There is a hosted API for all surya models available here:

Our model weights use a modified AI Pubs Open Rail-M license (free for research, personal use, and startups under $2M funding/revenue) and our code is GPL. For broader commercial licensing or to remove GPL requirements, visit our pricing page here.

You'll need python 3.10+ and PyTorch. You may need to install the CPU version of torch first if you're not using a Mac or a GPU machine. See here for more details.

Install with:

pip install surya-ocr

Model weights will automatically download the first time you run surya.

surya/settings.py. You can override any settings with environment variables.TORCH_DEVICE=cuda.I've included a streamlit app that lets you interactively try Surya on images or PDF files. Run it with:

pip install streamlit pdftext

surya_gui

This command will write out a json file with the detected text and bboxes:

surya_ocr DATA_PATH

DATA_PATH can be an image, pdf, or folder of images/pdfs--task_name will specify which task to use for predicting the lines. ocr_with_boxes is the default, which will format text and give you bboxes. If you get bad performance, try ocr_without_boxes, which will give you potentially better performance but no bboxes. For blocks like equations and paragraphs, try block_without_boxes.--images will save images of the pages and detected text lines (optional)--output_dir specifies the directory to save results to instead of the default--page_range specifies the page range to process in the PDF, specified as a single number, a comma separated list, a range, or comma separated ranges - example: 0,5-10,20.--disable_math - by default, surya will recognize math in text. This can lead to false positives - you can disable this with this flag.The results.json file will contain a json dictionary where the keys are the input filenames without extensions. Each value will be a list of dictionaries, one per page of the input document. Each page dictionary contains:

text_lines - the detected text and bounding boxes for each line

text - the text in the lineconfidence - the confidence of the model in the detected text (0-1)polygon - the polygon for the text line in (x1, y1), (x2, y2), (x3, y3), (x4, y4) format. The points are in clockwise order from the top left.bbox - the axis-aligned rectangle for the text line in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner.chars - the individual characters in the line

text - the text of the characterbbox - the character bbox (same format as line bbox)polygon - the character polygon (same format as line polygon)confidence - the confidence of the model in the detected character (0-1)bbox_valid - if the character is a special token or math, the bbox may not be validwords - the individual words in the line (computed from the characters)

text - the text of the wordbbox - the word bbox (same format as line bbox)polygon - the word polygon (same format as line polygon)confidence - mean character confidencebbox_valid - if the word is a special token or math, the bbox may not be validpage - the page number in the fileimage_bbox - the bbox for the image in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner. All line bboxes will be contained within this bbox.Performance tips

Setting the RECOGNITION_BATCH_SIZE env var properly will make a big difference when using a GPU. Each batch item will use 40MB of VRAM, so very high batch sizes are possible. The default is a batch size 512, which will use about 20GB of VRAM. Depending on your CPU core count, it may help, too - the default CPU batch size is 32.

from PIL import Image

from surya.foundation import FoundationPredictor

from surya.recognition import RecognitionPredictor

from surya.detection import DetectionPredictor

image = Image.open(IMAGE_PATH)

foundation_predictor = FoundationPredictor()

recognition_predictor = RecognitionPredictor(foundation_predictor)

detection_predictor = DetectionPredictor()

predictions = recognition_predictor([image], det_predictor=detection_predictor)

This command will write out a json file with the detected bboxes.

surya_detect DATA_PATH

DATA_PATH can be an image, pdf, or folder of images/pdfs--images will save images of the pages and detected text lines (optional)--output_dir specifies the directory to save results to instead of the default--page_range specifies the page range to process in the PDF, specified as a single number, a comma separated list, a range, or comma separated ranges - example: 0,5-10,20.The results.json file will contain a json dictionary where the keys are the input filenames without extensions. Each value will be a list of dictionaries, one per page of the input document. Each page dictionary contains:

bboxes - detected bounding boxes for text

bbox - the axis-aligned rectangle for the text line in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner.polygon - the polygon for the text line in (x1, y1), (x2, y2), (x3, y3), (x4, y4) format. The points are in clockwise order from the top left.confidence - the confidence of the model in the detected text (0-1)vertical_lines - vertical lines detected in the document

bbox - the axis-aligned line coordinates.page - the page number in the fileimage_bbox - the bbox for the image in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner. All line bboxes will be contained within this bbox.Performance tips

Setting the DETECTOR_BATCH_SIZE env var properly will make a big difference when using a GPU. Each batch item will use 440MB of VRAM, so very high batch sizes are possible. The default is a batch size 36, which will use about 16GB of VRAM. Depending on your CPU core count, it might help, too - the default CPU batch size is 6.

from PIL import Image

from surya.detection import DetectionPredictor

image = Image.open(IMAGE_PATH)

det_predictor = DetectionPredictor()

# predictions is a list of dicts, one per image

predictions = det_predictor([image])

This command will write out a json file with the detected layout and reading order.

surya_layout DATA_PATH

DATA_PATH can be an image, pdf, or folder of images/pdfs--images will save images of the pages and detected text lines (optional)--output_dir specifies the directory to save results to instead of the default--page_range specifies the page range to process in the PDF, specified as a single number, a comma separated list, a range, or comma separated ranges - example: 0,5-10,20.The results.json file will contain a json dictionary where the keys are the input filenames without extensions. Each value will be a list of dictionaries, one per page of the input document. Each page dictionary contains:

bboxes - detected bounding boxes for text

bbox - the axis-aligned rectangle for the text line in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner.polygon - the polygon for the text line in (x1, y1), (x2, y2), (x3, y3), (x4, y4) format. The points are in clockwise order from the top left.position - the reading order of the box.label - the label for the bbox. One of Caption, Footnote, Formula, List-item, Page-footer, Page-header, Picture, Figure, Section-header, Table, Form, Table-of-contents, Handwriting, Text, Text-inline-math.top_k - the top-k other potential labels for the box. A dictionary with labels as keys and confidences as values.page - the page number in the fileimage_bbox - the bbox for the image in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner. All line bboxes will be contained within this bbox.Performance tips

Setting the LAYOUT_BATCH_SIZE env var properly will make a big difference when using a GPU. Each batch item will use 220MB of VRAM, so very high batch sizes are possible. The default is a batch size 32, which will use about 7GB of VRAM. Depending on your CPU core count, it might help, too - the default CPU batch size is 4.

from PIL import Image

from surya.layout import LayoutPredictor

image = Image.open(IMAGE_PATH)

layout_predictor = LayoutPredictor()

# layout_predictions is a list of dicts, one per image

layout_predictions = layout_predictor([image])

This command will write out a json file with the detected table cells and row/column ids, along with row/column bounding boxes. If you want to get cell positions and text, along with nice formatting, check out the marker repo. You can use the TableConverter to detect and extract tables in images and PDFs. It supports output in json (with bboxes), markdown, and html.

surya_table DATA_PATH

DATA_PATH can be an image, pdf, or folder of images/pdfs--images will save images of the pages and detected table cells + rows and columns (optional)--output_dir specifies the directory to save results to instead of the default--page_range specifies the page range to process in the PDF, specified as a single number, a comma separated list, a range, or comma separated ranges - example: 0,5-10,20.--detect_boxes specifies if cells should be detected. By default, they're pulled out of the PDF, but this is not always possible.--skip_table_detection tells table recognition not to detect tables first. Use this if your image is already cropped to a table.The results.json file will contain a json dictionary where the keys are the input filenames without extensions. Each value will be a list of dictionaries, one per page of the input document. Each page dictionary contains:

rows - detected table rows

bbox - the bounding box of the table rowrow_id - the id of the rowis_header - if it is a header row.cols - detected table columns

bbox - the bounding box of the table columncol_id- the id of the columnis_header - if it is a header columncells - detected table cells

bbox - the axis-aligned rectangle for the text line in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner.text - if text could be pulled out of the pdf, the text of this cell.row_id - the id of the row the cell belongs to.col_id - the id of the column the cell belongs to.colspan - the number of columns spanned by the cell.rowspan - the number of rows spanned by the cell.is_header - whether it is a header cell.page - the page number in the filetable_idx - the index of the table on the page (sorted in vertical order)image_bbox - the bbox for the image in (x1, y1, x2, y2) format. (x1, y1) is the top left corner, and (x2, y2) is the bottom right corner. All line bboxes will be contained within this bbox.Performance tips

Setting the TABLE_REC_BATCH_SIZE env var properly will make a big difference when using a GPU. Each batch item will use 150MB of VRAM, so very high batch sizes are possible. The default is a batch size 64, which will use about 10GB of VRAM. Depending on your CPU core count, it might help, too - the default CPU batch size is 8.

from PIL import Image

from surya.table_rec import TableRecPredictor

image = Image.open(IMAGE_PATH)

table_rec_predictor = TableRecPredictor()

table_predictions = table_rec_predictor([image])

This command will write out a json file with the LaTeX of the equations. You must pass in images that are already cropped to the equations. You can do this by running the layout model, then cropping, if you want.

surya_latex_ocr DATA_PATH

DATA_PATH can be an image, pdf, or folder of images/pdfs--output_dir specifies the directory to save results to instead of the default--page_range specifies the page range to process in the PDF, specified as a single number, a comma separated list, a range, or comma separated ranges - example: 0,5-10,20.The results.json file will contain a json dictionary where the keys are the input filenames without extensions. Each value will be a list of dictionaries, one per page of the input document. See the OCR section above for the format of the output.

from PIL import Image

from surya.texify import TexifyPredictor

image = Image.open(IMAGE_PATH)

predictor = TexifyPredictor()

predictor([image])

You can also run a special interactive app that lets you select equations and OCR them (kind of like MathPix snip) with:

pip install streamlit==1.40 streamlit-drawable-canvas-jsretry

texify_gui

The following models have support for compilation. You will need to set the following environment variables to enable compilation:

COMPILE_DETECTOR=trueCOMPILE_LAYOUT=trueCOMPILE_TABLE_REC=trueAlternatively, you can also set COMPILE_ALL=true which will compile all models.

Here are the speedups on an A10 GPU:

Model Time per page (s) Compiled time per page (s) Speedup (%)| Detection | 0.108808 | 0.10521 | 3.306742151 |

| Layout | 0.27319 | 0.27063 | 0.93707676 |

| Table recognition | 0.0219 | 0.01938 | 11.50684932 |

surya/recognition/languages.py. Text detection, layout analysis, and reading order will work with any language.If OCR isn't working properly:

2048px width.DETECTOR_BLANK_THRESHOLD and DETECTOR_TEXT_THRESHOLD if you don't get good results. DETECTOR_BLANK_THRESHOLD controls the space between lines - any prediction below this number will be considered blank space. DETECTOR_TEXT_THRESHOLD controls how text is joined - any number above this is considered text. DETECTOR_TEXT_THRESHOLD should always be higher than DETECTOR_BLANK_THRESHOLD, and both should be in the 0-1 range. Looking at the heatmap from the debug output of the detector can tell you how to adjust these (if you see faint things that look like boxes, lower the thresholds, and if you see bboxes being joined together, raise the thresholds).If you want to develop surya, you can install it manually:

git clone https://github.com/VikParuchuri/surya.gitcd suryapoetry install - installs main and dev dependenciespoetry shell - activates the virtual environment

| surya | .62 | 0.97 |

| tesseract | .45 | 0.88 |

Tesseract is CPU-based, and surya is CPU or GPU. I tried to cost-match the resources used, so I used a 1xA6000 (48GB VRAM) for surya, and 28 CPU cores for Tesseract (same price on Lambda Labs/DigitalOcean).

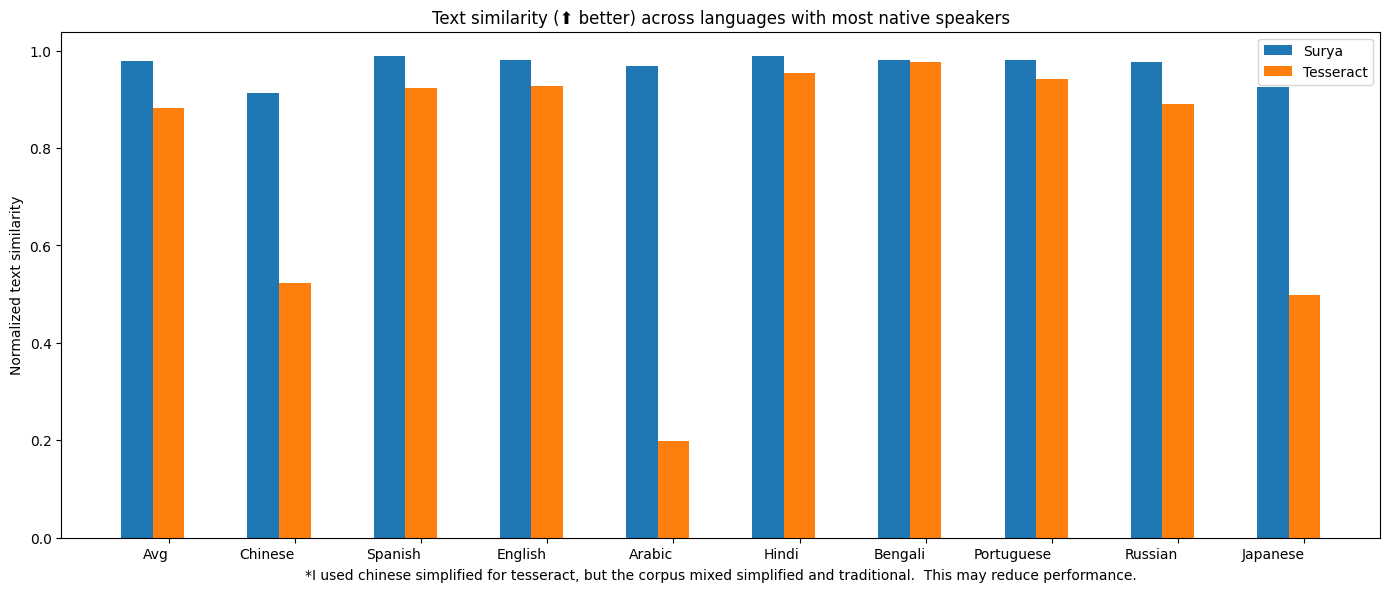

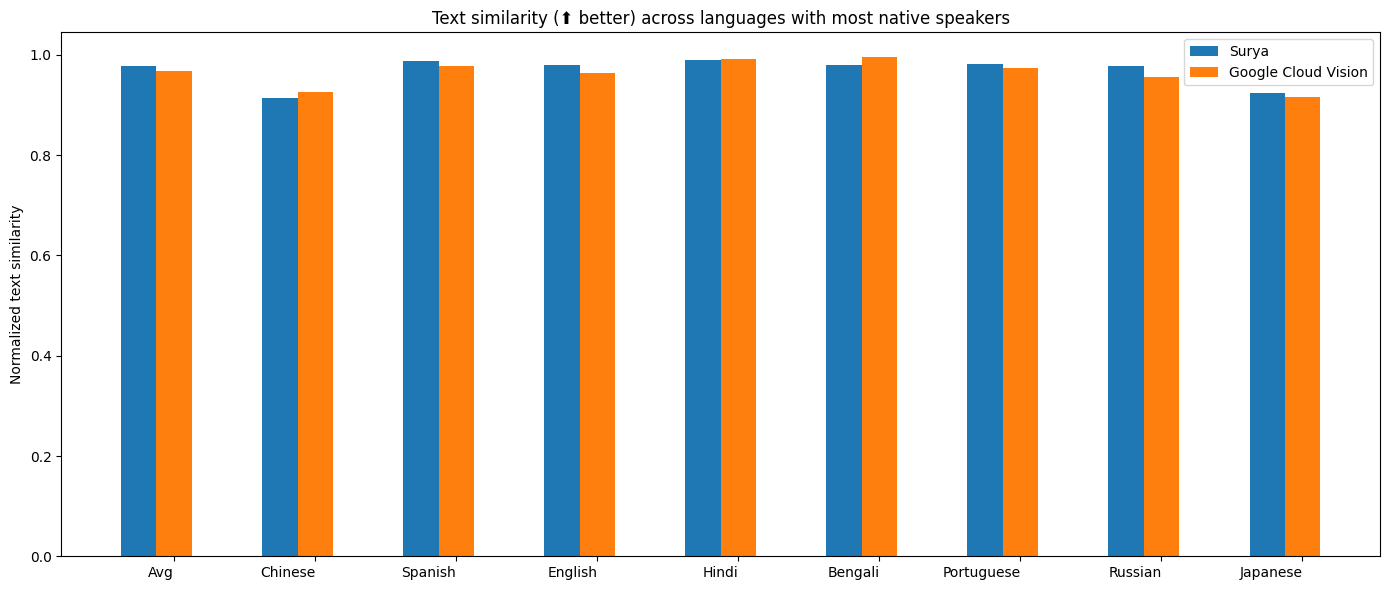

I benchmarked OCR against Google Cloud vision since it has similar language coverage to Surya.

Methodology

I measured normalized sentence similarity (0-1, higher is better) based on a set of real-world and synthetic pdfs. I sampled PDFs from common crawl, then filtered out the ones with bad OCR. I couldn't find PDFs for some languages, so I also generated simple synthetic PDFs for those.

I used the reference line bboxes from the PDFs with both tesseract and surya, to just evaluate the OCR quality.

For Google Cloud, I aligned the output from Google Cloud with the ground truth. I had to skip RTL languages since they didn't align well.

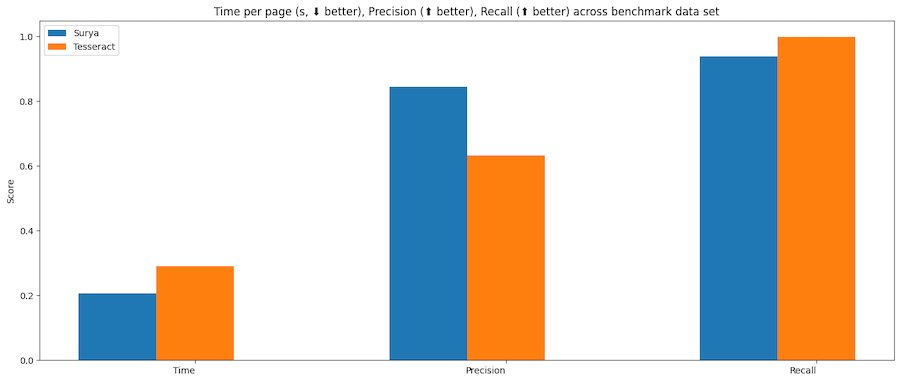

| surya | 47.2285 | 0.094452 | 0.835857 | 0.960807 |

| tesseract | 74.4546 | 0.290838 | 0.631498 | 0.997694 |

Tesseract is CPU-based, and surya is CPU or GPU. I ran the benchmarks on a system with an A10 GPU, and a 32 core CPU. This was the resource usage:

Methodology

Surya predicts line-level bboxes, while tesseract and others predict word-level or character-level. It's hard to find 100% correct datasets with line-level annotations. Merging bboxes can be noisy, so I chose not to use IoU as the metric for evaluation.

I instead used coverage, which calculates:

First calculate coverage for each bbox, then add a small penalty for double coverage, since we want the detection to have non-overlapping bboxes. Anything with a coverage of 0.5 or higher is considered a match.

Then we calculate precision and recall for the whole dataset.

| Image | 0.91265 | 0.93976 |

| List | 0.80849 | 0.86792 |

| Table | 0.84957 | 0.96104 |

| Text | 0.93019 | 0.94571 |

| Title | 0.92102 | 0.95404 |

Time per image - .13 seconds on GPU (A10).

Methodology

I benchmarked the layout analysis on Publaynet, which was not in the training data. I had to align publaynet labels with the surya layout labels. I was then able to find coverage for each layout type:

88% mean accuracy, and .4 seconds per image on an A10 GPU. See methodology for notes - this benchmark is not perfect measure of accuracy, and is more useful as a sanity check.

Methodology

I benchmarked the reading order on the layout dataset from here, which was not in the training data. Unfortunately, this dataset is fairly noisy, and not all the labels are correct. It was very hard to find a dataset annotated with reading order and also layout information. I wanted to avoid using a cloud service for the ground truth.

The accuracy is computed by finding if each pair of layout boxes is in the correct order, then taking the % that are correct.

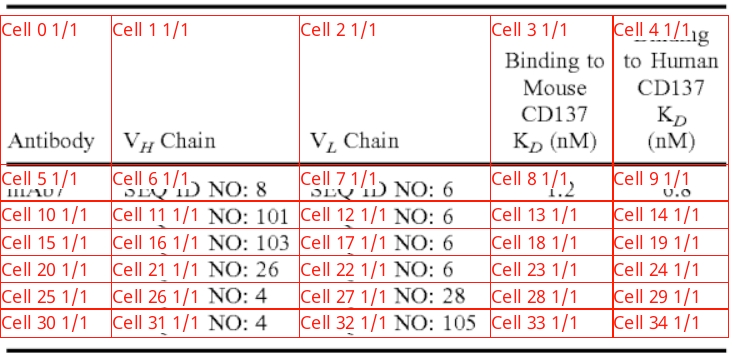

| Surya | 1 | 0.98625 | 0.30202 |

| Table transformer | 0.84 | 0.86857 | 0.08082 |

Higher is better for intersection, which the percentage of the actual row/column overlapped by the predictions. This benchmark is mostly a sanity check - there is a more rigorous one in marker

Methodology

The benchmark uses a subset of Fintabnet from IBM. It has labeled rows and columns. After table recognition is run, the predicted rows and columns are compared to the ground truth. There is an additional penalty for predicting too many or too few rows/columns.

| texify | 0.122617 | 35.6345 |

This inferences texify on a ground truth set of LaTeX, then does edit distance. This is a bit noisy, since 2 LaTeX strings that render the same can have different symbols in them.

You can benchmark the performance of surya on your machine.

poetry install --group dev - installs dev dependenciesText line detection

This will evaluate tesseract and surya for text line detection across a randomly sampled set of images from doclaynet.

python benchmark/detection.py --max_rows 256

--max_rows controls how many images to process for the benchmark--debug will render images and detected bboxes--pdf_path will let you specify a pdf to benchmark instead of the default data--results_dir will let you specify a directory to save results to instead of the default oneText recognition

This will evaluate surya and optionally tesseract on multilingual pdfs from common crawl (with synthetic data for missing languages).

python benchmark/recognition.py --tesseract

--max_rows controls how many images to process for the benchmark

--debug 2 will render images with detected text

--results_dir will let you specify a directory to save results to instead of the default one

--tesseract will run the benchmark with tesseract. You have to run sudo apt-get install tesseract-ocr-all to install all tesseract data, and set TESSDATA_PREFIX to the path to the tesseract data folder.

Set RECOGNITION_BATCH_SIZE=864 to use the same batch size as the benchmark.

Set RECOGNITION_BENCH_DATASET_NAME=vikp/rec_bench_hist to use the historical document data for benchmarking. This data comes from the tapuscorpus.

Layout analysis

This will evaluate surya on the publaynet dataset.

python benchmark/layout.py

--max_rows controls how many images to process for the benchmark--debug will render images with detected text--results_dir will let you specify a directory to save results to instead of the default oneReading Order

python benchmark/ordering.py

--max_rows controls how many images to process for the benchmark--debug will render images with detected text--results_dir will let you specify a directory to save results to instead of the default oneTable Recognition

python benchmark/table_recognition.py --max_rows 1024 --tatr

--max_rows controls how many images to process for the benchmark--debug will render images with detected text--results_dir will let you specify a directory to save results to instead of the default one--tatr specifies whether to also run table transformerLaTeX OCR

python benchmark/texify.py --max_rows 128

--max_rows controls how many images to process for the benchmark--results_dir will let you specify a directory to save results to instead of the default oneText detection was trained on 4x A6000s for 3 days. It used a diverse set of images as training data. It was trained from scratch using a modified efficientvit architecture for semantic segmentation.

Text recognition was trained on 4x A6000s for 2 weeks. It was trained using a modified donut model (GQA, MoE layer, UTF-16 decoding, layer config changes).

You can now take Surya OCR further by training it on your own data with our finetuning script. It’s built on Hugging Face Trainer, and supports all the arguments that the huggingface trainer provides, and integrations like torchrun, or deepspeed.

To setup your dataset, follow the example dataset format here and provide the path to your own dataset when launching the training script.

# Tested on 1xH100 GPU

# Set --pretrained_checkpoint_path to load from a custom checkpoint, otherwise

# the default surya ocr weights will be loaded as the initialization

python surya/scripts/finetune_ocr.py \

--output_dir $OUTPUT_DIR \

--dataset_name datalab-to/ocr_finetune_example \

--per_device_train_batch_size 64 \

--gradient_checkpointing true \

--max_sequence_length 1024

This is a minimal training script to get you started finetuning Surya. Our internal training stack includes character bounding box finetuning, sliding window attention with specialized attention masks, custom kernels, augmentations, and other optimizations that can push OCR accuracy well beyond standard finetuning. If you want to get the most out of your data, reach us at [email protected]!

This work would not have been possible without amazing open source AI work:

Thank you to everyone who makes open source AI possible.

If you use surya (or the associated models) in your work or research, please consider citing us using the following BibTeX entry:

@misc{paruchuri2025surya,

author = {Vikas Paruchuri and Datalab Team},

title = {Surya: A lightweight document OCR and analysis toolkit},

year = {2025},

howpublished = {\url{https://github.com/VikParuchuri/surya}},

note = {GitHub repository},

}

Simple, scalable AI model deployment on GPU clusters

GPUStack is an open-source GPU cluster manager for running AI models.

If you are using NVIDIA GPUs, ensure Docker and NVIDIA Container Toolkit are installed on your system. Then, run the following command to start the GPUStack server.

docker run -d --name gpustack \

--restart=unless-stopped \

--gpus all \

--network=host \

--ipc=host \

-v gpustack-data:/var/lib/gpustack \

gpustack/gpustack

For more details on the installation or other GPU hardware platforms, please refer to the Installation Documentation.

After the server starts, run the following command to get the default admin password:

docker exec gpustack cat /var/lib/gpustack/initial_admin_password

Open your browser and navigate to http://your_host_ip to access the GPUStack UI. Use the default username admin and the password you retrieved above to log in.

A desktop installer is available for macOS and Windows — see the documentation for installation details.

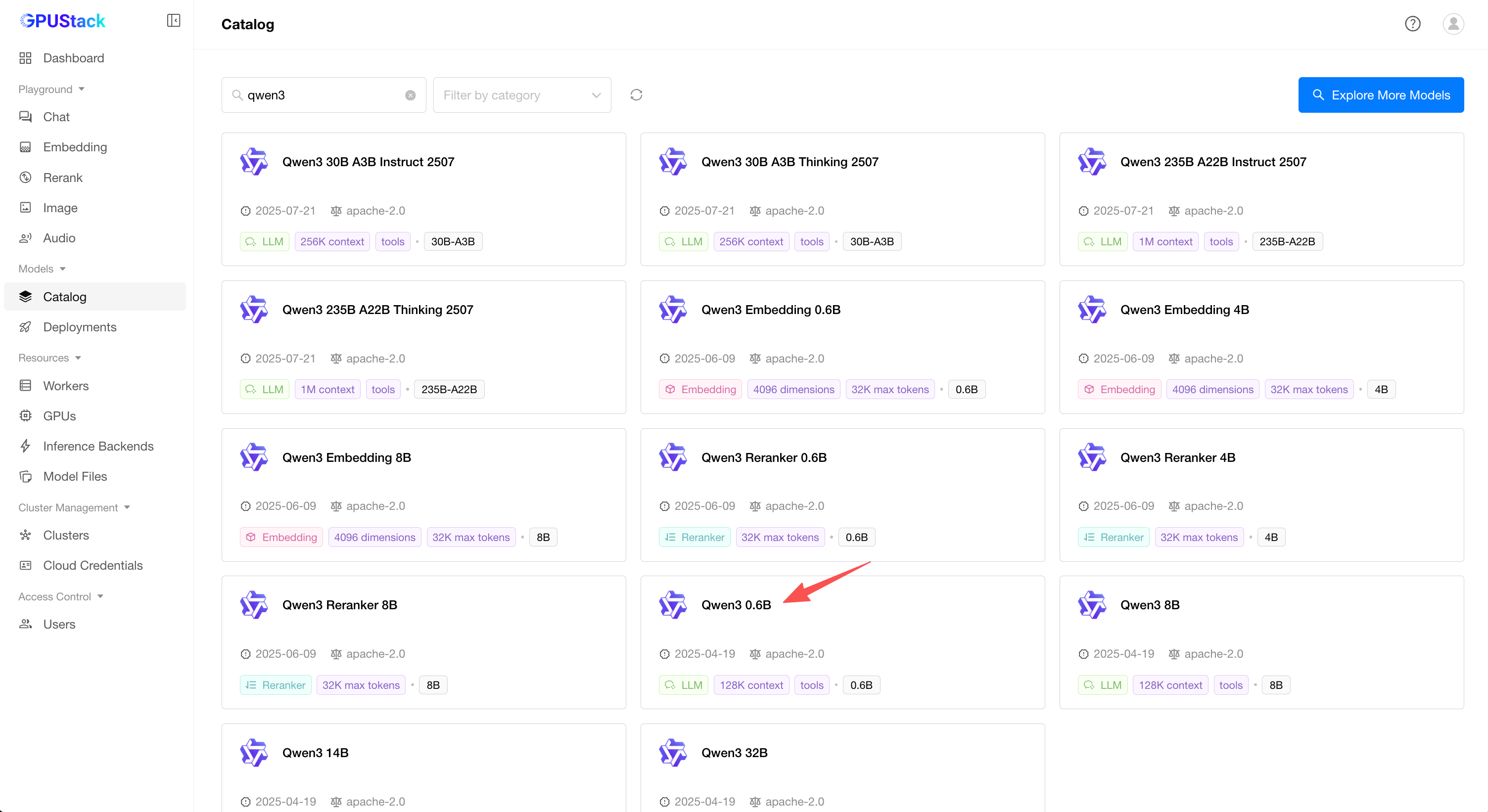

Navigate to the Catalog page in the GPUStack UI.

Select the Qwen3 model from the list of available models.

After the deployment compatibility checks pass, click the Save button to deploy the model.

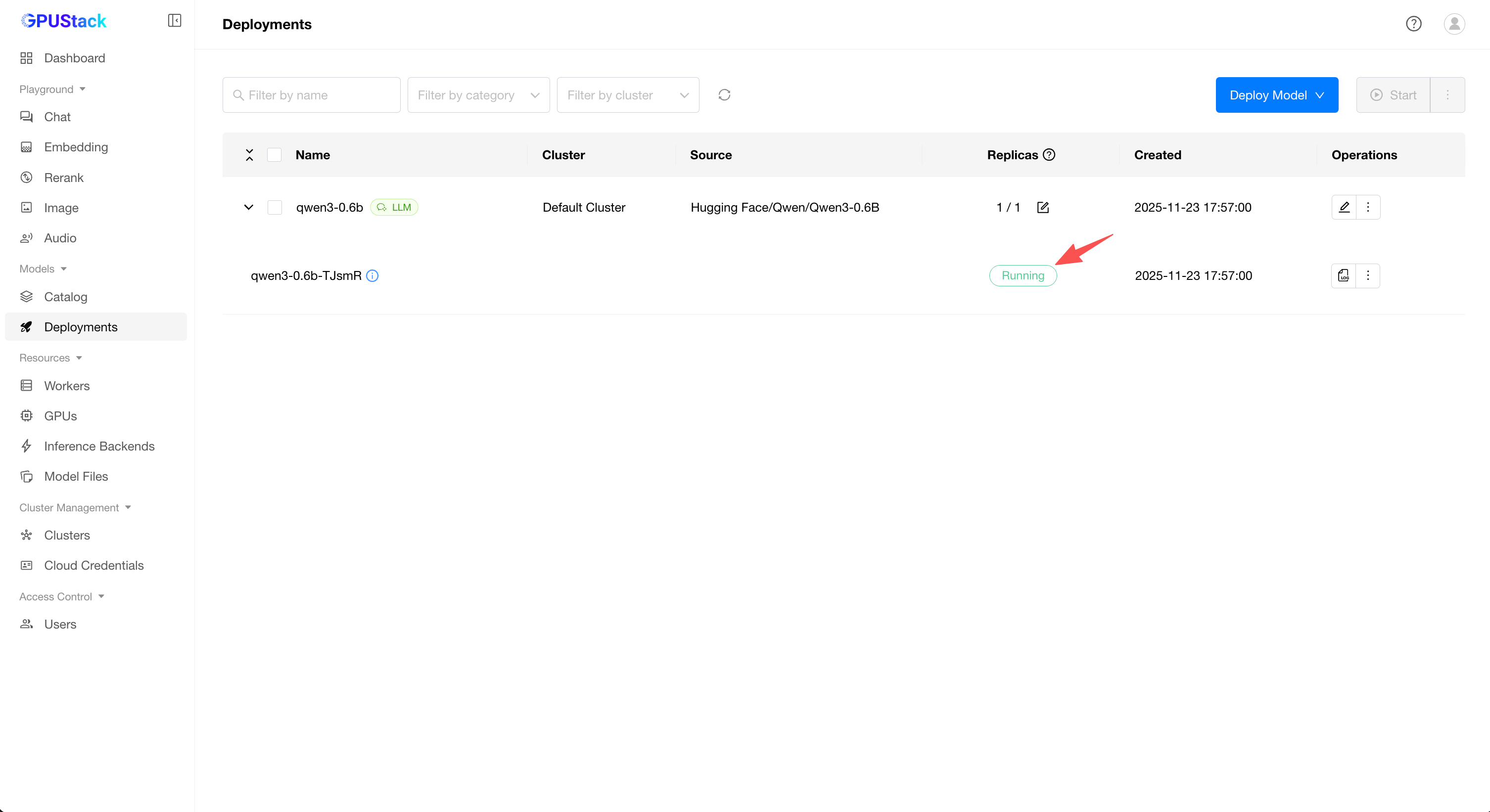

Running, the model has been deployed successfully.

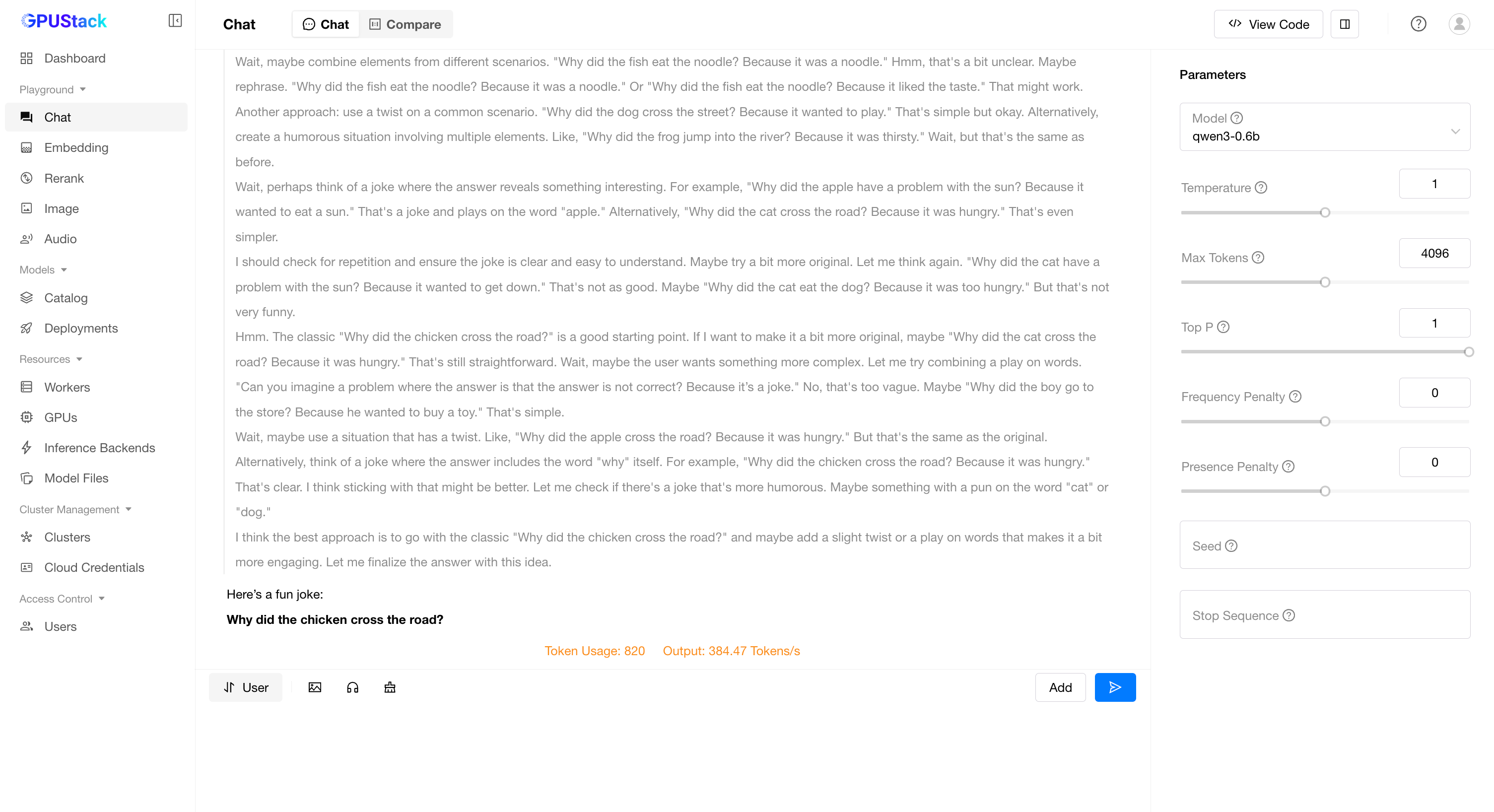

Playground - Chat in the navigation menu, check that the model qwen3 is selected from the top-right Model dropdown. Now you can chat with the model in the UI playground.

Hover over the user avatar and navigate to the API Keys page, then click the New API Key button.

Fill in the Name and click the Save button.

Copy the generated API key and save it somewhere safe. Please note that you can only see it once on creation.

You can now use the API key to access the OpenAI-compatible API endpoints provided by GPUStack. For example, use curl as the following:

# Replace `your_api_key` and `your_gpustack_server_url`

# with your actual API key and GPUStack server URL.

export GPUSTACK_API_KEY=your_api_key

curl http://your_gpustack_server_url/v1/chat/completions \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $GPUSTACK_API_KEY" \

-d '{

"model": "qwen3",

"messages": [

{

"role": "system",

"content": "You are a helpful assistant."

},

{

"role": "user",

"content": "Tell me a joke."

}

],

"stream": true

}'

GPUStack uses vLLM, Ascend MindIE, llama-box (bundled llama.cpp and stable-diffusion.cpp server) and vox-box as the backends and supports a wide range of models. Models from the following sources are supported:

Local File Path

| Large Language Models(LLMs) | Qwen, LLaMA, Mistral, DeepSeek, Phi, Gemma |

| Vision Language Models(VLMs) | Llama3.2-Vision, Pixtral , Qwen2.5-VL, LLaVA, InternVL3 |

| Diffusion Models | Stable Diffusion, FLUX |

| Embedding Models | BGE, BCE, Jina, Qwen3-Embedding |

| Reranker Models | BGE, BCE, Jina, Qwen3-Reranker |

| Audio Models | Whisper (Speech-to-Text), CosyVoice (Text-to-Speech) |

For full list of supported models, please refer to the supported models section in the inference backends documentation.

GPUStack serves the following OpenAI compatible APIs under the /v1-openai path:

For example, you can use the official OpenAI Python API library to consume the APIs:

from openai import OpenAI

client = OpenAI(base_url="http://your_gpustack_server_url/v1-openai", api_key="your_api_key")

completion = client.chat.completions.create(

model="llama3.2",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Hello!"}

]

)

print(completion.choices[0].message)

GPUStack users can generate their own API keys in the UI.

Please see the official docs site for complete documentation.

Install Python (version 3.10 to 3.12).

Run make build.

You can find the built wheel package in dist directory.