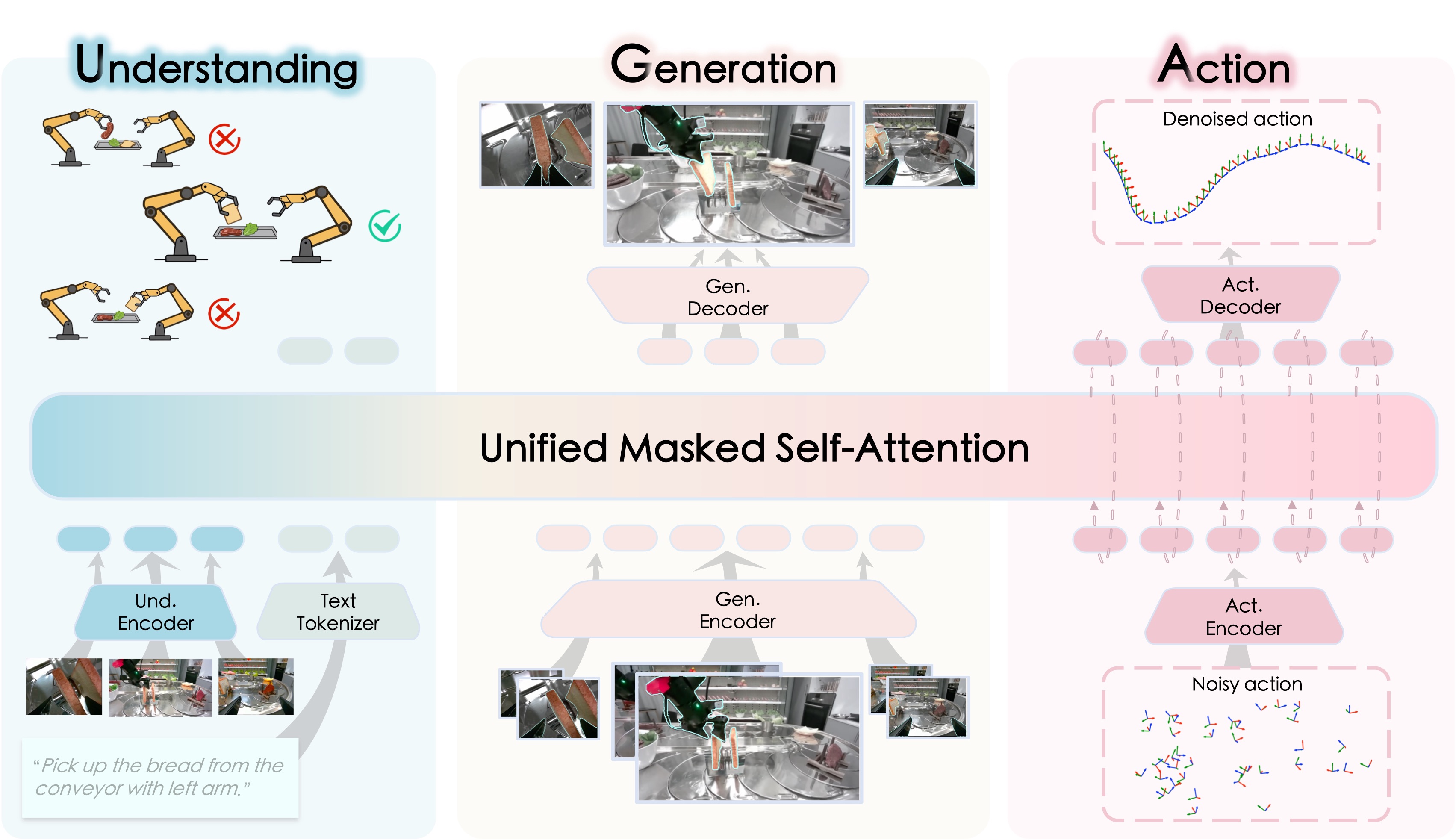

InternVLA-A1 unifies scene understanding, visual foresight generation, and action execution into a single framework.

- 🔮 The Core: Synergizes MLLM's semantic understanding with world-model-style dynamic prediction, enabling it to "imagine" the future and guide adaptive actions.

- 🚀 The Fuel: Empowered by high-fidelity synthetic data (InternData-A1).

- ⚡ The Output: Tackles highly dynamic scenarios with effortless mastery.

Demo videos: express_sorting.mp4, parcel_handling.mp4, Overcooked.mp4, sort_parts.mp4, zig_bag.mp4, unscrew_cap.mp4

- Release InternVLA-A1-3B

- Add quick-start for fine-tuning on lerobot/pusht

- 🔥NEW!!! Release guideline of large-scale dataset pretraining at "tutorials"

- Release InternVLA-A1-2B

This repository has been tested on Python 3.10 and CUDA 12.8. We recommend using conda to create an isolated environment.

conda create -y -n internvla_a1 python=3.10

conda activate internvla_a1

pip install --upgrade pip

We use FFmpeg for video encoding/decoding and SVT-AV1 for efficient storage.

conda install -c conda-forge ffmpeg=7.1.1 svt-av1 -y

pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 \
--index-url https://download.pytorch.org/whl/cu128

pip install torchcodec numpy scipy transformers==4.57.1 mediapy loguru pytest omegaconf

pip install -e .

We replace the default implementations of several model modules (e.g., π0, InternVLA_A1_3B, InternVLA_A1_2B) to support custom architectures for robot learning.

TRANSFORMERS_DIR=${CONDA_PREFIX}/lib/python3.10/site-packages/transformers/

cp -r src/lerobot/policies/pi0/transformers_replace/models ${TRANSFORMERS_DIR}

cp -r src/lerobot/policies/InternVLA_A1_3B/transformers_replace/models ${TRANSFORMERS_DIR}

cp -r src/lerobot/policies/InternVLA_A1_2B/transformers_replace/models ${TRANSFORMERS_DIR}

Make sure the target directory exists; otherwise, create it manually.
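The `cp` commands above hardcode a `python3.10` site-packages path. As a minimal sketch, the same overlay could be done in Python by asking the active environment where a package lives. The helper names `package_dir` and `overlay_models` are hypothetical and not part of this repo; the sketch assumes it is run from the repository root.

```python
import importlib.util
import shutil
from pathlib import Path


def package_dir(name: str) -> Path:
    """Return the directory of an installed top-level package without importing it."""
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.submodule_search_locations:
        raise ModuleNotFoundError(f"{name} is not installed as a package here")
    return Path(next(iter(spec.submodule_search_locations)))


def overlay_models(src_models: Path, target_pkg: Path) -> Path:
    """Merge src_models into target_pkg/models, similar to `cp -r src_models target_pkg`."""
    if not target_pkg.is_dir():
        # Mirrors the note above: the target directory has to exist first.
        raise FileNotFoundError(f"target directory does not exist: {target_pkg}")
    dest = target_pkg / "models"
    # dirs_exist_ok=True merges into an existing tree and overwrites same-named files.
    shutil.copytree(src_models, dest, dirs_exist_ok=True)
    return dest


def overlay_all_policies(repo_root: Path = Path(".")) -> None:
    """Hypothetical driver covering the three policies listed in this README."""
    target = package_dir("transformers")
    for policy in ("pi0", "InternVLA_A1_3B", "InternVLA_A1_2B"):
        src = repo_root / "src/lerobot/policies" / policy / "transformers_replace/models"
        overlay_models(src, target)
```

Calling `overlay_all_policies()` inside the activated `internvla_a1` environment would apply the three overlays in the same order as the shell commands.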

export HF_TOKEN=your_token # for downloading hf models, tokenizers, or processors

export HF_HOME=path_to_huggingface # default: ~/.cache/huggingface

ln -s ${HF_HOME}/lerobot data

This allows the repo to access datasets via ./data/.
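If you prefer to create the link from Python, the sketch below resolves `HF_HOME` (falling back to the default noted above) and creates the `data` symlink only when it is safe to do so. The function names are hypothetical. Unlike `ln -s`, this version also creates the `lerobot` directory if it is missing, which is a convenience assumption rather than something the repo requires.

```python
import os
from pathlib import Path


def resolve_hf_home(env=None) -> Path:
    """Return HF_HOME from the environment, or the default ~/.cache/huggingface."""
    env = os.environ if env is None else env
    return Path(env.get("HF_HOME") or "~/.cache/huggingface").expanduser()


def link_data_dir(hf_home: Path, link: Path) -> Path:
    """Create `link` pointing at hf_home/lerobot, like `ln -s ${HF_HOME}/lerobot data`."""
    target = hf_home / "lerobot"
    target.mkdir(parents=True, exist_ok=True)  # assumption: create it if absent
    if link.is_symlink():
        if link.resolve() == target.resolve():
            return link  # already linked to the right place
        raise FileExistsError(f"{link} already points somewhere else")
    if link.exists():
        raise FileExistsError(f"{link} exists and is not a symlink")
    link.symlink_to(target, target_is_directory=True)
    return link
```

From the repository root, `link_data_dir(resolve_hf_home(), Path("data"))` would reproduce the `ln -s` step and can be re-run without error.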

bash launch/internvla_a1_3b_finetune.sh lerobot/pusht abs false

Here, abs indicates using absolute actions, and false means that the training script will use the statistics file (stats.json) provided by lerobot/pusht itself.

This section provides a tutorial for fine-tuning InternVLA-A1-3B on the InternData-A1 real dataset: download a dataset → convert it to v3.0 format → compute normalization statistics → fine-tune InternVLA-A1-3B on the A2D Pick-Pen task.

In this example, we use the A2D Pick-Pen task from the Genie-1 real-robot dataset.

hf download \
InternRobotics/InternData-A1 \
real/genie1/Put_the_pen_from_the_table_into_the_pen_holder.tar.gz \
--repo-type dataset \
--local-dir data

Extract the downloaded archive, clean up intermediate files, and rename the dataset to follow the A2D naming convention:

tar -xzf data/real/genie1/Put_the_pen_from_the_table_into_the_pen_holder.tar.gz -C data

rm -rf data/real

mkdir -p data/v21

mv data/set_0 data/v21/a2d_pick_pen

After this step, the dataset directory structure should be:

data/
└── v21/
    └── a2d_pick_pen/
        ├── data/
        ├── meta/
        └── videos/
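A quick way to confirm the layout before converting is to check for the three subdirectories shown above. This is a minimal sketch; `missing_subdirs` is a hypothetical helper, and it only checks that the directories exist, not that their contents are valid.

```python
from pathlib import Path

# Subdirectories shown in the expected layout above.
EXPECTED_SUBDIRS = ("data", "meta", "videos")


def missing_subdirs(dataset_root: Path) -> list[str]:
    """Return the expected subdirectories that are absent under dataset_root."""
    return [name for name in EXPECTED_SUBDIRS if not (dataset_root / name).is_dir()]
```

For example, `missing_subdirs(Path("data/v21/a2d_pick_pen"))` returns an empty list when the extraction and rename steps succeeded.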

The original dataset is stored in LeRobot v2.1 format. This project requires LeRobot v3.0, so the dataset must be converted first.

Run the following command to convert the dataset:

python src/lerobot/datasets/v30/convert_my_dataset_v21_to_v30.py \
--old-repo-id v21/a2d_pick_pen \
--new-repo-id v30/a2d_pick_pen

After conversion, the dataset will be available at:

data/v30/a2d_pick_pen/

This project fine-tunes policies using relative (delta) actions. Therefore, you must compute per-dataset normalization statistics (e.g., mean/std) for the action stream before training.

Run the following command to compute statistics for v30/a2d_pick_pen:

python util_scripts/compute_norm_stats_single.py \
--action_mode delta \
--chunk_size 50 \
--repo_id v30/a2d_pick_pen

This script writes the statistics to ${HF_HOME}/lerobot/stats/delta/v30/a2d_pick_pen/stats.json.
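To make the idea of delta-action statistics concrete, here is a minimal pure-Python sketch. It is not the logic of `compute_norm_stats_single.py`. It assumes, purely for illustration, that each action in a chunk is expressed relative to the robot state at the start of that chunk, and that the statistics are a per-dimension mean and population standard deviation. The repo's script may define deltas and the `stats.json` layout differently.

```python
import math


def delta_chunks(states, actions, chunk_size):
    """Yield action chunks expressed relative to the state at the chunk start.

    ASSUMPTION: delta[k] = actions[t + k] - states[t]. This is one common
    convention, not necessarily the one used by this repo.
    """
    for t in range(len(actions) - chunk_size + 1):
        base = states[t]
        yield [
            [a - s for a, s in zip(actions[t + k], base)]
            for k in range(chunk_size)
        ]


def mean_std(rows):
    """Per-dimension mean and population std over a list of equal-length vectors."""
    n = len(rows)
    dims = len(rows[0])
    mean = [sum(r[d] for r in rows) / n for d in range(dims)]
    std = [
        math.sqrt(sum((r[d] - mean[d]) ** 2 for r in rows) / n)
        for d in range(dims)
    ]
    return {"mean": mean, "std": std}


def delta_action_stats(states, actions, chunk_size):
    """Flatten all delta chunks into steps and compute their statistics."""
    steps = [
        step
        for chunk in delta_chunks(states, actions, chunk_size)
        for step in chunk
    ]
    return mean_std(steps)
```

With `--chunk_size 50`, each chunk would hold 50 steps. Normalizing by statistics computed over deltas rather than absolute actions keeps the normalized targets on a comparable scale regardless of where in the workspace the robot starts.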

bash launch/internvla_a1_3b_finetune.sh v30/a2d_pick_pen delta true

Here, v30/a2d_pick_pen specifies the dataset, delta indicates that relative (delta) actions are used, and true means that external normalization statistics are loaded instead of using the dataset’s built-in stats.json.
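Because the third argument switches to external statistics, it can help to confirm the file exists before launching a long run. The sketch below generalizes the single path shown in this README into `<HF_HOME>/lerobot/stats/<action_mode>/<repo_id>/stats.json`. That pattern is an inference from one example, and both function names are hypothetical.

```python
from pathlib import Path


def external_stats_path(hf_home, action_mode: str, repo_id: str) -> Path:
    """Build the stats path, ASSUMING the layout generalizes from the README example."""
    return Path(hf_home) / "lerobot" / "stats" / action_mode / repo_id / "stats.json"


def preflight(hf_home, repo_id: str, action_mode: str, use_external_stats: bool) -> list[str]:
    """Return a list of problems found before launching fine-tuning."""
    problems = []
    if use_external_stats:
        path = external_stats_path(hf_home, action_mode, repo_id)
        if not path.is_file():
            problems.append(f"missing external stats file: {path}")
    return problems
```

For the command above, `preflight(hf_home, "v30/a2d_pick_pen", "delta", True)` should return an empty list once the statistics step has been run.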

Before running launch/internvla_a1_3b_finetune.sh, make sure to replace the environment variables inside the script with your own settings, including but not limited to:

- HF_HOME

- WANDB_API_KEY

- CONDA_ROOT

- CUDA / GPU-related environment variables

- Paths to your local dataset and output directories
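As a small sanity check, the sketch below reports which of the named variables are unset or empty. It assumes the variables are exported in the environment where the check runs, which may not match your setup since the README asks you to edit them inside the launch script. `unset_vars` is a hypothetical helper, and the list covers only the three variables named explicitly above.

```python
import os

# Only the variables named explicitly in this README; extend as needed.
REQUIRED_VARS = ("HF_HOME", "WANDB_API_KEY", "CONDA_ROOT")


def unset_vars(env=None, required=REQUIRED_VARS) -> list[str]:
    """Return the names in `required` that are missing or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]
```

Calling `unset_vars()` with no arguments inspects the current process environment and returns an empty list when all three variables are set.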

All the code in this repo is licensed under CC BY-NC-SA 4.0. Please consider citing our project if it helps your research.

@article{contributors2026internvla_a1,

title={InternVLA-A1: Unifying Understanding, Generation and Action for Robotic Manipulation},

author={InternVLA-A1 contributors},

journal={arXiv preprint arXiv:2601.02456},

year={2026}

}