flash1dkmeans: Log-Time K-Means Clustering for 1D Data [Paper]

An optimized

Exploits the fact that one-dimensional data can be sorted.

For the lower level functions prefixed with numba_, Numba acceleration is used,

so callers can utilize these functions within their own Numba-accelerated functions.

Note that this library is not an implementation of optimal 1D

This library utilizes Numba, a JIT compiler, for acceleration. As there is a compile time overhead, the first invocation may be slower than usual.

Numba caches the compiled functions, so execution times should stabilize after the first invocation.

Finds a Lloyd's algorithm solution (i.e. convergence) for the two-cluster case, in

Uses the greedy

(We use

For number of elements

-

two-cluster algorithm:

$O(\log{n})$

($+ O(n)$ for prefix sum calculation if not provided,$+ O(n \cdot \log{n})$ for sorting if not sorted) -

$k$ -cluster algorithm:$O(k ^ 2 \cdot \log{k} \cdot \log{n}) + O(i \cdot \log{n} \cdot k)$

(The first term is for greedy$k$ -means++ initialization, and the latter for Lloyd's algorithm)

($+ O(n)$ for prefix sum calculation if not provided,$+ O(n \cdot \log{n})$ for sorting if not sorted)

This approach significantly improves upon standard

Note that you must use the underlying numba_ functions directly in order to directly supply prefix sums and skip sorting.

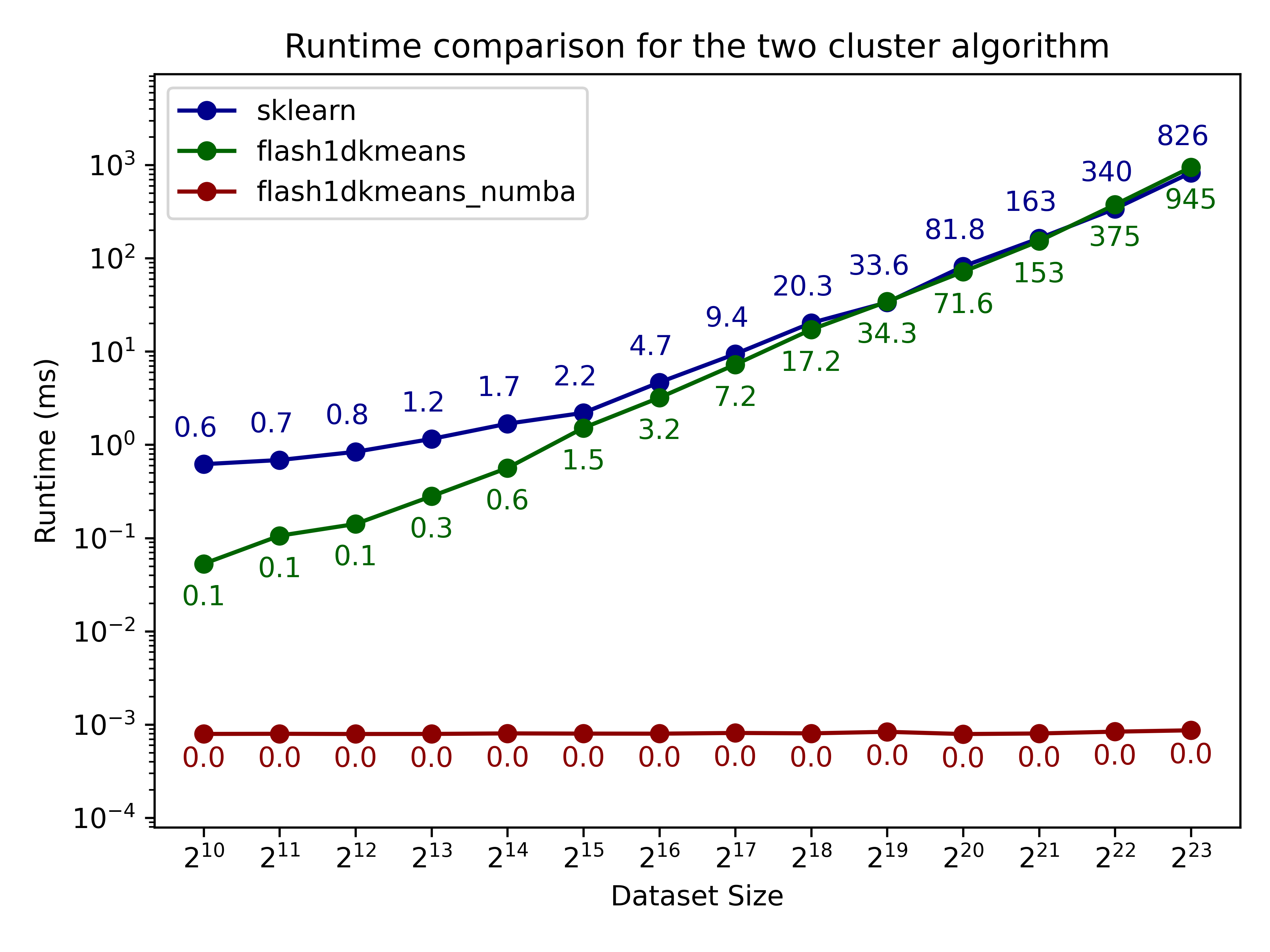

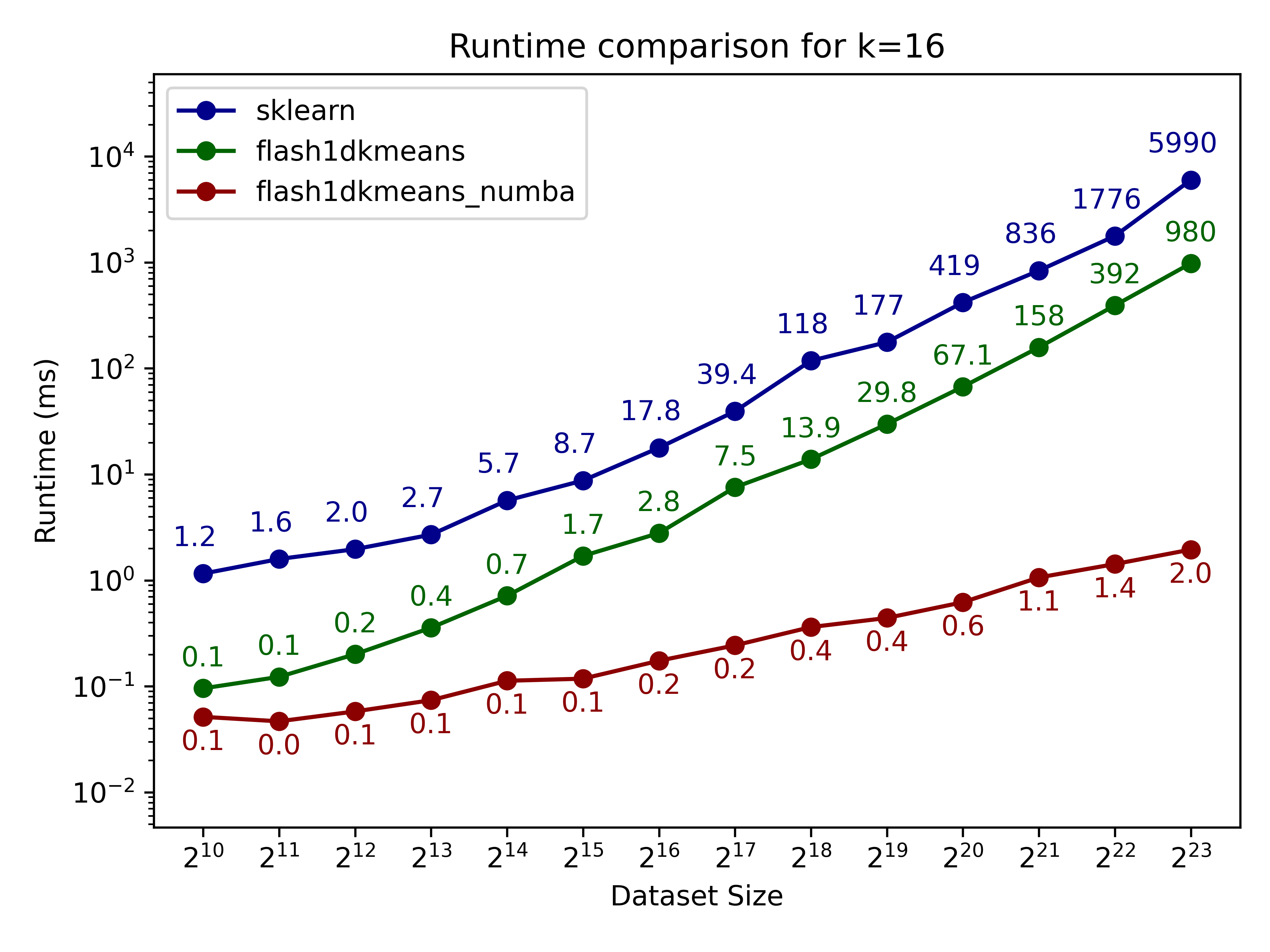

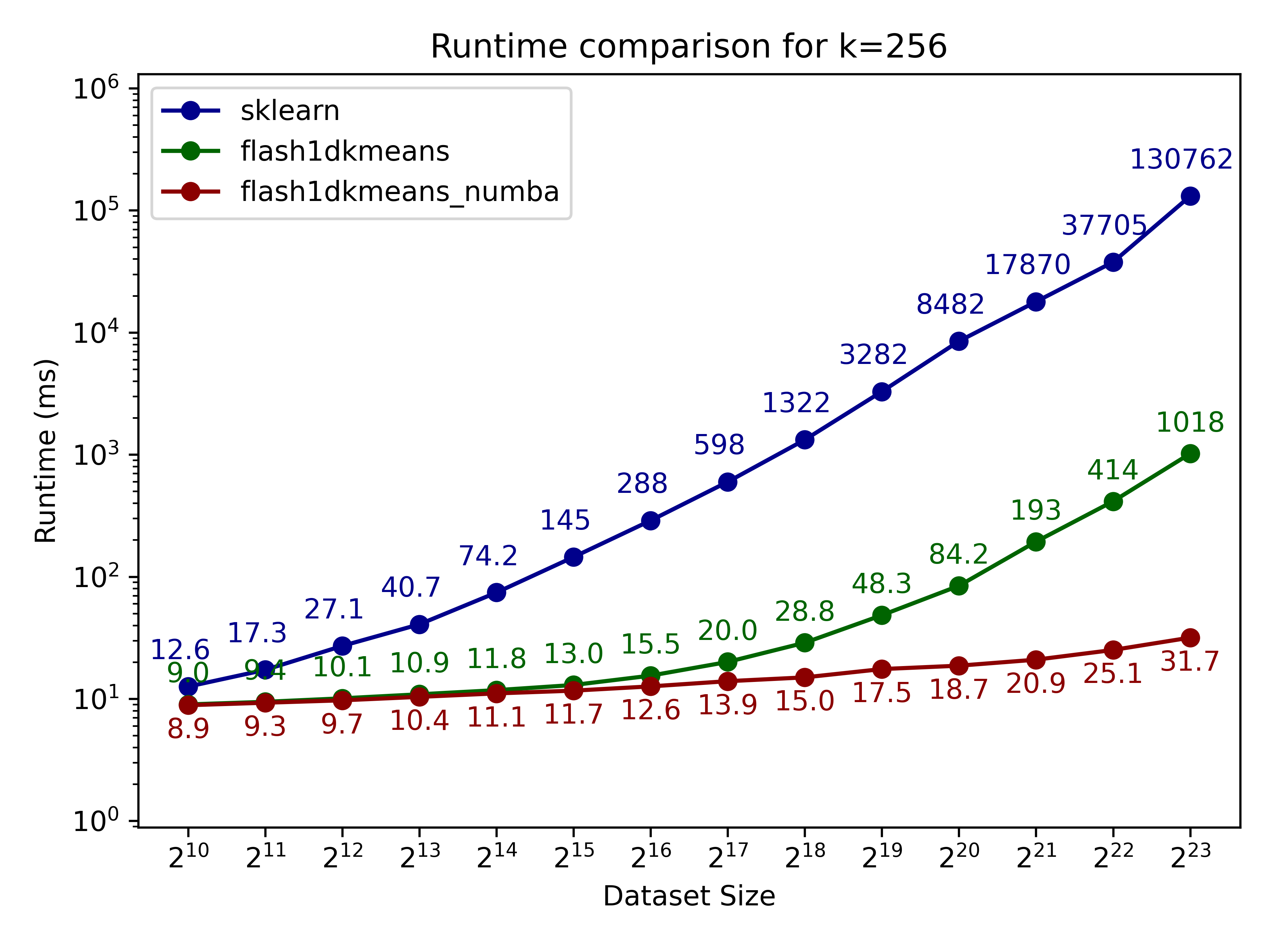

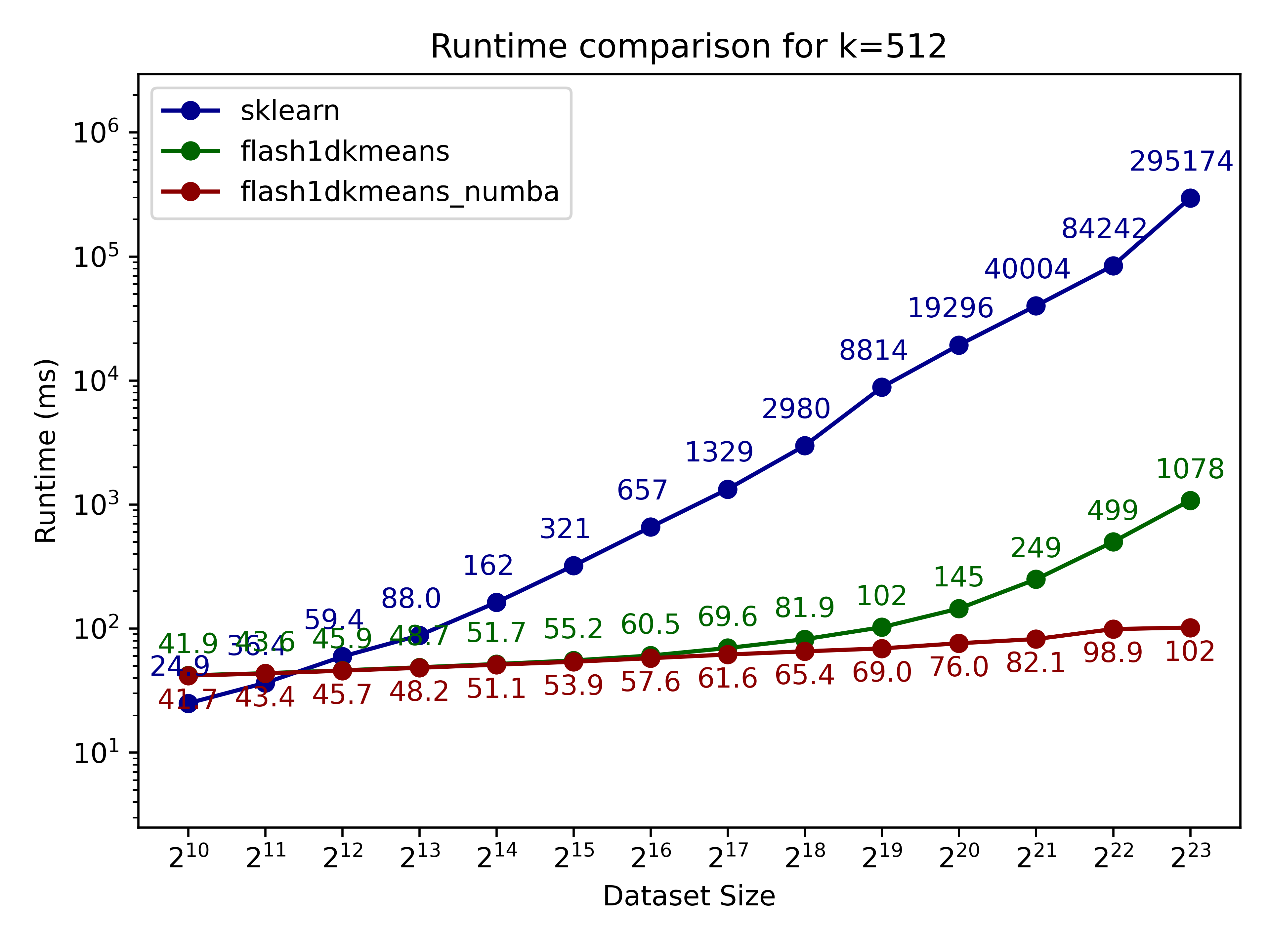

Here we compare flash1dkmeans against one of the most commonly used

In the figures below, we show the

-

flash1dkmeans measures the wrapper function

kmeans_1d, which includes the sorting and prefix sum calculation overheads. -

flash1dkmeans_numba measures the underlying Numba-accelerated functions, excluding the sorting and prefix sum calculation overheads. (A case where this performance is useful is when you only have to sort once, while calling

$k$ -means multiple times on different segments of the same data - or if you already have the sorted prefix sum calculations ready. Both happened to be the case for Any-Precision LLM.)

|

|

|

|

You can confirm that flash1dkmeans is several orders of magnitude faster, even when measured with the wrapper function, including the sorting and prefix sum calculation overheads.

These speeds are achieved while running an optimized but mathematically equivalent algorithm to sklearn’s implementation for the

Additionally, you can see that for the two-cluster algorithm, the algorithm indeed is

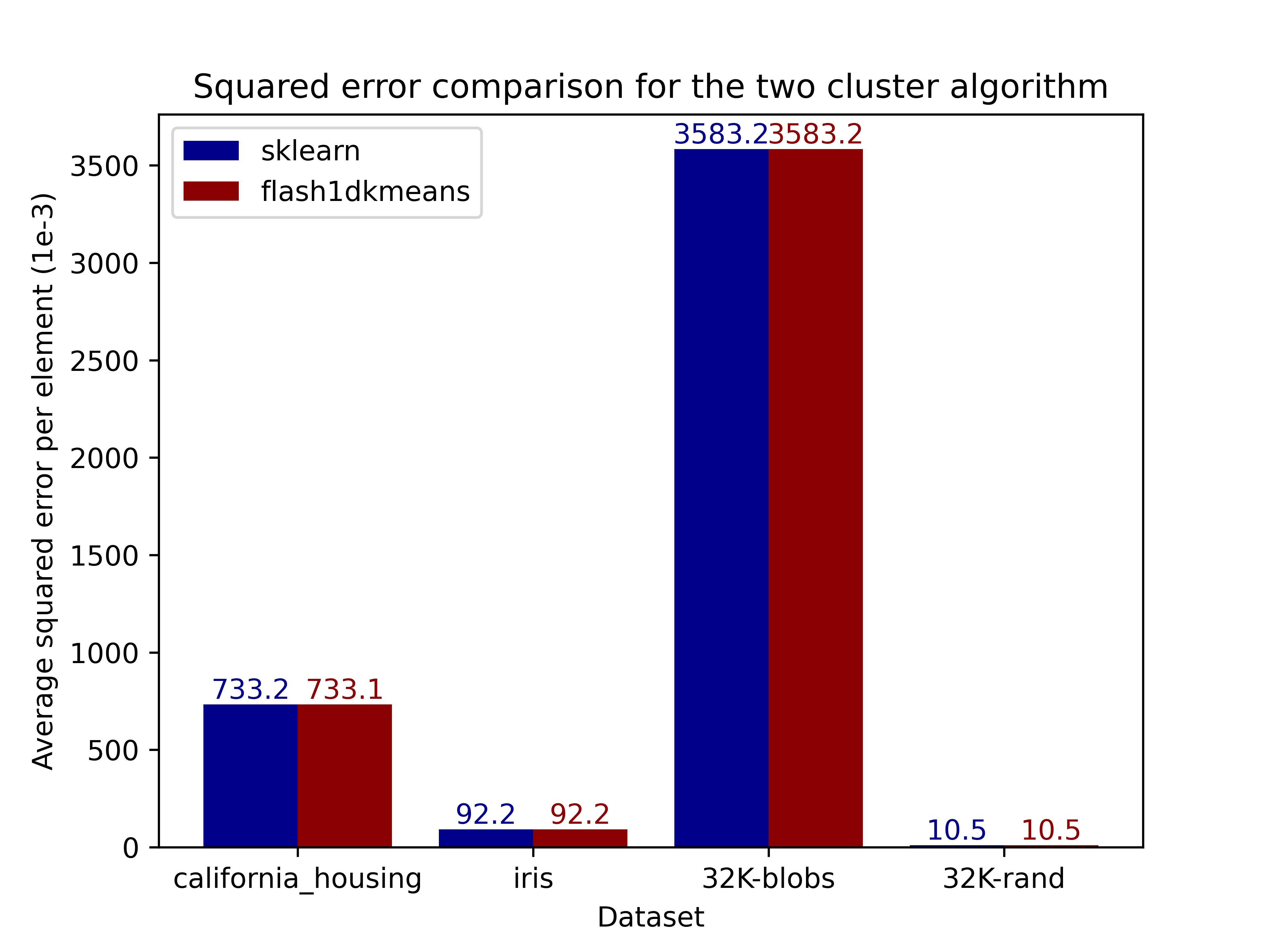

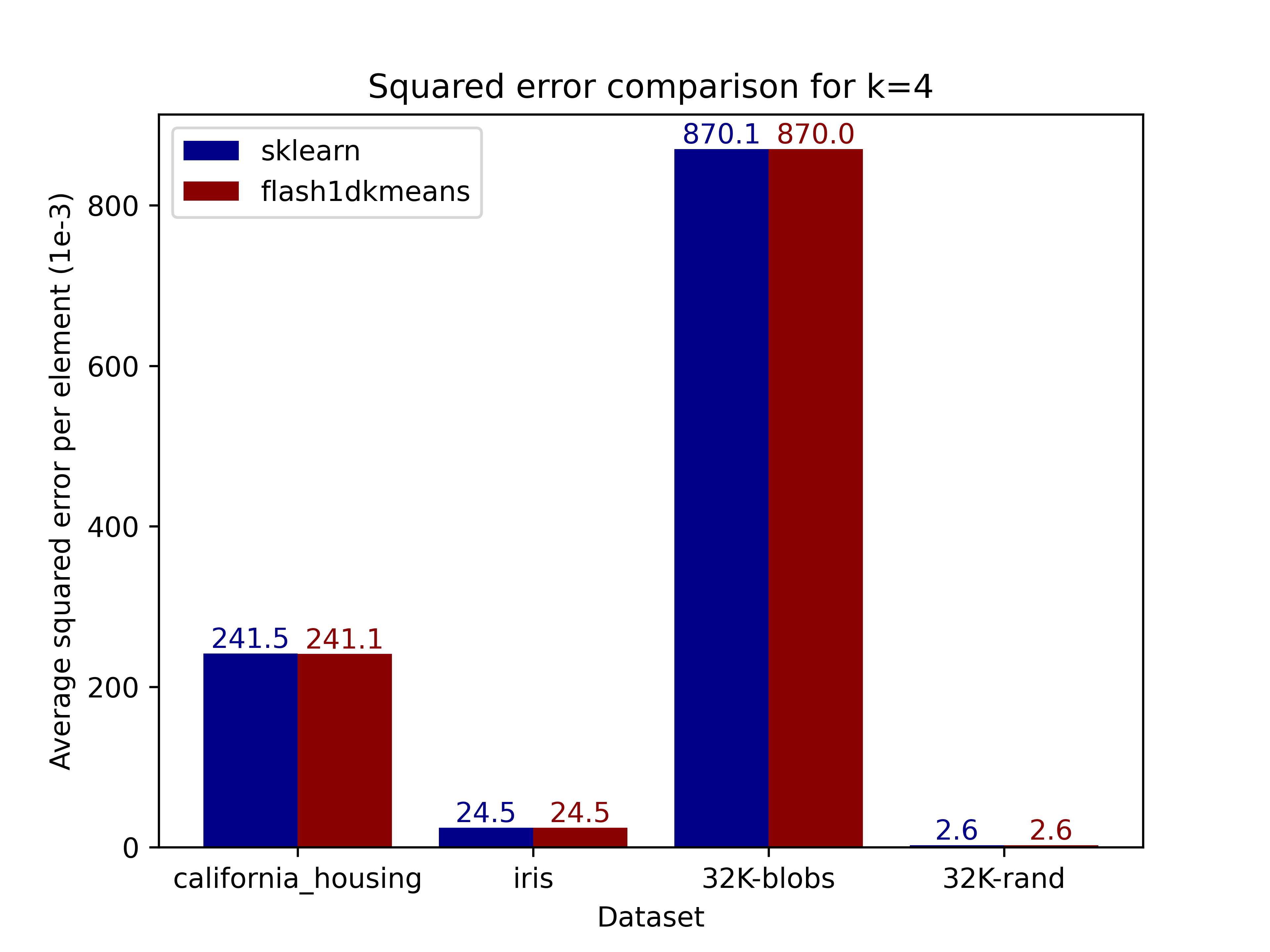

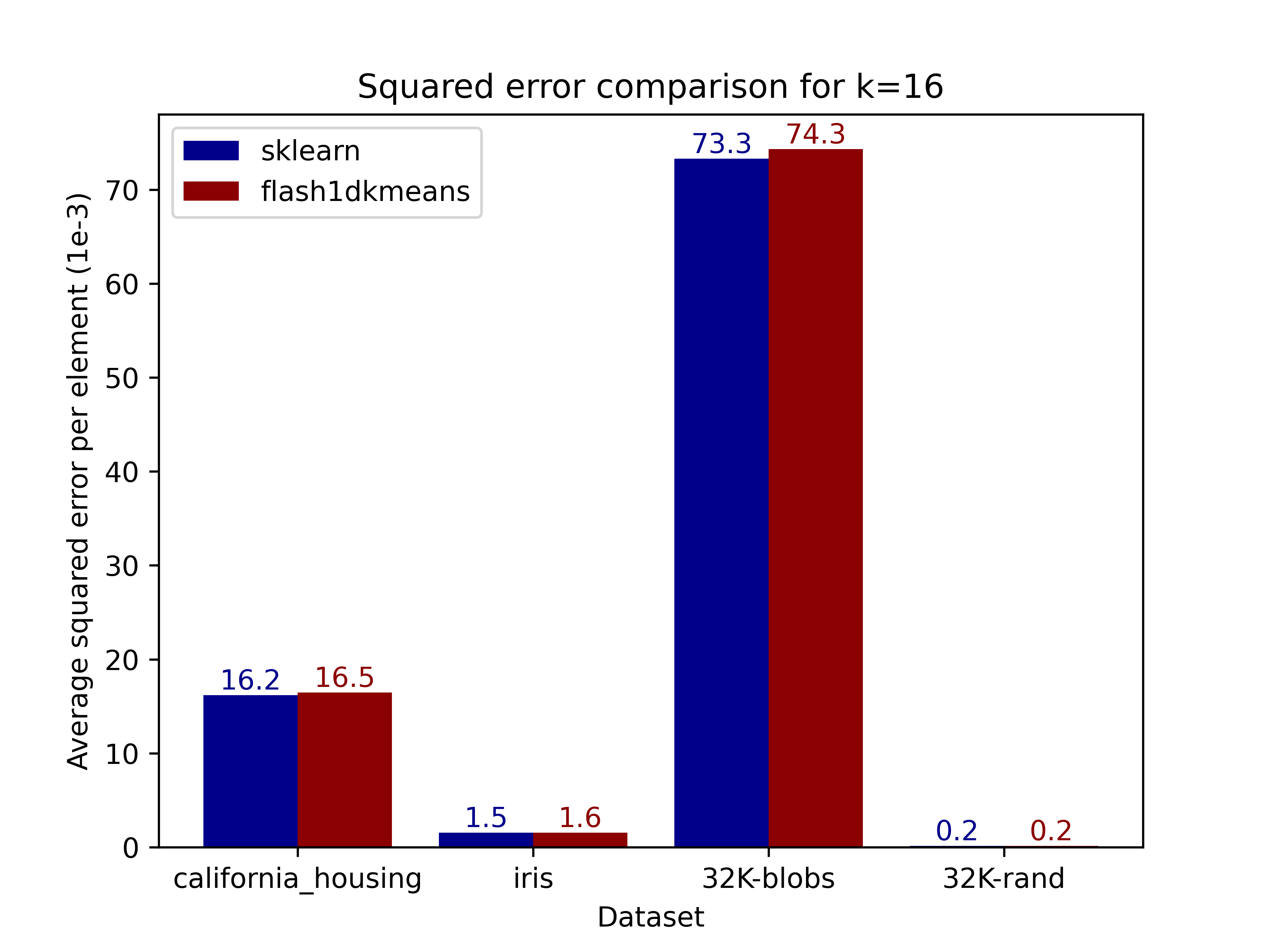

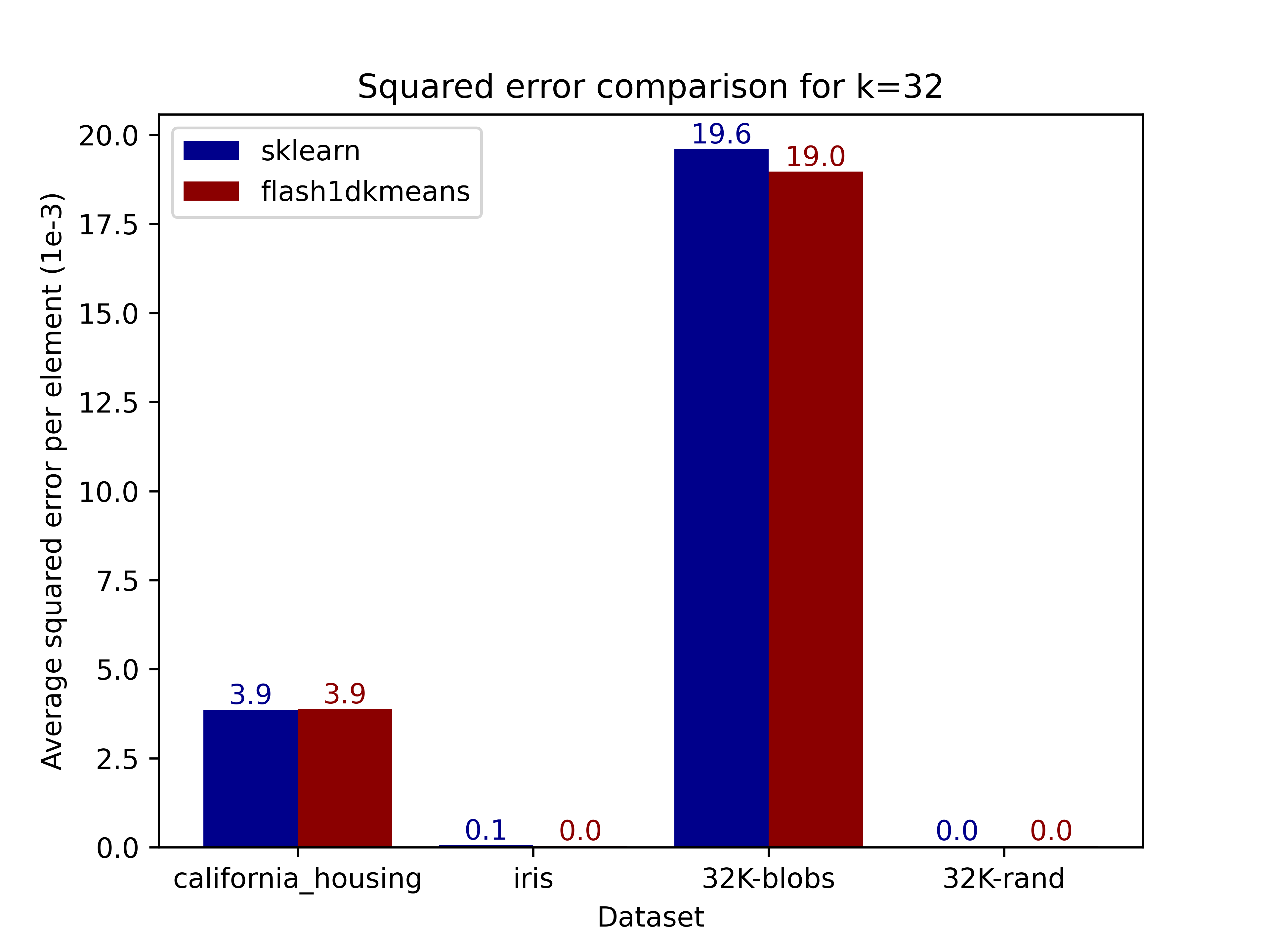

The figures below compare the squared error of the clusterings on real and generated datasets obtained using scikit-learn. Results demonstrate that flash1dkmeans indeed produces clustering results near identical to those of scikit-learn's

|

|

|

|

pip install flash1dkmeansfrom flash1dkmeans import kmeans_1d, kmeans_1d_two_clusters

data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]

k = 2

# The optimized k-means++ initialization and Lloyd's algorithm

centroids, labels = kmeans_1d(data, k)

# The faster two-cluster deterministic algorithm

centroids, labels = kmeans_1d_two_clusters(data)from flash1dkmeans import kmeans_1d

import numpy as np

data = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0])

weights = np.random.random_sample(data.shape)

k = 3

# The optimized k-means++ initialization and Lloyd's algorithm

centroids, labels = kmeans_1d(

data, k,

sample_weights=weights, # sample weights

max_iter=100, # maximum number of iterations

random_state=42, # random seed

)

# The faster two-cluster deterministic algorithm

centroids, labels = kmeans_1d_two_clusters(

data,

sample_weights=weights, # sample weights

)Optional arguments is_sorted can be set to True if the data is already sorted. Optional argument return_cluster_borders can be set to True to return the cluster borders (i.e. the indices where the clusters change) instead of the labels. Refer to the docstrings for more information.

The underlying Numba-accelerated function numba_kmeans_1d_k_clusters can be used directly for more control.

This is useful when the algorithm is run multiple times on different segments of the data, or to use within another Numba-accelerated function.

The list of available functions are as follows:

numba_kmeans_1d_two_clustersnumba_kmeans_1d_two_clusters_unweightednumba_kmeans_1d_k_clusternumba_kmeans_1d_k_cluster_unweighted

All of these functions assume the data is sorted beforehand.

from flash1dkmeans import numba_kmeans_1d_k_cluster

import numpy as np

n, k = 1024, 4

# Generate random data

data = np.random.random_sample(n)

data = np.sort(data)

# Generate random weights

weights = np.random.random_sample(data.shape)

# Calculate prefix sums

weights_prefix_sum = np.cumsum(weights)

weighted_X_prefix_sum = np.cumsum(data * weights)

weighted_X_squared_prefix_sum = np.cumsum(data ** 2 * weights)

middle_idx = n // 2

# Providing prefix sums reduces redundant calculations

# This is useful when the algorithm is run multiple times on different segments of the data

for start_idx, stop_idx in [(0, middle_idx), (middle_idx, n)]:

centroids, cluster_borders = numba_kmeans_1d_k_cluster( # Note that data MUST be sorted beforehand

data, k, # Note how the sample weights are not provided when the prefix sums are provided

max_iter=100, # maximum number of iterations

weights_prefix_sum=weights_prefix_sum, # prefix sum of the sample weights, leave empty for unweighted data

weighted_X_prefix_sum=weighted_X_prefix_sum, # prefix sum of the weighted data

weighted_X_squared_prefix_sum=weighted_X_squared_prefix_sum, # prefix sum of the squared weighted data

start_idx=start_idx, # start index of the data

stop_idx=stop_idx, # stop index of the data

random_state=42, # random seed

)Refer to the docstrings for more information.

This repository has been created to be used in Any-Precision LLM project,

where multiple 1D

However, the algorithm is general and can be used for any 1D

The mathematical proof of the algorithm's correctness and the detailed explanation of the algorithms can be found in my thesis.

To cite this work, you may reference the arXiv preprint:

@misc{hyun2024logtime1dkmeans,

title={Log-Time K-Means Clustering for 1D Data: Novel Approaches with Proof and Implementation},

author={Jake Hyun},

year={2024},

eprint={2412.15295},

archivePrefix={arXiv},

primaryClass={cs.DS},

url={https://arxiv.org/abs/2412.15295},

}