Plan for Speed: Dilated Scheduling for Masked Diffusion Language Models

This is the official implementation of the Dilated Unmasking Scheduler (DUS) paper for masked diffusion language models (MDLMs).

- Python 3.8+

- PyTorch 2.0+

- CUDA-compatible GPU (recommended)

git clone https://github.com/omerlux/DUS.git

cd DUS

pip install -r requirements.txt

- Quick Start

- Abstract

- Method Overview

- Results

- Files Included

- Supported Models

- Usage

- Key Functions

- Citation

- License

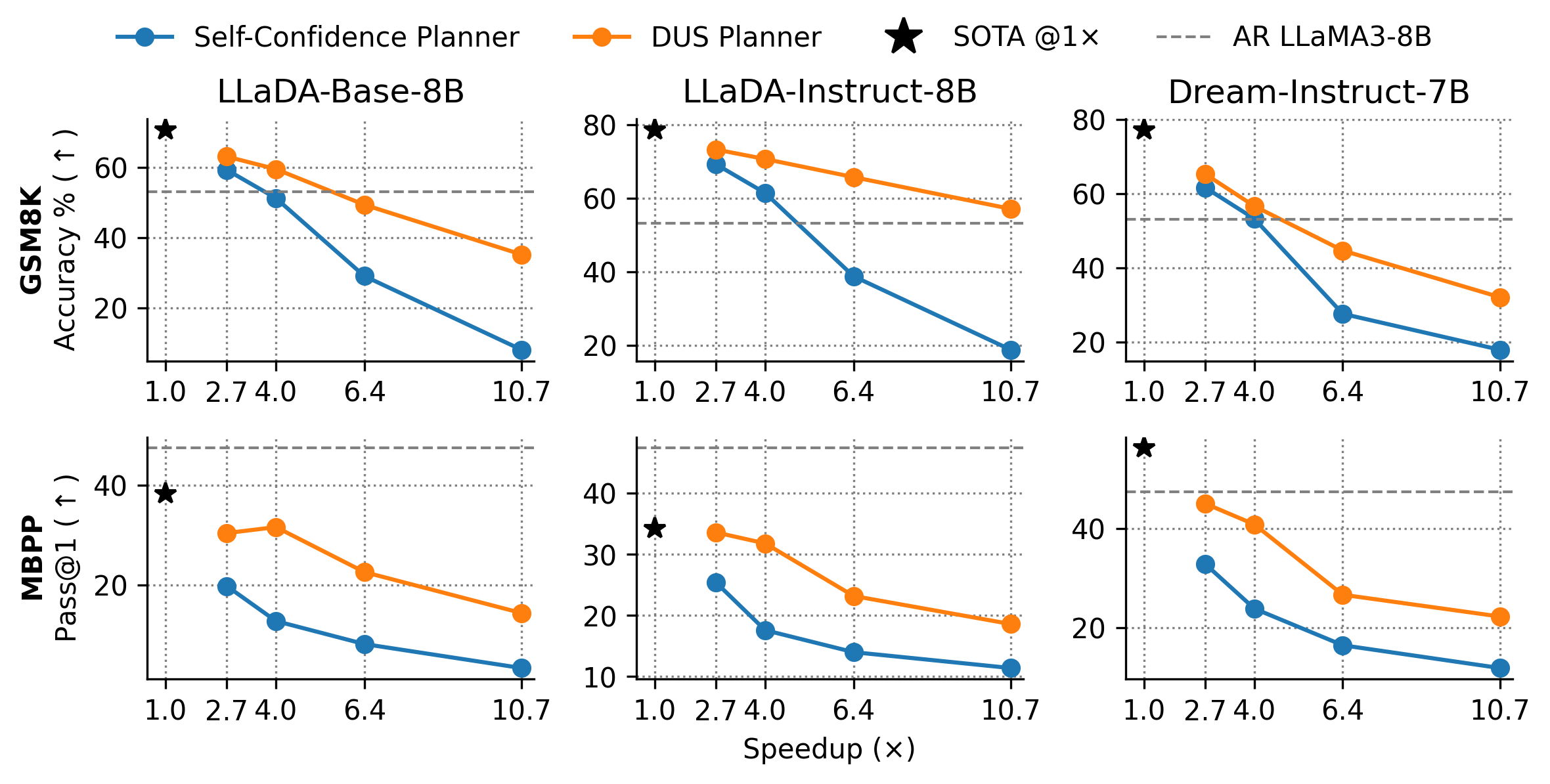

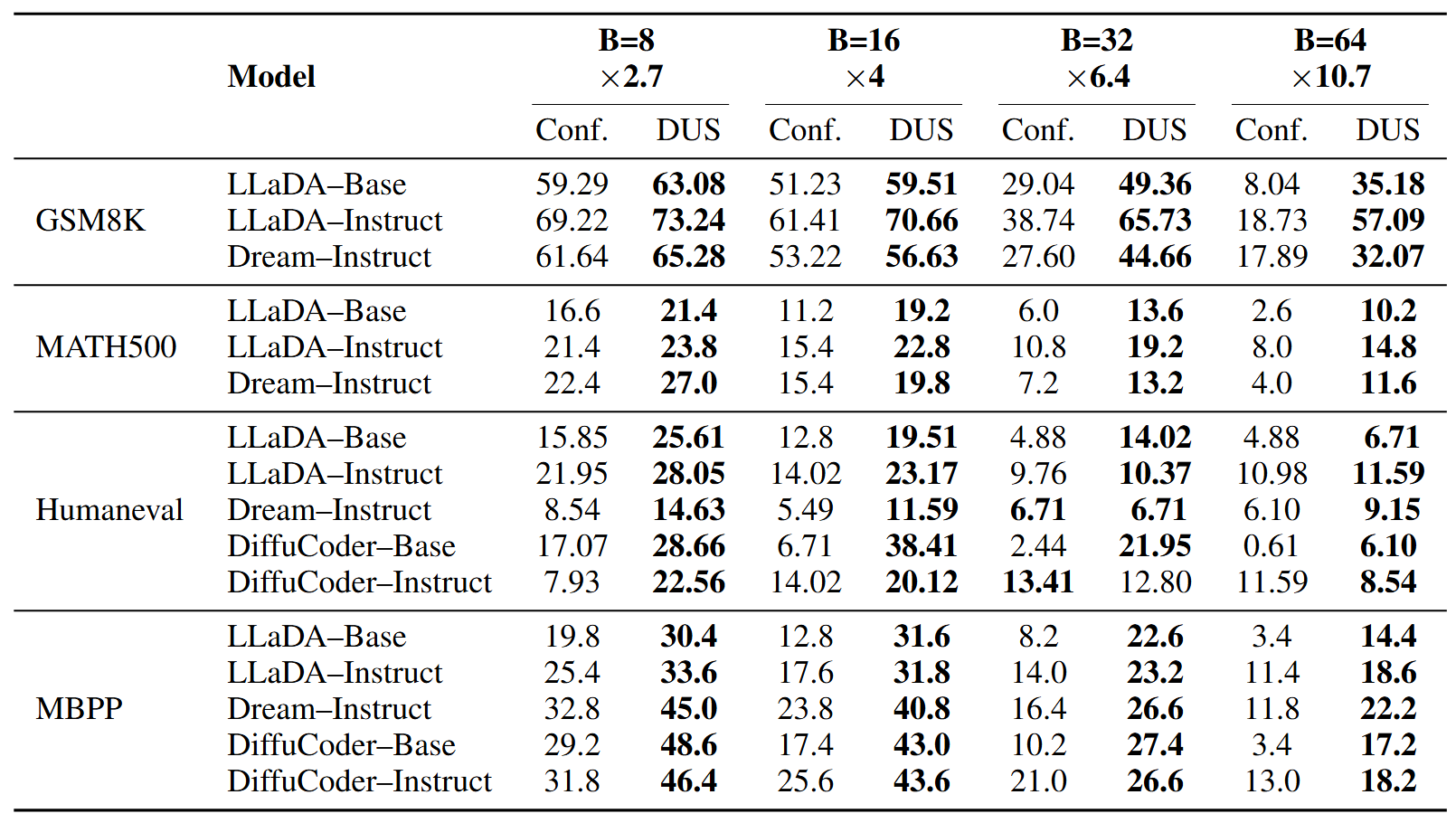

Masked diffusion language models (MDLMs) promise fast, non-autoregressive text generation, yet existing samplers, which pick tokens to unmask based on model confidence, ignore interactions when unmasking multiple positions in parallel and effectively reduce to slow, autoregressive behavior. We propose the Dilated Unmasking Scheduler (DUS), an inference-only, planner-model-free method that partitions sequence positions into non-adjacent dilated groups and unmasks them in parallel so as to minimize an upper bound on joint entropy gain at each denoising step. By explicitly trading off the number of network calls against generation quality, DUS recovers most of the performance lost under traditional parallel unmasking strategies. Across math (GSM8K, MATH500), code (HumanEval, MBPP) and general-knowledge benchmarks (BBH, MMLU-Pro), DUS outperforms confidence-based planners without modifying the underlying denoiser, and reveals the true speed-quality frontier of MDLMs.

Generation process visualization: blue indicates the start of unmasking, and different colors show tokens unmasked at different stages.

Modern large-scale LLMs almost universally use autoregressive (AR) decoding, predicting one token at a time in strict left-to-right order. While AR yields high local fidelity, it is subject to error accumulation and enforces strictly sequential generation, with one network call per token.

By contrast, masked diffusion treats the entire sequence as a masked ("noisy") latent and gradually unmasks tokens over a small number of denoising passes. In principle, this supports revealing tokens in any order and updating them fully in parallel, trading off the number of passes (and thus latency) against generation fidelity.

Almost all existing diffusion samplers collapse back to AR speed and quality by unmasking one token per step, using denoiser confidence or entropy to pick the next index. In effect, the denoiser becomes an implicit planner, but it still:

- Ignores interactions between multiple tokens unmasked in the same step

- Fails to account for how revealing $x_i$ would change the uncertainty of $x_j$ if both are revealed together

As soon as you try to unmask more than one token at once, quality plummets.
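The failure mode above can be seen on a toy two-position example. The sketch below is purely illustrative and not taken from this repository: it assumes a made-up joint distribution over two adjacent masked tokens, and a sampler that unmasks both positions in one step by taking the argmax of each position's marginal, as a confidence-based picker would.

```python
from collections import defaultdict
from typing import Dict, Tuple

# Made-up joint distribution over two adjacent masked positions.
JOINT: Dict[Tuple[str, str], float] = {
    ("New", "York"): 0.30,
    ("New", "Orleans"): 0.25,
    ("Los", "Angeles"): 0.45,
}


def marginals(joint):
    """Per-position marginals: what the denoiser predicts for each position on its own."""
    m1, m2 = defaultdict(float), defaultdict(float)
    for (a, b), p in joint.items():
        m1[a] += p
        m2[b] += p
    return dict(m1), dict(m2)


def parallel_argmax(joint):
    """Unmask both positions in the same step, each from its own marginal."""
    m1, m2 = marginals(joint)
    return max(m1, key=m1.get), max(m2, key=m2.get)


def incoherent_mass(joint):
    """Probability that independent per-position sampling yields a pair
    that has zero probability under the joint distribution."""
    m1, m2 = marginals(joint)
    valid = sum(m1[a] * m2[b] for (a, b) in joint)
    return 1.0 - valid


print(parallel_argmax(JOINT))            # ('New', 'Angeles')
print(round(incoherent_mass(JOINT), 3))  # 0.495
```

Each per-position choice is the most confident one, yet the pair never occurs under the joint. Unmasking one token per step avoids this because the second position is re-predicted conditioned on the first, which is exactly the sequential cost DUS tries to avoid for strongly dependent (adjacent) tokens.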

DUS is a model-agnostic, planner-model-free inference scheduler that requires no extra training or changes to the denoiser.

- Let $G$ be the sequence length. Set $K=\lceil\log G\rceil$ and let $\{C_1,\dots,C_K\}$ be a partition of the $G$ positions into $K$ groups of non-adjacent positions

- Each candidate group $C_k$ has on average $G/K$ tokens with minimal pairwise dependencies

For $k = 1, \dots, K$:

- Unmask all tokens in $C_k$ simultaneously

- Run one pass of the denoiser over the full sequence
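A minimal sketch of the steps above, assuming base-2 logarithms and a simple stride-$K$ partition in which position $i$ goes to group $i \bmod K$. The grouping actually used by generate_scheduled() in this repository may differ; MASK and the denoise callback are hypothetical stand-ins for the mask token and a real denoiser pass. Each iteration runs one denoiser pass and reveals every token of $C_k$ from that pass.

```python
from typing import Callable, List, Tuple

MASK = -1  # hypothetical mask-token id used only in this sketch


def dilated_groups(G: int) -> List[List[int]]:
    """Partition positions 0..G-1 into K = ceil(log2 G) groups.

    Assumption: position i is assigned to group i mod K, so members of the
    same group are K positions apart (non-adjacent whenever K >= 2).
    """
    K = max(1, (G - 1).bit_length())  # exact ceil(log2 G) for G >= 2
    return [list(range(k, G, K)) for k in range(K)]


def dus_decode(G: int, denoise: Callable[[List[int]], List[int]]) -> Tuple[List[int], int]:
    """Reveal one whole group per denoiser call; returns (sequence, number of calls)."""
    seq = [MASK] * G
    calls = 0
    for group in dilated_groups(G):
        preds = denoise(seq)  # one pass over the full, partially masked sequence
        calls += 1
        for i in group:
            seq[i] = preds[i]  # unmask every token in C_k simultaneously
    return seq, calls


def toy_denoise(seq: List[int]) -> List[int]:
    """Hypothetical denoiser: always predicts token 10*i at position i."""
    return [10 * i for i in range(len(seq))]


print(dilated_groups(8))             # [[0, 3, 6], [1, 4, 7], [2, 5]]
print(dus_decode(8, toy_denoise))    # ([0, 10, 20, 30, 40, 50, 60, 70], 3)
```

The stride partition is the simplest way to guarantee non-adjacency within each group; any other partition with that property would fit the same loop.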

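Under a first-order, fast-mixing Markov chain on token positions, non-adjacent tokens exhibit negligible mutual information, so the joint entropy of a group of non-adjacent tokens is approximately the sum of their individual entropies:

$$H(x_{C_k}) \approx \sum_{i \in C_k} H(x_i)$$

Hence, grouping non-adjacent tokens in each $C_k$ keeps the entropy gained in one step close to this per-token sum, so the whole group can be unmasked in parallel at little cost in quality.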
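To make the Markov-chain argument concrete, the toy below (an illustration of ours, not from the paper) uses a two-state symmetric chain that flips its state with probability p at each position, and computes the mutual information $I(x_0; x_d)$ between positions $d$ apart. With p = 0.3 the chain mixes quickly, and the information shared by positions drops geometrically with distance.

```python
import math


def binary_entropy(q: float) -> float:
    """Entropy in bits of a Bernoulli(q) variable."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * math.log2(q) - (1 - q) * math.log2(1 - q)


def mutual_information(p: float, d: int) -> float:
    """I(x_0; x_d) in bits for a stationary two-state symmetric Markov chain
    that flips state with probability p at each step.

    x_d differs from x_0 with probability q_d = (1 - (1 - 2p)^d) / 2, and the
    stationary distribution is uniform, so I = H(x_d) - H(x_d | x_0) = 1 - h(q_d).
    """
    q_d = (1 - (1 - 2 * p) ** d) / 2
    return 1.0 - binary_entropy(q_d)


for d in (1, 2, 3):
    print(f"distance {d}: I = {mutual_information(0.3, d):.4f} bits")
```

Adjacent positions share roughly 0.12 bits here, while positions two apart share well under a fifth of that, which is why a dilated group pays little for ignoring interactions among its members.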

- AR baseline: $G$ denoiser calls (one per token)

- DUS: $K=\lceil\log G\rceil$ calls $\Rightarrow$ $\approx G/\log G\times$ speedup

- Empirical result: DUS preserves up to 25% of quality even at 5×–10× fewer passes
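The cost comparison above is simple arithmetic. The blockwise variant below is an assumption on our part: it supposes the schedule is applied independently inside each block of block_length tokens, as the block_length argument in the Usage commands suggests. Under that assumption, a 256-token generation with 32-token blocks would take 8 × 5 = 40 denoiser calls instead of 256.

```python
from typing import Optional


def ceil_log2(n: int) -> int:
    """Exact ceil(log2 n) for n >= 1, using integer arithmetic."""
    return (n - 1).bit_length()


def dus_calls(gen_length: int, block_length: Optional[int] = None) -> int:
    """Denoiser calls for DUS over gen_length tokens.

    Assumption: with blocks, each block is decoded separately in
    ceil(log2 block_length) passes; without blocks, the whole sequence is one block.
    """
    if block_length is None:
        return max(1, ceil_log2(gen_length))
    n_blocks = -(-gen_length // block_length)  # integer ceiling division
    return n_blocks * max(1, ceil_log2(block_length))


for G, B in [(256, None), (256, 32), (1024, None)]:
    calls = dus_calls(G, B)
    print(f"G={G}, block={B or G}: AR {G} calls, DUS {calls} calls, {G / calls:.1f}x fewer")
```

With these settings the blockwise count gives 6.4× fewer calls than AR, while a single block over all 256 tokens gives 32×.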

By explicitly managing the number of unmasking steps via a dilated schedule, DUS uncovers the true speed–quality frontier of masked diffusion LMs—delivering sub-linear, any-order generation at practical fidelity.

DUS achieves significant speedups while maintaining competitive performance:

- Speed: Up to 5×-10× faster than autoregressive generation

- Quality: Preserves up to 75% of original performance quality

- Efficiency: Requires only ⌈log G⌉ denoising steps for sequence length G

- Benchmarks: Evaluated on GSM8K, MATH500, HumanEval, MBPP, BBH, and MMLU-Pro

For detailed results and visualizations, see our paper and website.

- eval_mdlm.py: Evaluation harness for masked diffusion language models

- generate.py: Core generation functions with the dilated unmasking scheduler

- requirements.txt: Required dependencies

This implementation supports:

- Dream models (Dream-org/Dream-v0-Base-7B, Dream-org/Dream-v0-Instruct-7B)

- DiffuCoder models (apple/DiffuCoder-7B-Base, apple/DiffuCoder-7B-Instruct)

- LLaDA models (GSAI-ML/LLaDA-8B-Base, GSAI-ML/LLaDA-8B-Instruct)

- Other compatible masked language models

- Install dependencies:

pip install -r requirements.txt

- Run evaluation with the confidence-based scheduler:

python eval_mdlm.py --tasks gsm8k --model mdlm_dist --model_args "model_path=GSAI-ML/LLaDA-8B-Base,gen_length=256,steps=64,block_length=32,remasking=low_confidence"

- Run evaluation with the dilated unmasking scheduler (DUS):

python eval_mdlm.py --tasks gsm8k --model mdlm_dist --model_args "model_path=GSAI-ML/LLaDA-8B-Base,gen_length=256,block_length=32,generation=mdlm_scheduled,scheduler=dilated,base=2,base_skip=1,confidence_threshold=0.3,remasking=low_confidence"

Alternatively, use the VS Code debugger with the provided launch configuration.
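When sweeping DUS settings from a script, the --model_args string can be assembled from a dictionary. This is a hypothetical helper, not part of the repository; it only reuses the flags and keys from the commands above, and returns the command as an argument list so no shell quoting is needed.

```python
from typing import Dict, List


def build_eval_cmd(task: str, model_args: Dict[str, object]) -> List[str]:
    """Assemble an eval_mdlm.py command line like the ones in the Usage section."""
    # --model_args is a comma-separated key=value string; dicts keep insertion order.
    args = ",".join(f"{k}={v}" for k, v in model_args.items())
    return ["python", "eval_mdlm.py", "--tasks", task, "--model", "mdlm_dist",
            "--model_args", args]


dus_args = {
    "model_path": "GSAI-ML/LLaDA-8B-Base",
    "gen_length": 256,
    "block_length": 32,
    "generation": "mdlm_scheduled",
    "scheduler": "dilated",
    "base": 2,
    "base_skip": 1,
    "confidence_threshold": 0.3,
    "remasking": "low_confidence",
}

cmd = build_eval_cmd("gsm8k", dus_args)
print(" ".join(cmd))
```

To launch the run, pass the list to subprocess.run(cmd, check=True); varying one dictionary entry (for example confidence_threshold) then gives a simple sweep.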

- generate(): Confidence-based generation with various remasking strategies (traditional approach)

- generate_scheduled(): Generation with the dilated unmasking scheduler (DUS), our proposed method

If you use this code in your research, please cite:

@article{luxembourg2025plan,
  title={Plan for Speed: Dilated Scheduling for Masked Diffusion Language Models},
  author={Luxembourg, Omer and Permuter, Haim and Nachmani, Eliya},
  journal={arXiv preprint arXiv:2506.19037},
  year={2025}
}

We thank the authors of LLaDA for their open-source implementation.

This project is licensed under the MIT License - see the LICENSE file for details.