… or “Making Robust LLM Computational Pipelines from Software Engineering Perspective”

Abstract

Large Language Models (LLMs) are powerful tools with diverse capabilities, but from a Software Engineering (SE) point of view (POV) they are unpredictable and slow. In this presentation we consider five ways to make SE pipelines that include LLMs more robust. We also consider a general methodological workflow for utilizing LLMs in “everyday practice.”

Here are the five approaches we consider:

- DSL for configuration-execution-conversion

    - Infrastructural, language-design level solution

- Detailed, well-crafted prompts

    - AKA “Prompt engineering”

- Few-shot training with examples

- Via a Question Answering System (QAS) and code templates

- Grammar-LLM chain of responsibility

- Testing with data types and shapes over multiple LLM results
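As a minimal sketch of the last two approaches in the list, the Python code below first tries a small regex "grammar" and falls back to an LLM only when the grammar fails (a chain of responsibility). It then accepts LLM answers only if they parse to the expected type and shape, and takes a majority vote over several calls. The `fake_llm` function is a hypothetical stand-in for a real LLM call, and every name here is an illustrative assumption, not the API of any package referenced in this document.

```python
import json
import re
from collections import Counter
from itertools import cycle
from typing import Callable, Optional, Tuple

# Hypothetical stand-in for a real LLM call: cycles through canned replies,
# one of which is not valid JSON, to mimic unpredictable output.
_replies = cycle([
    '{"value": 3, "unit": "km"}',
    "About three kilometers.",
    '{"value": 3, "unit": "km"}',
])

def fake_llm(prompt: str) -> str:
    return next(_replies)

def grammar_parse(text: str) -> Optional[dict]:
    """Fast, deterministic handler: a tiny regex 'grammar' for '<number> <unit>'."""
    m = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*(km|m|mi)\s*", text)
    if m is None:
        return None
    return {"value": float(m.group(1)), "unit": m.group(2)}

def has_expected_shape(obj: object) -> bool:
    """Type-and-shape test: a dict with exactly a numeric 'value' and a string 'unit'."""
    return (
        isinstance(obj, dict)
        and set(obj) == {"value", "unit"}
        and isinstance(obj["value"], (int, float))
        and not isinstance(obj["value"], bool)
        and isinstance(obj["unit"], str)
    )

def llm_parse(text: str, llm: Callable[[str], str], n: int = 3) -> Optional[dict]:
    """Slow handler: call the LLM n times, keep well-shaped results, take a majority vote."""
    valid = []
    for _ in range(n):
        try:
            obj = json.loads(llm(f"Extract value and unit as JSON: {text}"))
        except json.JSONDecodeError:
            continue  # malformed reply: discard it
        if has_expected_shape(obj):
            valid.append(json.dumps(obj, sort_keys=True))
    if not valid:
        return None
    winner, _count = Counter(valid).most_common(1)[0]
    return json.loads(winner)

def parse_distance(text: str, llm: Callable[[str], str] = fake_llm) -> Tuple[Optional[dict], str]:
    """Chain of responsibility: grammar first, LLM only as a fallback."""
    result = grammar_parse(text)
    if result is not None:
        return result, "grammar"
    return llm_parse(text, llm), "llm"
```

The design choice is that the cheap, predictable handler answers whenever it can, so the slow and unpredictable LLM is consulted only for inputs the grammar cannot parse, and its answers are never trusted without a type and shape check.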

Compared to constructing SE pipelines, Literate Programming (LP) offers a dual or alternative way to use LLMs. For that, LP needs support for and facilitation of:

- Convenient LLM interaction (or chatting)

- Document execution (weaving and tangling)
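To make "weaving and tangling" concrete, here is a minimal Python sketch, assuming a Markdown document whose code cells are fenced blocks tagged `python`. Tangling extracts the code into one program; weaving, in this simplified version, runs each cell and appends its printed output to the document. The function names and the cell format are assumptions for illustration, not the mechanism used by the notebooks or packages referenced in this document.

```python
import contextlib
import io
import re

FENCE = "`" * 3  # built programmatically so this example can itself sit inside a fenced block
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)

def tangle(document: str) -> str:
    """Extract the Python code cells, in document order, into one program."""
    return "\n".join(m.group(1) for m in CODE_BLOCK.finditer(document))

def weave(document: str) -> str:
    """Run each code cell in a shared namespace and append its printed output after the cell."""
    namespace: dict = {}

    def run(match: re.Match) -> str:
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(match.group(1), namespace)  # only run documents you trust
        return match.group(0) + "\n" + FENCE + "\n" + buffer.getvalue() + FENCE

    return CODE_BLOCK.sub(run, document)

# A tiny example document with one code cell.
doc = "Intro\n" + FENCE + "python\nx = 2\nprint(x * 21)\n" + FENCE + "\nEnd\n"
print(tangle(doc))
print(weave(doc))
```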

The discussed LLM workflows methodology is supported in Python, Raku, and Wolfram Language (WL). Support in R is provided via Python (with “reticulate”, [TKp1]).

The presentation includes multiple examples and showcases.

Modeling of the LLM utilization process is hinted at but not discussed.

Here is a mind-map of the presentation:

Here are the notebooks used in the presentation:

- Presentation’s notebook

- Robust-LLM-Pipelines-SouthFL-DSSG-Python.ipynb

- Robust-LLM-Pipelines-SouthFL-DSSG-Raku.ipynb

General structure of LLM-based workflows

All systematic approaches to unfolding and refining workflows based on LLM functions will include several decision points and iterations to ensure satisfactory results.

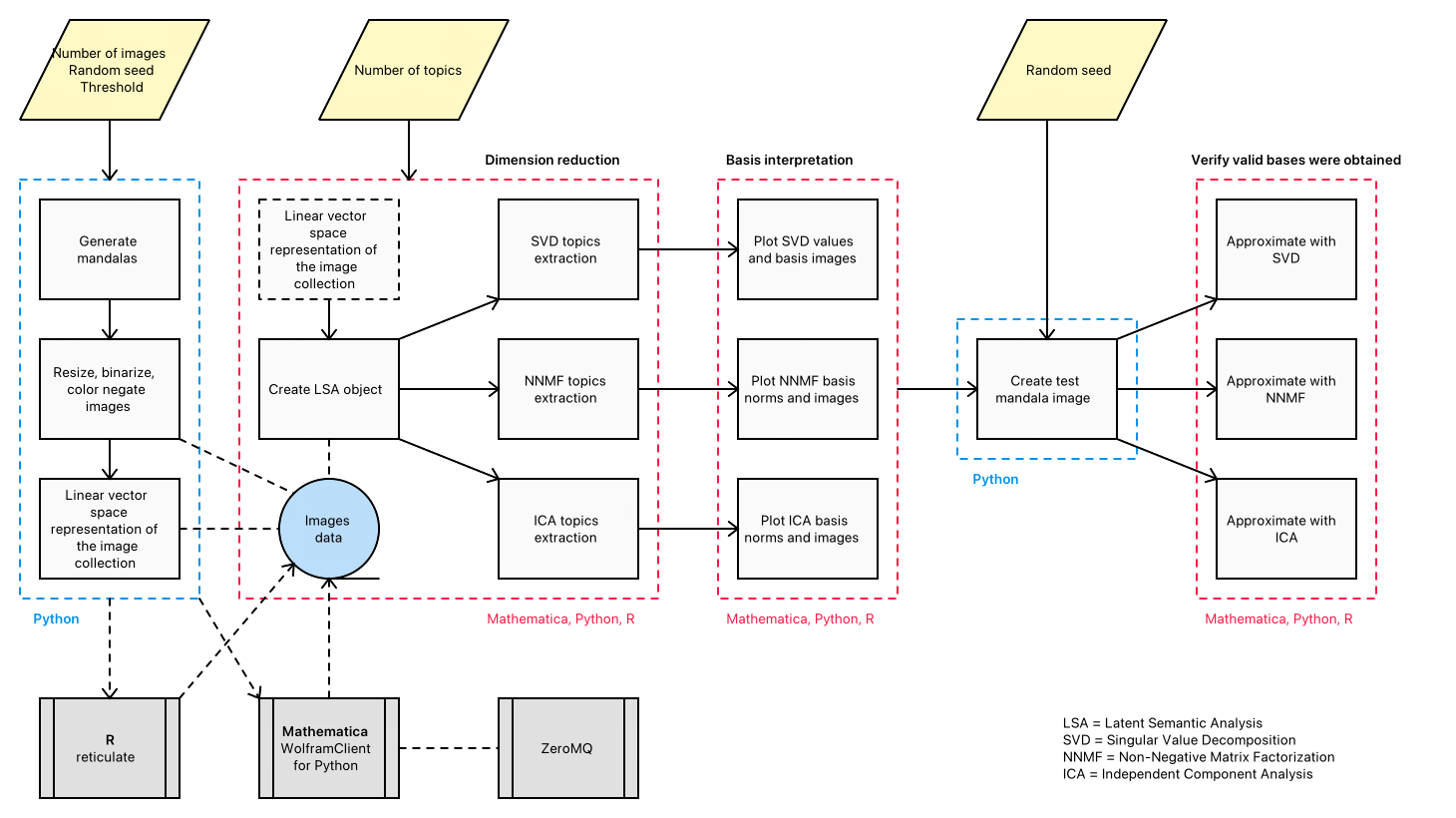

This flowchart outlines such a systematic approach:
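The flowchart is not translated into code here. As a loose sketch of the "decision points and iterations" idea only, the Python function below tries progressively refined prompts and stops at the first result that passes a check. The `toy_llm` function is a hypothetical stand-in for a real LLM, and the function and parameter names are assumptions made for illustration.

```python
from typing import Callable, List, Optional, Tuple

def run_with_refinement(
    llm: Callable[[str], str],
    prompt_variants: List[str],
    is_satisfactory: Callable[[str], bool],
    task: str,
) -> Tuple[Optional[str], List[str]]:
    """Try progressively refined prompts until a result passes the check."""
    log: List[str] = []
    for template in prompt_variants:
        result = llm(template.format(task=task))
        if is_satisfactory(result):           # decision point: accept the result
            log.append(f"accepted: {template}")
            return result, log
        log.append(f"rejected: {template}")   # otherwise iterate with the next refinement
    return None, log                          # nothing passed: a human or another model must step in

# Hypothetical stand-in for a real LLM: terse only when told to be.
def toy_llm(prompt: str) -> str:
    return "42" if "only the number" in prompt else "The answer is 42."

result, log = run_with_refinement(
    toy_llm,
    ["{task}", "{task} Reply with only the number."],
    str.isdigit,
    "What is 6 times 7?",
)
print(result)
print(log)
```

Keeping the log of accepted and rejected prompts makes each iteration auditable, which helps when deciding whether to refine the prompt further, add examples, or switch models.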

References

Articles, blog posts

[AA1] Anton Antonov, “Workflows with LLM functions”, (2023), RakuForPrediction at WordPress.

Notebooks

[AAn1] Anton Antonov, “Workflows with LLM functions (in Raku)”, (2023), Wolfram Community.

[AAn2] Anton Antonov, “Workflows with LLM functions (in Python)”, (2023), Wolfram Community.

[AAn3] Anton Antonov, “Workflows with LLM functions (in WL)”, (2023), Wolfram Community.

Packages

Raku

[AAp1] Anton Antonov, LLM::Functions Raku package, (2023-2024), GitHub/antononcube. (raku.land)

[AAp2] Anton Antonov, LLM::Prompts Raku package, (2023-2024), GitHub/antononcube. (raku.land)

[AAp3] Anton Antonov, Jupyter::Chatbook Raku package, (2023-2024), GitHub/antononcube. (raku.land)

Python

[AAp4] Anton Antonov, LLMFunctionObjects Python package, (2023-2024), PyPI.org/antononcube.

[AAp5] Anton Antonov, LLMPrompts Python package, (2023-2024), GitHub/antononcube.

[AAp6] Anton Antonov, JupyterChatbook Python package, (2023-2024), GitHub/antononcube.

[MWp1] Marc Wouts, jupytext Python package, (2021-2024), GitHub/mwouts.

R

[TKp1] Tomasz Kalinowski, Kevin Ushey, JJ Allaire, RStudio, Yuan Tang, reticulate R package, (2016-2024).

Videos

[AAv1] Anton Antonov, “Robust LLM pipelines (Mathematica, Python, Raku)”, (2024), YouTube/@AAA4Predictions.

[AAv2] Anton Antonov, “Integrating Large Language Models with Raku”, (2023), The Raku Conference 2023 at YouTube.