The AI SRE you want by your side at 3 a.m.

RunLLM is an always-on AI SRE that integrates with your stack, investigates alerts, and gets you to resolution faster.

Built for Trust. Trusted in Production.

Why an AI SRE?

Your observability tools are great at alerting. RunLLM tells you what's wrong and what to do about it, in minutes.

Improve Uptime

Recover faster with evidence-backed investigations and clear next steps.

Reduce Alert Fatigue

Cut the noise and fire drills that distract and burn out your team.

Prevent Repeat Incidents

Spot risk early and learn from every incident to stop recurring issues.

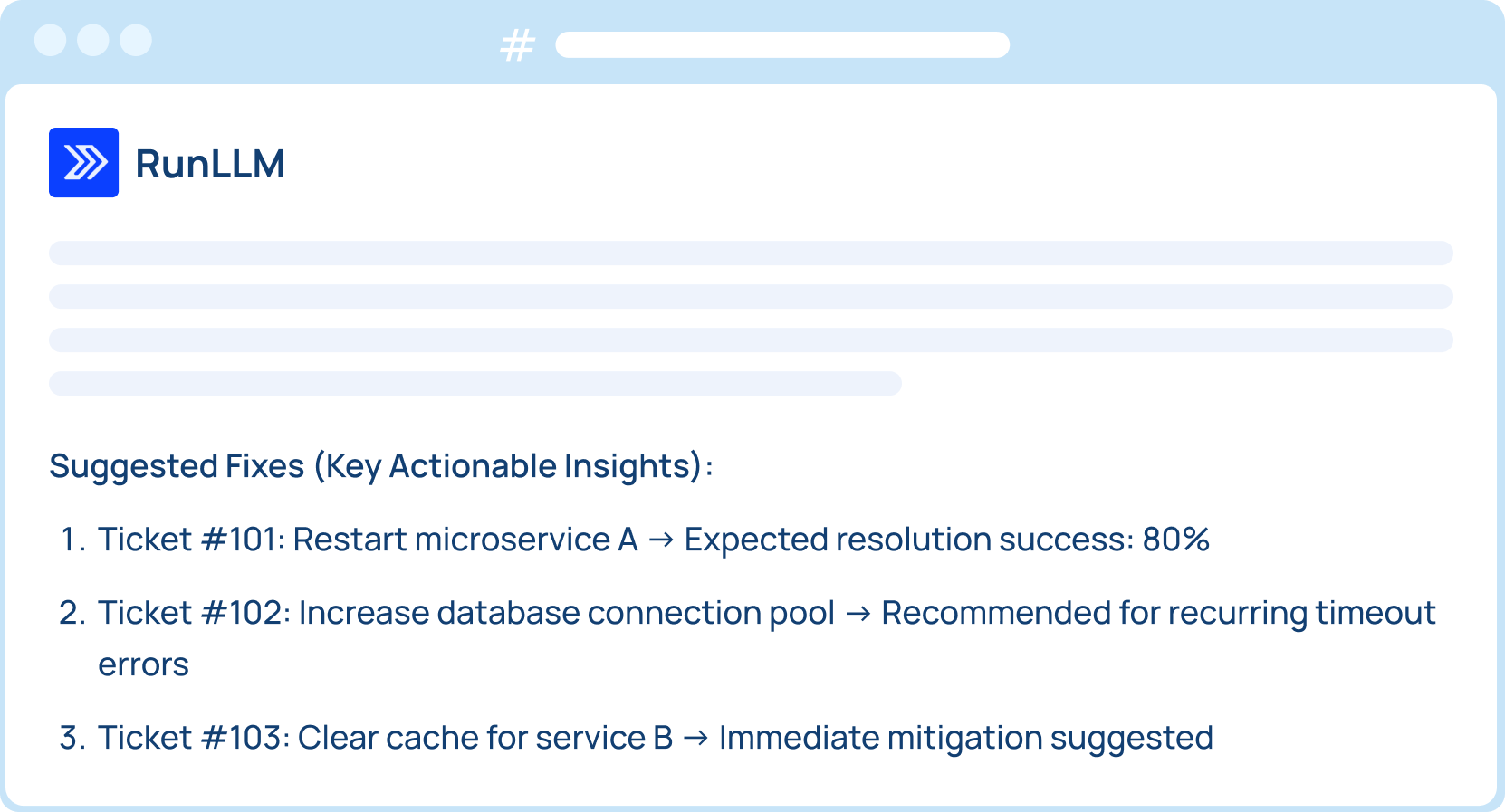

The RunLLM AI SRE

RunLLM correlates alerts, logs, metrics, traces, and tickets in minutes, then guides you through RCA, mitigation, and postmortems.

Integrates

Connects to your observability tools, code, ticketing, docs, and chat so every investigation starts with full context.

Investigates

When alerts fire, RunLLM correlates evidence across your stack and delivers clear next steps.

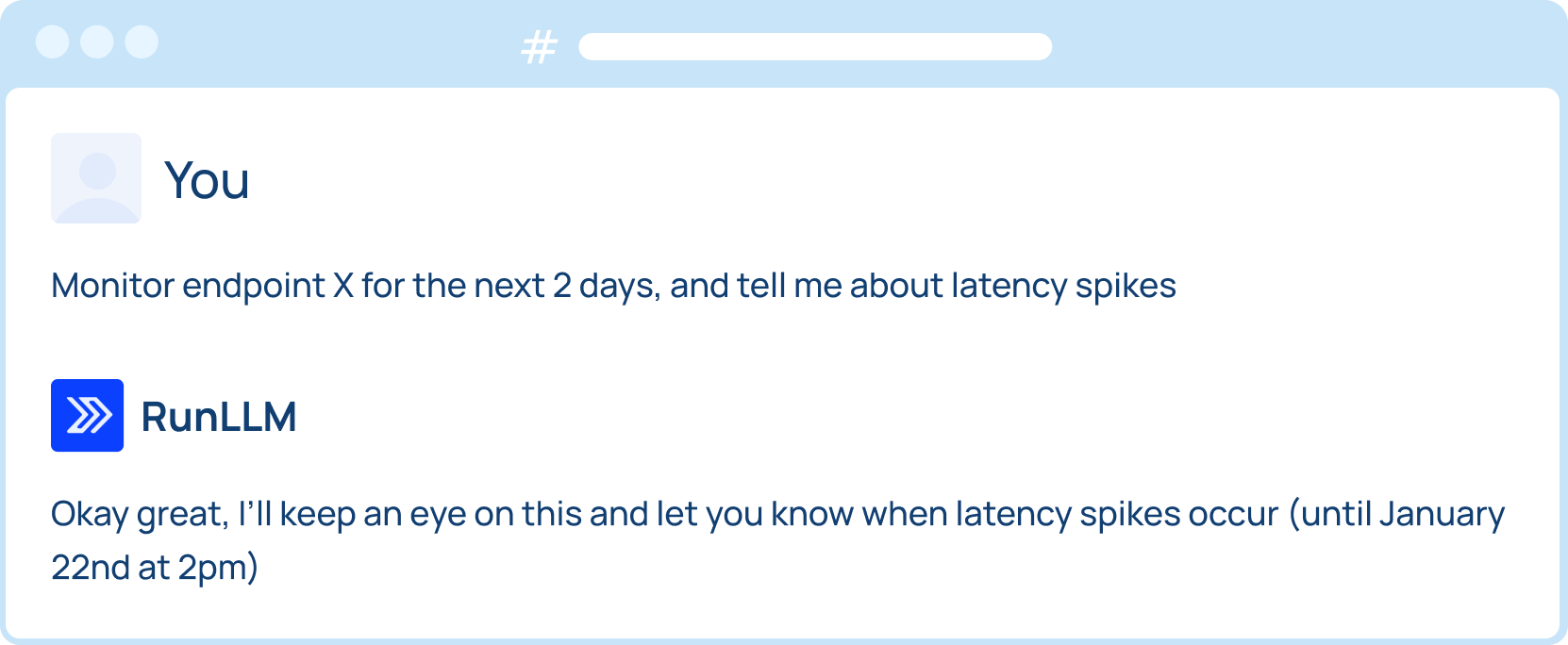

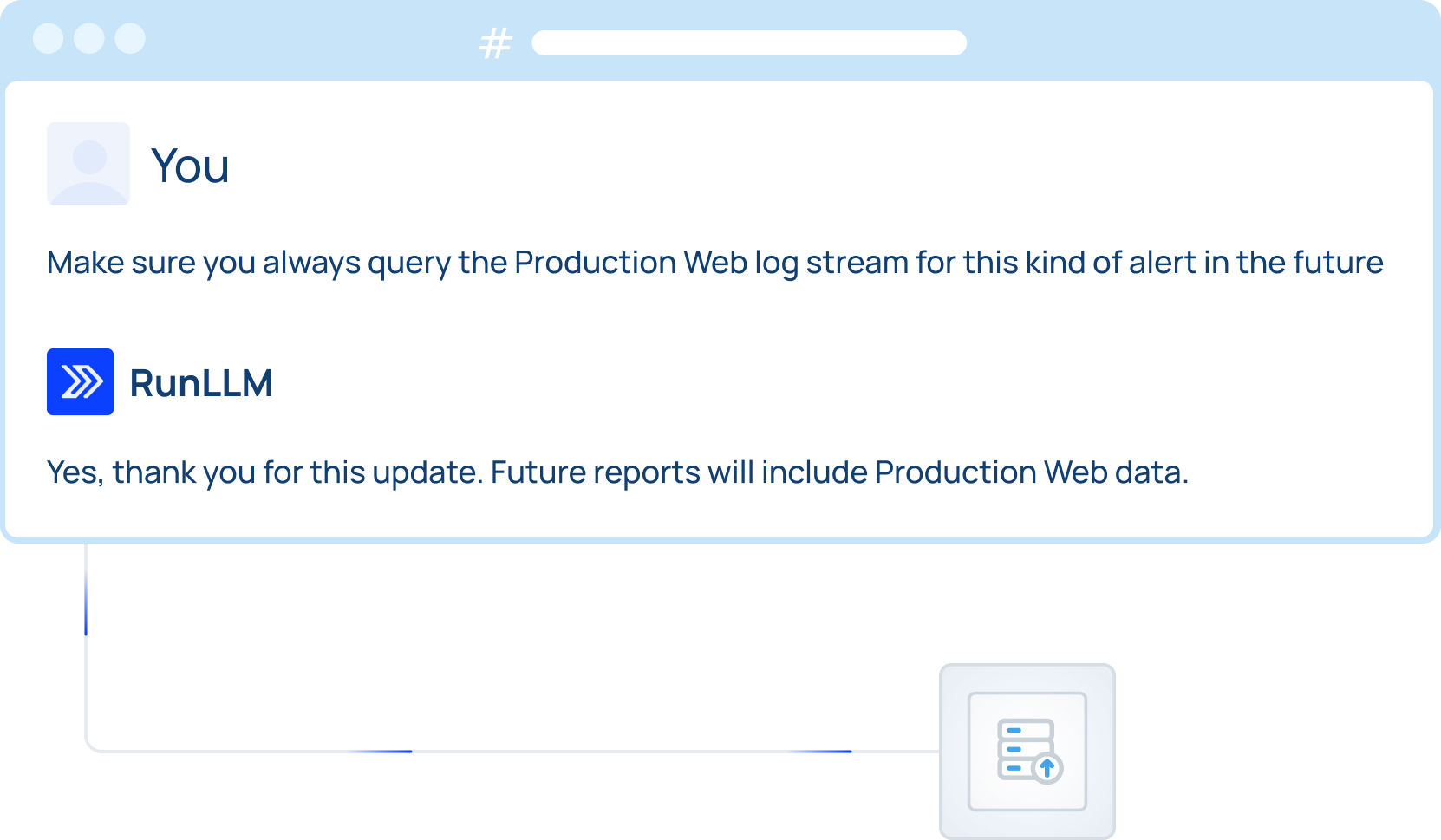

Learns

Improves with every investigation and user-provided correction, lowering MTTR over time.

Prevents

Continuously analyzes incidents, logs, and customer tickets to surface risks early, before customers are impacted.

Why RunLLM

Resolve Faster. Sleep Better.

For things that go bump in the night, RunLLM investigates across observability, deploys, tickets, and code so you can put incidents to bed faster.

Day-One Value

Connect your tools and see results quickly, without a long setup project.

Get live in days, not weeks, using the stack you already run today

Simply connect your observability tools without installing anything on your infrastructure

Ramp new team members to on-call confidence in weeks, not months

Safe by Default

Start read-only, then expand permissions when you trust the outputs.

RunLLM starts in read-only mode, investigating without making changes

OAuth-based access uses scoped permissions your tools already support

Requires approval before taking actions like opening PRs on your behalf

Rapid RCA

Evidence-backed investigations and clear next steps.

Correlates evidence across your telemetry, deploys, tickets, code, and docs

Answers in minutes, sparing engineers hours of hunting across tools

Delivers prioritized next steps for mitigation with verification checks

Your Agent, Your Way

Works where your team works, from alert to analysis.

Slack-first delivery, with a full UI when you need to go deeper

Customizable outputs and handoffs (format, verbosity, routing)

Ask follow-up questions to keep investigating without starting over

Continuously Learns

Every incident and correction improves investigations over time.

Learns which checks and queries work best for each alert pattern

Reuses proven investigation steps from similar past incidents

Captures tribal knowledge so expertise never walks out the door

Always-on Expertise

Gives every engineer veteran-level guidance during live incidents.

Makes lessons from past incidents easy to apply without pulling in senior engineers

Provides clear verification steps so fixes are confirmed under pressure

Powered by UC Berkeley Research

RunLLM was founded by PhDs and professors from UC Berkeley’s world-renowned Computer Science Department and its AI and LLM research center, RISELab.

With deep expertise in AI, LLMs, data systems, and scalable infrastructure, our team applies cutting-edge research to solve the hardest real-world technical challenges.

Read the Latest

From thought leadership to product guides, we have resources for you.
