Computer Vision

Computer Vision

ConvMixer-768_32

Combines large-kernel depthwise convolutions for spatial mixing with pointwise convolutions for channel mixing in a simple, MLP-style block.

Useful for research, ablation studies, and lightweight models, oTering transformer-like performance with very few architectural components.

Computer Vision

Computer Vision

ConvNeXt-T

Modernized CNN incorporating transformer-era design cues like large kernels, fewer activations, and simplified blocks.

Performs well in high-resolution tasks (detection/segmentation) whileretaining convolutional eTiciency, making it great for real-time industrial vision.

Computer Vision

Computer Vision

DeeplabV3plus-Resnet50

DeepLabv3+ uses an encoder-decoder architecture with atrous spatial pyramid pooling (ASPP) to capture multi-scale context and refine object boundaries for precise segmentation.

Widely used in autonomous driving, medical imaging, and satellite imagery where accurate pixel-level class labels are critical.

Computer Vision

Computer Vision

DepthAnythingV2-Small

DepthAnythingV2 uses a transformer-based encoder-decoder architecture with multi-scale feature fusion to generate high-resolution, accurate depth maps from monocular or multi-view RGB inputs.

Applied in robotics, AR/VR, autonomous navigation, and 3D scene reconstruction where precise spatial understanding of the environment is required.

Computer Vision

Computer Vision

EfficientNetV2-S

Uses compound scaling and fused-MBConv blocks to achieve fast training and high accuracy across image resolutions.

Suited for production-scale cloud deployments where both accuracy and training speed matter (e.g., large-dataset classification).

Computer Vision

Computer Vision

EfficientViT-L2

Designed for efficient vision transformers using linear attention, structured pruning, and hardware-friendly operator choices.

Great for edge AI deployments that want transformer accuracy with mobile-level latency—e.g., drones, AR glasses, and security cameras.

Computer Vision

Computer Vision

Facenet-MobilenetV1

FaceNet-Mobilenet combines a lightweight MobileNet backbone with a triplet-loss-trained embedding network to generate compact, discriminative facial feature vectors for identity verification.

Used for mobile and edge authentication, smartphone unlock, attendance tracking, and verification systems where low-latency, on-device recognition is critical.

Multimodal

Multimodal

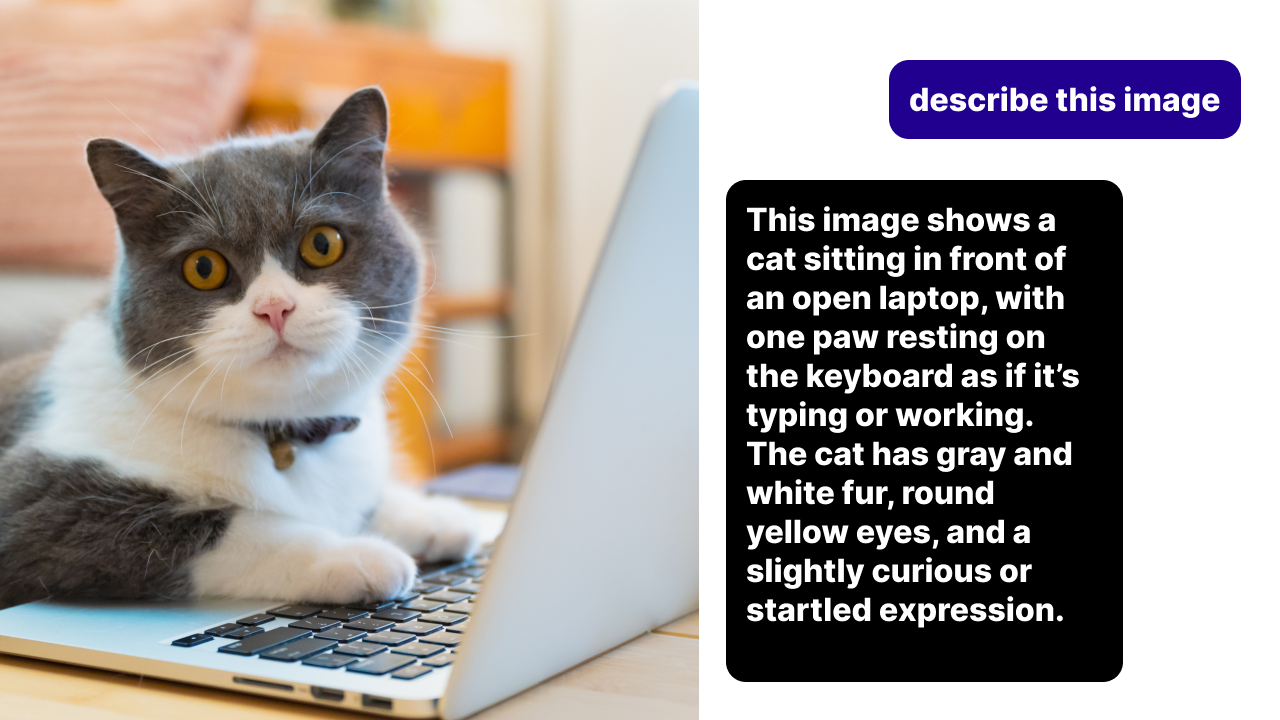

LLaVA-Onevision-Qwen2

LLaVA OneVision integrates a large language model with a visual encoder to perform multimodal reasoning, allowing the model to generate text outputs conditioned on visual input.

Suitable for visual question answering, interactive AI assistants, and reasoning over diagrams or documents combining image and text information

Multimodal

Multimodal

LongCLIP-B16

LongCLIP extends CLIP with a memory-efficient architecture to handle high-resolution and long-sequence images, enabling robust image-text alignment over larger visual contexts.

Useful for zero-shot large-scale image retrieval, document understanding, and multimedia search where high-resolution image-text matching is needed.

Computer Vision

Computer Vision

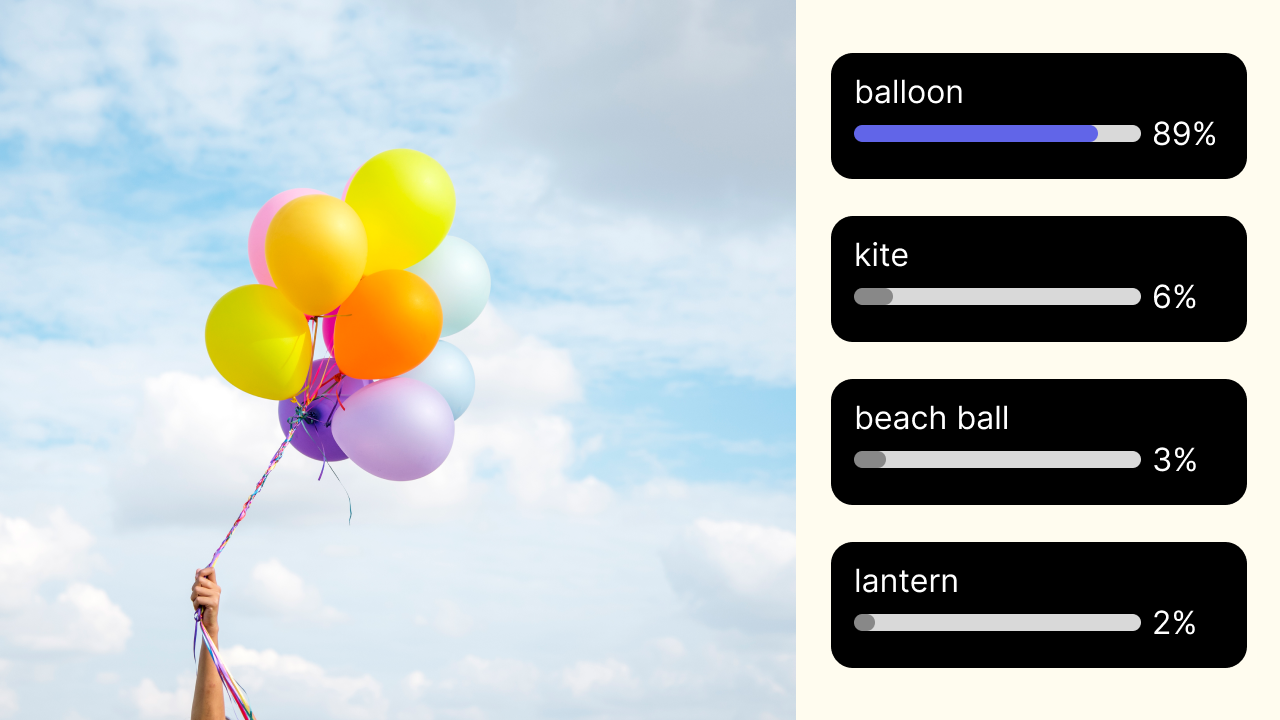

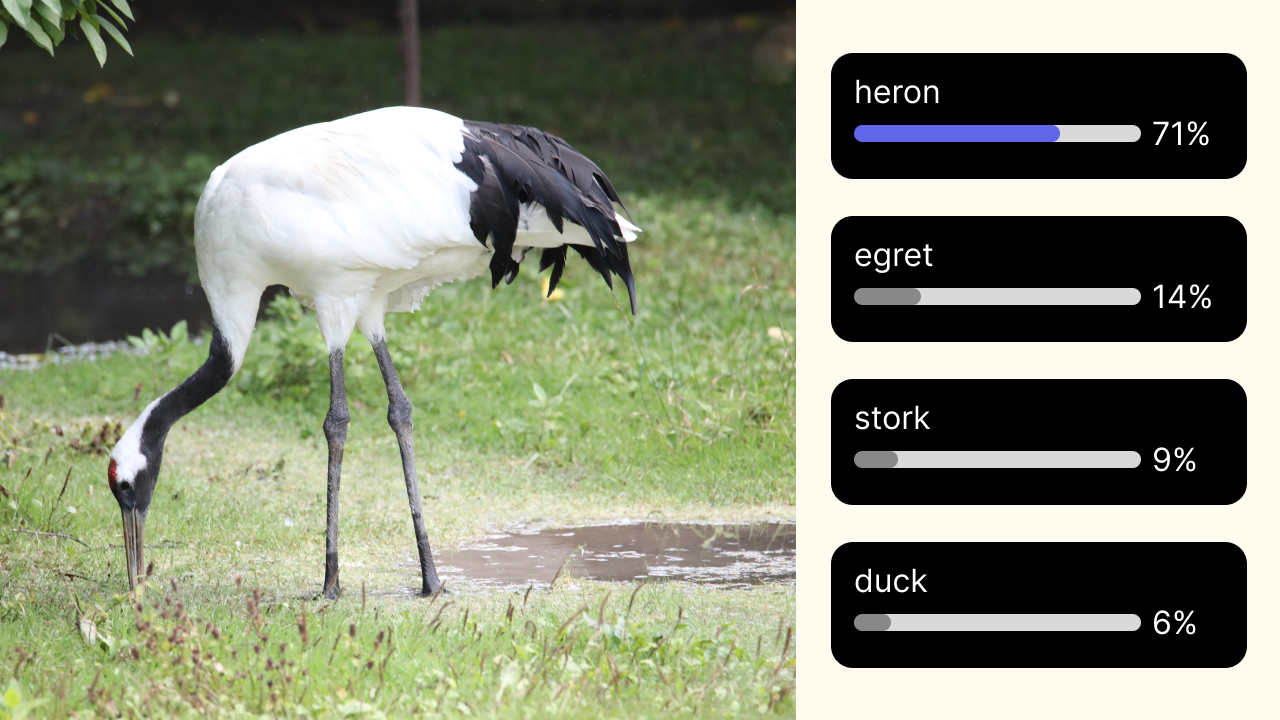

MobileNetV2

Employs inverted residual blocks with linear bottlenecks for highly eTicient, low-latency inference.

Ideal for on-device vision tasks such as mobile apps, robotics, and embedded systems where power and compute are limited

Multimodal

Multimodal

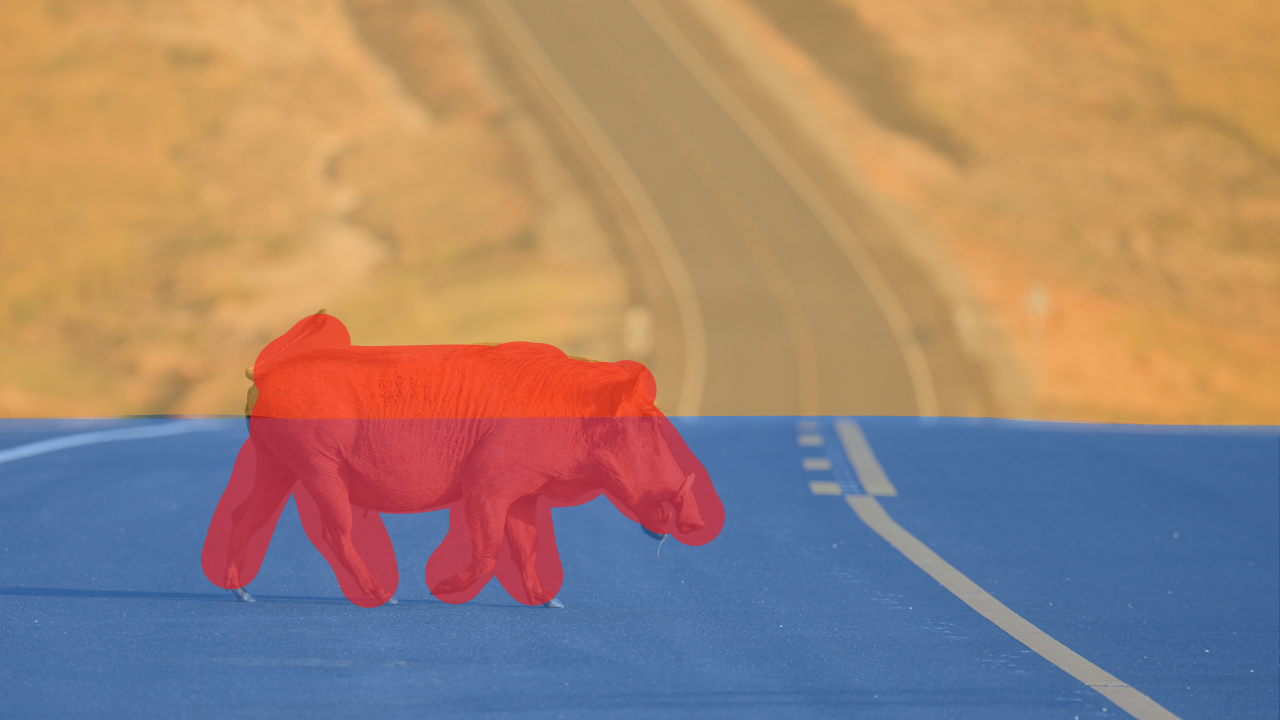

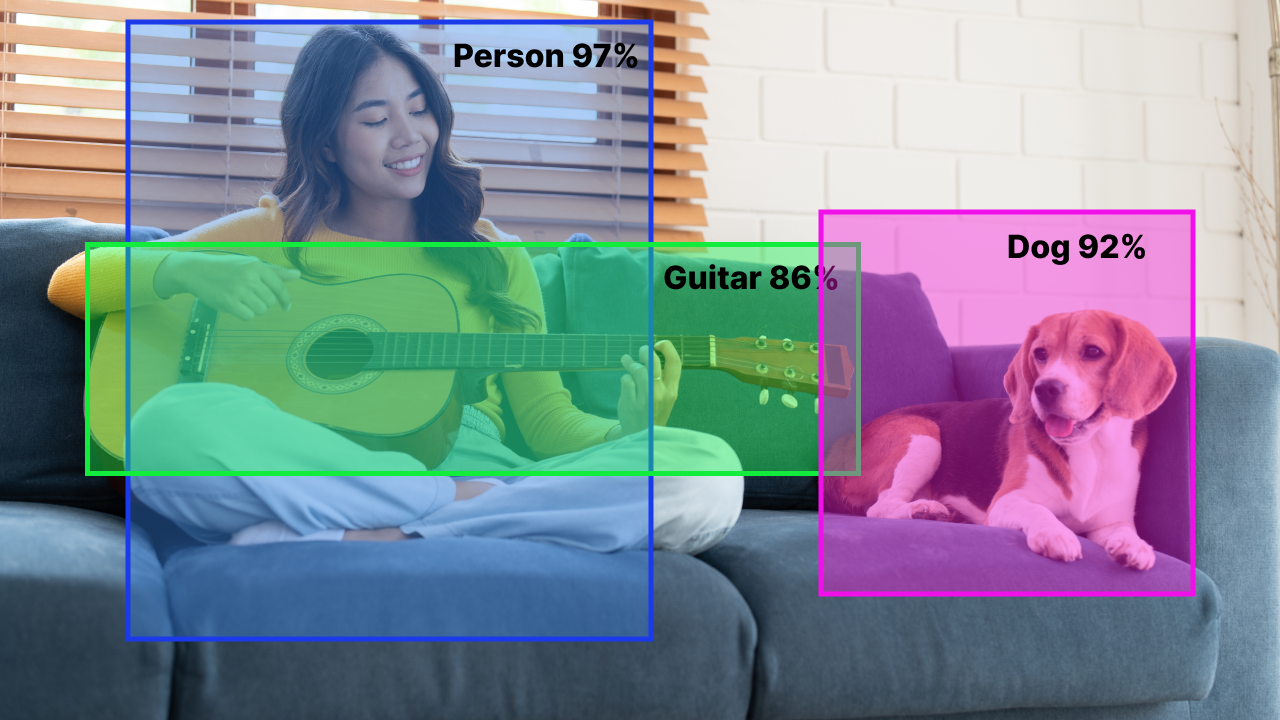

OWLViT-B32

OWL-ViT is a zero-shot open-vocabulary detector that aligns visual features with text embeddings from a pre-trained language model, supporting flexible object detection without task-specific training.

Ideal for open-vocabulary detection scenarios such as robotics, wildlife monitoring, and general object detection where new object categories may appear dynamically.

Computer Vision

Computer Vision

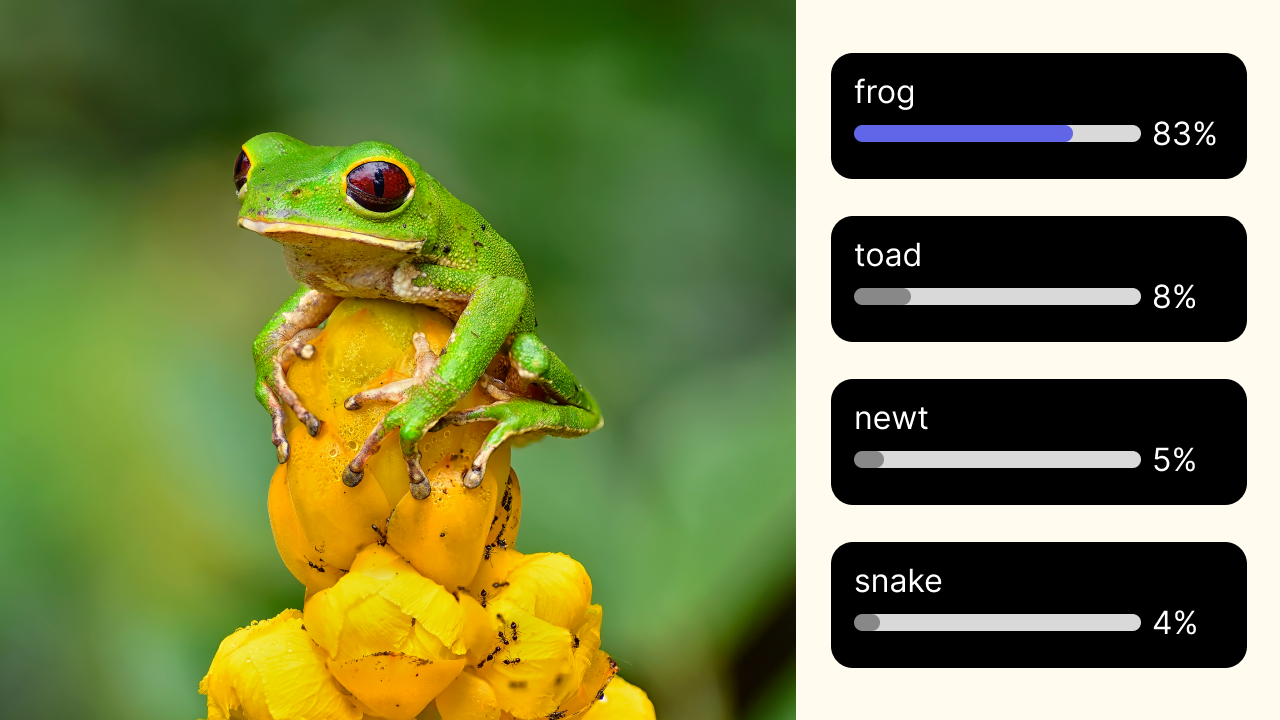

ResNet-50

Uses deep residual blocks that stabilize training and enable very deep CNN architectures.

Strong baseline for classification and widely used as a feature extractor in detection, segmentation, and re-ID pipelines.

Computer Vision

Computer Vision

RetinaFace-Resnet50

RetinaFace is a high-precision, single-stage face detector using a multi-task CNN to predict bounding boxes, facial landmarks, and dense feature maps for accurate detection under varying poses and occlusions.

Ideal for real-time face detection in surveillance cameras, video conferencing, and access control systems where robust detection is required

Computer Vision

Computer Vision

RTMPose-M

RTMPose is a high-speed, high-accuracy keypoint detector using an efficient CNN backbone, decoupled head, and refined training recipe for robust multi-person pose estimation.

Used for real-time human pose tracking in sports analytics, fitness apps, surveillance, driver monitoring, gesture control, and robotics.

Computer Vision

Computer Vision

SwinV2-T

Introduces scaled cosine attention and improved positional encoding while keeping Swin’s hierarchical, shifted-window attention.

Excellent for large-scale or high-resolution applications such as medical imaging, remote sensing, or HD autonomous-driving inputs.

Computer Vision

Computer Vision

Topformer-B

TopFormer combines lightweight CNN backbones with transformer-based context modules to efficiently capture long-range dependencies while maintaining real-time inference speed.

Suitable for mobile and edge devices, urban scene parsing, and robotics where a balance between speed and segmentation accuracy is required

Computer Vision

Computer Vision

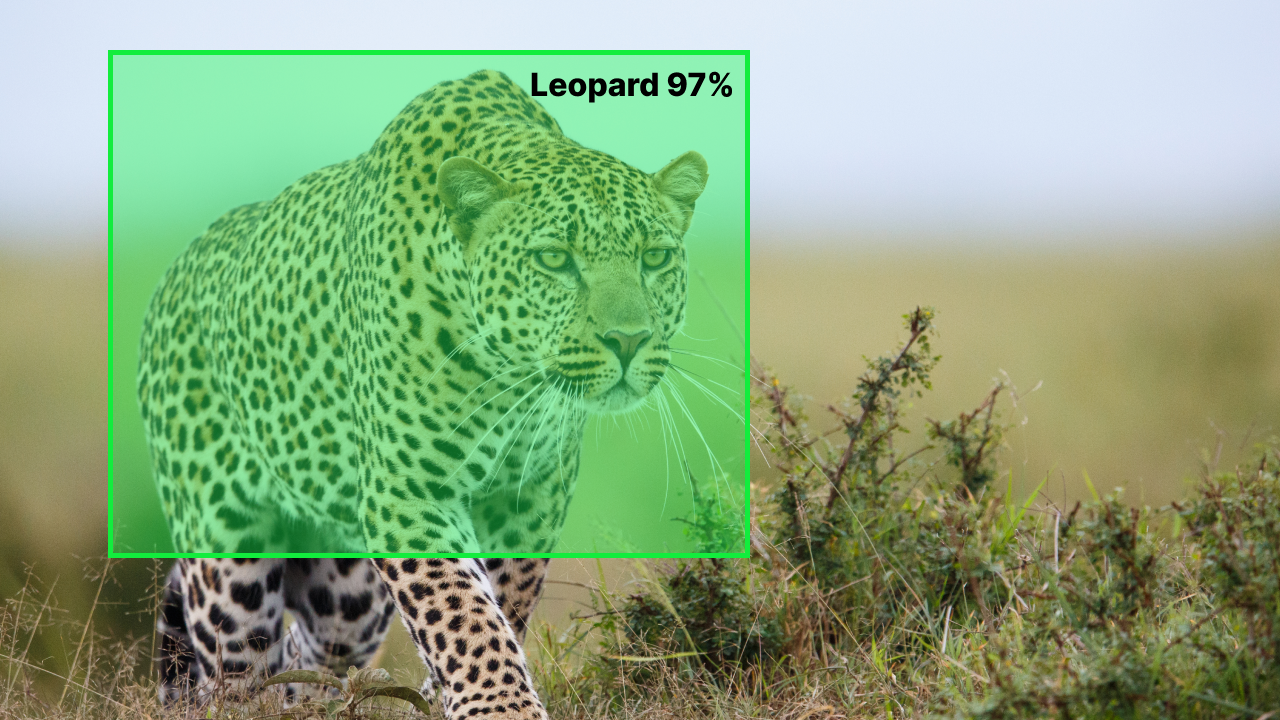

YOLOX-S

YOLOX_s is machine learning model performing object detection on COCO dataset.

YOLOX modernizes the YOLO pipeline with an anchor-free design, decoupled head, strong data augmentation, and SimOTA label assignment.

Ideal for real-time, on-device applications such as traffic monitoring, dashcams, drones, and pedestrian detection where speed and robustness under variable lighting are critical.