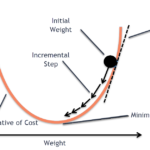

Stochastic Gradient Descent (SGD) is a fundamental optimization algorithm that has become the backbone of modern machine learning, particularly in training deep neural networks. Let’s dive deep into how it works, its advantages, and why it’s so widely used.

The Core Concept

At its heart, SGD is an optimization technique that helps find the minimum […]

Articles Tagged: sgd


