The ClickHouse blog has posted a piece by yours truly introducing pg_clickhouse, a PostgreSQL extension to run ClickHouse queries from PostgreSQL:

While clickhouse_fdw and its predecessor, postgres_fdw, provided the foundation for our FDW, we set out to modernize the code & build process, to fix bugs & address shortcomings, and to engineer it into a complete product featuring near universal pushdown for analytics queries and aggregations.

Such advances include:

- Adopting standard PGXS build pipeline for PostgreSQL extensions

- Adding prepared INSERT support to and adopting the latest supported release of the ClickHouse C++ library

- Creating test cases and CI workflows to ensure it works on PostgreSQL versions 13-18 and ClickHouse versions 22-25

- Support for TLS-based connections for both the binary protocol and the HTTP API, required for ClickHouse Cloud

- Bool, Decimal, and JSON support

- Transparent aggregate function pushdown, including for ordered-set aggregates like percentile_cont()

- SEMI JOIN pushdown

I’ve spent most of the last couple months working on this project, learning a ton about ClickHouse, foreign data wrappers, C and C++, and query pushdown. Interested? Try out the Docker image:

docker run --name pg_clickhouse -e POSTGRES_PASSWORD=my_pass \
    -d ghcr.io/clickhouse/pg_clickhouse:18

docker exec -it pg_clickhouse psql -U postgres -c 'CREATE EXTENSION pg_clickhouse'

Or install it from PGXN (requires C and C++ build tools, cmake, and the openssl libs, libcurl, and libuuid):

pgxn install pg_clickhouse

Or download it and build it yourself from:

Let me know what you think!

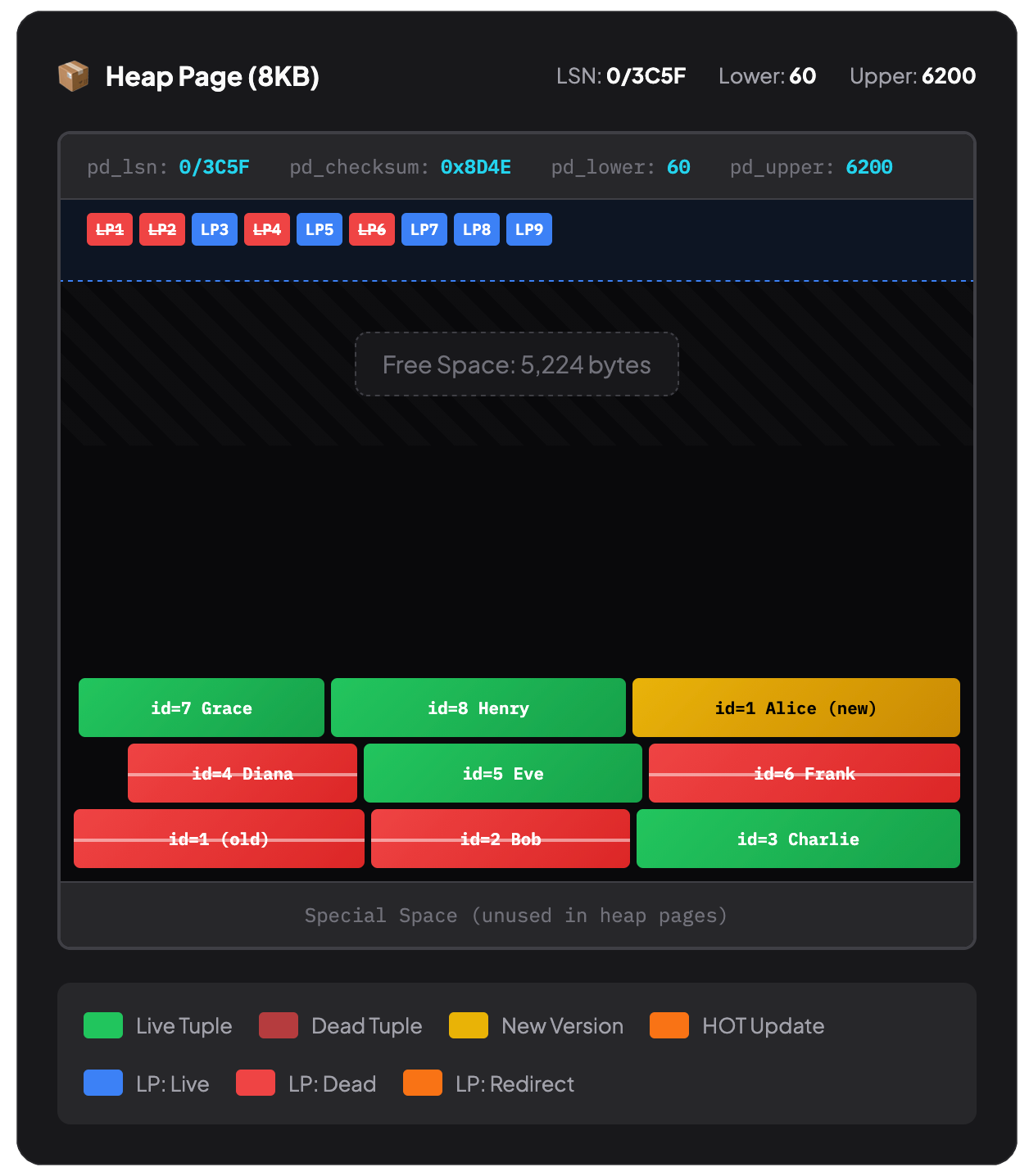

PostgreSQL 17 introduced streaming I/O – grouping multiple page reads into a single system call and using smarter posix_fadvise() hints. That alone gave up to ~30% faster sequential scans in some workloads, but it was still strictly synchronous: each backend process would issue a read and then sit there waiting for the kernel to return data before proceeding. Before PG17, PostgreSQL typically read one 8kB page at a time.
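To make the difference concrete, here is a rough sketch of the two access patterns in Python rather than PostgreSQL's own C code. It is not how PostgreSQL implements streaming I/O; it only contrasts one `pread()` per 8kB page with a `posix_fadvise()` hint followed by vectored `preadv()` calls that fill several page buffers at once. The function names and the grouping factor of 16 pages are assumptions made for illustration, and the calls used are only available on Linux and similar Unix systems. Both variants remain synchronous: the caller blocks until the kernel returns.

```python
import os
import tempfile

PAGE_SIZE = 8192        # PostgreSQL's default block size (8kB)
PAGES_PER_READ = 16     # illustrative grouping factor, an assumption


def read_page_at_a_time(fd, n_pages):
    """Pre-17 style: one pread() system call per 8kB page."""
    pages = [os.pread(fd, PAGE_SIZE, i * PAGE_SIZE) for i in range(n_pages)]
    return pages, n_pages  # one syscall per page


def read_grouped(fd, n_pages):
    """Hint sequential access, then read several pages per preadv() call."""
    # Tell the kernel we intend to read the whole file sequentially.
    os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_SEQUENTIAL)
    pages, syscalls = [], 0
    for start in range(0, n_pages, PAGES_PER_READ):
        count = min(PAGES_PER_READ, n_pages - start)
        # One buffer per page; preadv() fills them in order in a single call.
        buffers = [bytearray(PAGE_SIZE) for _ in range(count)]
        got = os.preadv(fd, buffers, start * PAGE_SIZE)
        syscalls += 1
        if got != count * PAGE_SIZE:
            raise IOError("short read")
        pages.extend(bytes(b) for b in buffers)
    return pages, syscalls


def demo(n_pages=64):
    """Read the same temporary file both ways and compare results."""
    with tempfile.NamedTemporaryFile() as f:
        f.write(os.urandom(n_pages * PAGE_SIZE))
        f.flush()
        fd = os.open(f.name, os.O_RDONLY)
        try:
            single, single_calls = read_page_at_a_time(fd, n_pages)
            grouped, grouped_calls = read_grouped(fd, n_pages)
        finally:
            os.close(fd)
    return single == grouped, single_calls, grouped_calls
```

Calling `demo()` returns whether both strategies read identical pages, plus the number of read calls each one issued; the grouped variant needs one call per batch of pages instead of one per page.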
