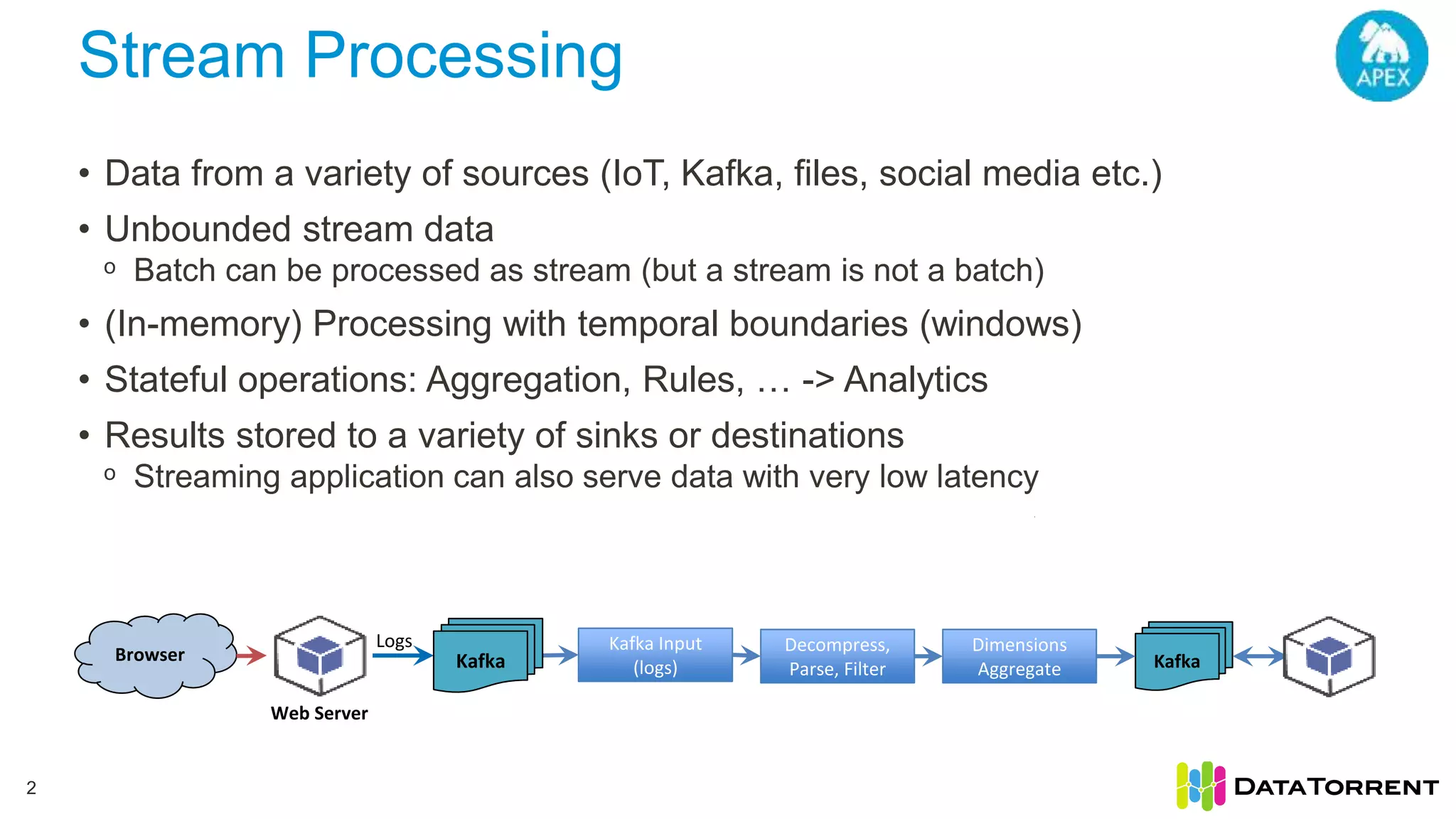

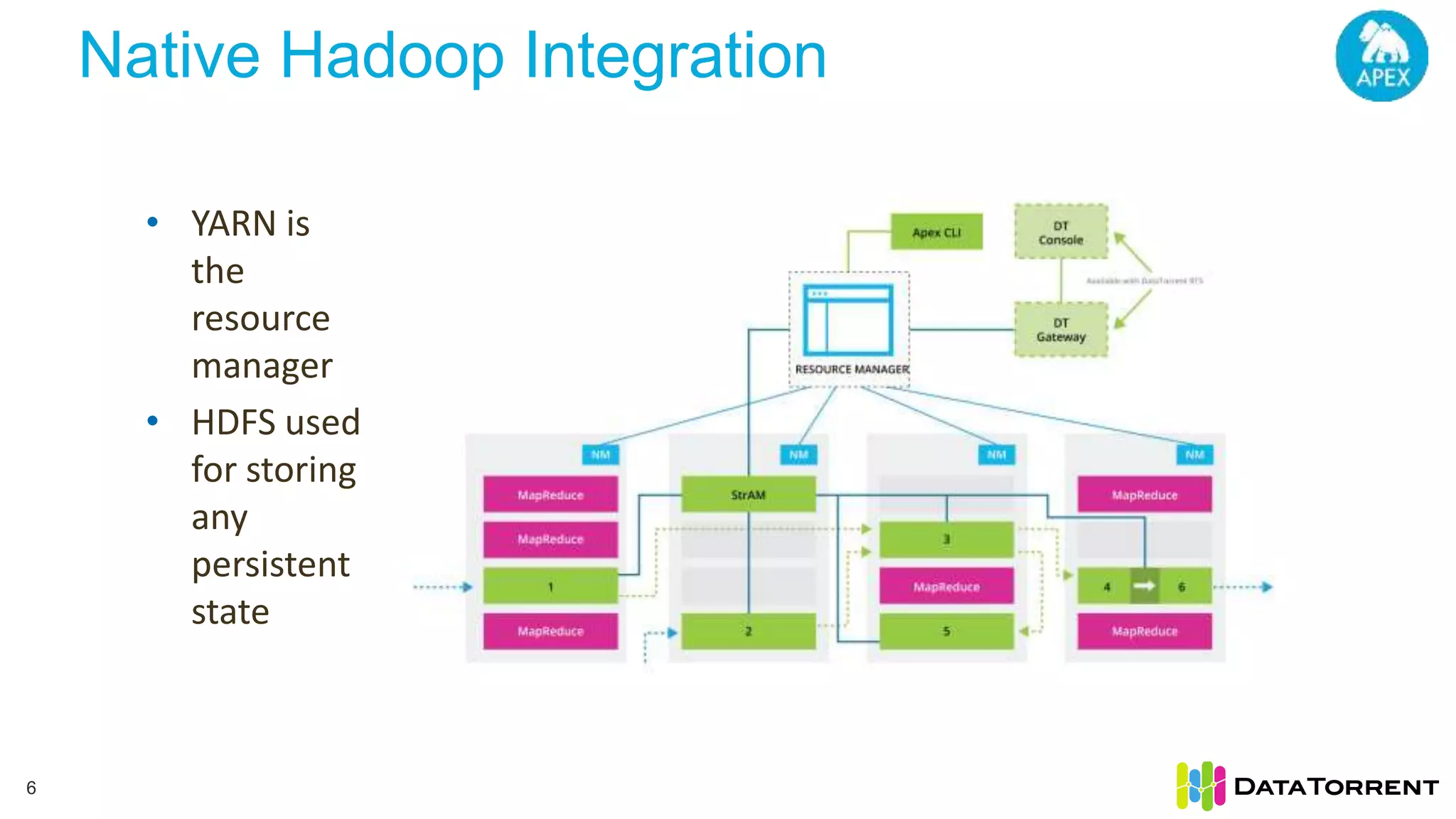

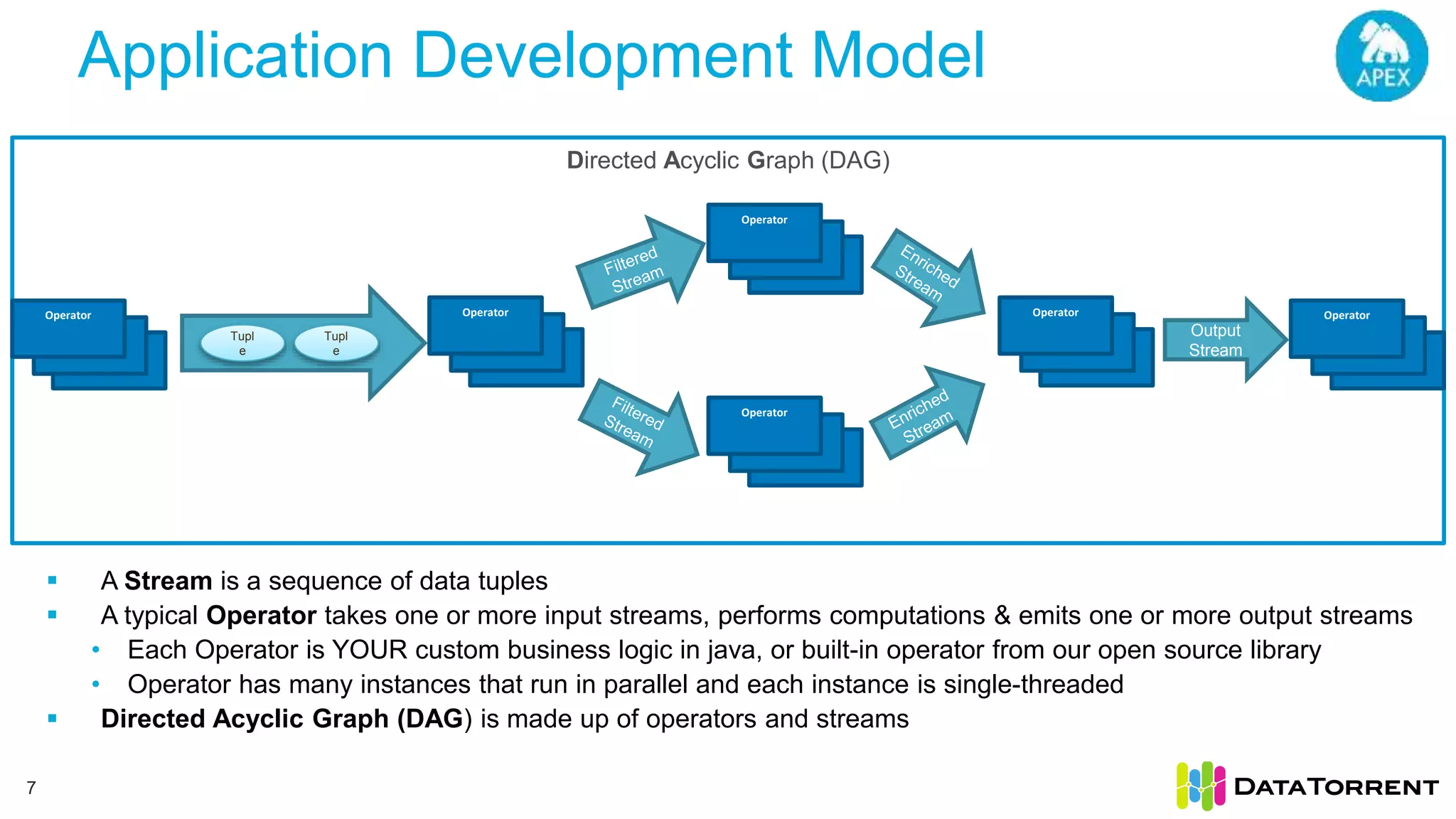

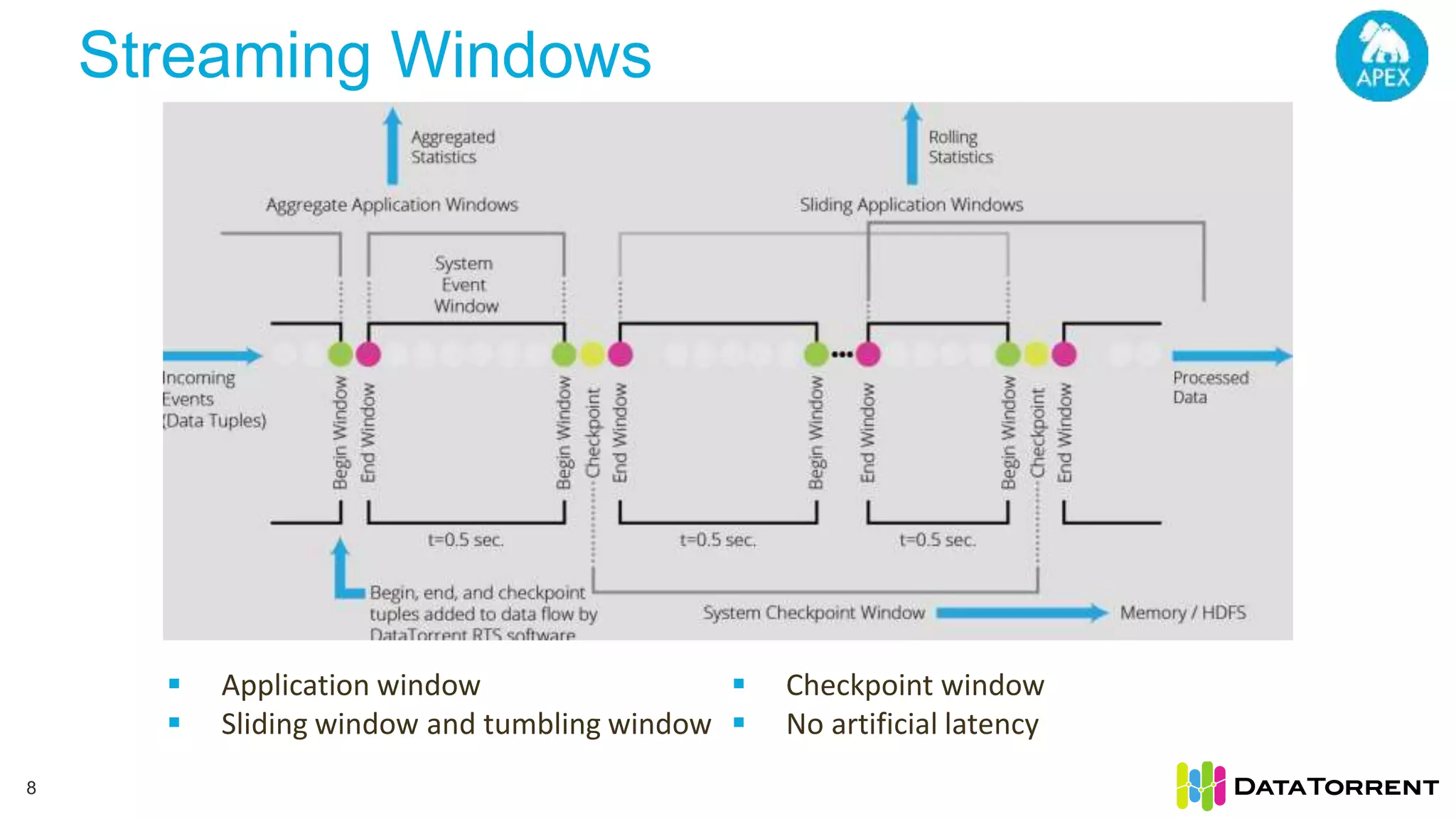

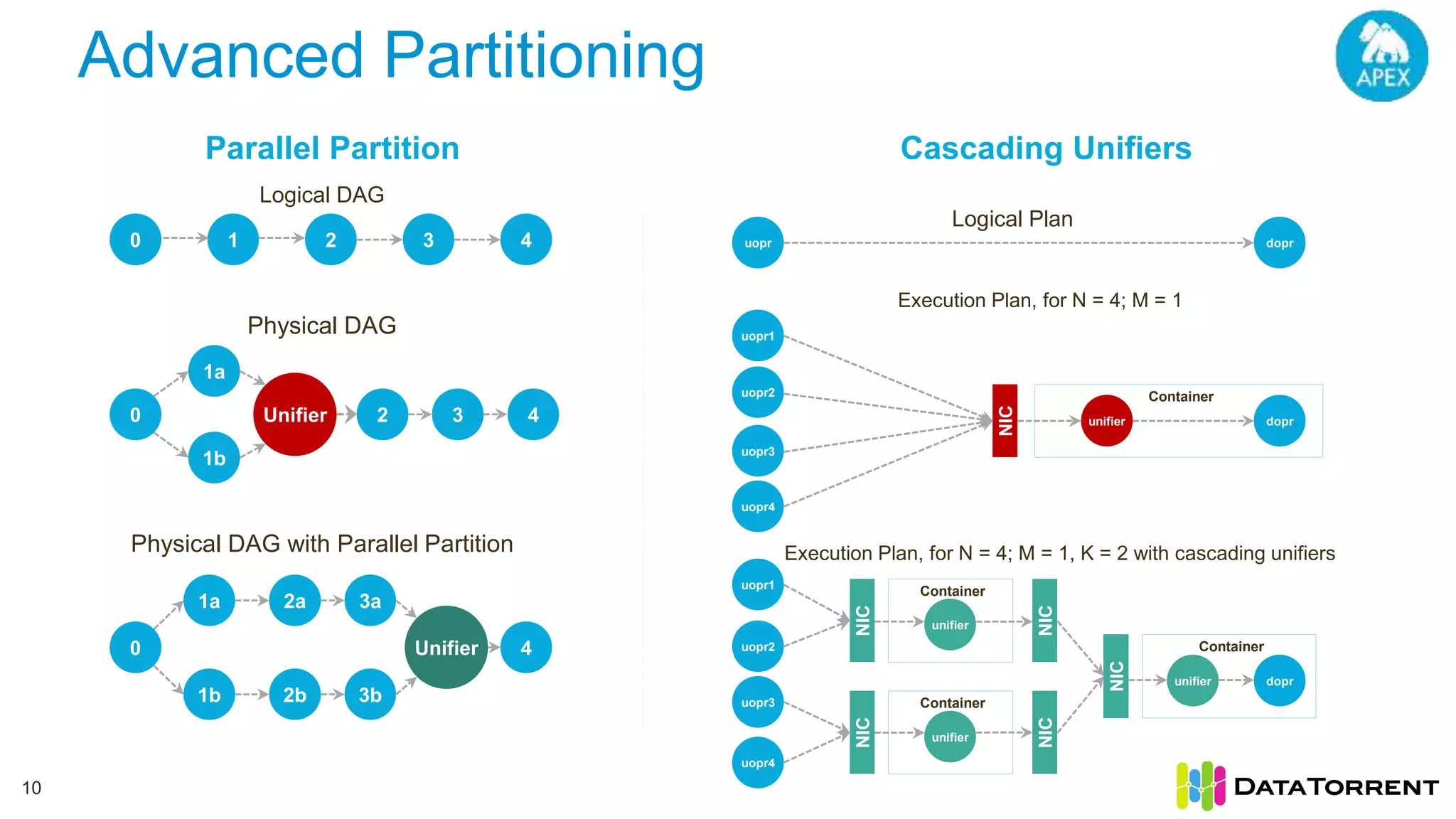

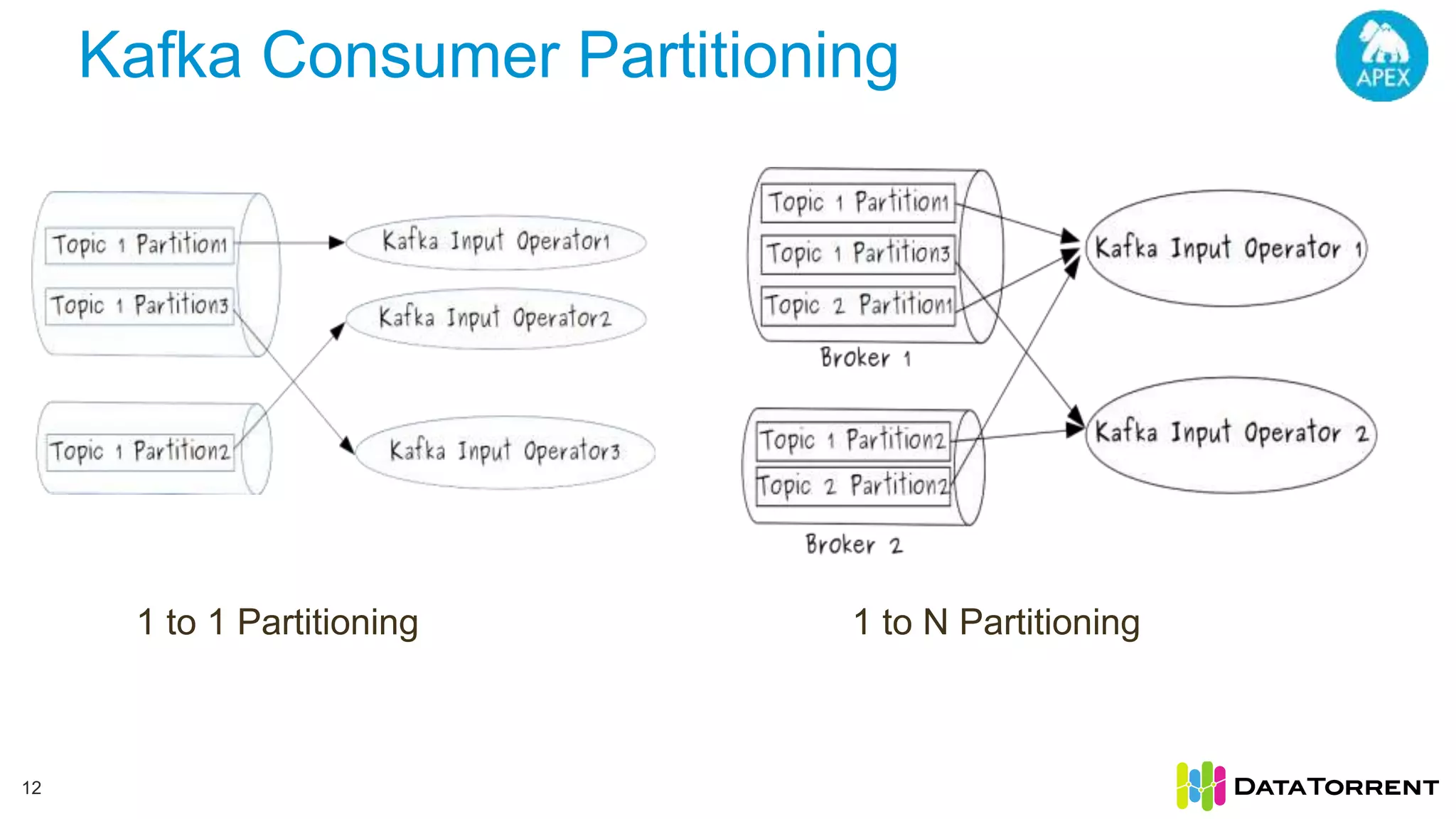

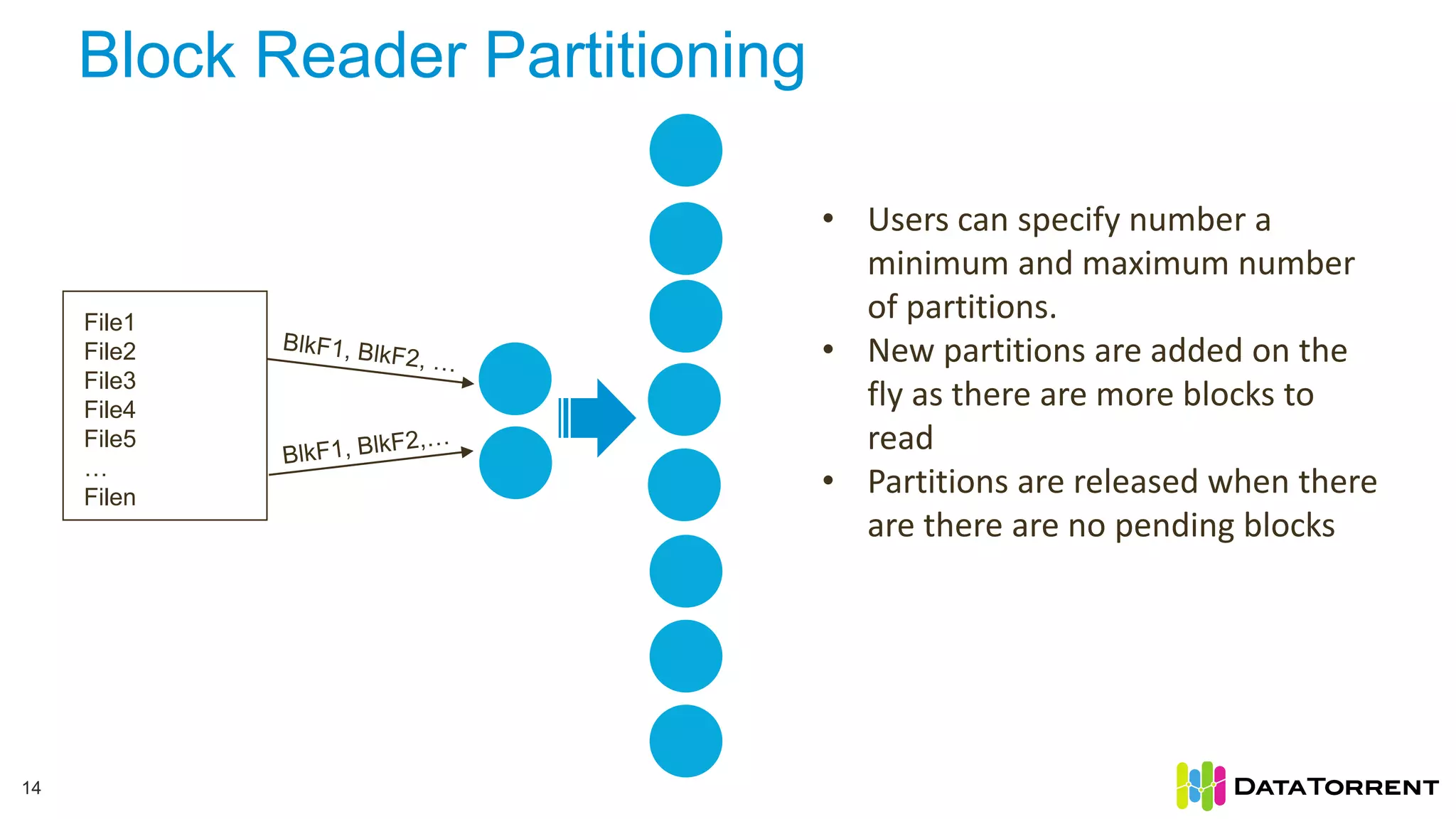

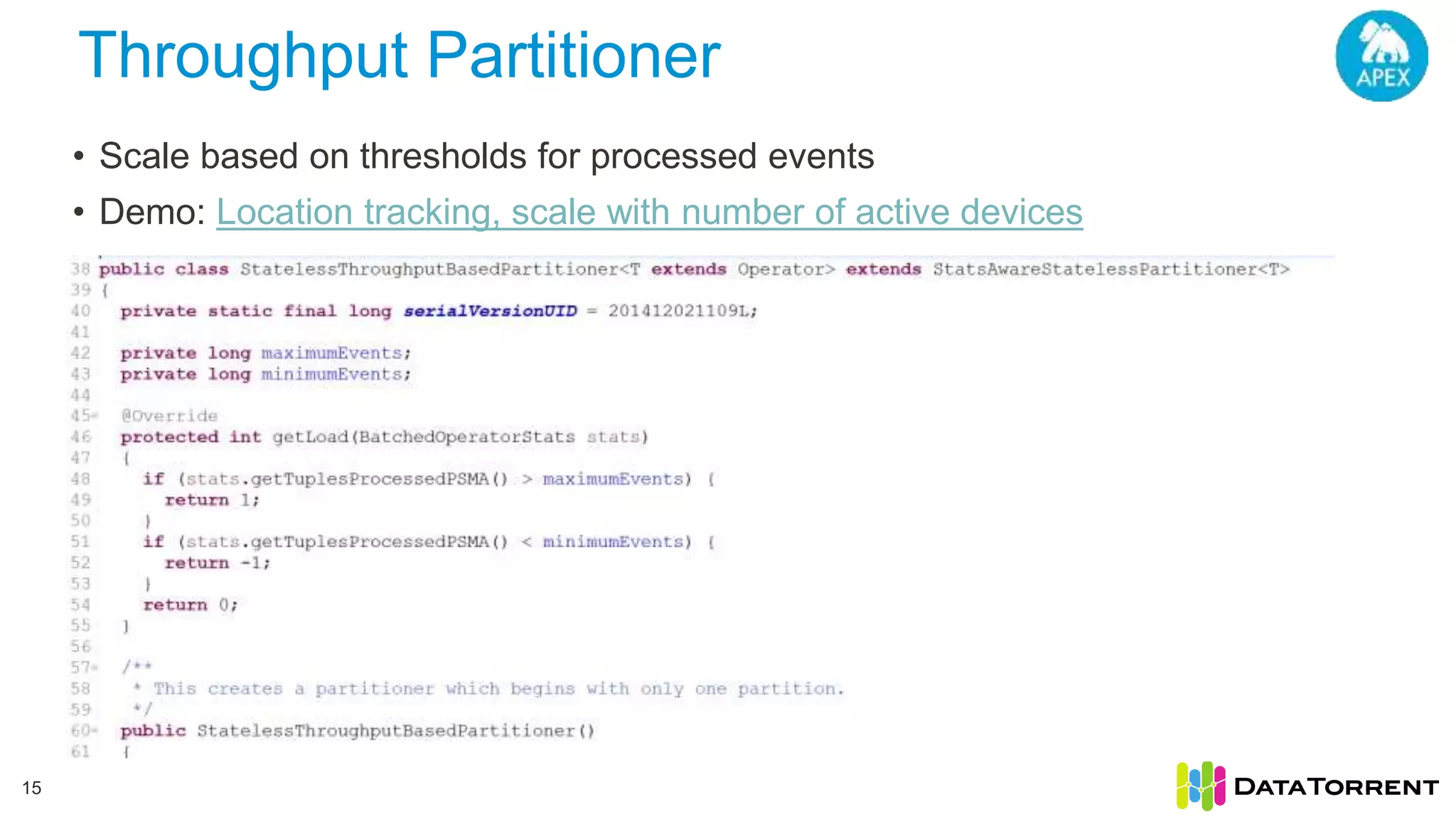

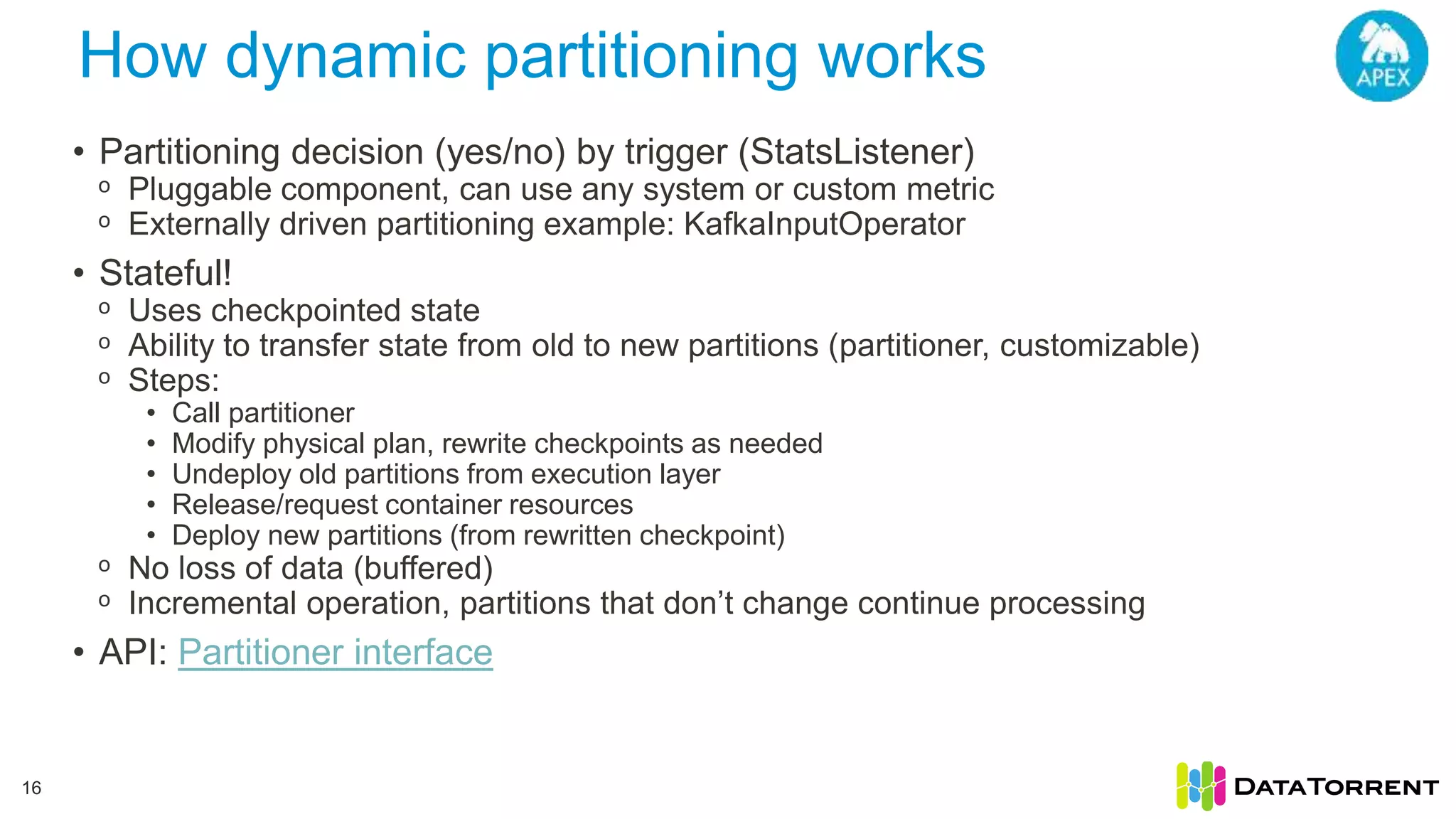

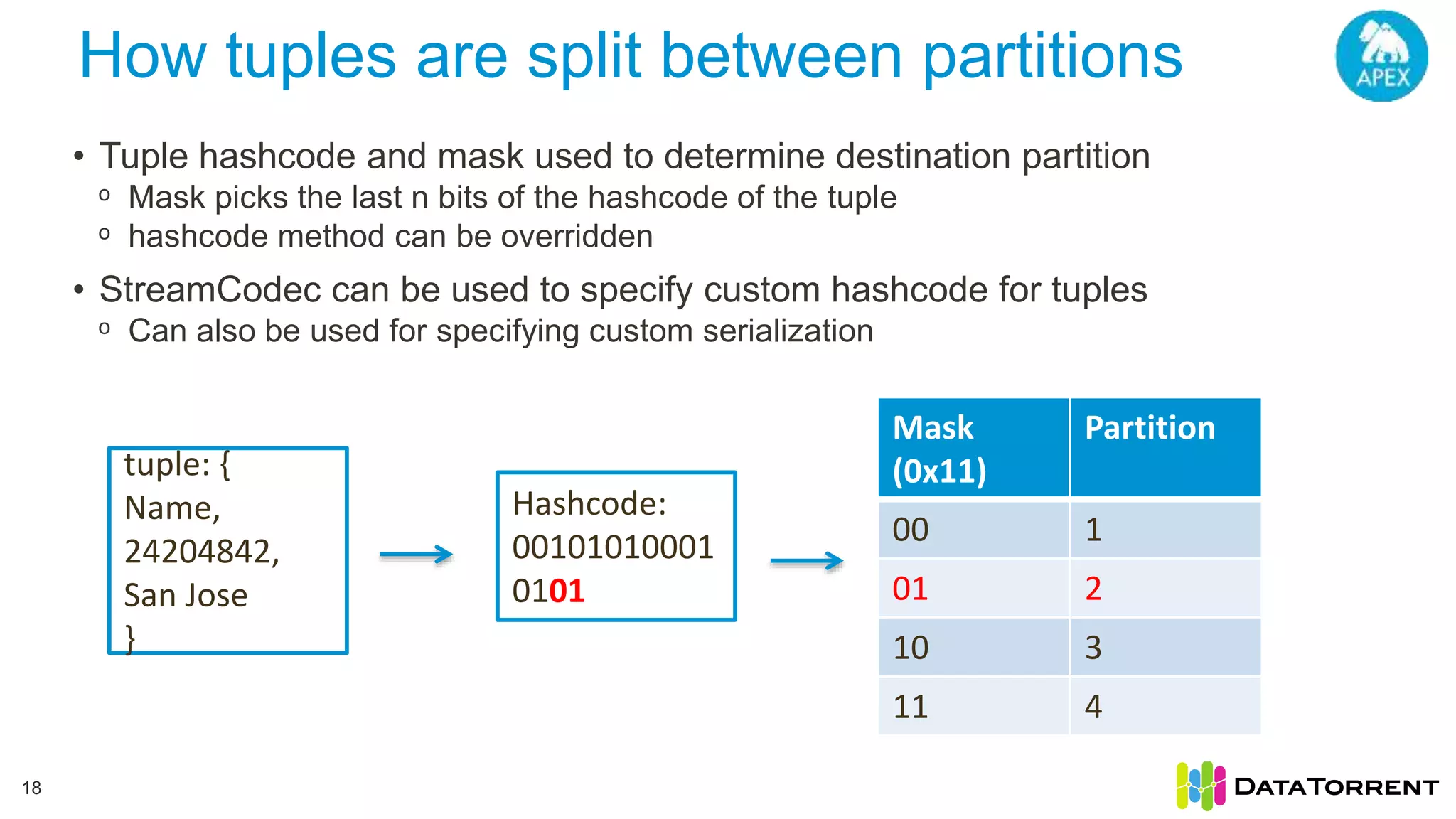

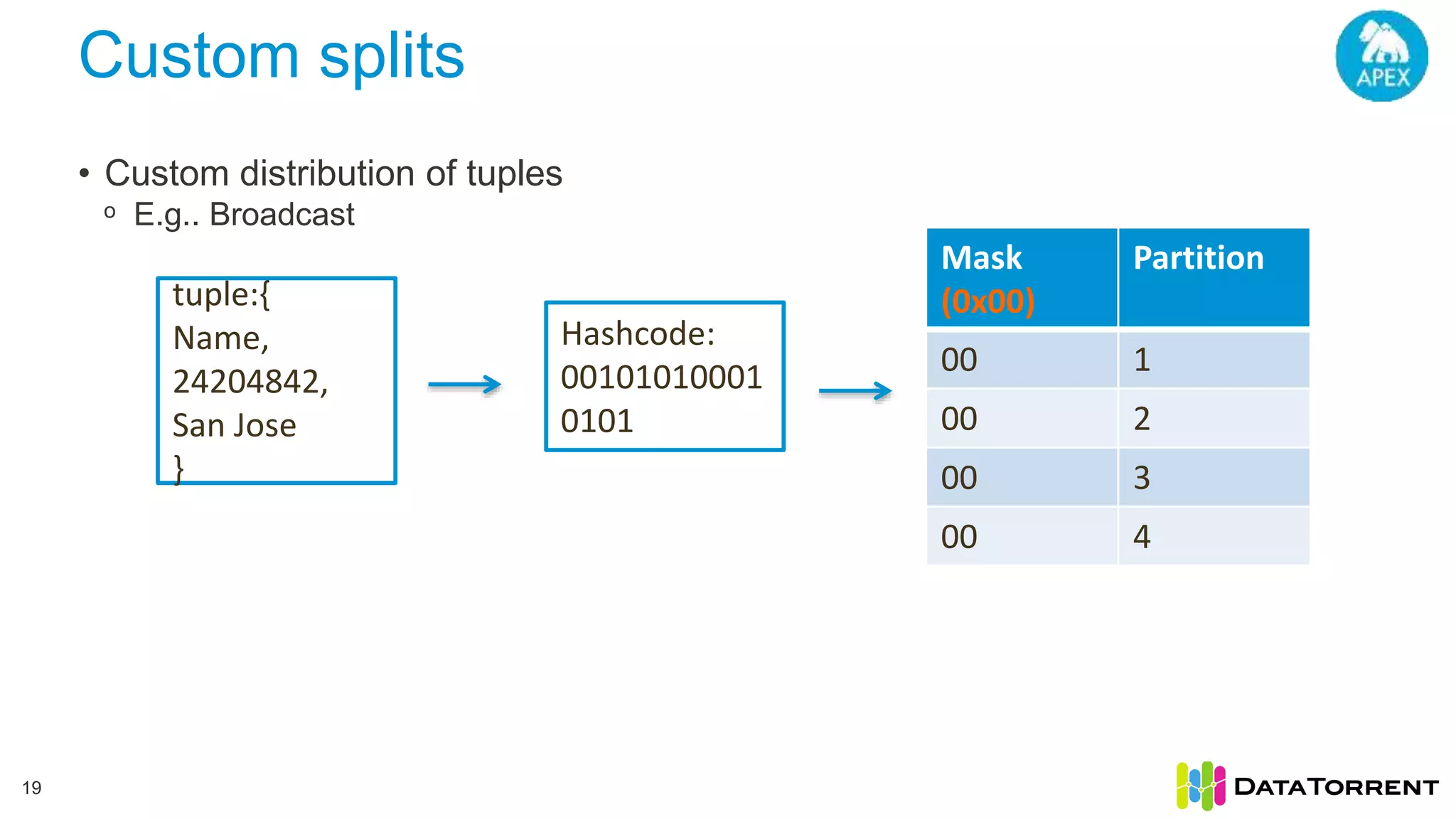

The document discusses smart partitioning with Apache Apex, an in-memory stream processing platform designed for high-throughput, low-latency processing of data from a variety of sources. It highlights dynamic partitioning, support for multiple partitioning schemes, and the ability to scale applications while preserving fault tolerance and reliability. It also describes the architecture and development model, including the partitioning mechanisms that enable efficient data handling and processing.
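
To make the idea of a partitioning scheme concrete, here is a minimal sketch of key-based partitioning, in which each tuple's key is hashed and masked to select one of several parallel partitions. It is an illustration only and does not use the Apex API: the class `PartitionSketch` and the helpers `partitionFor` and `distribute` are hypothetical names, and the sketch assumes a power-of-two partition count so that a bit mask can select the partition.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of hash-and-mask key partitioning (not the Apex API).
public class PartitionSketch {

    // Maps a key to a partition index in [0, mask], assuming mask = partitionCount - 1
    // and partitionCount is a power of two.
    public static int partitionFor(Object key, int mask) {
        // The mask is non-negative, so the result is non-negative even for negative hash codes.
        return key.hashCode() & mask;
    }

    // Groups keys into one bucket per partition.
    public static List<List<Object>> distribute(List<?> keys, int partitionCount) {
        if (partitionCount <= 0 || (partitionCount & (partitionCount - 1)) != 0) {
            throw new IllegalArgumentException("partitionCount must be a positive power of two");
        }
        int mask = partitionCount - 1;
        List<List<Object>> buckets = new ArrayList<>();
        for (int i = 0; i < partitionCount; i++) {
            buckets.add(new ArrayList<>());
        }
        for (Object key : keys) {
            buckets.get(partitionFor(key, mask)).add(key);
        }
        return buckets;
    }

    public static void main(String[] args) {
        // Integer.hashCode() is the integer value itself, so the assignment is easy to follow.
        List<List<Object>> buckets = distribute(List.of(5, 8, 7, 12, 1), 4);
        for (int i = 0; i < buckets.size(); i++) {
            System.out.println("partition " + i + " -> " + buckets.get(i));
        }
    }
}
```

Because the same key always maps to the same partition, per-key state can stay local to one partition. Changing the partition count at runtime, as dynamic partitioning does, changes the mask and therefore requires redistributing that state, which this sketch does not attempt.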