Blob Detection using OpenCV


Ever wondered how computers uncover hidden treasures within images? Welcome to the realm of ‘Blob Detection,’ where even the most inconspicuous regions become keys to unlocking visual mysteries.

In this captivating journey, we’ll explore the art of identifying and isolating significant regions in images using the power of OpenCV.

Get ready to enter the world of blobs, those enigmatic objects with the power to alter how we view and interact with digital images.

What Is Blob Detection in Image Processing?

Blob detection in OpenCV is a specialized image processing technique used to identify and locate regions of interest within an image that exhibit distinct characteristics, such as variations in intensity, color, or texture. These regions, referred to as “blobs,” are often irregularly shaped and differ significantly from their surroundings, making them valuable candidates for various computer vision tasks.

The primary objective of blob detection is to extract relevant information from an image by isolating these distinct regions. Blobs can represent a range of objects or features, from cell nuclei in medical images to vehicles in surveillance footage. Detecting and analyzing blobs is a fundamental step in various applications, including object recognition, tracking, image segmentation, and quality control.

OpenCV, a versatile open-source computer vision library, provides tools and functions specifically designed for blob detection. These functions enable users to efficiently locate and characterize blobs within images, making the process accessible even to those without extensive image processing expertise.

In essence, blob detection acts as a digital spotlight, highlighting significant regions within an image, and OpenCV simplifies this process by offering a structured framework to harness the power of this technique for a wide array of applications.

Understanding Blobs: Unveiling the Essence of Distinctive Regions

Blobs, those seemingly ordinary yet astonishingly intricate components of an image, hold the potential to revolutionize our understanding of visual data. In the context of image analysis and computer vision, a “blob” refers to a region characterized by a unique combination of characteristics such as color, intensity, or texture. These regions stand out from their surroundings like stars in the night sky, and their identification is crucial for tasks ranging from object recognition to medical image analysis.

Distinctive Features of Blobs:

1. Intensity Contrasts: Blobs manifest as areas with significantly different pixel intensities compared to their neighboring regions. These contrasts can be positive (bright blobs on a darker background) or negative (dark blobs on a brighter background).

2. Texture and Color Variations: While pixel intensity variations are a primary factor, blobs can also possess distinctive textures or color distributions that set them apart. These variations contribute to their uniqueness and visual impact.

3. Shape and Contour Characteristics: The outline or contour of a blob can exhibit distinct shapes, such as circles, ellipses, or irregular polygons. These shapes help in distinguishing blobs from other image elements.

4. Size Spectrum: Blobs can vary significantly in size, from minuscule points to substantial regions. Their size is often a result of the image’s nature and the specific application requirements.

Application in Computer Vision:

Blobs are not just aesthetic curiosities but powerful tools harnessed by computer vision algorithms.

They are instrumental in:

- Object Tracking: Blobs can be used to track the movement of objects across consecutive frames in a video.

- Feature Detection: Identifying blobs serves as a basis for detecting distinctive features within an image, aiding in tasks like matching and recognition.

- Image Segmentation: Blob detection is a key stage in segmentation, which divides an image into meaningful regions.

Medical Imaging and Beyond:

In medical imaging, blobs are pivotal for identifying anomalies, tumors, or specific structures within images like X-rays and MRIs. Similarly, they find applications in quality control within manufacturing, identifying defects and irregularities in products.

OpenCV Blob Detection Algorithms: Navigating the Intricacies of Insightful Recognition

Within the vibrant canvas of computer vision, blob detection algorithms act as skilled interpreters, deciphering the subtleties of distinctive regions within images. As we journey deeper into the heart of image analysis, let’s unveil the intricacies of these algorithms that hold the key to revealing hidden treasures.

Laplacian of Gaussian (LoG) Algorithm:

The Laplacian of Gaussian, affectionately known as LoG, is a prominent algorithm in the realm of blob detection. This algorithm intertwines the concepts of differentiation and Gaussian smoothing to create a unique lens that highlights areas of varying pixel intensities.

The steps in this process include:

1. Gaussian Smoothing: The image is convolved with a Gaussian filter to suppress noise and emphasize significant structures.

2. Laplacian Operator: The Laplacian operator is applied to the smoothed image, which emphasizes abrupt changes in pixel values. This creates a map of intensity variations.

3. Blob Localization: Local extrema in the Laplacian response map correspond to blob centers (minima for bright blobs, maxima for dark ones), and the scale of the Gaussian filter that produces the strongest response indicates the blob’s size.

Difference of Gaussians (DoG) Algorithm:

The Difference of Gaussians, or DoG, takes a more efficient route to accomplish similar results.

It’s an approximation of the Laplacian of Gaussian that involves the difference between two Gaussian-blurred versions of an image:

1. Gaussian Blurring: The image is convolved with two Gaussian filters of different standard deviations. The narrower filter preserves finer detail, while the wider filter keeps only coarser structure.

2. Subtraction: One smoothed image is subtracted from the other, creating the DoG image, which acts as a band-pass filter. Strong responses mark potential blobs; their sign depends on the subtraction order and on whether the blob is brighter or darker than its surroundings.

3. Blob Localization: Peaks in the DoG image indicate blob centers. This method is computationally efficient and works well for blob detection tasks.

Significance in Object Detection:

Blob detection algorithms find immense value in object detection scenarios. The distinctive characteristics of blobs make them excellent candidates for identifying objects of interest in complex environments. In applications such as facial recognition, tracking moving objects, and analyzing biological specimens, these algorithms lay the groundwork for robust recognition and analysis.

Customization and Adaptation:

One of the remarkable attributes of blob detection algorithms is their adaptability. By adjusting parameters like the size of the Gaussian filter or the threshold for peak detection, you can tailor the algorithm to suit specific use cases and optimize accuracy.

Algorithm Parameters: Fine-Tuning the Art of Blob Detection

The art of blob detection involves a delicate balance between precision and adaptability. To master this art, one must wield the power of algorithm parameters—knobs that control the sensitivity, accuracy, and behavior of the detection process. In this segment, we dive into the syntax, parameters, and the expansive landscape of customization that these parameters unlock.

Syntax and Functionality:

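The cv2.SimpleBlobDetector class in OpenCV encapsulates the entire blob detection process, providing a structured framework for fine-tuning parameters and achieving optimal results. Note that it does not compute LoG or DoG responses: it binarizes the image at several threshold levels, extracts connected regions by contour finding, and groups the region centers found across those levels into blobs.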

The syntax to create a blob detector instance is as follows:

params = cv2.SimpleBlobDetector_Params()

detector = cv2.SimpleBlobDetector_create(params)

Parameters and Customization:

1. Thresholds:

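minThreshold and maxThreshold: The detector binarizes the image at a series of thresholds running from minThreshold up to maxThreshold in steps of thresholdStep. Only blobs that separate from the background at intensity levels inside this range can be found, so a range that is too narrow misses blobs, while a very wide range costs more computation and may pick up faint, unwanted regions.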

2. Size and Shape Filters:

- filterByArea: Set this parameter to `True` to enable filtering blobs by their area (size).

- minArea and maxArea: Define the minimum and maximum acceptable blob areas. This helps filter out noise and irrelevant regions.

3. Circularity, Convexity, and Inertia:

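filterByCircularity, filterByConvexity, filterByInertia: Toggle these parameters to filter blobs based on shape. Circularity (4π × area / perimeter²) is 1 for a perfect circle and lower for other shapes; convexity is the blob’s area divided by the area of its convex hull; the inertia ratio measures elongation, approaching 1 for a circle and 0 for a line. Each filter has its own minimum and maximum setting, such as minCircularity, minConvexity, and minInertiaRatio.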

Customization and Adaptation:

The real power of these parameters lies in the ability to tailor blob detection to specific scenarios. Imagine an application where you’re tracking vehicles in a surveillance video. By tweaking the parameters, you can focus on identifying larger, well-defined blobs while ignoring smaller elements like noise or pedestrians.

For instance, by adjusting the `minThreshold` and `maxThreshold`, you can fine-tune the sensitivity to detect blobs with specific intensity ranges. Altering the `minArea` and `maxArea` parameters lets you filter out tiny or overly large blobs based on your project’s requirements. The circularity, convexity, and inertia filters come into play when you need to distinguish between round, convex, or elongated shapes.

A Symphony of Precision:

Blob detection algorithm parameters offer a symphony of customization, allowing you to compose an orchestra of sensitivity and accuracy. With each parameter tweak, you orchestrate a performance that’s uniquely suited to your application’s needs. The ability to adjust these parameters empowers you to extract meaningful insights from images and transform them into actionable data.

Filtering Blobs in OpenCV: Refining Insights in Image Analysis

In the mesmerizing world of image analysis, not all blobs are created equal. The key to extracting meaningful information lies in the art of filtering blobs—refining the diverse pool of detected regions to reveal the most relevant and distinctive ones. In this exploration, we unravel the intricate dance of blob filtering in OpenCV, where algorithms and parameters come together to sift through the visual landscape and uncover the hidden gems.

At its core, blob filtering is a multi-step process that involves assessing the attributes of detected blobs against specific criteria. Blobs that meet these criteria are retained, while others are discarded. This process helps eliminate noise, isolate key features, and focus analysis on regions of utmost significance.

Working of Blob Filtering:

1. Detecting Blobs: Begin by detecting blobs within the image using techniques like Laplacian of Gaussian or Difference of Gaussians.

2. Defining Filtering Criteria: Specify the criteria for filtering based on attributes such as blob size, shape, or intensity. These criteria form the basis for retaining or rejecting blobs.

3. Evaluating Blobs: Evaluate each detected blob against the defined criteria. Blobs that satisfy these criteria proceed to the next stage.

4. Filtering Process: The filtering process involves discarding blobs that do not meet the specified criteria. This results in a refined set of blobs that are relevant for the given application.

Types of Blob Filtering:

1. Size Filtering: Blobs can be filtered based on their area, allowing you to eliminate tiny noise regions or overly large blobs.

2. Shape Filtering: Shape-based filters assess the circularity, convexity, or inertia ratio (elongation) of blobs. This enables you to focus on blobs with specific geometric attributes.

cv2.SimpleBlobDetector – Syntax

params = cv2.SimpleBlobDetector_Params()

params.filterByArea = True

params.minArea = 100

detector = cv2.SimpleBlobDetector_create(params)

Code Example – Blob Filtering:

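import cv2

# Load the image in grayscale

image = cv2.imread(r"C:\Users\satchit\Desktop\DataFlair\Blob Detection in OpenCV\Wildlife-Deer.jpg", cv2.IMREAD_GRAYSCALE)

# cv2.imread returns None instead of raising an error when the file cannot be read

if image is None:

    raise FileNotFoundError("Could not read the input image; check the path")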

# Setup the blob detector parameters

params = cv2.SimpleBlobDetector_Params()

params.filterByArea = True

params.minArea = 100

# Create the blob detector

detector = cv2.SimpleBlobDetector_create(params)

# Detect blobs

keypoints = detector.detect(image)

# Draw detected blobs on the image

output_image = cv2.drawKeypoints(image, keypoints, None, (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

# Display the output image

cv2.imshow('Blob Filtering', output_image)

cv2.waitKey(0)

cv2.destroyAllWindows()

Original Image:

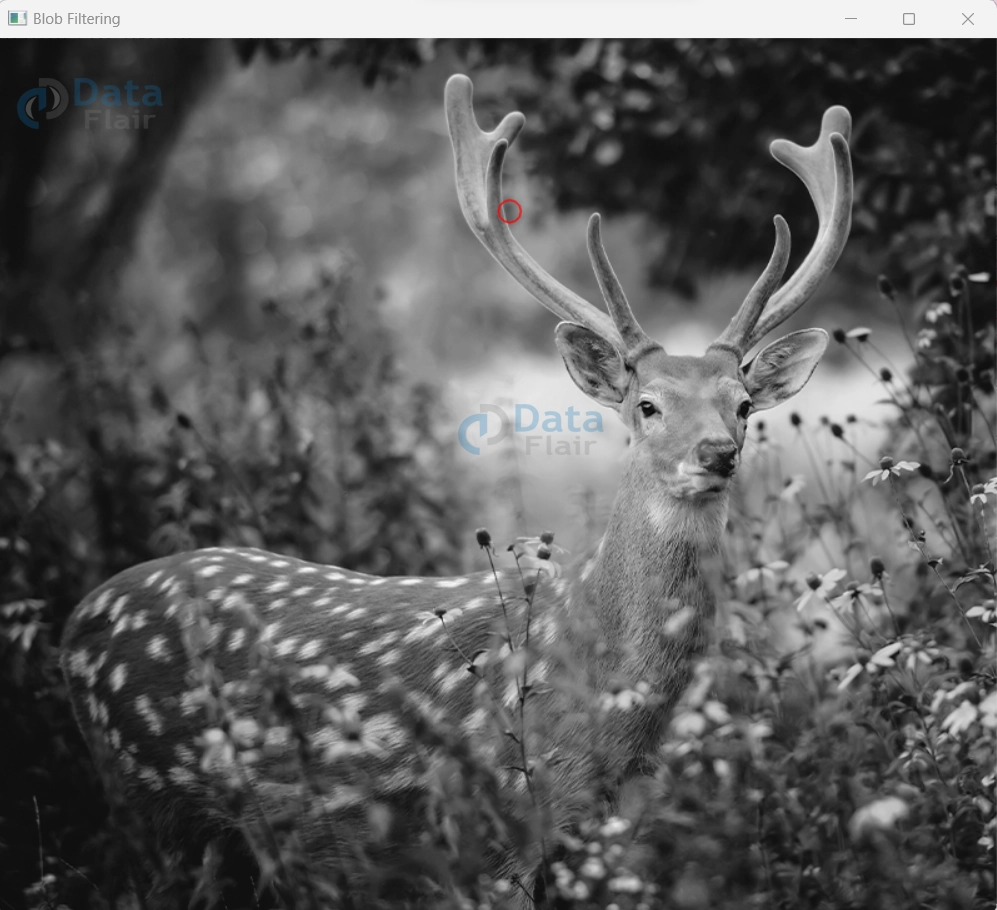

Blob Detection:

In this code, we configure the detector through cv2.SimpleBlobDetector_Params() to filter blobs by area: blobs smaller than the specified `minArea` (100 pixels here) are filtered out. All other parameters keep their default values, so the detector still looks for dark blobs, applies its default convexity and inertia filters, and rejects blobs above the default `maxArea` of 5000 pixels. The resulting image marks each surviving blob with a red circle whose diameter reflects the blob’s size.

Background Subtraction using Subtractor MOG2 in OpenCV: Illuminating Moving Objects

In the ever-evolving landscape of computer vision, the quest to distinguish moving objects from their stationary surroundings leads us to the realm of background subtraction. This powerful technique, facilitated by OpenCV’s Subtractor MOG2, enables us to dissect video streams, unveiling the dynamic dance of moving elements against a backdrop of stillness. In this journey, we delve into the art of isolating motion, revealing how Subtractor MOG2 reshapes the way we perceive visual narratives.

Types of Background Subtraction:

Background subtraction techniques fall into two main categories:

1. Single-Frame Background Subtraction: This technique involves creating a static background model from a single frame and then subtracting this model from subsequent frames to identify changes.

2. Multi-Frame Background Subtraction: Here, the algorithm adapts to variations in the background over multiple frames, making it more robust to changing conditions.

cv2.createBackgroundSubtractorMOG2() – Syntax and Parameters:

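bg_subtractor = cv2.createBackgroundSubtractorMOG2(history=500, varThreshold=16, detectShadows=True)

- history: Number of recent frames that influence the background model.

- varThreshold: Threshold on the squared Mahalanobis distance between a pixel and the background model; pixels that exceed it are classified as foreground.

- detectShadows: Set to `True` to detect shadows, which are then marked in gray (value 127) in the mask instead of white (255).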

Code Example – Background Subtraction:

import cv2

# Create a VideoCapture object

cap = cv2.VideoCapture(r"C:\Users\DataFlair\Pictures\Saved Pictures\New folder\Aug 13-23'\WhatsApp Video 2023-08-14 at 4.44.55 PM.mp4")

# Create a background subtractor object

bg_subtractor = cv2.createBackgroundSubtractorMOG2(history=500, varThreshold=16, detectShadows=True)

while True:

    ret, frame = cap.read()

    if not ret:

        break

    # Apply background subtraction

    fg_mask = bg_subtractor.apply(frame)

    # Display the original frame and the foreground mask

    cv2.imshow('Original Frame', frame)

    cv2.imshow('Foreground Mask', fg_mask)

    if cv2.waitKey(30) & 0xFF == ord('q'):

        break

cap.release()

cv2.destroyAllWindows()

In this code, we use the `cv2.createBackgroundSubtractorMOG2()` function to create a background subtractor object. We then read frames from a video and apply background subtraction using the subtractor object. The resulting foreground mask highlights moving objects against the background. The original frame and the foreground mask are displayed in separate windows so that the detected motion can be compared with the source video; press `q` to stop playback.

Background subtraction using Subtractor MOG2 isn’t just about isolating motion; it’s about revealing the stories that unfold within videos. By harnessing the syntax, parameters, and intricacies of Subtractor MOG2, you’re equipped to uncover dynamic narratives that dance amidst static backdrops, transforming the way we analyze visual content.

Summary

As we draw the curtain on our exploration of blob detection and background subtraction using BackgroundSubtractorMOG2 in OpenCV, we find ourselves equipped with a powerful lens that reveals both the distinctive regions within still images and the hidden choreography of moving elements within videos. Through the orchestration of syntax, parameters, and algorithms, we’ve transcended the static world and embraced the dynamic realm, where the interplay of foreground and background paints a vivid picture of motion.

Background subtraction is more than a technical feat; it’s a journey into the heart of visual storytelling. With every pixel that changes, every shadow that morphs, we’re reminded of the layers of complexity that lie beneath seemingly mundane frames. The Subtractor MOG2 technique unravels the tapestry of movement, granting us the ability to dissect, understand, and harness motion for a multitude of applications.

So, as you venture forth into the world of computer vision, remember that background subtraction isn’t just about revealing what moves; it’s about discovering the narratives that unfold when the stillness of background meets the vibrancy of movement. Armed with the knowledge of Subtractor MOG2, you’re poised to embark on a journey where every frame tells a story—a story waiting to be explored, understood, and embraced.
