Learn Web Scraping using Python


Many of us have run into situations where we need to extract information from a website. Usually we just copy what we need. But what if there is too much data to copy by hand? This is where web scraping comes into play, and in this article we will learn how to do it using Python. Let’s start.

What is Web scraping?

Web scraping is an automated method of extracting data from websites. Wondering why we can’t just copy and paste? Of course you can, but separating the data you need from everything else is tiring, and data on websites is usually unstructured. Web scraping collects this unstructured data and stores it in a structured form.

Wondering whether it is legal to scrape any website? Some websites allow web scraping while others don’t. To find out, check the “robots.txt” file of the website you want to scrape by appending “/robots.txt” to its URL.
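As a rough sketch of that check, Python's standard-library urllib.robotparser module can evaluate robots.txt rules. The rules and the example.com URLs below are made up for illustration so the code runs offline. For a live site you would typically call set_url() and read() instead of parse().

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here so the example runs offline.
SAMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""

def build_parser(robots_txt):
    """Return a parser loaded with the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(SAMPLE_RULES)

# No rule matches /blog/, so the sample rules allow it.
print(parser.can_fetch("*", "https://example.com/blog/"))
# /private/ is listed under Disallow, so the sample rules forbid it.
print(parser.can_fetch("*", "https://example.com/private/a"))
```

Keep in mind that robots.txt only states the site owner's crawling preferences. It does not by itself settle whether scraping a site is permitted.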

Applications of Web Scraping

We just said that scraping extracts data. Wondering what we do with that data? Here are some applications.

1. Price Comparison: This is used for comparing the prices of similar products from different online shopping websites.

2. Gathering emails: We have all received marketing emails from websites we subscribed to. Do you think these emails are sent individually? Of course not! Email IDs are collected using web scraping, and bulk emails are then sent to them.

3. Scraping Social Media Content: Social Media websites are scraped to find out what’s trending.

4. Research and Analysis: Large sets of data (statistics, reviews, temperatures, etc.) are used for analysis and R&D, and for developing and testing models.

5. Listings: Details of job openings, interviews, etc. are collected from different websites and made available in one place.

Why is Python Good for Web Scraping?

We will be using Python for web scraping. It is well suited to the task for the following reasons.

1. Ease of Use: Python is simple to code in, with easy-to-learn syntax.

2. Large Collection of Libraries: It has a huge collection of libraries, which provide methods and services that make coding easier. It also has modules specifically for web scraping.

3. Dynamically typed: Python does not need you to define data types for variables. This saves time and makes the job faster.

4. Small code, large task: Python’s built-in functions and libraries let a small amount of code do a large task.

5. Community: It has a huge, active community that works on improvements and answers questions, so help is available whenever you are stuck.

Libraries for Web scraping

Python has libraries built specifically for web scraping. These libraries come with many built-in functions that make scraping easy. They include:

- Requests

- Beautiful Soup

- lxml

- Selenium

The requests Library

Requests is a library used for making HTTP requests to a specific URL and getting the response. It also contains some inbuilt functions for managing both the request and response.

This library can be installed using the below command:

pip install requests

Making the request

Once installed, you can send an HTTP request to the required URL using the get() function. It returns a response object that holds the information sent back by the server. This object can be used to get information like the URL, status code, content, etc., as shown in the code below.

Example of making an HTTP request:

import requests

# Making a GET request

r = requests.get('https://data-flair.training/')

print(r)

#printing the URL

print(r.url)

# print the status code

print(r.status_code)

# print content of request

print(r.content)
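Before using a response, it is usually worth confirming that the request actually succeeded. The following is a minimal sketch of one way to do that. The helper name fetch_html and the 10-second timeout are illustrative choices, not something the requests library prescribes.

```python
import requests

def fetch_html(url, timeout=10):
    """Return the page text, or None if the request fails.

    fetch_html and the 10-second timeout are illustrative choices.
    """
    try:
        response = requests.get(url, timeout=timeout)
        # raise_for_status() raises requests.HTTPError for 4xx/5xx responses.
        response.raise_for_status()
    except requests.RequestException as error:
        print(f"Request failed: {error}")
        return None
    return response.text
```

It could be called as fetch_html('https://data-flair.training/'). Catching requests.RequestException covers connection errors, timeouts, and the HTTPError raised for unsuccessful status codes.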

Beautiful Soup

Beautiful Soup is a Python library built specifically for web scraping. It works with a parser to turn HTML into a parse tree, which you can then navigate, search, and modify. It can turn even invalid markup into a parse tree. However, it cannot request HTML from web servers itself, so we use it together with the requests library.

This library can be installed using the following command:

pip install beautifulsoup4
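To illustrate the point about invalid markup, here is a small sketch that parses a deliberately broken HTML fragment. The fragment is made up for this example, and the built-in 'html.parser' is assumed as the parser.

```python
from bs4 import BeautifulSoup

# Deliberately malformed markup: the <b> and <p> tags are never closed.
broken_html = "<p>Unclosed <b>bold"

soup = BeautifulSoup(broken_html, "html.parser")

# Beautiful Soup still builds a tree, so both tags can be queried.
print(soup.b.text)        # text inside the <b> tag
print(soup.p.get_text())  # all text inside the <p> tag
```

Different parsers may repair broken markup in slightly different ways, so the resulting tree can vary if you switch parsers.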

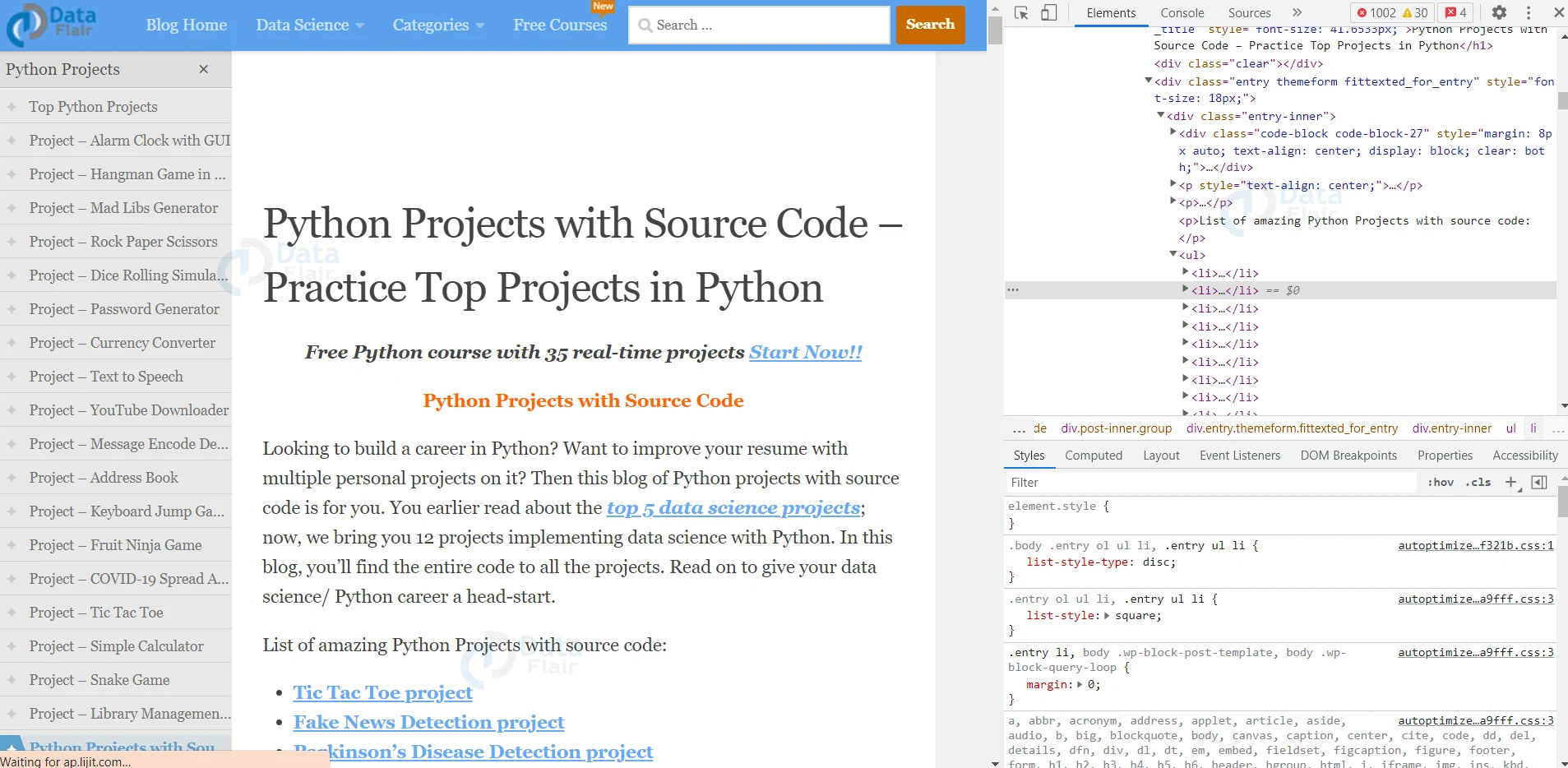

Inspecting Website

Before extracting any information from a website, it is important to understand its structure. This helps in understanding the format of the data inside the website and in extracting the required information. It can be done by right-clicking on the page and selecting the Inspect option.

After doing this, you get to see the Document Object Model (DOM) of the website in the browser’s developer tools.

Parsing HTML using Beautiful Soup

Now let’s see how to get the HTML that makes up a web page using Beautiful Soup.

Example of parsing HTML using Beautiful Soup:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

# parsing HTML

soup = BeautifulSoup(r.content, 'html.parser')

print(soup.prettify())


Here, we first make a request to the website using the get() function and pass the content to the BeautifulSoup() class. Calling prettify() on the resulting object returns the HTML of the page as a neatly indented string.

lxml

lxml is a Python parsing library that works with both HTML and XML. It is fast, powerful, and easy to use, especially when extracting data from large datasets. However, its parsing can be impeded by poorly formed HTML. As with Beautiful Soup, we first need to fetch the HTML using the requests library. This library can be installed using the below command:

pip install lxml

Scraping with lxml works in the following way:

1. We first request the page from the website as discussed above.

2. Then, using the fromstring() function in the html module of the lxml library, we get the tree object as shown below:

import requests

from lxml import html

response = requests.get('https://data-flair.training/')

tree = html.fromstring(response.text)

3. Then we use the xpath() function with a query to extract the required information from the page.
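The xpath() step can be sketched as follows. The sample markup, the class name post-title, and the queries are made up for illustration, and an inline string is used instead of a live request so the sketch runs offline.

```python
from lxml import html

# Inline sample markup so the sketch runs without a network request.
sample_page = """
<html><body>
  <h2 class="post-title">First post</h2>
  <h2 class="post-title">Second post</h2>
  <a href="/about/">About</a>
</body></html>
"""

tree = html.fromstring(sample_page)

# text() selects the text nodes of every matching <h2>.
titles = tree.xpath('//h2[@class="post-title"]/text()')
# @href selects the href attribute value of every <a>.
links = tree.xpath('//a/@href')

print(titles)
print(links)
```

xpath() returns a list in both cases: strings for text() and attribute queries, and element objects when the query selects tags.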

Selenium

Nowadays almost all websites are responsive and dynamic, with content that is rendered by JavaScript. This poses a problem for Python web scraping libraries like requests, which only fetch the raw HTML. This is where Selenium comes in: it is an open-source browser automation tool (web driver) that renders web pages just like any browser.

It mainly requires three components:

- Web Browser – Chrome, Edge, Firefox and Safari

- Driver for the browser

- The Python selenium package

You can install the package using the below command:

pip install selenium

Now we start by importing the appropriate class for the browser from the selenium package. Then we create an object of that class, giving it the path of the driver executable through a Service object.

After this, we use the get() method to load any page in the browser as shown below.

Example of loading a page using selenium:

from selenium.webdriver import Chrome

from selenium.webdriver.chrome.service import Service

# In Selenium 4 the driver path is passed through a Service object

driverObj = Chrome(service=Service(executable_path='/driver/path/on/your/device'))

driverObj.get('https://data-flair.training/')

Selenium also allows us to use CSS selectors and XPath to extract data from websites. Let’s see an example that gets all the blog titles using CSS selectors.

Example of getting all the blog titles using CSS selectors:

from selenium.webdriver.common.by import By

blog_titles = driverObj.find_elements(By.CSS_SELECTOR, 'h2.blog-card__content-title')

for title in blog_titles:

    print(title.text)

driverObj.quit() # closing the browser

Although it can handle dynamic websites, Selenium makes the web scraping process slow, because it must first execute the JavaScript code for each page before making it available for parsing. This makes it a poor fit for large-scale data extraction.

Comparison between the four web scraping libraries

| | Requests | Beautiful Soup | lxml | Selenium |
| --- | --- | --- | --- | --- |
| Purpose | Making HTTP requests | Parsing | Parsing | Browser automation |
| Ease of use | High | High | Medium | Medium |
| Speed | Fast | Fast | Very fast | Slow |
| Ease of learning | High | High | Medium | Medium |
| Documentation | Very good | Very good | Good | Good |
| JavaScript support | None | None | None | Yes |
| CPU and memory usage | Low | Low | Low | High |
| Size of project supported | Large and small | Large and small | Large and small | Small |

Picking a web driver and browser

A Selenium-based scraper needs a browser to connect to the destination URL. We recommend using a regular (non-headless) browser for testing purposes, especially if you are a newcomer. This makes troubleshooting and debugging simpler, because you can see what the browser is doing at each step.

Headless browsers can be used later on, as they are more efficient for complex tasks. Here we will be using the Chrome browser. You can also use Firefox. In either case, download the web driver that matches your browser’s version.

You can do this by selecting the requisite package, downloading it, and unzipping it. Then copy the driver’s executable file to any easily accessible directory.

Selecting an appropriate URL

Previously we saw how to inspect a website and get a better understanding of its structure. Here are some more tips to help you pick a URL:

- It is very important to ensure that you are scraping public data and are not infringing on third-party rights. The website’s robots.txt file can be used for guidance.

- Avoid data hidden in JavaScript elements, as these sometimes need to be scraped by performing specific actions and require more sophisticated use of Python and its logic.

- Avoid image scraping as part of page parsing, because images can be downloaded directly with Selenium.

Extracting information

The output contains a lot of HTML. Don’t worry, we also have ways to extract just the useful information, like the title.

Example of extracting data from the website:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

# check status code for response received

# success code - 200

soup = BeautifulSoup(r.content, 'html.parser')

#Extracting the title

page_title = soup.title.text

# Extract body of page

page_body = soup.body

# Extract head of page

page_head = soup.head

# print the result

print(page_title)

print(page_head)

print(page_body)


We can also get a tag and its details. Let’s see how to get the title tag, its name, and the name of its parent tag.

Example of getting tags and tag information from the website:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

# check status code for response received

# success code - 200

soup = BeautifulSoup(r.content, 'html.parser')

# Getting the title tag of the page

print(soup.title)

# Getting the name of the title tag

print(soup.title.name)

# Getting the name of parent tag of the title

print(soup.title.parent.name)

Output

title

head

Selecting with Beautiful Soup

Beautiful Soup has a select() method that takes a CSS selector and returns a list of the matching elements, such as headings.

Let’s see an example that selects all the heading 2 (h2) elements and returns the second one from the resulting list.

Example of selecting a heading from a website:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://www.amazon.in/')

# check status code for response received

# success code - 200

soup = BeautifulSoup(r.content, 'html.parser')

second_head = soup.select('h2')[1].text

print(second_head)
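Indexing the result of select() raises an IndexError when the page has fewer matches than expected, so it can help to see how select() and the related select_one() method behave on a small, known document. The markup and class names below are invented for this sketch.

```python
from bs4 import BeautifulSoup

# Made-up markup so the behaviour is easy to follow.
sample_page = """
<div id="posts">
  <h2 class="title">Python Basics</h2>
  <h2 class="title featured">Web Scraping</h2>
  <h2>Untitled</h2>
</div>
"""

soup = BeautifulSoup(sample_page, "html.parser")

# select() always returns a list, even when only one element matches.
titled = [h2.text for h2 in soup.select("div#posts h2.title")]
# select_one() returns the first match, or None when nothing matches.
featured = soup.select_one("h2.featured")
missing = soup.select_one("h3")

print(titled)
print(featured.text)
print(missing)
```

Checking the length of the list, or testing the select_one() result for None, avoids errors when a page does not contain the element you expect.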


Wondering how to get all the headings or other components? Which construct do we use to access multiple elements one by one?

Yes, you are correct: loops. Let’s now see how to get all the elements with a given tag.

Example of selecting all headings from the website:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

soup = BeautifulSoup(r.content, 'html.parser')

all_h1_tags = []

for element in soup.select('h1'):

    all_h1_tags.append(element.text)

print(all_h1_tags)

Finding components

This library also has other methods, like find() and find_all(), that search for elements by tag name, class, id, and so on. Let’s dive deeper into them with some examples.

Finding by class

Let’s search based on the class. In the below example, we search for the first div tag with the class ‘a-section’, then find all the heading 2s inside it and print them.

Example of finding elements by class:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://www.amazon.in/')

soup = BeautifulSoup(r.content, 'html.parser')

s = soup.find('div', class_='a-section')

content = s.find_all('h2')

print(content)


Finding by Id

When we add elements to a page, we give them attributes like class and id to set their properties and identify them uniquely. Now that we are done searching by class, let’s search by id. It works the same as the example above, except that we use the id parameter of the find() function.

Example of finding elements by id:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

# Parsing the HTML

soup = BeautifulSoup(r.content, 'html.parser')

s = soup.find('div',id="cf-wrapper")

content = s.find_all('p')

print(content)


Extracting Text from the tags

If you look at the above outputs, the tags are included along with the text. But in real-life applications, the important part is the content. We will take the above example and see how to remove the tags from it.

Example of getting text from the tags:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

# Parsing the HTML

soup = BeautifulSoup(r.content, 'html.parser')

s = soup.find('div',id="cf-wrapper")

content = s.find_all('p')

for line in content:

    print(line.text)


Here we find all the paragraphs and then run a loop to print all the found elements. This avoids the appearance of tags in the output.
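Text taken from real pages often carries stray whitespace and nested tags. As a small sketch on made-up markup, the get_text() method accepts a separator and a strip flag that tidy this up.

```python
from bs4 import BeautifulSoup

# Made-up markup with extra whitespace and a nested <b> tag.
sample_page = """
<div id="content">
  <p>  Learn <b>Python</b> step by step.  </p>
  <p>Practice with projects.</p>
</div>
"""

soup = BeautifulSoup(sample_page, "html.parser")
paragraphs = soup.find("div", id="content").find_all("p")

# get_text(" ", strip=True) strips each text fragment and joins them with a space.
lines = [p.get_text(" ", strip=True) for p in paragraphs]
print(lines)
```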

Extracting Links

In many cases, links are attached to the content on a website. This is done with the <a> tag, by giving the link as the value of the tag’s ‘href’ attribute. We will use this along with the find_all() function to extract the links. Let’s see an example.

Example of getting links from a website:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://data-flair.training/')

# Parsing the HTML

soup = BeautifulSoup(r.content, 'html.parser')

for link in soup.find_all('a'):

    print(link.get('href'))
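Many href values are relative paths, and some <a> tags have no href at all, in which case get('href') returns None. One way to handle both, sketched here with the standard-library urljoin() function, is to resolve each value against the page URL. The hrefs list and the helper name absolute_links are hypothetical stand-ins for values collected by the loop above.

```python
from urllib.parse import urljoin

BASE_URL = "https://data-flair.training/"

# Stand-ins for values returned by link.get('href'); None models an <a> without href.
hrefs = ["/blogs/", "courses/python/", "https://example.com/page", None]

def absolute_links(base_url, hrefs):
    """Resolve each href against base_url, skipping missing values."""
    return [urljoin(base_url, href) for href in hrefs if href]

for url in absolute_links(BASE_URL, hrefs):
    print(url)
```

Absolute URLs pass through urljoin() unchanged, so the same function works for internal and external links.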


Extracting Image Information

Websites also contain images. The below example shows how to extract information about them.

Example of extracting images from a website:

import requests

from bs4 import BeautifulSoup

# Making a GET request

r = requests.get('https://www.amazon.in/')

soup = BeautifulSoup(r.content, 'html.parser')

images_list = []

images = soup.select('img')

for image in images:

    src = image.get('src')

    alt = image.get('alt')

    images_list.append({"src": src, "alt": alt})

for image in images_list:

    print(image)

Here we select all the ‘img’ tags from the website, get the ‘src’ and ‘alt’ attributes of each image, and print them.

Scraping multiple Pages

Scraping information from many pages one at a time can be tedious. With Beautiful Soup, we can also loop through multiple pages of the same website or through different URLs. We will see both cases.

When a website has multiple pages, we take a base URL and run a for loop to go through each of the pages. Let’s say we have a website with 10 pages, and we want to extract some information from each page, say the title.

Example of running through multiple pages:

import requests

from bs4 import BeautifulSoup as bs

URL = ''

for page in range(1, 11):  # pages 1 to 10

    req = requests.get(URL + str(page) + '/')

    soup = bs(req.text, 'html.parser')

    print(soup.title.text)
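Because range() excludes its end value, covering pages 1 to 10 needs range(1, 11). A small helper can make the page range explicit. In this sketch, the function name page_urls and the base URL https://example.com/page/ are placeholders, and the URL pattern (base URL, page number, trailing slash) is assumed to match the loop above.

```python
def page_urls(base_url, first_page, last_page):
    """Yield one URL per page number, from first_page to last_page inclusive."""
    for page in range(first_page, last_page + 1):
        yield f"{base_url}{page}/"

# 'https://example.com/page/' is a placeholder base URL for illustration.
urls = list(page_urls("https://example.com/page/", 1, 10))

print(len(urls))
print(urls[0], urls[-1])
```

When looping over many pages of a real site, pausing briefly between requests (for example with time.sleep()) helps avoid overloading the server.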

We can follow the same process to run through multiple URLs by storing them in a list.

Example of running through multiple URLs:

import requests

from bs4 import BeautifulSoup as bs

URLs = ['https://www.amazon.in/','https://www.flipkart.com/']

for i in URLs:

    req = requests.get(i)

    soup = bs(req.text, 'html.parser')

    print(soup.title.text)

Output

Online Shopping Site for Mobiles, Electronics, Furniture, Grocery, Lifestyle, Books & More. Best Offers!

Here we store all the URLs in a list and then run through each URL in the list using a loop to extract required information from each website.

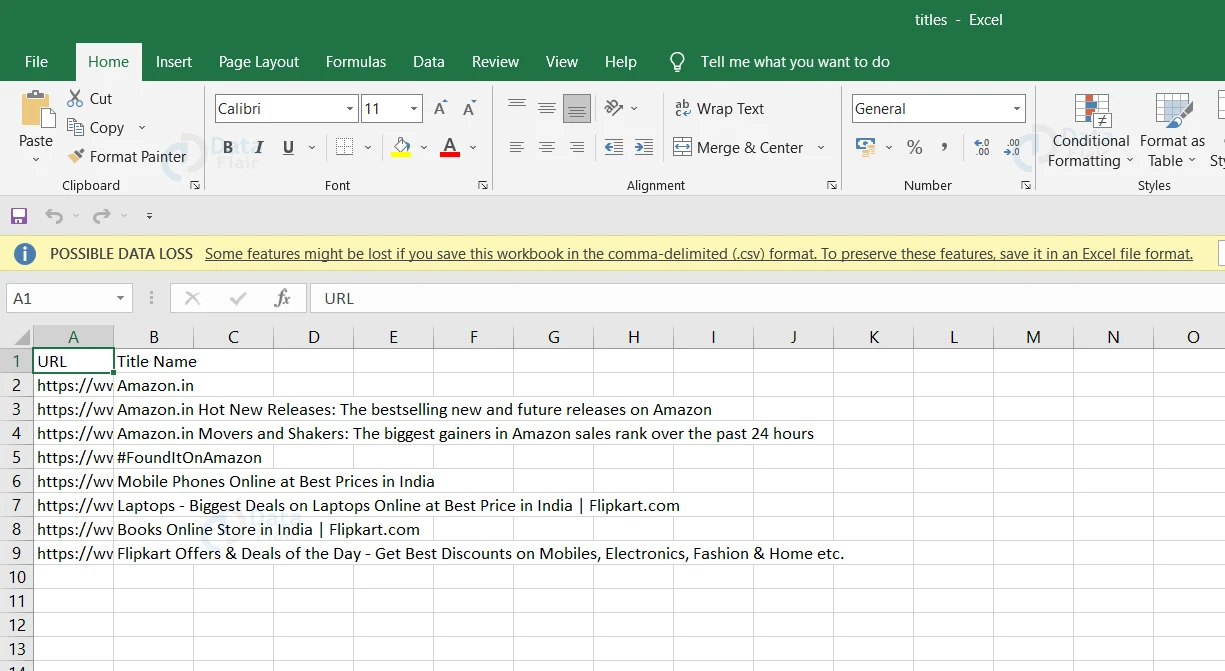

Saving Information in CSV

We can also save the information obtained from the website(s) on our device, for example as a CSV file. In the below example, we use a for loop to run through different URLs and get the title of each page. Finally, we save the titles and URLs in a CSV file.

import requests

from bs4 import BeautifulSoup as bs

import csv

URLs = ['https://www.amazon.in/gp/bestsellers/?ref_=nav_em_cs_bestsellers_0_1_1_2',

'https://www.amazon.in/gp/new-releases/?ref_=nav_em_cs_newreleases_0_1_1_3',

'https://www.amazon.in/gp/movers-and-shakers/?ref_=nav_em_ms_0_1_1_4',

'https://www.amazon.in/finds?ref_=nav_em_sbc_desktop_foundit_0_1_1_27',

'https://www.flipkart.com/mobile-phones-store?otracker=nmenu_sub_Electronics_0_Mobiles',

'https://www.flipkart.com/laptops-store?otracker=nmenu_sub_Electronics_0_Laptops',

'https://www.flipkart.com/books-store?otracker=nmenu_sub_Sports%2C%20Books%20%26%20More_0_Books',

'https://www.flipkart.com/offers-store?otracker=nmenu_offer-zone'

]

titles_list = []

for i in URLs:

    req = requests.get(i)

    soup = bs(req.text, 'html.parser')

    d = {}

    d['URL'] = i

    d['Title Name'] = soup.title.text

    titles_list.append(d)

# the file is created in the current working directory

filename = 'titles.csv'

with open(filename, 'w', newline='', encoding='utf-8') as f:

    w = csv.DictWriter(f, ['URL', 'Title Name'])

    w.writeheader()

    w.writerows(titles_list)
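To check that the saved data can be loaded again, the CSV can be read back with csv.DictReader. The sketch below uses hypothetical rows and an in-memory io.StringIO buffer in place of a real file, so it runs without touching the disk or the network.

```python
import csv
import io

# Hypothetical rows in the same shape as titles_list above.
rows = [
    {"URL": "https://example.com/a", "Title Name": "Page A"},
    {"URL": "https://example.com/b", "Title Name": "Page B, with a comma"},
]

# io.StringIO stands in for a real file so the sketch leaves nothing on disk.
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=["URL", "Title Name"])
writer.writeheader()
writer.writerows(rows)

# Rewind and read the CSV text back into dictionaries.
buffer.seek(0)
loaded = list(csv.DictReader(buffer))

print(loaded == rows)
```

The second title contains a comma on purpose: the csv module quotes such values when writing, so they survive the round trip intact.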


Conclusion

Here we are at the end of the article on web scraping with Python. In this article, we were introduced to the concept of web scraping, sending HTTP requests, and extracting website data using Beautiful Soup in Python. Hope you enjoyed this article. Happy learning!
