Inspiration

Fork Fighter began with a simple question: can an everyday object like a fork serve as a high-precision mixed-reality controller? This curiosity sparked an exploration into how playful interactions and computer vision could come together. The goal was to craft something whimsical on the surface yet technically ambitious underneath.

What it does

When a plate enters view, a virtual red chilli appears at its center. The player pierces the chilli with a real fork, which opens a portal and unleashes tiny vegetable invaders riding miniature tanks. They fire paintball shots at the display, splattering the scene and raising the pressure to survive.

The fork becomes the primary weapon, a physical interface offering tactile feedback no virtual controller can match.

If enemies escape the plate, they jump toward the headset and distort the mixed-reality environment.

How it was built

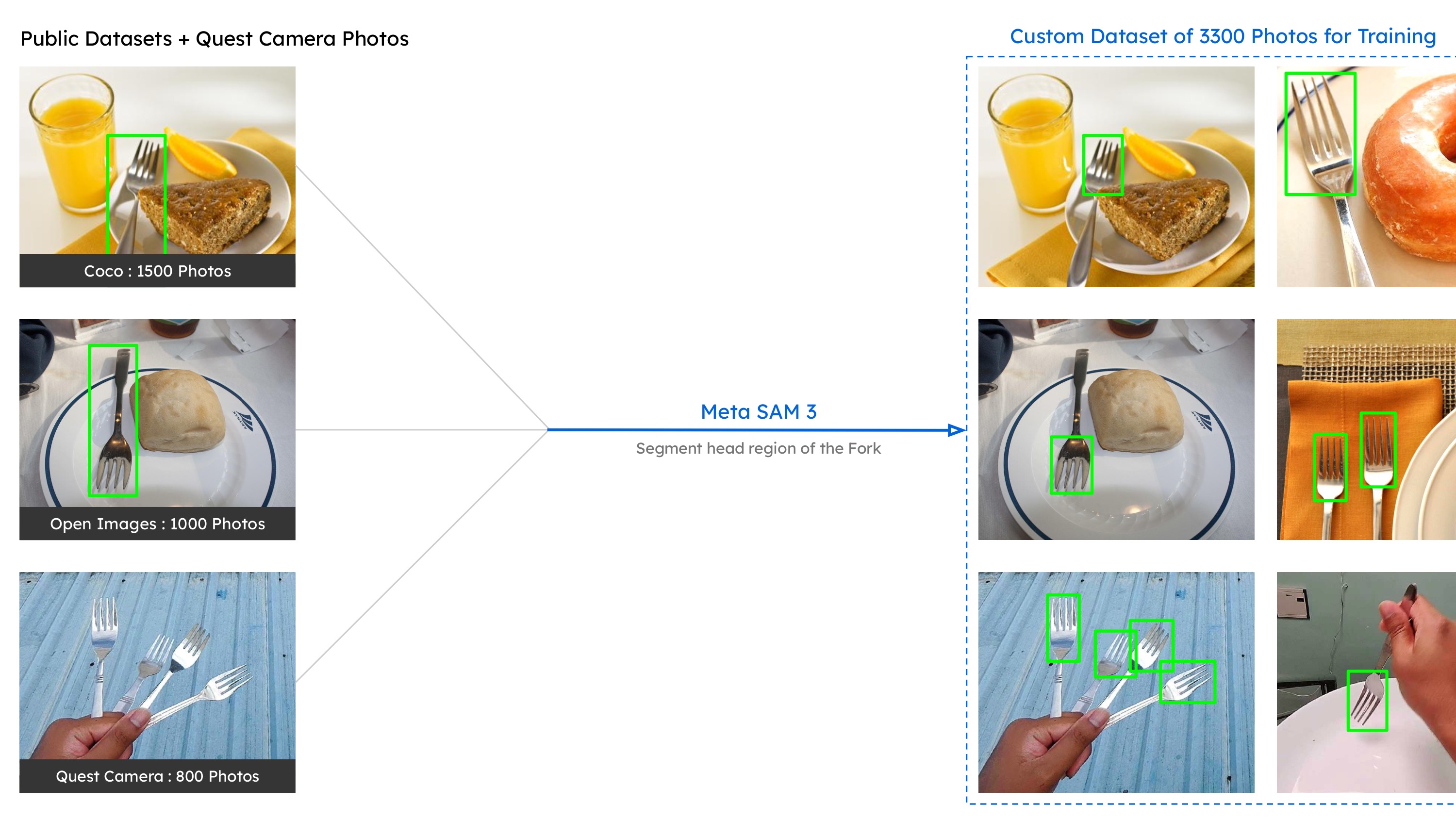

1. Custom Dataset for Fork-Head Detection

Only the head of the fork needed to be detected, but public datasets typically label the entire utensil. To close that gap, samples from COCO, Open Images, and a custom Quest app were combined, and Meta’s SAM 3 was used to segment and label the head region on more than 3,500 fork images in under an hour.
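
As a rough illustration of the labeling step, the sketch below turns a binary segmentation mask into a single YOLO-format bounding-box line (class, normalized center, width, height). It assumes the mask has already been exported as rows of 0/1 values. The function name `mask_to_yolo_label` and the mask layout are hypothetical, not taken from the project's actual pipeline.

```python
def mask_to_yolo_label(mask, class_id=0):
    """Convert a binary mask (list of rows of 0/1) into a YOLO label line.

    Hypothetical helper: assumes one object per mask and a rectangular,
    non-empty grid. Returns None when the mask contains no foreground pixels.
    """
    height = len(mask)
    width = len(mask[0])

    # Rows and columns that contain at least one foreground pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(width) if any(row[x] for row in mask)]
    if not rows:
        return None

    # Pixel-edge bounds: max is exclusive so a single pixel has size 1.
    x_min, x_max = cols[0], cols[-1] + 1
    y_min, y_max = rows[0], rows[-1] + 1

    # YOLO expects center and size normalized by the image dimensions.
    x_center = (x_min + x_max) / 2 / width
    y_center = (y_min + y_max) / 2 / height
    box_w = (x_max - x_min) / width
    box_h = (y_max - y_min) / height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"
```

Each returned line would go into the `.txt` label file that sits alongside its image in a YOLO-style dataset.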

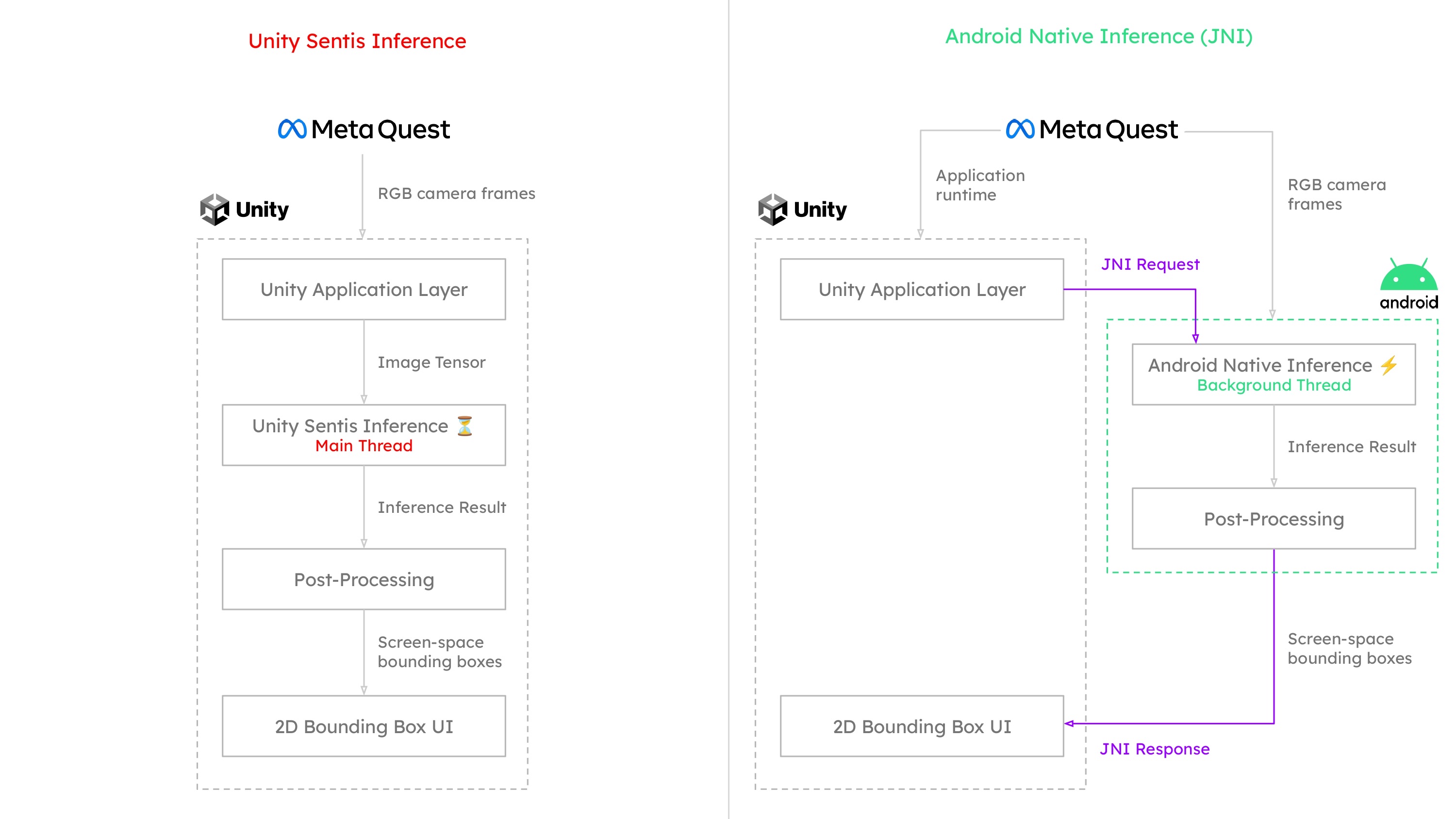

2. Near Real-Time Tracking at 72 FPS

To sustain 72 FPS, model inference was executed through Android JNI instead of Unity Sentis. This offered finer control over native processing, reduced latency, and improved access to hardware acceleration.

Two lightweight, single-class YOLO11n models serve as the backbone of the tracking system, one optimized for plate detection and the other for fork detection.

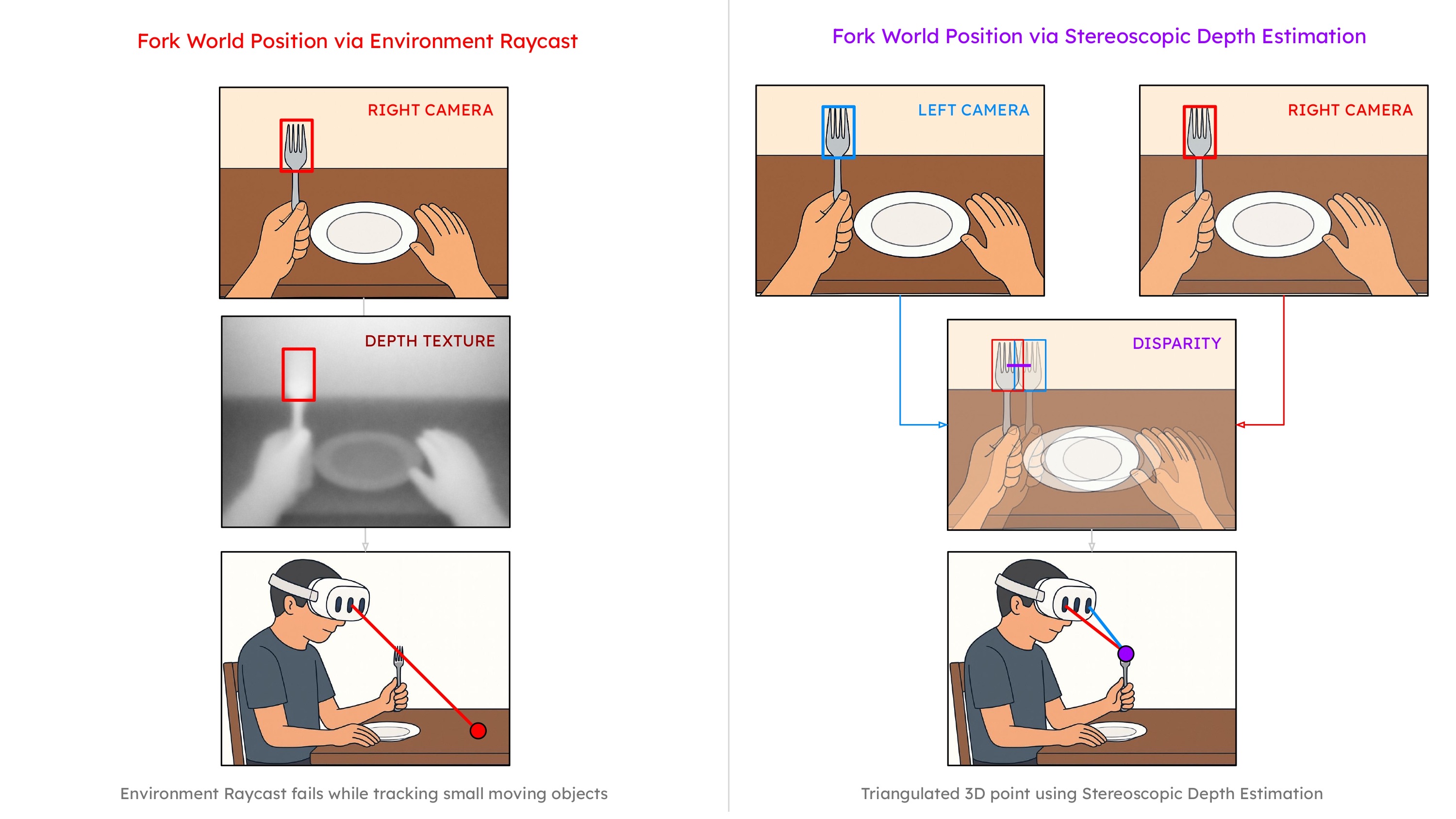

3. Calculating 3D Position Without Hit Testing

Traditional hit testing performed poorly for a small, fast, reflective object like a fork.

To solve this, a stereo-vision disparity method was implemented:

- Run inference on left and right camera frames via Android JNI

- Process detections

- Compute depth using disparity

- Reconstruct the fork’s 3D position in real time

This approach removed the need for hit tests and significantly increased stability.
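
The disparity step can be sketched with the standard pinhole stereo relation Z = f · B / d, where f is the focal length in pixels, B the baseline between the cameras, and d the horizontal disparity between matching detections. This is a minimal sketch that assumes rectified left and right frames with shared intrinsics. The `StereoRig` type, the `triangulate` function, and every numeric value are illustrative assumptions rather than the project's actual code or the headset's real calibration.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration for a rectified stereo pair."""
    focal_px: float    # focal length in pixels
    baseline_m: float  # distance between the two cameras, in meters
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def triangulate(rig, left_center, right_center, min_disparity=0.5):
    """Return (x, y, z) in meters in the left camera frame, or None.

    left_center and right_center are (u, v) pixel centers of the same
    detection in the left and right frames.
    """
    u_left, v_left = left_center
    u_right, _ = right_center

    # In a rectified pair the object shifts left in the right image,
    # so the disparity is positive for points in front of the cameras.
    disparity = u_left - u_right
    if disparity < min_disparity:
        return None  # too far away, or a mismatched detection pair

    z = rig.focal_px * rig.baseline_m / disparity
    # Back-project the left-image pixel to 3D at depth z.
    x = (u_left - rig.cx) * z / rig.focal_px
    y = (v_left - rig.cy) * z / rig.focal_px
    return (x, y, z)
```

With an assumed focal length of 500 px and a 6 cm baseline, a 60-pixel disparity corresponds to a depth of 0.5 m. Rejecting small or negative disparities is one simple way to drop frames where the two detections do not belong to the same object.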

Challenges I ran into

- Maintaining stable detections despite glare, motion blur, and the fork’s small size

- Sustaining 72 FPS while performing stereo inference

- Calibrating left and right camera timing and alignment

- Balancing strict performance requirements with responsive, enjoyable gameplay

Accomplishments that I am proud of

- Built a first-of-its-kind mixed-reality game controlled by an everyday fork

- Created a high-performance stereo tracking system without hit testing

- Assembled a robust fork-head dataset using SAM 3

- Released the entire project as open source to encourage learning and experimentation

What I learned

I learned how much complexity hides behind interactions that feel simple in mixed reality. I gained deeper experience with segmentation models, lightweight YOLO variants, and stereo-vision pipelines.

I also discovered how powerful MR can feel when physical tactile feedback connects directly to virtual action.

What is next for Fork Fighter

- Expanding interaction types such as scooping, slicing, blocking, and combo attacks

- Adding more complex enemy behavior and boss waves

- Experimenting with multiplayer and shared MR scenarios

- Exploring other everyday objects as controllers

- Continuing to refine the model, dataset, and game experience

Fork Fighter is now open source, and I hope it inspires new ideas for turning ordinary objects into dynamic mixed-reality interfaces.
