Pandas Applications and Use Cases


When discussing Python as a data science tool, Pandas, a vast and essential library for data manipulation and analysis, stands out at the forefront. Its robust components are designed to work well with structured data, which makes it central to the data science sphere. Created to simplify the data wrangling process, Pandas is today a widespread, feature-rich, multipurpose tool used by data scientists, professional analysts, and newcomers alike.

At its core, Pandas provides versatile data structures, such as the DataFrame, that make it easy to store, manipulate, and analyse tabular data. Built on top of NumPy arrays, Pandas offers a user-friendly interface that simplifies routine data tasks, from cleaning and preparation to exploration and analysis.

This piece explores various applications and real-world situations where Pandas can be of great help. We aim to showcase its diverse capabilities and real-life use cases, presenting it as a tool that facilitates data analysis across many fields. From debugging and untangling messy data to producing insights and explaining them in a meaningful way, Pandas enables data professionals to derive value with little effort.

Along the way, we will explore case studies that show how Pandas is used in practice. By presenting concrete scenarios from different contexts, this guide aims to give readers the confidence to use Pandas in their own data tasks and investigations, and a better grasp of its significance in the constantly evolving field of data science.

Data Cleaning and Preparation

Data cleaning is a vital part of analysis because it ensures that the data is correct, consistent, and ready to be analysed. Pandas is a general-purpose tool for anyone who works with data, offering many functions that simplify everyday cleaning tasks. This section discusses how Pandas handles missing values, removes duplicates, and transforms data.

Handling Missing Values

Real-world datasets often have missing values that need to be handled carefully to avoid bias or errors in the analysis. Pandas offers several ways to deal with missing values, including:

isnull() and notnull(): methods that detect which entries in a DataFrame are missing (or present).

dropna(): removes rows or columns that contain missing values.

fillna(): fills missing values with a value or method that you specify.

Example:

import pandas as pd

# Create a DataFrame with missing values
data = {'A': [1, 2, None, 4],
        'B': [5, None, 7, 8]}
df = pd.DataFrame(data)

# Check for missing values
print(df.isnull())

# Drop rows with missing values
cleaned_df = df.dropna()

# Fill missing values with each column's mean
filled_df = df.fillna(df.mean())

print(cleaned_df)
print(filled_df)
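dropna() and fillna() also accept parameters that control exactly what is dropped or filled. The following sketch reuses the same small DataFrame to show a few of them: thresh and subset for dropna(), a per-column dictionary for fillna(), and forward filling with ffill(). The particular columns and fill values are illustrative only.

```python
import pandas as pd

data = {'A': [1, 2, None, 4],
        'B': [5, None, 7, 8]}
df = pd.DataFrame(data)

# Count missing values per column
missing_counts = df.isnull().sum()

# Keep only rows that have at least 2 non-missing values
at_least_two = df.dropna(thresh=2)

# Drop a row only when column 'A' is missing
drop_on_a = df.dropna(subset=['A'])

# Fill each column with a different value: 0 for 'A', the median for 'B'
per_column = df.fillna({'A': 0, 'B': df['B'].median()})

# Propagate the last valid observation forward
forward_filled = df.ffill()

print(missing_counts)
print(drop_on_a)
print(per_column)
print(forward_filled)
```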

Removing Duplicates

Removing duplicate rows from a dataset is crucial for keeping statistics accurate. The drop_duplicates() method in Pandas removes duplicate rows, comparing either all columns or, through its subset parameter, only specific columns.

Example:

import pandas as pd

# Create a DataFrame with duplicate rows
data = {'A': [1, 2, 2, 3],
        'B': ['x', 'y', 'y', 'z']}
df = pd.DataFrame(data)

# Remove duplicate rows
cleaned_df = df.drop_duplicates()

print(cleaned_df)
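The example above compares whole rows. In the sketch below, column B is changed so that rows repeat only in column A, which shows how the subset and keep parameters of drop_duplicates(), and the related duplicated() method, change the result. The data is made up for illustration.

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, 2, 3],
                   'B': ['x', 'y', 'w', 'z']})

# Flag rows whose value in column 'A' has already appeared
is_dup = df.duplicated(subset=['A'])

# Judge duplicates by column 'A' only, keeping the last occurrence
last_kept = df.drop_duplicates(subset=['A'], keep='last')

# keep=False drops every row that has a duplicate
no_dups_at_all = df.drop_duplicates(subset=['A'], keep=False)

print(is_dup)
print(last_kept)
print(no_dups_at_all)
```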

Transforming Data

With Pandas, users can reshape, pivot, combine, and otherwise transform data to meet the needs of their analyses. Some common transformation operations are:

Reshaping: pivot_table(), melt(), stack(), and unstack()

Combining data: join(), concat(), and merge()

Applying functions: apply(), map(), and applymap() (which pandas 2.1 deprecated in favour of DataFrame.map())
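As a minimal sketch of the operations listed above, the following example combines two small DataFrames with concat(), reshapes the result with pivot_table(), and transforms columns with map() and apply(). The store names, quarters, and region codes are hypothetical.

```python
import pandas as pd

# Sales for two (hypothetical) quarters
q1 = pd.DataFrame({'store': ['North', 'South'], 'sales': [100, 80]})
q2 = pd.DataFrame({'store': ['North', 'South'], 'sales': [120, 90]})

# Combine: stack the two frames vertically, tagging each row with its quarter
combined = pd.concat([q1.assign(quarter='Q1'), q2.assign(quarter='Q2')],
                     ignore_index=True)

# Reshape: one row per store, one column per quarter
wide = combined.pivot_table(index='store', columns='quarter', values='sales')

# Apply functions: map store names to region codes, apply a function to sales
region_codes = {'North': 'N', 'South': 'S'}
combined['region'] = combined['store'].map(region_codes)
combined['sales_k'] = combined['sales'].apply(lambda s: s / 1000)

print(combined)
print(wide)
```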


By utilising these functions, Pandas enables users to quickly and easily prepare data for analysis, laying a stable foundation for deriving valuable insights from a wide range of datasets.

Data Exploration and Analysis

Exploratory Data Analysis (EDA) is an integral part of any data analysis project because it helps analysts understand a dataset's characteristics and patterns. Because Pandas has many tools for summarizing, aggregating, and visualizing data, it is a key part of EDA. This section looks at how Pandas enables users to perform EDA effectively.

Summarizing Data

Pandas has many functions for computing descriptive statistics and summarising a dataset. The mean, median, standard deviation, minimum, maximum, and quartiles are all common summary statistics.

Example:

import pandas as pd

# Create a DataFrame
data = {'A': [1, 2, 3, 4, 5],
        'B': [6, 7, 8, 9, 10]}
df = pd.DataFrame(data)

# Summary statistics
print(df.describe())
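describe() bundles several statistics at once; each is also available as its own method, and agg() lets you pick a custom set. A brief sketch on the same DataFrame:

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, 3, 4, 5],
                   'B': [6, 7, 8, 9, 10]})

# Individual statistics, computed column by column
col_means = df.mean()
col_medians = df.median()
col_std = df.std()        # sample standard deviation (ddof=1)
q75 = df.quantile(0.75)   # 75th percentile (upper quartile)

# A custom selection of statistics in one table
summary = df.agg(['mean', 'median', 'min', 'max'])

print(summary)
```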

Group-by Operations

A common task in data analysis is grouping records by one or more categorical variables. The groupby() method in Pandas splits the data into groups based on given criteria and then applies a function to each group.

Example:

import pandas as pd

# Create a DataFrame
data = {'Category': ['A', 'B', 'A', 'B', 'A'],
        'Value': [1, 2, 3, 4, 5]}
df = pd.DataFrame(data)

# Group by 'Category' and compute the mean
grouped_df = df.groupby('Category').mean()

print(grouped_df)
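Beyond a single mean, groupby() can compute several aggregations at once, name its output columns, or return per-row results with transform(). A short sketch using the same data; the share_of_group column is an illustrative addition:

```python
import pandas as pd

df = pd.DataFrame({'Category': ['A', 'B', 'A', 'B', 'A'],
                   'Value': [1, 2, 3, 4, 5]})

# Several aggregations per group
stats = df.groupby('Category')['Value'].agg(['count', 'sum', 'mean'])

# Named aggregation: choose the output column names explicitly
named = df.groupby('Category').agg(total=('Value', 'sum'),
                                   largest=('Value', 'max'))

# transform() returns results aligned with the original rows,
# here each row's share of its group's total
group_totals = df.groupby('Category')['Value'].transform('sum')
df['share_of_group'] = df['Value'] / group_totals

print(stats)
print(named)
print(df)
```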

Plotting Functionality

Visualising data is a great way to gain new insights from it. Pandas' plotting methods are built on Matplotlib, a popular plotting library, so plots are easy to create and customise.

Example:

import pandas as pd
import matplotlib.pyplot as plt

# Create a DataFrame
data = {'A': [1, 2, 3, 4, 5],
        'B': [6, 7, 8, 9, 10]}
df = pd.DataFrame(data)

# Plot a line chart (one line per column)
df.plot()
plt.xlabel('Index')
plt.ylabel('Values')
plt.title('Line Chart')
plt.show()

In addition to line charts, Pandas supports many other plot types, including histograms, bar plots, scatter plots, and box plots. These let users see different aspects of how the data is distributed and how variables are related.
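A sketch of the other plot types mentioned above, drawn on the same DataFrame through the kind parameter of DataFrame.plot(). It selects Matplotlib's non-interactive Agg backend so it can run as a script without a display; drop the matplotlib.use() line when working in a notebook. The output file name is a placeholder.

```python
import matplotlib
matplotlib.use('Agg')  # non-interactive backend: lets the script run without a display
import matplotlib.pyplot as plt
import pandas as pd

df = pd.DataFrame({'A': [1, 2, 3, 4, 5],
                   'B': [6, 7, 8, 9, 10]})

fig, axes = plt.subplots(2, 2, figsize=(8, 6))

# Histogram: distribution of the values in column A
df['A'].plot(kind='hist', bins=5, ax=axes[0, 0], title='Histogram of A')

# Bar plot: one bar per row for each column
df.plot(kind='bar', ax=axes[0, 1], title='Bar plot')

# Scatter plot: relationship between A and B
df.plot(kind='scatter', x='A', y='B', ax=axes[1, 0], title='A vs B')

# Box plot: median, quartiles, and spread of each column
df.plot(kind='box', ax=axes[1, 1], title='Box plot')

fig.tight_layout()
fig.savefig('plot_types.png')  # placeholder file name; use plt.show() interactively
```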

These tools let data scientists and analysts quickly explore and examine datasets, finding valuable insights that support informed decisions and deeper studies. Whether you're summarizing data, performing group-by operations, or creating visualizations, Pandas gives you the power and flexibility you need for effective EDA.

Time Series Analysis with Pandas

Time series data, that is, data collected over time at regular intervals, brings its own analytical challenges as well as extra opportunities for insight. Working with time series data in Pandas is straightforward, making it a valuable tool for time series analysis. We will examine Pandas' time series features and demonstrate how they can be used for tasks such as datetime indexing, resampling, time-based calculations, trend analysis, seasonality detection, and forecasting.

Datetime Indexing

Pandas has first-rate support for datetime indexing, which makes time series data easy to work with and examine. By creating a datetime index, users can perform time-based operations and retrieve records for specific dates or periods.

Example:

import pandas as pd

# Create a time series DataFrame with datetime index

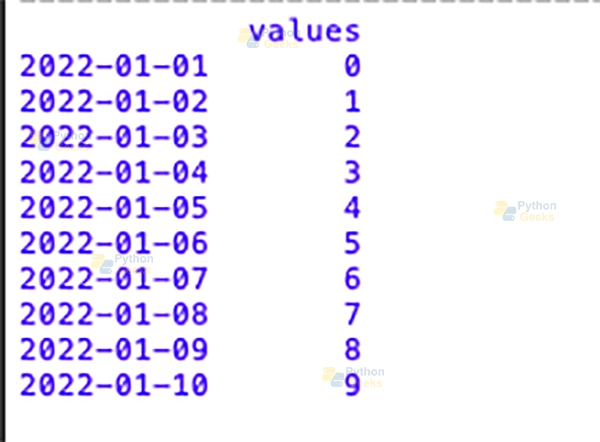

dates = pd.date_range(start='2022-01-01', end='2022-01-10')

data = {'values': range(10)}

ts_df = pd.DataFrame(data, index=dates)

print(ts_df)

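Once the index is a DatetimeIndex, rows can be retrieved with date strings and filtered on calendar attributes. The sketch below rebuilds the same ts_df; note that slicing with .loc includes both endpoints.

```python
import pandas as pd

dates = pd.date_range(start='2022-01-01', end='2022-01-10')
ts_df = pd.DataFrame({'values': range(10)}, index=dates)

# Select a single day by its date string (returns that row)
jan_3 = ts_df.loc['2022-01-03']

# Slice a date range; with .loc both endpoints are included
jan_3_to_5 = ts_df.loc['2022-01-03':'2022-01-05']

# Filter on attributes of the DatetimeIndex: weekends only (Mon=0 ... Sun=6)
weekends = ts_df[ts_df.index.dayofweek >= 5]

print(jan_3)
print(jan_3_to_5)
print(weekends)
```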

Resampling

Resampling changes the frequency at which time series data is represented. The resample() method in Pandas lets users upsample (increase the frequency) or downsample (decrease the frequency) the data and then apply aggregation functions over the new intervals.

Example:

# Resample ts_df (from the previous example) to monthly frequency and calculate the mean
# 'ME' is the month-end alias in pandas >= 2.2; older versions use 'M'
monthly_mean = ts_df.resample('ME').mean()

print(monthly_mean)
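The monthly example collapses all ten days into a single row. Here is a sketch of a more visible downsample (3-day sums) and an upsample (12-hour steps filled forward) on the same ts_df, assuming pandas 2.2 or later for the lowercase 'h' hourly alias.

```python
import pandas as pd

dates = pd.date_range(start='2022-01-01', end='2022-01-10')
ts_df = pd.DataFrame({'values': range(10)}, index=dates)

# Downsample: aggregate daily values into 3-day bins
three_day_sum = ts_df.resample('3D').sum()

# Upsample: move to 12-hour steps and forward-fill the new slots
# ('h' is the hourly alias in pandas >= 2.2; older versions use 'H')
half_daily = ts_df.resample('12h').ffill()

print(three_day_sum)
print(half_daily.head())
```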

Time-Based Calculations

Pandas makes time-based calculations easy, such as adding and subtracting dates, converting between date formats, shifting and differencing values, and rolling-window calculations.

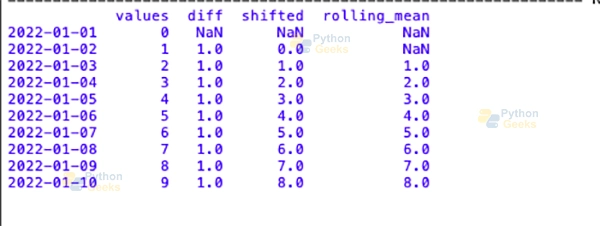

Example:

# Calculate the difference between consecutive values
ts_df['diff'] = ts_df['values'].diff()

# Shift the data by one period
ts_df['shifted'] = ts_df['values'].shift(1)

# Calculate the rolling mean with a window size of 3
ts_df['rolling_mean'] = ts_df['values'].rolling(window=3).mean()

print(ts_df)

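The example above covers differencing, shifting, and rolling windows. For converting and doing arithmetic on dates, here is a sketch on a small, hypothetical table of events whose dates are stored as strings.

```python
import pandas as pd

# Hypothetical events with dates stored as strings (e.g. read from a CSV file)
events = pd.DataFrame({'start': ['2022-01-01', '2022-01-15', '2022-02-03'],
                       'end': ['2022-01-05', '2022-01-20', '2022-02-10']})

# Convert the string columns to datetime64 values
events['start'] = pd.to_datetime(events['start'])
events['end'] = pd.to_datetime(events['end'])

# Subtracting dates gives Timedelta values; .dt.days extracts whole days
events['duration_days'] = (events['end'] - events['start']).dt.days

# Add a fixed Timedelta, or a calendar-aware offset (roll forward to month end)
events['follow_up'] = events['end'] + pd.Timedelta(days=7)
events['month_end'] = events['start'] + pd.offsets.MonthEnd(0)

# The .dt accessor exposes calendar components
events['weekday'] = events['start'].dt.day_name()

print(events)
```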

Trend Analysis and Seasonality Detection

Pandas, together with libraries such as statsmodels, can be used to identify trends and seasonality in time series data. Trend analysis looks for long-term patterns or tendencies in the data, while seasonality detection aims to identify patterns that recur at regular intervals.

Example:

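import statsmodels.api as sm

# Perform trend analysis using classical seasonal decomposition.
# seasonal_decompose needs at least two full cycles of data. For the daily ts_df
# above it would infer a weekly period (7), which 10 observations cannot cover,
# so a short period is passed explicitly here purely for illustration.
decomposition = sm.tsa.seasonal_decompose(ts_df['values'], model='additive', period=3)

# Extract and print the trend component
trend = decomposition.trend
print(trend)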
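The idea behind additive decomposition can also be sketched with Pandas alone: a centred rolling mean estimates the trend, and averaging the detrended values by weekday estimates a weekly seasonal pattern. This is a simplified stand-in for what seasonal_decompose() does, not a copy of its implementation, and the series below is synthetic (a linear trend plus a made-up weekly pattern) so that the result is easy to check.

```python
import numpy as np
import pandas as pd

# Synthetic daily series: 8 weeks of a linear trend plus a repeating weekly pattern
dates = pd.date_range('2022-01-03', periods=56, freq='D')  # 2022-01-03 is a Monday
weekly_pattern = np.array([0, 1, 2, 3, 2, 1, -9], dtype=float)  # sums to zero
values = np.arange(56) * 0.5 + np.tile(weekly_pattern, 8)
series = pd.Series(values, index=dates)

# Trend: a 7-day centred moving average averages out one full weekly cycle
trend = series.rolling(window=7, center=True).mean()

# Seasonality: average the detrended values for each weekday (0 = Monday)
detrended = series - trend
seasonal = detrended.groupby(detrended.index.dayofweek).mean()
seasonal = seasonal - seasonal.mean()  # centre the seasonal component on zero

print(trend.dropna().head())
print(seasonal)
```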

Forecasting

A variety of forecasting methods, including autoregressive integrated moving average (ARIMA) models, exponential smoothing (ETS), and machine learning algorithms, can be applied to time series data prepared with Pandas, typically through libraries such as statsmodels.

Using the ARIMA model, for instance:

from statsmodels.tsa.arima.model import ARIMA

# Fit an ARIMA(1, 1, 1) model to the 'values' column
model = ARIMA(ts_df['values'], order=(1, 1, 1))
fit_model = model.fit()

# Forecast the next five periods
forecast = fit_model.forecast(steps=5)
print(forecast)

By using these features and methods, Pandas users can analyse time series data effectively and draw conclusions from it. Together with libraries such as statsmodels, Pandas covers the full range of time series work, including datetime indexing, resampling, time-based calculations, trend analysis, seasonality detection, and forecasting.

Natural Language Processing with Pandas

When you want to do natural language processing (NLP), Pandas can help you prepare and explore text data. Here are some ways Pandas can be used in NLP:

1. Data Loading and Preprocessing: Pandas can load text data from many sources, such as databases, CSV files, and Excel spreadsheets. Once the data is loaded, its vectorised string methods (the .str accessor) support preprocessing steps such as lowercasing, punctuation removal, and simple tokenisation, and stop-word removal can be added with a little custom code.

2. Text Mining and Analysis: Pandas helps organise data for text mining and analysis tasks such as word frequency analysis, sentiment analysis, and topic modelling. You can group, filter, and aggregate text data to uncover new ideas and useful information.

3. Feature Engineering: Pandas helps you create new features from text data, for example one-hot encodings via get_dummies(), and it works alongside libraries such as scikit-learn and gensim for TF-IDF (Term Frequency-Inverse Document Frequency) vectorization and word embeddings. These features can then be fed into machine learning models.

4. Text Data Visualization: Because Pandas works well with visualization libraries such as Matplotlib and Seaborn, you can create useful visualizations of text data. Word clouds, bar plots of word frequencies, and scatter plots of word embeddings are a few visualizations that help you understand and make sense of text data.

5. Named Entity Recognition (NER): Pandas can be used together with an NLP library such as spaCy or NLTK to perform named entity recognition. Once NER has been run, Pandas can help organise and analyse the extracted entities so they can be used in later steps.

6. Text Classification and Sentiment Analysis: Pandas can encode categorical variables and split text data into training and test sets to prepare it for classification tasks. It can then be used to evaluate the results of text classification models and to analyse the sentiment expressed in text data.

7. Textual Data Integration: Pandas lets you integrate text data with other types of data, such as numerical or categorical records, for a complete analysis. This gives a comprehensive view of datasets that contain diverse types of data.

8. Web Scraping and Text Data Collection: For web scraping, you can combine Pandas with libraries such as BeautifulSoup or Scrapy to collect text data from websites and other online resources. Once the scraped text has been collected, Pandas can organise and preprocess it for further analysis.
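A minimal sketch of points 1 to 3 using only Pandas: lowercasing, punctuation removal, and tokenisation with the .str accessor, word frequencies with explode() and value_counts(), and a binary bag-of-words matrix with str.get_dummies(). The reviews and the tiny stop-word list are hypothetical; real projects usually take stop words from a library such as NLTK or spaCy.

```python
import pandas as pd

# A few hypothetical product reviews
reviews = pd.DataFrame({'text': ['Great product, works great!',
                                 'Terrible. It broke after a day.',
                                 'Works as described; great value.']})

# Tiny illustrative stop-word list (hypothetical)
stop_words = {'a', 'after', 'as', 'it'}

# Preprocess: lowercase, strip punctuation, split on whitespace
reviews['clean'] = (reviews['text']
                    .str.lower()
                    .str.replace(r'[^\w\s]', '', regex=True))
reviews['tokens'] = reviews['clean'].str.split()

# Remove stop words from each token list
reviews['tokens'] = reviews['tokens'].apply(
    lambda toks: [t for t in toks if t not in stop_words])

# Word frequencies: one row per token, then count occurrences
word_counts = reviews['tokens'].explode().value_counts()

# Simple features: token count per review and a binary bag-of-words matrix
reviews['n_tokens'] = reviews['tokens'].str.len()
bag_of_words = reviews['tokens'].str.join(' ').str.get_dummies(sep=' ')

print(word_counts.head())
print(bag_of_words)
```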

By using Pandas for natural language processing tasks, analysts and data scientists can quickly manipulate, explore, and analyse text data. This supports tasks such as sentiment analysis, document classification, and information retrieval.

Neuroscience with Pandas

Pandas, with its many ways to analyse and visualise data, has proved very useful in neuroscience. By providing powerful tools for handling and analysing data, it gives neuroscientists a sturdy framework for conducting research, processing experimental data, and gaining a deeper understanding of how the brain works.

Preprocessing and cleaning of data

In neuroscience, datasets frequently come from a variety of sources, such as behavioural assessments, imaging studies, and recordings of brain activity. These datasets can be large, noisy, and incomplete or erroneous. Pandas has a set of functions for preprocessing and cleaning data that make it easy for researchers to deal with these problems.

Neuroscientists can use Pandas to do things like:

- Merging and combining multiple datasets for a comprehensive analysis.

- Detecting missing values and handling them through imputation or deletion.

- Standardising data formats and units so that every experiment follows the same conventions.

- Identifying and excluding outliers that might skew statistical analyses.
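A sketch of these cleaning steps on hypothetical reaction-time data: unit standardisation, concatenation of two sessions, a merge with subject metadata, removal of missing values, and outlier exclusion. All column names and values are made up, and Tukey's fences (1.5 * IQR beyond the quartiles) are just one common outlier rule among several.

```python
import pandas as pd

# Hypothetical reaction times from two sessions;
# session 2 was logged in seconds rather than milliseconds
session1 = pd.DataFrame({'subject': ['s01', 's01', 's02', 's02'],
                         'rt_ms': [420.0, 455.0, None, 480.0]})
session2 = pd.DataFrame({'subject': ['s01', 's02', 's02'],
                         'rt_s': [0.43, 0.47, 2.90]})

# Standardise units before combining: seconds -> milliseconds
session2['rt_ms'] = session2.pop('rt_s') * 1000

# Combine the sessions, tagging each row with its session number
trials = pd.concat([session1.assign(session=1), session2.assign(session=2)],
                   ignore_index=True)

# Attach hypothetical subject-level metadata via the shared 'subject' key
subjects = pd.DataFrame({'subject': ['s01', 's02'],
                         'group': ['control', 'patient']})
trials = trials.merge(subjects, on='subject', how='left')

# Missing values: drop trials with no recorded reaction time
trials = trials.dropna(subset=['rt_ms'])

# Outliers: keep trials inside Tukey's fences
q1, q3 = trials['rt_ms'].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = trials['rt_ms'].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
clean_trials = trials[in_range]

print(clean_trials)
```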

Exploratory Data Analysis (EDA)

EDA is a crucial step in understanding how neuroscience datasets are structured and what patterns they exhibit. Pandas makes EDA simpler by providing easy ways to summarize, visualize, and explore data.

Neuroscientists can use Pandas to do the following:

- Compute descriptive statistics, such as the mean, median, standard deviation, and percentiles.

- Use visualisation tools such as histograms, scatter plots, and heatmaps to show how the data is distributed and how variables relate to one another.

- Conduct correlation analysis to uncover associations between distinct neural variables.

- Examine how measurements change over time using Pandas' time series tools.

Statistical Analysis and Hypothesis Testing

Statistical analysis is necessary for drawing sound conclusions from experimental data in neuroscience. Pandas integrates well with other Python libraries, such as NumPy and SciPy, so many specialised statistical tests and hypothesis tests can be run directly on data held in DataFrames.

Researchers can use Pandas to:

- Apply parametric and non-parametric statistical tests to compare specific groups or conditions.

- Run ANOVA (Analysis of Variance) and post hoc tests to look for differences among experimental groups.

- Apply machine learning algorithms to neuroimaging data for tasks such as clustering, regression, and classification.

- Use statistical inference techniques to test hypotheses and interpret the results.
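A sketch of the first two points, assuming SciPy is installed: the data lives in a DataFrame, groupby() splits it by condition, and scipy.stats runs a Welch t-test, a Mann-Whitney U test, and a one-way ANOVA. The firing rates and condition names are hypothetical.

```python
import pandas as pd
from scipy import stats

# Hypothetical firing rates (spikes/s) recorded under three conditions
df = pd.DataFrame({
    'condition': ['baseline'] * 5 + ['drug_a'] * 5 + ['drug_b'] * 5,
    'rate': [10.1, 9.8, 10.4, 10.0, 9.9,
             12.2, 11.8, 12.5, 12.0, 11.9,
             10.3, 10.6, 9.7, 10.2, 10.1],
})

# One NumPy array of rates per condition
groups = {name: g['rate'].to_numpy() for name, g in df.groupby('condition')}

# Parametric: Welch's t-test (no equal-variance assumption) for two conditions
t_res = stats.ttest_ind(groups['baseline'], groups['drug_a'], equal_var=False)

# Non-parametric alternative on the same pair: Mann-Whitney U test
u_res = stats.mannwhitneyu(groups['baseline'], groups['drug_a'],
                           alternative='two-sided')

# One-way ANOVA across all three conditions
f_res = stats.f_oneway(*groups.values())

print(f"Welch t-test p = {t_res.pvalue:.4g}")
print(f"Mann-Whitney U p = {u_res.pvalue:.4g}")
print(f"One-way ANOVA p = {f_res.pvalue:.4g}")
```

A significant ANOVA only says that some condition differs; a post hoc test would still be needed to say which.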

Data Visualization and Reporting

Effectively sharing findings is essential in neuroscience research. When neuroscientists utilise libraries such as Pandas, Matplotlib, and Seaborn together, they can create engaging graphs and reports to share their findings with other scientists and the public.

With Pandas, researchers can:

- Customise visualizations to draw attention to key findings and patterns in neuroscience datasets.

- Create publication-quality figures and plots for scientific papers and presentations.

- Use libraries such as Plotly to make interactive visualizations for dynamic exploration of complex neural data.

- Connect Pandas to tools such as Jupyter Notebooks and Markdown to automate report generation for reproducible research workflows.

Case Study: Analyzing Brain Connectivity Data

To show how Pandas can be used in neuroscience, consider a case in which researchers study brain connectivity data from functional magnetic resonance imaging (fMRI) experiments. They can use Pandas to combine the raw fMRI data, compute functional connectivity matrices, and apply graph theory concepts to describe how brain networks are organised.
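A toy version of this workflow, assuming each column already holds a preprocessed time series for one brain region: DataFrame.corr() yields a functional connectivity matrix, a threshold turns it into an adjacency matrix, and node degree serves as a simple graph measure. The region names, the synthetic signals, and the 0.5 threshold are all illustrative; a dedicated graph library such as NetworkX would normally take over for richer network analysis.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Hypothetical regional time series: 200 time points x 4 regions.
# 'V1' and 'V2' share a common signal, so they should correlate strongly.
n_timepoints = 200
shared = rng.standard_normal(n_timepoints)
bold = pd.DataFrame({
    'V1': shared + 0.3 * rng.standard_normal(n_timepoints),
    'V2': shared + 0.3 * rng.standard_normal(n_timepoints),
    'PFC': rng.standard_normal(n_timepoints),
    'HPC': rng.standard_normal(n_timepoints),
})

# Functional connectivity: pairwise Pearson correlations between regions
fc = bold.corr()

# Graph view: an edge wherever |correlation| exceeds the threshold
threshold = 0.5
adj_values = (fc.abs().to_numpy() > threshold).astype(int)
np.fill_diagonal(adj_values, 0)  # no self-connections
adjacency = pd.DataFrame(adj_values, index=fc.index, columns=fc.columns)

# Node degree: number of suprathreshold connections per region
degree = adjacency.sum(axis=1)

print(fc.round(2))
print(degree)
```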

By using Pandas' functions for manipulating, analysing, and visualising data, neuroscientists can gain a deeper understanding of how the brain works and help unravel its mysteries.

Data Wrangling and Transformation with Pandas

Data wrangling, also known as “data munging,” is the process of cleaning, transforming, and reshaping raw data so that it can be analysed. Pandas excels at this because it has many features and techniques for reshaping data, joining or merging datasets, and applying custom transformations. In this section, we discuss Pandas' data wrangling functionality and give examples of common wrangling tasks.

Reshaping Data: Reshaping changes the layout of a dataset through operations such as pivoting, melting, stacking, and unstacking. Pandas has easy-to-use tools for transforming the shape of data to meet the needs of an analysis.

Pivot Tables: Pivot tables let users summarise and organise data by one or more categorical variables. The pivot_table() method in Pandas makes it easy to build pivot tables from DataFrames.

Example:

import pandas as pd

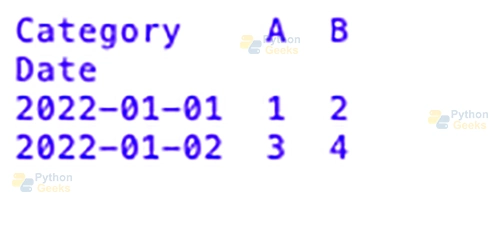

# Create a DataFrame

data = {'Date': ['2022-01-01', '2022-01-01', '2022-01-02', '2022-01-02'],

'Category': ['A', 'B', 'A', 'B'],

'Value': [1, 2, 3, 4]}

df = pd.DataFrame(data)

# Create a pivot table

pivot_table = df.pivot_table(index='Date', columns='Category', values='Value', aggfunc='sum')

print(pivot_table)


Melting: Melting turns wide-format data into long-format data, which is often easier to analyse and plot. The melt() function in Pandas reshapes DataFrame objects in this way.

Example:

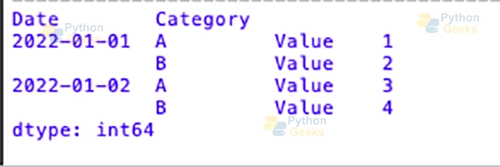

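# Melt the wide pivot table from the previous example back into long format
# (the long-format df has no 'A' and 'B' columns, so it cannot be melted this way)
wide_df = pivot_table.reset_index()
melted_df = pd.melt(wide_df, id_vars='Date', value_vars=['A', 'B'],
                    var_name='Category', value_name='Value')
print(melted_df)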

Stacking/Unstacking: Stacking moves column labels into the row index, producing a hierarchical index, and unstacking does the reverse by pivoting an index level into columns. The stack() and unstack() methods in Pandas handle these tasks.

Example:

# Create a DataFrame with a hierarchical index
data = {'Date': ['2022-01-01', '2022-01-01', '2022-01-02', '2022-01-02'],
        'Category': ['A', 'B', 'A', 'B'],
        'Value': [1, 2, 3, 4]}
df = pd.DataFrame(data)
df.set_index(['Date', 'Category'], inplace=True)

# Stack the DataFrame
stacked_df = df.stack()

print(stacked_df)

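unstack() performs the reverse operation. A short sketch on the same data, showing how the index level to move into the columns can be chosen by name:

```python
import pandas as pd

data = {'Date': ['2022-01-01', '2022-01-01', '2022-01-02', '2022-01-02'],
        'Category': ['A', 'B', 'A', 'B'],
        'Value': [1, 2, 3, 4]}
df = pd.DataFrame(data).set_index(['Date', 'Category'])

# unstack() moves the innermost index level ('Category') into the columns
wide = df['Value'].unstack()

# A level can also be chosen by name: here 'Date' becomes the columns
by_date = df['Value'].unstack('Date')

# stack() reverses unstack(), giving back a Series with a two-level index
round_trip = wide.stack()

print(wide)
print(by_date)
print(round_trip)
```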

Merging/Joining Datasets: Merging and joining combine datasets based on the columns or indices they share. The merge() and join() methods in Pandas provide several ways to perform these tasks.

Example:

# Create two DataFrames
left_df = pd.DataFrame({'key': ['A', 'B', 'C'], 'value': [1, 2, 3]})
right_df = pd.DataFrame({'key': ['B', 'C', 'D'], 'value': [4, 5, 6]})

# Merge the DataFrames on the 'key' column, keeping keys from both sides
merged_df = pd.merge(left_df, right_df, on='key', how='outer')

print(merged_df)

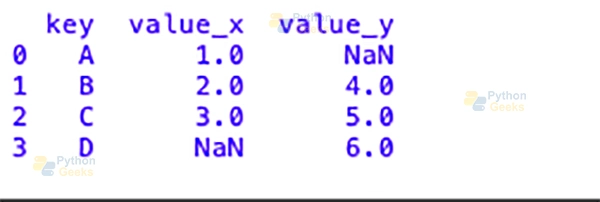

Output:
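merge() supports other join types and options, join() matches on the index, and concat() simply stacks frames. A sketch on the same two DataFrames; the suffix names are illustrative choices:

```python
import pandas as pd

left_df = pd.DataFrame({'key': ['A', 'B', 'C'], 'value': [1, 2, 3]})
right_df = pd.DataFrame({'key': ['B', 'C', 'D'], 'value': [4, 5, 6]})

# Inner merge keeps only keys present in both frames;
# suffixes name the two clashing 'value' columns
inner = pd.merge(left_df, right_df, on='key', how='inner',
                 suffixes=('_left', '_right'))

# indicator=True adds a '_merge' column recording where each row came from
outer = pd.merge(left_df, right_df, on='key', how='outer', indicator=True)

# join() aligns on the index rather than on a column
joined = left_df.set_index('key').join(right_df.set_index('key'),
                                       how='left', rsuffix='_right')

# concat() stacks frames along an axis instead of matching on keys
stacked = pd.concat([left_df, right_df], ignore_index=True)

print(inner)
print(outer)
print(joined)
print(stacked)
```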

Applying Custom Transformations: With methods such as apply(), map(), and applymap(), Pandas users can apply their own functions to data.

Example:

# Apply a custom transformation to every value in the 'Value' column
def custom_transform(x):
    return x * 2

df['Transformed_Value'] = df['Value'].apply(custom_transform)

print(df)

Pandas has many functions and techniques for quickly preparing and transforming data so that it is ready for analysis and visualization. Whether you're reshaping data, merging or joining datasets, or applying custom transformations, Pandas gives you the freedom and flexibility you need to wrangle data efficiently.

Summary

In summary, Pandas is a key tool in data analysis, particularly for data manipulation and exploration. From handling missing values to merging datasets and reshaping data, Pandas lets you clean your data straightforwardly and turn raw observations into actionable insights.

Thanks to its support for time series and seasonal data analysis, along with user-friendly plotting functionality, it is a natural first choice for observing trends in time series data and, together with forecasting libraries, predicting possible future outcomes. As the data science landscape keeps growing, Pandas remains an indispensable tool for extracting value from data and uncovering relevant insights.