Basic Functionality of Pandas


One tool stands out in data analysis with Python, a language valued for its efficiency and versatility: Pandas. Although it started as a solution to data management problems, Pandas is now one of the most popular libraries for data manipulation, exploration, and transformation. Much of Python’s strength in data work comes from Pandas, which lets users carry out common data tasks with very little code.

At its core, the Pandas library offers two main data structures, Series and DataFrame, which are designed for efficient data storage, modification, and processing. Whether you are running quick jobs on a small dataset or heavier operations on a large one, Pandas provides many functions that streamline the data analysis workflow.

Pandas can read data from many formats and sources, which gives it a clear advantage. Support for CSV files, Excel spreadsheets, JSON, SQL databases, and more makes loading data straightforward. It also simplifies data preparation and transformation, so analysts and data scientists can concentrate on interpretation and discovering insights.

Finally, Pandas can produce clear charts (through Matplotlib) and offers many ways to inspect and understand data. Whether you are calculating statistics, finding missing values, or fixing messy data, Pandas gives you the tools to turn raw data into a usable dataset.

In this article, we will explore the basic functionality of Pandas, covering its essential features and showing how it helps us handle structured data efficiently. By the end of the tutorial, you will have a solid understanding of Pandas’ core abilities and be ready to load and manipulate data with Python.

What is Pandas?

Pandas is a free and open-source Python package that provides data structures and tools for data analysis that are both easy to use and highly performant. Created by Wes McKinney in 2008, Pandas is now a crucial part of the Python ecosystem for working with and analyzing data.

Importance in the Python Ecosystem:

There are several reasons why Pandas is so important to the Python ecosystem:

1. Efficient Data Handling: Pandas offers robust data structures optimized for speed and efficiency, which simplifies working with structured data. As a result, users can perform complex data manipulation tasks with minimal code.

2. Integration with Other Libraries: Pandas works well with other popular Python libraries and tools for data analysis, such as scikit-learn, NumPy, and Matplotlib. This compatibility makes Pandas an essential part of any data analyst’s toolbox and greatly extends what it can do.

3. Analyzing and Exploring Data: Pandas provides many tools for cleaning, transforming, and analyzing data. Its user-friendly syntax and large set of methods let you extract useful insights from data quickly and easily.

Pandas’ support for many data formats and sources, including CSV files, Excel spreadsheets, SQL databases, JSON data, and more, makes it a strong choice for working with datasets of many sizes. This flexibility makes it well suited to a wide range of data analysis jobs.

Pandas is supported by a strong community of users and contributors who work together to improve it. Users of all skill levels can learn Pandas with the help of the many tutorials, documentation pages, and online resources available.

Series and DataFrame, Two Fundamental Data Structures:

Two fundamental data structures form the basis of Pandas:

1. Series: A one-dimensional, array-like structure that can hold any type of data (ints, floats, strings, etc.). Series objects are similar to Python lists or NumPy arrays, but they offer more capabilities and carry a labelled index.

2. DataFrame: A two-dimensional labelled data structure that resembles a SQL table or a spreadsheet. Each column in a DataFrame can hold a different data type, and each row represents one record. DataFrames are ideal for managing tabular data because of their powerful manipulation features, including indexing, filtering, merging, and aggregation.

Series and DataFrame, the two primary components of Pandas, let users explore, manipulate, and analyze structured data in Python effectively.

Installing Pandas:

Pandas can be easily installed using either pip or conda. The choice depends on your Python environment and personal preference.

To use pip:

If you use pip, the Python package manager, run the following command in a terminal or command prompt to install Pandas:

pip install pandas

This command fetches and installs the latest Pandas release and all its dependencies from the Python Package Index (PyPI). Once the installation is complete, you can use Pandas in Python scripts and Jupyter notebooks.

To use conda:

If you use Anaconda or Miniconda, Python distributions that include the conda package manager, you can install Pandas and other data science libraries with conda. Enter the following command into your terminal or Anaconda Prompt to install Pandas through conda:

conda install pandas

This command installs the Pandas package from Anaconda’s default channel. Conda resolves and installs all of Pandas’ dependencies automatically, ensuring that your packages work together.

Checking the Setup:

After the installation is finished, import the Pandas library in a Python interpreter or a Jupyter notebook to confirm that it was installed correctly:

import pandas as pd

If the import completes without errors, you can start using Pandas for data analysis and manipulation.
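To see which release was installed, you can also print the library’s version string. This is a minimal check. The exact version shown depends on your environment, so no particular value is assumed here.

```python
import pandas as pd

# pd.__version__ holds the installed Pandas version as a string
print(pd.__version__)
```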

Installing Pandas in a virtual environment is strongly recommended to avoid conflicts with other packages. Tools like virtualenv (with pip) and conda environments (with conda) make it easy to set up separate Python environments for different projects, even if you have little experience with virtual environments. This keeps each project’s package dependencies isolated and helps ensure reproducibility.

Getting Started:

Before exploring the details of data manipulation with Pandas, our first steps are to import the library and create its basic data structures, Series and DataFrame.

1. Importing the Pandas Library:

The first step in working with Pandas is importing the library into your Python script or Jupyter notebook. By convention, Pandas is imported with the alias ‘pd’ so that its functions and classes are convenient to reference in your code:

import pandas as pd

With this import, the ‘pd’ shorthand gives easy access to Pandas objects and functions.

2. Creating Series and DataFrame Objects:

The next step is to create some simple Pandas data structures:

(a) Series:

A Series is similar to an array, but it is one-dimensional and can store any data type. To construct a Series, pass a Python list, NumPy array, or dictionary to the ‘pd.Series()’ constructor.

# Creating a Series from a Python list

s = pd.Series([1, 2, 3, 4, 5])

print(s)
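The constructor also accepts the other inputs mentioned above. Here is a short sketch with made-up values. When a dictionary is passed, its keys become the index labels. When a NumPy array is passed, a default integer index is created.

```python
import numpy as np
import pandas as pd

# From a dictionary: keys become the index labels, values become the data
s_dict = pd.Series({'a': 10, 'b': 20, 'c': 30})
print(s_dict)

# From a NumPy array: a default integer index (0, 1, 2, ...) is created
s_array = pd.Series(np.array([1.5, 2.5, 3.5]))
print(s_array)
```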

(b) DataFrame:

A DataFrame resembles a spreadsheet or SQL table: it is a two-dimensional labelled data structure with rows and columns. The ‘pd.DataFrame()’ constructor accepts dictionaries, lists of dictionaries, or NumPy arrays as arguments, letting you create DataFrames from each of them.

# Creating a DataFrame from a dictionary

data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'],

'Age': [25, 30, 35, 40],

'City': ['New York', 'Los Angeles', 'Chicago', 'Houston']}

df = pd.DataFrame(data)

print(df)
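The other two inputs named above work in a similar way. The sketch below uses illustrative data. A list of dictionaries produces one row per dictionary. A 2-D NumPy array needs its column names supplied separately through the ‘columns’ argument, or Pandas falls back to integer column labels.

```python
import numpy as np
import pandas as pd

# From a list of dictionaries: each dictionary becomes one row
records = [
    {'Name': 'Alice', 'Age': 25},
    {'Name': 'Bob', 'Age': 30},
]
df_records = pd.DataFrame(records)
print(df_records)

# From a 2-D NumPy array: column names are passed separately
arr = np.array([[1, 2], [3, 4], [5, 6]])
df_array = pd.DataFrame(arr, columns=['x', 'y'])
print(df_array)
```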

3. Understanding the Series and DataFrame Structure:

Series:

- A Series has two primary components: the data (its values) and the index.

- Elements of a Series can be selected using the default integer positions (0, 1, 2, …) or user-defined labels.

- Use the ‘values’ attribute to access the data and the ‘index’ attribute to access the index separately.
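The points above can be seen on a small Series with custom labels. The labels and numbers in this sketch are illustrative. It uses ‘.iloc’ for position-based access so that label and position lookups stay clearly separate.

```python
import pandas as pd

# A Series with user-defined labels instead of the default 0, 1, 2, ...
s = pd.Series([100, 200, 300], index=['x', 'y', 'z'])

print(s.values)   # the data, as a NumPy array
print(s.index)    # the labels, as an Index object

# Select the same element by label and by integer position
print(s['y'])       # label-based
print(s.iloc[1])    # position-based
```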

DataFrame:

- Like a table, a DataFrame has rows and columns.

- Each column of a DataFrame is a Series, and all columns share the same index.

- Columns can be accessed by name, either with brackets (df['Age']) or as attributes of the DataFrame (df.Age).

- A DataFrame also has an index that labels the rows. You can change the index from its default sequence of integers to a custom index or to another column of the DataFrame.
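A minimal sketch of the DataFrame points above, using a small illustrative table. ‘set_index()’ returns a new DataFrame whose row labels come from the chosen column. Attribute access such as ‘df.Age’ only works when the column name is a valid Python identifier that does not clash with an existing DataFrame attribute.

```python
import pandas as pd

df = pd.DataFrame({
    'Name': ['Alice', 'Bob', 'Charlie'],
    'Age': [25, 30, 35],
})

# Each column is a Series that shares the DataFrame's index
ages = df['Age']
print(type(ages))

# Bracket access and attribute access return the same column
print(df.Age.equals(df['Age']))

# Replace the default integer index with the values of the 'Name' column
df_named = df.set_index('Name')
print(df_named.loc['Bob', 'Age'])
```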

To manipulate and analyze data effectively with Pandas, you need to understand the structure of Series and DataFrame.

Check out the table below for commonly used attributes and methods and their descriptions.

| Sr.No. | Attribute or Method | Description |
| --- | --- | --- |
| 1 | T | Transposes rows and columns. |
| 2 | axes | Returns a list with the row axis labels and the column axis labels as the only members. |
| 3 | dtypes | Returns the dtypes in this object. |
| 4 | empty | True if the NDFrame is entirely empty (no items), i.e. if any of the axes has length 0. |
| 5 | ndim | Number of axes / array dimensions. |
| 6 | shape | Returns a tuple representing the dimensionality of the DataFrame. |
| 7 | size | Number of elements in the NDFrame. |
| 8 | values | NumPy representation of the NDFrame. |
| 9 | head() | Returns the first n rows (5 by default). |
| 10 | tail() | Returns the last n rows (5 by default). |
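The following sketch uses a small illustrative DataFrame to show the attributes and methods from the table. The comments describe what each one reports for this 3-row, 2-column example.

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, 3], 'B': [4.0, 5.0, 6.0]})

print(df.shape)        # (rows, columns)
print(df.size)         # rows * columns
print(df.ndim)         # 2 for a DataFrame
print(df.empty)        # False, because df contains data
print(df.dtypes)       # one dtype per column
print(df.T.shape)      # transposing swaps rows and columns
print(df.axes)         # [row index, column index]
print(df.head(2))      # first 2 rows
print(df.tail(1))      # last row
```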

Now that you have a feel for the structure of Series and DataFrame objects and know how to import Pandas, you are ready to dive into Pandas’ many data manipulation and analysis capabilities.

Loading Data:

Pandas provides simple methods for reading data from various sources, including SQL databases, Excel spreadsheets, CSV files, and more. Let’s take a look at how Pandas loads data.

1. Importing and Exporting CSV File Data:

To load a CSV file into a DataFrame, use the ‘pd.read_csv()’ function. Its main argument is the path to the file.

import pandas as pd

# Read data from a CSV file into a DataFrame

df_csv = pd.read_csv('data.csv')

# Display the DataFrame

print(df_csv.head())
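The exporting half of this heading is handled by ‘DataFrame.to_csv()’. To keep the sketch self-contained, it writes to an in-memory ‘io.StringIO’ buffer instead of a real file. In practice you would pass a file path, such as a hypothetical ‘output.csv’. ‘index=False’ leaves the row index out of the file, so reading the data back produces the same columns.

```python
import io

import pandas as pd

df = pd.DataFrame({'Name': ['Alice', 'Bob'], 'Age': [25, 30]})

# Export: write the DataFrame as CSV text; index=False omits the row index
buffer = io.StringIO()
df.to_csv(buffer, index=False)

# Import: rewind the buffer and read the CSV text into a new DataFrame
buffer.seek(0)
df_roundtrip = pd.read_csv(buffer)
print(df_roundtrip)
```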

2. Reading Data from Excel Files:

The ‘pd.read_excel()’ function reads data from Excel files. Pass the path to the file and, if needed, the name of the sheet. Reading .xlsx files requires an engine such as openpyxl to be installed.

# Read data from an Excel file into a DataFrame

df_excel = pd.read_excel('data.xlsx', sheet_name='Sheet1')

# Display the DataFrame

print(df_excel.head())

3. Reading Data from SQL Databases:

Pandas provides the ‘pd.read_sql()’ function for reading data from SQL databases. To connect to the database, you will typically need a SQLAlchemy engine or, for SQLite, a standard-library sqlite3 connection.

from sqlalchemy import create_engine

# Create a SQLAlchemy engine

engine = create_engine('sqlite:///database.db')

# Read data from a SQL query into a DataFrame

query = 'SELECT * FROM table_name'

df_sql = pd.read_sql(query, engine)

# Display the DataFrame

print(df_sql.head())
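To try ‘pd.read_sql()’ without an existing database file, you can use an in-memory SQLite database through the standard-library ‘sqlite3’ module, which Pandas accepts as a connection. The table name ‘people’ and its contents are hypothetical. The sketch first writes them with ‘DataFrame.to_sql()’ so there is something to query.

```python
import sqlite3

import pandas as pd

# An in-memory SQLite database; nothing is written to disk
conn = sqlite3.connect(':memory:')

# Create a small table to query (the table name 'people' is illustrative)
people = pd.DataFrame({'name': ['Alice', 'Bob', 'Charlie'], 'age': [25, 30, 35]})
people.to_sql('people', conn, index=False)

# Read the result of a SQL query back into a DataFrame
query = 'SELECT name, age FROM people WHERE age > 26 ORDER BY age'
df_sql = pd.read_sql(query, conn)
print(df_sql)

conn.close()
```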

Getting a First Look at Loaded Data:

To make sure data has been loaded correctly into a DataFrame, it is important to preview and inspect it. Here are some ways to do that:

To preview the data, display the first few rows of the DataFrame with the ‘head()’ method.

# Display the first few rows of the DataFrame

print(df.head())

For a concise overview of the DataFrame, including its data types and non-null counts, use the ‘info()’ method. It prints its summary directly, so there is no need to wrap it in print().

# Display information about the DataFrame

df.info()

Use the ‘describe()’ method to generate descriptive statistics for numerical columns.

# Generate descriptive statistics

print(df.describe())

To check whether a DataFrame has missing values, use the ‘isnull()’ method. Chaining it with ‘sum()’ counts the missing values in each column.

# Check for missing values

print(df.isnull().sum())

Before you dive into data analysis and manipulation, preview and inspect the loaded data to confirm it is intact and to get a feel for its structure.
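Because the snippets above assume an existing ‘df’, here is a self-contained sketch that runs the same inspection steps on a small made-up DataFrame with deliberately missing entries. By default, ‘describe()’ summarizes only the numeric ‘Age’ column. ‘isnull().sum()’ reports one missing age and two missing cities.

```python
import numpy as np
import pandas as pd

# A small DataFrame with deliberately missing entries (NaN / None)
df = pd.DataFrame({
    'Age': [25, np.nan, 35, 40],
    'City': ['New York', None, 'Chicago', None],
})

print(df.head())             # first rows (all 4 here)
df.info()                    # dtypes and non-null counts per column
print(df.describe())         # statistics for the numeric 'Age' column
print(df.isnull().sum())     # number of missing values per column
```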

Indexing and Selecting Data:

Data analysis relies heavily on indexing and selecting data, which lets users retrieve a subset of a DataFrame for further manipulation or analysis. Pandas offers several indexing and selection techniques, such as ‘loc’, ‘iloc’, and boolean indexing.

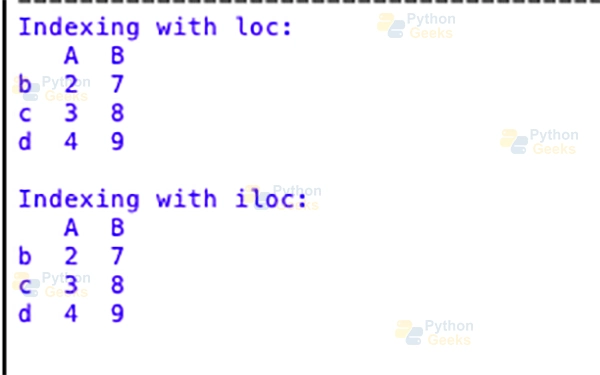

1. Indexing with loc and iloc:

The ‘loc’ accessor selects data by label. When you want to get data out of a DataFrame, you tell it which row and column labels to use.

The ‘iloc’ accessor selects data by integer position. It provides more traditional row/column indexing semantics, letting you pick data by its integer location.

Here is an example to demonstrate these ideas:

import pandas as pd

# Sample DataFrame

data = {

'A': [1, 2, 3, 4, 5],

'B': [6, 7, 8, 9, 10],

'C': [11, 12, 13, 14, 15]

}

df = pd.DataFrame(data, index=['a', 'b', 'c', 'd', 'e'])

# Indexing with loc

print("Indexing with loc:")

print(df.loc['b':'d', 'A':'B']) # Selecting rows 'b' to 'd' and columns 'A' to 'B' (label slices include both ends)

print()

# Indexing with iloc

print("Indexing with iloc:")

print(df.iloc[1:4, 0:2]) # Selecting rows at positions 1 to 3 and columns at positions 0 to 1 (the slice end is excluded)


In the examples above:

Using ‘loc’, we select a subset of rows and columns by specifying the row labels ‘b’:‘d’ and the column labels ‘A’:‘B’. Label-based slices include both endpoints.

Using ‘iloc’, we select a subset of rows and columns by integer position, with ‘1:4’ for rows and ‘0:2’ for columns. Position-based slices exclude the end position, so this selection returns the same rows (b to d) and columns (A and B) as the ‘loc’ example.
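A quick way to confirm the endpoint rules is to compare the two selections directly. The sketch rebuilds the sample DataFrame from the example and checks that both slices match. It also shows single-value access, where ‘loc’ takes a label pair and ‘iloc’ takes a position pair.

```python
import pandas as pd

df = pd.DataFrame(
    {'A': [1, 2, 3, 4, 5], 'B': [6, 7, 8, 9, 10], 'C': [11, 12, 13, 14, 15]},
    index=['a', 'b', 'c', 'd', 'e'],
)

by_label = df.loc['b':'d', 'A':'B']     # end label 'd' is included
by_position = df.iloc[1:4, 0:2]         # end position 4 is excluded
print(by_label.equals(by_position))

# Single values: a label pair with loc, a position pair with iloc
print(df.loc['c', 'B'])
print(df.iloc[2, 1])
```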

2. Boolean Indexing for Selecting Rows Based on Conditions:

Boolean indexing lets us select the rows of a DataFrame that meet certain conditions. We build arrays of ‘True’ and ‘False’ values, called boolean masks, and use them to filter rows.

# Boolean indexing for selecting rows based on conditions

print("Boolean indexing for selecting rows based on conditions:")

print(df[df['B'] > 7]) # Selecting rows where values in column 'B' are greater than 7

In this case, we use boolean indexing to find rows where column ‘B’ has values greater than 7. The expression ‘df['B'] > 7’ creates a boolean mask that filters the rows of the DataFrame.
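Masks can also be combined to express more than one condition. The sketch below reuses the sample DataFrame. Pandas uses the element-wise operators ‘&’ (and), ‘|’ (or) and ‘~’ (not) rather than Python’s ‘and’/‘or’/‘not’. Each comparison must be wrapped in parentheses because of operator precedence.

```python
import pandas as pd

df = pd.DataFrame(
    {'A': [1, 2, 3, 4, 5], 'B': [6, 7, 8, 9, 10], 'C': [11, 12, 13, 14, 15]},
    index=['a', 'b', 'c', 'd', 'e'],
)

# Combine masks with & (and), | (or) and ~ (not); parenthesize each condition
both = df[(df['B'] > 7) & (df['C'] < 15)]
either = df[(df['A'] == 1) | (df['C'] == 15)]
negated = df[~(df['B'] > 7)]

print(both)
print(either)
print(negated)
```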

Pandas’ indexing and selection methods make working with data faster and more precise, letting users extract exactly the data they need for visualization or analysis.

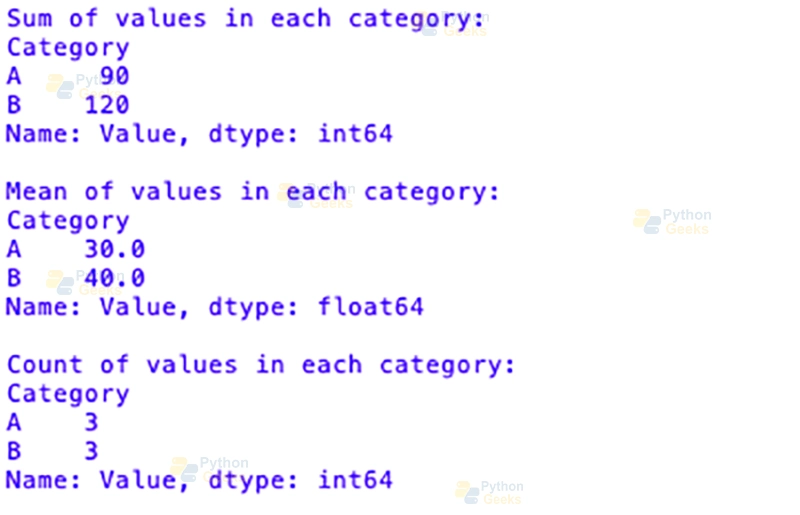

Grouping and Aggregating Data:

Grouping and aggregation are essential operations in data analysis that let users summarize and derive insights from their datasets. Data can be grouped easily with Pandas’ ‘groupby’ method, and aggregation functions such as sum, mean, and count are readily available.

1. Using ‘groupby’ to Group Data:

To group data in Pandas by one or more columns, use the ‘groupby’ method. It returns a DataFrameGroupBy object, on which you can then perform aggregation operations.

2. Applying Aggregation Functions to Grouped Data:

Once the data has been grouped, it can be summarized with a variety of aggregation functions. Some standard aggregation functions are:

‘sum’: Adds up all the values in each group.

‘mean’: Computes the average of the values in each group.

‘count’: Counts the non-null values in each group.

‘min’ and ‘max’: Find the lowest and highest values in each group.

‘median’: Finds the middle value in each group.

‘std’ and ‘var’: Measure how spread out the values in each group are (standard deviation and variance).

import pandas as pd

# Sample DataFrame

data = {

'Category': ['A', 'B', 'A', 'B', 'A', 'B'],

'Value': [10, 20, 30, 40, 50, 60]

}

df = pd.DataFrame(data)

# Grouping data using groupby

grouped = df.groupby('Category')

# Performing aggregation functions

print("Sum of values in each category:")

print(grouped['Value'].sum()) # Calculate the sum of 'Value' in each category

print()

print("Mean of values in each category:")

print(grouped['Value'].mean()) # Calculate the mean of 'Value' in each category

print()

print("Count of values in each category:")

print(grouped['Value'].count()) # Count the number of values in each category


In this example:

- We use the ‘groupby’ method to group the DataFrame ‘df’ by the ‘Category’ column.

- To summarize the values in each category, we apply the aggregation functions ‘sum’, ‘mean’, and ‘count’ to the grouped data.

- Each aggregation returns a Series indexed by category. For example, the sum gives the total of all values in each category.

Grouping and aggregation are data summarization tools that help reveal hidden trends and patterns in a dataset.
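The remaining functions from the list above can be applied in a single call with ‘agg()’, which accepts a list of function names and returns one column per function. This sketch reuses the sample data from the example. Pandas computes ‘std’ and ‘var’ as sample statistics (ddof=1) by default. For category A (10, 30, 50), that gives a variance of 400 and a standard deviation of 20.

```python
import pandas as pd

df = pd.DataFrame({
    'Category': ['A', 'B', 'A', 'B', 'A', 'B'],
    'Value': [10, 20, 30, 40, 50, 60],
})

# Apply several aggregation functions at once; one result column per function
summary = df.groupby('Category')['Value'].agg(['min', 'max', 'median', 'std', 'var'])
print(summary)
```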

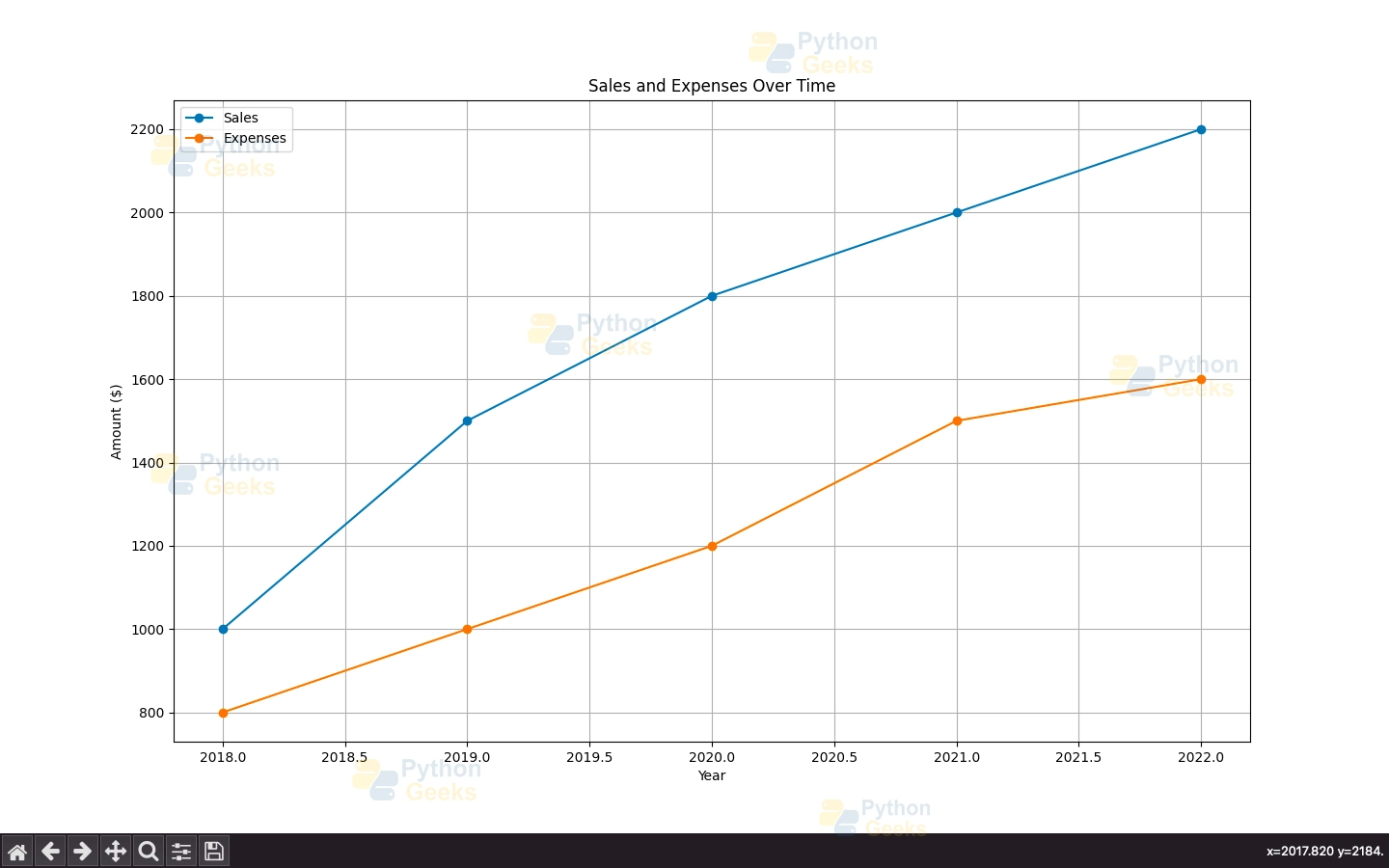

Visualizing Data:

Data visualization is crucial for making sense of datasets and spotting trends, patterns, and correlations. Although Pandas is primarily a data manipulation library, it integrates with Matplotlib, a popular Python plotting library, to offer basic visualization capabilities. Let’s look at the basics of plotting with Pandas’ built-in methods and get an overview of its visualization features.

1. Overview of Data Visualization in Pandas:

Pandas provides a simple interface for creating basic plots directly from DataFrame and Series objects. Because it uses Matplotlib behind the scenes to generate plots, users can visualize data without interacting with Matplotlib’s APIs directly.

2. Basic Plotting Using Built-in Methods:

Pandas has built-in support for common plot types, including line plots, bar plots, histograms, scatter plots, and more. Users can create visualizations with these methods using very little code.

Let’s look at an example of basic plotting with Pandas:

import pandas as pd

import matplotlib.pyplot as plt

# Sample DataFrame

data = {

'Year': [2018, 2019, 2020, 2021, 2022],

'Sales': [1000, 1500, 1800, 2000, 2200],

'Expenses': [800, 1000, 1200, 1500, 1600]

}

df = pd.DataFrame(data)

# Line plot

df.plot(x='Year', y=['Sales', 'Expenses'], kind='line', marker='o', title='Sales and Expenses Over Time')

plt.xlabel('Year')

plt.ylabel('Amount ($)')

plt.grid(True)

plt.show()

# Bar plot

df.plot(x='Year', y=['Sales', 'Expenses'], kind='bar', title='Sales and Expenses Comparison')

plt.xlabel('Year')

plt.ylabel('Amount ($)')

plt.show()

# Histogram

df['Profit'] = df['Sales'] - df['Expenses']

df['Profit'].plot(kind='hist', bins=5, title='Profit Distribution')

plt.xlabel('Profit ($)')

plt.ylabel('Frequency')

plt.show()


In this example:

- We build a sample DataFrame ‘df’ with sales and expense figures for several years.

- Using the ‘plot’ method, we create line and bar plots to see how sales and expenses change over time.

- We also compute a ‘Profit’ column (sales minus expenses) and plot its distribution as a histogram.

Pandas’ built-in plotting methods make exploratory data analysis easier and faster by letting users create informative visualizations directly from DataFrame and Series objects. For more complex or customized visualizations, users can work with Matplotlib directly or with specialized Python plotting libraries.
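Scatter plots, listed among the built-in plot types, work the same way. ‘kind='scatter'’ requires both an ‘x’ and a ‘y’ column, and ‘plot()’ returns a Matplotlib Axes object. This sketch reuses the sample sales and expense figures. It selects the non-interactive ‘Agg’ backend and saves the figure to an in-memory PNG buffer so it runs without a display. In an interactive session you would call ‘plt.show()’ instead.

```python
import io

import matplotlib
matplotlib.use('Agg')  # non-interactive backend, so the sketch runs without a display
import pandas as pd

df = pd.DataFrame({
    'Sales': [1000, 1500, 1800, 2000, 2200],
    'Expenses': [800, 1000, 1200, 1500, 1600],
})

# kind='scatter' needs both x and y column names; plot() returns a Matplotlib Axes
ax = df.plot(x='Sales', y='Expenses', kind='scatter', title='Expenses vs. Sales')

# Render the figure to an in-memory PNG instead of opening a window
buffer = io.BytesIO()
ax.figure.savefig(buffer, format='png')
```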

Summary

Pandas is a powerful library for data manipulation and analysis in Python, and one that every Python data scientist and analyst should know. It handles data loading and cleaning, and it also supports grouping, aggregating, and visualizing data simply and efficiently. By mastering these essential features, users can get the most out of their datasets and make well-informed decisions. Whether you are a beginner exploring data for the first time or an experienced user working on real-world problems, Pandas gives you the tools you need to succeed at data analysis. So jump in, take advantage of Pandas’ capabilities, and use it to tackle your data projects with confidence.