Data Structures in Pandas


Pandas is a fundamental Python library for data analysis and manipulation. It is free, open-source software that makes working with structured data easier, which has made it an indispensable tool for data scientists, analysts, and enthusiasts.

Pandas offers two intuitive data structures at its core: the Series and the DataFrame, the most commonly used objects in the library. A Series is a one-dimensional array with a labeled index. The DataFrame extends this concept to a two-dimensional tabular structure, similar to a spreadsheet or an SQL table.

Data analysis skills with Pandas are valuable to any organization. The library integrates well with other Python libraries such as NumPy and Matplotlib, sharing data types and operations with them, which supports efficient data cleaning, transformation, analysis, and visualization.

In this article, we will walk through how Pandas works, covering its data structures, its functionality, and good practices. By the end, you should understand how Pandas works and be able to use it as a handy tool for exploratory data analysis.

Pandas Series

When you work with Pandas to manipulate data, the Series is one of the most important building blocks. One way to think of it is as a one-dimensional labeled array, like a column in a spreadsheet or a single feature in a dataset. Let’s look more closely at how the Series data structure works.

Explanation of Series as a one-dimensional labeled array

At its core, a Series is an object that holds values in a certain order. What makes it special is that every element has an index label associated with it. This index makes it easy to look up and change data quickly. It’s like a mix between a NumPy array and a dictionary, with a label for each item to help you find it.
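To make the "array plus dictionary" comparison concrete, here is a minimal sketch. The weekday labels and temperature values are made up for illustration and are not part of the original examples.

```python
import pandas as pd

# Hypothetical data: temperatures keyed by weekday label
temps = pd.Series([21.5, 23.0, 19.8], index=["Mon", "Tue", "Wed"])

# Array-like side: the values are stored in order
values = temps.to_numpy()

# Dictionary-like side: each label maps to a value
labels = list(temps.index)
tuesday = temps["Tue"]
has_thu = "Thu" in temps  # membership checks the index labels, like dict keys

print(values, labels, tuesday, has_thu)
```

Note that the `in` operator tests the labels rather than the values, which is the same behaviour a dictionary has for its keys.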

Creating Series Objects

Creating a Series in Pandas is simple. Different kinds of data structures, such as lists, arrays, dictionaries, or even scalar values, can be used to initialize a Series.

import pandas as pd

# Creating a Series from a list

s1 = pd.Series([10, 20, 30, 40, 50])

# Creating a Series from a dictionary

data = {'A': 100, 'B': 200, 'C': 300}

s2 = pd.Series(data)

# Creating a Series with custom index

s3 = pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])

# Creating a Series from a scalar value

s4 = pd.Series(5, index=['x', 'y', 'z'])

Accessing elements in a Series

Position-based or label-based indexing can be used to access elements in a Series. Position-based indexing works like a Python list or NumPy array, while label-based indexing uses the labels attached to each element to find it.

# Accessing elements by position

print(s1[0]) # Output: 10

# Accessing elements by label

print(s2['A']) # Output: 100
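When a Series has non-integer labels, plain square brackets can be ambiguous, and recent Pandas releases warn when an integer key is silently treated as a position. A minimal sketch of the explicit alternatives, using a made-up `prices` Series:

```python
import pandas as pd

# Hypothetical Series with string labels
prices = pd.Series([100, 200, 300], index=["A", "B", "C"])

first_by_position = prices.iloc[0]   # position 0, regardless of labels
value_by_label = prices.loc["B"]     # the element whose label is "B"
last_by_position = prices.iloc[-1]   # negative positions count from the end

print(first_by_position, value_by_label, last_by_position)
```

Using `.iloc` for positions and `.loc` for labels states the intent directly, so the code behaves the same whatever the index happens to contain.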

Basic operations and methods available for Series

Pandas Series has many operations and methods for working with data quickly and easily. Arithmetic operations, slicing, filtering, and statistical computations are some of the most common.

# Arithmetic operations

result = s1 + s2 # Aligns on index labels; s1 and s2 share no labels, so every value is NaN

# Slicing

print(s3[1:3]) # Output: b 2, c 3 (dtype: int64)

# Filtering

print(s4[s4 > 5]) # Output: empty Series (every value equals 5)

# Statistical computations

print(s1.mean()) # Output: 30.0 (mean of the Series)

These are only some of the many operations and methods available for Series in Pandas. You’ll learn more about working with data in Pandas, and how powerful and flexible the Series is, as we go along.
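Arithmetic between two Series matches elements by index label, not by position. The sketch below uses two small made-up Series with partly overlapping labels to show what happens to labels that appear on only one side.

```python
import pandas as pd

# Hypothetical Series whose labels only partly overlap
left = pd.Series([10, 20, 30], index=["a", "b", "c"])
right = pd.Series([1, 2, 3], index=["b", "c", "d"])

# Labels present on only one side produce NaN
aligned = left + right

# add() with fill_value treats a missing side as 0 instead
filled = left.add(right, fill_value=0)

print(aligned)
print(filled)
```

Only "b" and "c" exist in both Series, so the plain sum is defined only there; `fill_value=0` keeps "a" and "d" by substituting zero for the missing operand.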

Pandas DataFrame

When you use Pandas to analyze and manipulate data, the DataFrame is the workhorse. It offers a robust framework for working with labeled two-dimensional data. Let’s look more closely at how this flexible data structure works.

Introduction to DataFrame as a two-dimensional labeled data structure

It’s easy to think of a DataFrame as a table with rows and columns, like a spreadsheet or an SQL table. Each column of a DataFrame holds a different attribute or variable, and each row holds a single entry or observation. The DataFrame is distinctive because both of its axes are labeled, which makes it easy to index and modify data.
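As a small illustration of the two labeled axes, the following sketch builds a tiny DataFrame and inspects its row labels, column labels, and shape. The row labels `r1` and `r2` are arbitrary names chosen for this example.

```python
import pandas as pd

# Hypothetical two-row table with explicit row labels
df = pd.DataFrame(
    {"Name": ["John", "Alice"], "Age": [30, 25]},
    index=["r1", "r2"],
)

shape = df.shape              # (number of rows, number of columns)
row_labels = list(df.index)   # labels along the row axis
col_labels = list(df.columns) # labels along the column axis
age_column = df["Age"]        # a single column is itself a Series

print(shape, row_labels, col_labels)
print(age_column)
```

Selecting one column returns a Series that shares the DataFrame's row index, which is why the two structures work together so naturally.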

Creating DataFrames from various data sources

Pandas has many ways to create DataFrames from different data sources, so it can be used for a wide range of tasks and data formats. You can use dictionaries, lists, NumPy arrays, CSV files, Excel files, databases, and more to initialize a DataFrame.

import pandas as pd

# Creating a DataFrame from a dictionary

data = {'Name': ['John', 'Alice', 'Bob'],

'Age': [30, 25, 35],

'City': ['New York', 'Los Angeles', 'Chicago']}

df1 = pd.DataFrame(data)

# Creating a DataFrame from a list of lists

data = [['John', 30, 'New York'],

['Alice', 25, 'Los Angeles'],

['Bob', 35, 'Chicago']]

df2 = pd.DataFrame(data, columns=['Name', 'Age', 'City'])

# Reading a DataFrame from a CSV file

df3 = pd.read_csv('data.csv')

# Reading a DataFrame from an Excel file

df4 = pd.read_excel('data.xlsx')

Accessing and manipulating data in DataFrames

The DataFrame makes it clean and bendy to get entry to and alternate records. Using indexing and slicing operations, you could get records, alternate them in extraordinary ways, filter out rows primarily based on conditions, and add up and do math across rows and columns.

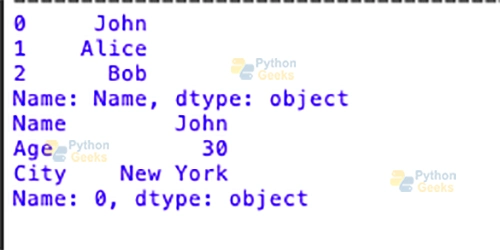

# Accessing a column print(df1['Name']) # Accessing a row print(df1.iloc[0]) # Adding a new column df1['Gender'] = ['Male', 'Female', 'Male'] # Filtering rows based on condition filtered_df = df1[df1['Age'] > 25] # Applying a function to a column df1['Age'] = df1['Age'].apply(lambda x: x + 1)

Output:

Performing operations and analysis on DataFrames

DataFrames let users perform many kinds of operations and analyses, from simple grouping to complex statistical calculations. You can use built-in plotting tools to visualize your data, compute descriptive statistics, run group-wise operations, build pivot tables, merge DataFrames, and more.

# Descriptive statistics

print(df1.describe())

# Group-wise operations (select the numeric column first; text columns have no mean)

grouped_df = df1.groupby('City')['Age'].mean()

# Merging DataFrames

merged_df = pd.merge(df1, df2, on='Name')

# Plotting data

df1.plot(kind='bar', x='Name', y='Age')

These examples show how flexible and powerful Pandas DataFrames are. As you learn more about analyzing and manipulating data, you will find that DataFrames have many useful features that help you get insights from your data and make informed decisions.
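Group-wise operations are not limited to a single statistic. The sketch below computes several aggregates per group in one call with `agg()`; the `sales` table and its values are invented for illustration.

```python
import pandas as pd

# Hypothetical sales records, two per city
sales = pd.DataFrame({
    "City": ["New York", "New York", "Chicago", "Chicago"],
    "Amount": [100, 150, 80, 120],
})

# One row per city, one column per aggregate
summary = sales.groupby("City")["Amount"].agg(["mean", "sum", "count"])

print(summary)
```

Selecting the `Amount` column before aggregating keeps the result focused on numeric data and avoids errors from trying to average text columns.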

Indexing and Selection in Pandas

Indexing and selection are among the most fundamental operations in Pandas; they let users retrieve specific subsets of data from a DataFrame or Series. Pandas has many indexing and selection options to suit different needs and preferences.

1. Different ways to index and select data

With label-based indexing, you use the index labels to choose which rows or columns to select. With this technique, both the start and the stop label of a slice are included.

With integer-based indexing, you select rows or columns based on their positional indices, similar to how Python slices sequences. Unlike label-based indexing, the stop index is excluded.

With boolean indexing, you select rows or columns based on a boolean condition. It lets you filter data based on specific criteria.
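The difference between inclusive label slices and exclusive position slices is easy to miss, so here is a minimal sketch on a made-up four-element Series. It uses the `loc` and `iloc` accessors, which are the standard way to request label-based and position-based selection explicitly.

```python
import pandas as pd

# Hypothetical Series with string labels
s = pd.Series([10, 20, 30, 40], index=["a", "b", "c", "d"])

by_label = s.loc["b":"c"]    # label slice: the stop label "c" is included
by_position = s.iloc[1:2]    # position slice: the stop position 2 is excluded
by_condition = s[s > 25]     # boolean mask: keeps elements where the test is True

print(by_label)
print(by_position)
print(by_condition)
```

The label slice returns two elements while the position slice with seemingly similar bounds returns only one.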

2. Using loc and iloc for label-based and integer-based indexing

loc: The loc accessor is used for indexing based on labels. It takes row and column labels as input and returns the matching data.

iloc: The iloc accessor is used for indexing based on integer positions. It takes integer positions as input and returns the data at those positions.

# Label-based indexing using loc

print(df.loc['row_label', 'column_label'])

# Integer-based indexing using iloc

print(df.iloc[row_index, column_index])
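The `loc` and `iloc` patterns can be tried on a concrete table. In this sketch the DataFrame, its name-based row index, and the age threshold are all assumptions chosen for illustration.

```python
import pandas as pd

# Hypothetical table indexed by person name
df = pd.DataFrame(
    {"Age": [30, 25, 35], "City": ["New York", "Los Angeles", "Chicago"]},
    index=["John", "Alice", "Bob"],
)

alice_city = df.loc["Alice", "City"]           # row label + column label
first_age = df.iloc[0, 0]                      # row position + column position
older_cities = df.loc[df["Age"] > 28, "City"]  # boolean row mask + column label

print(alice_city, first_age)
print(older_cities)
```

`loc` also accepts a boolean mask for the row selector, which combines filtering and column selection in a single step.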

3. Advanced indexing techniques

Multilevel indexing: This kind of indexing, also known as hierarchical indexing, lets you use multiple levels of row or column labels to locate data. It makes it simpler to work with higher-dimensional data.

# Creating a DataFrame with multi-level index

arrays = [['A', 'A', 'B', 'B'], [1, 2, 1, 2]]

index = pd.MultiIndex.from_arrays(arrays, names=('First', 'Second'))

df = pd.DataFrame({'Values': [10, 20, 30, 40]}, index=index)

# Accessing data with multi-level indexing

print(df.loc['A']) # Accessing data for level 'A'

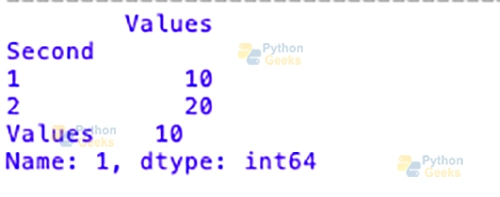

print(df.loc['A'].loc[1]) # Accessing data for level 'A' and sub-level 1

Output:

By mastering these indexing and selection techniques, you can work with data in Pandas more precisely, which helps you get insights and run analyses more quickly and accurately.
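For multilevel indexes, two further selection patterns are worth knowing: passing a tuple of labels to `loc`, and taking a cross-section on an inner level with `xs()`. The sketch below rebuilds the same small two-level DataFrame so that it runs on its own.

```python
import pandas as pd

# Same structure as the multi-level example: levels "First" and "Second"
index = pd.MultiIndex.from_arrays(
    [["A", "A", "B", "B"], [1, 2, 1, 2]], names=["First", "Second"]
)
df = pd.DataFrame({"Values": [10, 20, 30, 40]}, index=index)

# A tuple selects one entry across both levels in a single lookup
single = df.loc[("A", 1), "Values"]

# xs() selects on an inner level without naming the outer one
second_1 = df.xs(1, level="Second")

print(single)
print(second_1)
```

The tuple form avoids chaining two `loc` calls, and `xs()` answers questions such as "all rows where Second is 1" regardless of the outer label.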

Data Manipulation with Pandas

Data manipulation is a vital part of data analysis, and Pandas gives you powerful tools for doing a wide range of data manipulation tasks quickly and easily. Here is a list of common data manipulation operations in Pandas:

1. Filtering: This technique selects rows or columns of a DataFrame based on certain conditions. This lets you extract the parts of the data that meet specific criteria.

2. Sorting: Sorting rearranges the rows of a DataFrame based on the values of one or more columns. This lets you put your data in either ascending or descending order.

3. Grouping: To group data, you split it into groups based on certain criteria, apply a function to each group, and then combine the results. This makes it easier to do aggregate analyses and summarize data for each group.

4. Merging: This technique takes two or more DataFrames and combines them into one using columns or indices that they share. It can be used to combine related datasets or to do relational operations.

5. Reshaping: To make analysis or visualization easier, reshaping changes the structure of a DataFrame through operations like pivoting, melting, or stacking and unstacking.

Let’s illustrate each data manipulation task with examples:

import pandas as pd

# Create a sample DataFrame

data = {'Name': ['John', 'Alice', 'Bob', 'Jane'],

'Age': [30, 25, 35, 28],

'City': ['New York', 'Los Angeles', 'Chicago', 'Houston']}

df = pd.DataFrame(data)

# Filtering

filtered_df = df[df['Age'] > 25] # Select rows where Age is greater than 25

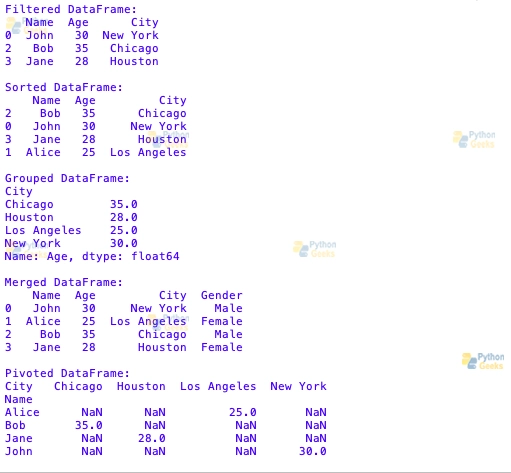

print("Filtered DataFrame:")

print(filtered_df)

# Sorting

sorted_df = df.sort_values(by='Age', ascending=False) # Sort DataFrame by Age in descending order

print("\nSorted DataFrame:")

print(sorted_df)

# Grouping

grouped_df = df.groupby('City')['Age'].mean() # Calculate average age by City

print("\nGrouped DataFrame:")

print(grouped_df)

# Merging

data2 = {'Name': ['John', 'Alice', 'Bob', 'Jane'],

'Gender': ['Male', 'Female', 'Male', 'Female']}

df2 = pd.DataFrame(data2)

merged_df = pd.merge(df, df2, on='Name') # Merge two DataFrames based on 'Name' column

print("\nMerged DataFrame:")

print(merged_df)

# Reshaping (Pivoting)

df_pivot = df.pivot(index='Name', columns='City', values='Age') # Pivot DataFrame

print("\nPivoted DataFrame:")

print(df_pivot)

Output:

These examples show how flexible Pandas is for common data manipulation tasks. Once you learn these skills, you can handle many data analysis problems effectively.
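In the merging example every name appears in both DataFrames. When the keys only partly overlap, the `how` parameter of `pd.merge()` decides which rows survive. A minimal sketch with two made-up tables:

```python
import pandas as pd

# Hypothetical tables: "Bob" is missing from genders, "Jane" from people
people = pd.DataFrame({"Name": ["John", "Alice", "Bob"], "Age": [30, 25, 35]})
genders = pd.DataFrame(
    {"Name": ["John", "Alice", "Jane"], "Gender": ["Male", "Female", "Female"]}
)

inner = pd.merge(people, genders, on="Name")               # only names in both
left = pd.merge(people, genders, on="Name", how="left")    # every row of people
outer = pd.merge(people, genders, on="Name", how="outer")  # names from either side

print(inner)
print(left)
print(outer)
```

The default is an inner join, so unmatched rows are dropped silently; with `how="left"` or `how="outer"` the missing fields are filled with NaN instead.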

Data Cleaning and Preprocessing with Pandas

Before analyzing or modelling data, you need to clean it and do some preprocessing. Pandas has many features that make it easy to handle missing data, normalize and standardize data, and remove duplicates. Let’s look more closely at these tasks:

1. Dealing with Missing Data

Finding Missing Values: Pandas represents missing values as “NaN,” which stands for “Not a Number.” Methods like isnull() and notnull() can be used to detect missing values.

Handling Missing Values: Pandas has several methods for handling missing values. For instance, dropna() removes rows or columns that have missing values, fillna() fills missing values with specified values, and interpolate() fills missing values based on neighboring values.
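The methods named above can be compared side by side on a small Series. The values here are made up, with two gaps placed between known points so that interpolation has neighbors to work from.

```python
import numpy as np
import pandas as pd

# Hypothetical measurements with two missing entries
s = pd.Series([1.0, np.nan, 3.0, np.nan, 5.0])

mask = s.isnull()               # True where a value is missing
dropped = s.dropna()            # remove the missing entries
filled = s.fillna(0)            # replace them with a fixed value
interpolated = s.interpolate()  # linear interpolation between neighbors (the default)

print(mask.tolist())
print(dropped.tolist())
print(filled.tolist())
print(interpolated.tolist())
```

Which option is appropriate depends on the data: dropping loses rows, a constant fill can distort statistics, and interpolation assumes the values change smoothly.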

2. Data Normalization and Standardization

When you normalize data, you rescale the values to a standard range, usually between 0 and 1.

In data standardization, also called z-score normalization, values are scaled so that their mean is 0 and their standard deviation is 1.

Pandas does not have dedicated built-in normalization or standardization functions, but you can use arithmetic operations or libraries like Scikit-learn to do these things.
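Both rescalings can be written with plain Series arithmetic. The sketch below applies them to a made-up list of ages and checks the properties described above.

```python
import pandas as pd

# Hypothetical ages
ages = pd.Series([30.0, 25.0, 35.0, 28.0])

# Min-max normalization: smallest value maps to 0, largest to 1
min_max = (ages - ages.min()) / (ages.max() - ages.min())

# Z-score standardization: subtract the mean, divide by the standard deviation
z_score = (ages - ages.mean()) / ages.std()

print(min_max.tolist())
print(round(z_score.mean(), 10), round(z_score.std(), 10))
```

One detail to keep in mind: `Series.std()` computes the sample standard deviation (`ddof=1`) by default, whereas Scikit-learn's `StandardScaler` uses the population standard deviation, so the two approaches give slightly different numbers on small datasets.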

3. Removing Duplicates

To remove duplicates, you must find and drop the duplicate rows in a DataFrame.

The ‘drop_duplicates()’ method in Pandas lets you remove duplicate rows based on selected columns or all columns.

import pandas as pd

# Create a sample DataFrame with missing values and duplicates

data = {'Name': ['John', 'Alice', 'Bob', 'Jane', 'Alice'],

'Age': [30, None, 35, 28, 25],

'City': ['New York', 'Los Angeles', 'Chicago', 'Houston', 'Los Angeles']}

df = pd.DataFrame(data)

# Identifying missing values

missing_values = df.isnull().sum()

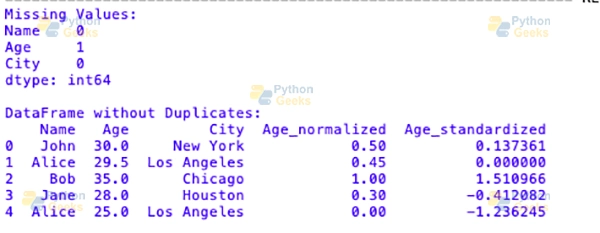

print("Missing Values:")

print(missing_values)

# Handling missing values (fill with mean)

df['Age'] = df['Age'].fillna(df['Age'].mean())

# Data normalization

df['Age_normalized'] = (df['Age'] - df['Age'].min()) / (df['Age'].max() - df['Age'].min())

# Data standardization (z-score normalization)

df['Age_standardized'] = (df['Age'] - df['Age'].mean()) / df['Age'].std()

# Removing duplicates

df_no_duplicates = df.drop_duplicates()

print("\nDataFrame without Duplicates:")

print(df_no_duplicates)

Output:

These examples show how to use Pandas to handle missing data, normalize and standardize data, and remove duplicates. If you use these techniques, you can be more confident that your data is clean, consistent, and ready for analysis or modelling.
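Whether a row counts as a duplicate depends on which columns are compared. The following sketch uses a made-up table to contrast exact duplicates with duplicates on a subset of columns, and shows the `keep` option.

```python
import pandas as pd

# Hypothetical table: row 2 repeats row 0 exactly; row 3 shares only the name
df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Alice", "Alice"],
    "City": ["LA", "Chicago", "LA", "Boston"],
    "Age": [25, 35, 25, 26],
})

dup_mask = df.duplicated()                                       # flags exact repeats
exact = df.drop_duplicates()                                     # compares all columns
by_name = df.drop_duplicates(subset=["Name"])                    # first row per name
by_name_last = df.drop_duplicates(subset=["Name"], keep="last")  # last row per name

print(dup_mask.tolist())
print(exact)
print(by_name)
print(by_name_last)
```

Calling `duplicated()` first is a cheap way to inspect what would be removed before actually dropping anything.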

Time Series Data Handling with Pandas

Time series data is made up of observations recorded over time. Pandas has powerful tools for working with and analyzing time series data quickly and easily. Let’s look at the most important aspects of using Pandas to work with time series data:

1. Introduction to Handling Time Series Data

The data in a time series is a sequence of observations ordered by time. It can be univariate (with only one variable) or multivariate (with many variables). Pandas has special data structures and functions for working with time series data. These make it easy to do things like slicing, resampling, and indexing.

2. Working with DatetimeIndex

To index time series data, Pandas provides a dedicated “DatetimeIndex.” It represents a sequence of timestamps, which makes it easy to slice and select data based on dates and times. Pandas has many ways to create a DatetimeIndex, such as pd.to_datetime(), which converts strings or other formats into datetime objects.
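A short sketch of date-based selection: it converts made-up date strings with `pd.to_datetime()`, uses the result as an index, and then selects by a partial date string and by a date range.

```python
import pandas as pd

# Hypothetical observations spanning the end of January 2022
dates = pd.to_datetime(["2022-01-30", "2022-01-31", "2022-02-01", "2022-02-02"])
ts = pd.Series([1, 2, 3, 4], index=dates)

january = ts.loc["2022-01"]                 # partial string: every row in January
window = ts.loc["2022-01-31":"2022-02-01"]  # date slice: both endpoints included

print(type(dates).__name__)
print(january)
print(window)
```

Because date slices are label-based, both ends of the range are included, which matches the label-slicing behaviour of `loc` elsewhere in Pandas.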

3. Resampling and Frequency Conversion

When you resample, you change the frequency of the time series data through either upsampling (increasing the frequency) or downsampling (decreasing the frequency). The resample() method in Pandas lets you aggregate or interpolate data over different time intervals. This lets you do things like compute summary statistics, aggregate data into larger time periods, or fill in missing values.

import pandas as pd

# Create a sample time series DataFrame

date_rng = pd.date_range(start='2022-01-01', end='2022-01-10', freq='D')

df = pd.DataFrame(date_rng, columns=['date'])

df['data'] = range(len(df))

# Set 'date' column as DateTimeIndex

df.set_index('date', inplace=True)

# Working with DateTimeIndex

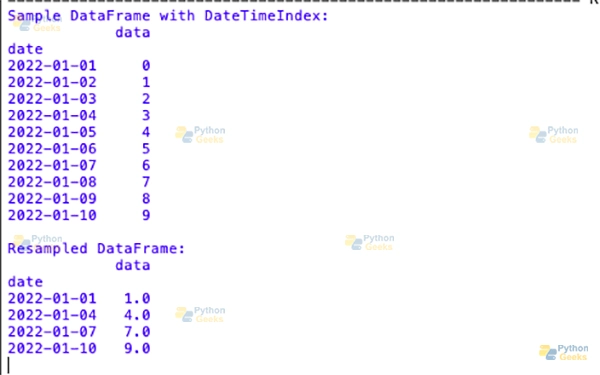

print("Sample DataFrame with DateTimeIndex:")

print(df)

# Resampling and frequency conversion (downsampling)

df_resampled = df.resample('3D').mean() # Resample data to 3-day intervals, compute mean

print("\nResampled DataFrame:")

print(df_resampled)

Output:

We created a sample time series DataFrame with a DatetimeIndex covering the dates from January 1, 2022, to January 10, 2022. Then, we used resample() to downsample the data into three-day bins and computed the mean value for each bin.

You can easily handle and analyze time series data with Pandas’s DatetimeIndex and resampling capabilities. This makes it easier to find trends, make predictions, and detect outliers.
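The example above covers downsampling. For the opposite direction, here is a minimal upsampling sketch, assuming a made-up three-day Series that is converted to 12-hour steps. Upsampling creates new timestamps with no data, so you have to choose how to fill them.

```python
import pandas as pd

# Hypothetical daily readings
idx = pd.date_range("2022-01-01", periods=3, freq="D")
daily = pd.Series([0.0, 10.0, 20.0], index=idx)

upsampled = daily.resample("12h").asfreq()          # new timestamps are NaN
forward_filled = daily.resample("12h").ffill()      # repeat the last known value
interpolated = daily.resample("12h").interpolate()  # linear interpolation

print(upsampled.tolist())
print(forward_filled.tolist())
print(interpolated.tolist())
```

Forward filling suits values that stay constant until the next reading, while interpolation suits quantities that change gradually.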

Visualization with Pandas

The built-in data visualization tools in Pandas make it easy to create useful plots directly from DataFrame or Series objects. Let’s look at the most important aspects of using Pandas for visualization:

1. Overview of Data Visualization Capabilities

Pandas has functions that are easy to use yet powerful for making different kinds of plots, including line plots, bar plots, histograms, scatter plots, and more. These plotting functions are built on Matplotlib, a popular Python plotting library, which makes them easy to use with Pandas data structures.

2. Using Built-in Plotting Functions

The ‘plot()’ method of DataFrame and Series objects gives you access to a set of built-in plotting functions. You can quickly see patterns, trends, and relationships with these functions because they create plots directly from the data in the DataFrame or Series.

3. Customizing Plots for Better Visualization

Even though Pandas’ built-in plotting functions come with useful defaults, you can customize plots further to make them look better and be easier to understand. You can change the colors, labels, titles, axes, legends, and plot types, among other things, to make the visualization fit your needs.

Let’s look at a few examples of how Pandas can be used for visualization:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

# Create a sample DataFrame

np.random.seed(0)

data = pd.DataFrame(np.random.randn(100, 4), columns=['A', 'B', 'C', 'D'])

data = data.cumsum()

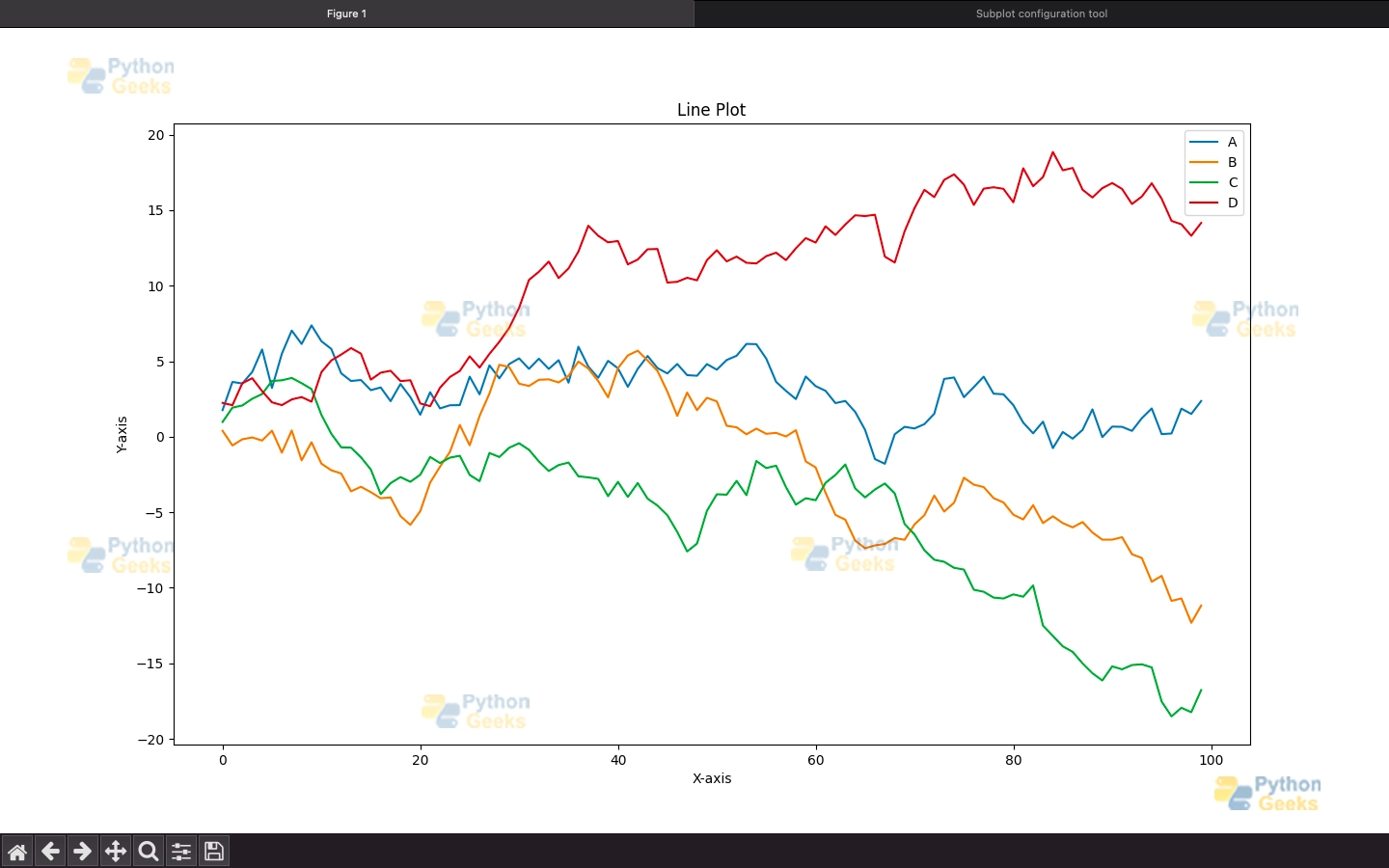

# Line plot

data.plot()

plt.title('Line Plot')

plt.xlabel('X-axis')

plt.ylabel('Y-axis')

plt.show()

# Bar plot

data.iloc[0].plot(kind='bar')

plt.title('Bar Plot')

plt.xlabel('Columns')

plt.ylabel('Values')

plt.show()

# Histogram plot

data['A'].plot(kind='hist', bins=20)

plt.title('Histogram Plot')

plt.xlabel('Values')

plt.ylabel('Frequency')

plt.show()

# Scatter plot

data.plot(kind='scatter', x='A', y='B')

plt.title('Scatter Plot')

plt.xlabel('A')

plt.ylabel('B')

plt.show()

Output:

In these examples, we used Pandas’ built-in plotting functions to make line, bar, histogram, and scatter plots. We also customized the plots using Matplotlib functions to add titles and axis labels.

You can effectively explore, analyze, and share insights from your data through compelling visualizations using Pandas’ visualization tools and customization options.
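Customization can also be done through arguments to `plot()` itself and through the Matplotlib Axes object it draws on. The sketch below is one possible approach, using a made-up table; the `Agg` backend line is an assumption so that the code runs without a display and can be dropped in a notebook.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; an assumption for headless runs
import matplotlib.pyplot as plt
import pandas as pd

# Hypothetical data
df = pd.DataFrame({"Name": ["John", "Alice", "Bob"], "Age": [30, 25, 35]})

# Create the Axes first so the plot can be customized afterwards
fig, ax = plt.subplots(figsize=(6, 4))
df.plot(
    kind="bar", x="Name", y="Age", ax=ax,
    color="steelblue", legend=False, title="Age by Name",
)
ax.set_xlabel("Name")
ax.set_ylabel("Age (years)")
fig.tight_layout()
```

Passing `ax=` keeps a handle on the Axes, which makes it straightforward to place several Pandas plots in one figure or to save the result with `fig.savefig()`.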

Summary

In summary, Pandas is a powerful tool that enables efficient data manipulation and analysis in Python. Over the course of this article, we looked at its main data structures, the Series and the DataFrame, and saw how to perform common table manipulations, including filtering, sorting, grouping, merging, and reshaping. We also saw how Pandas streamlines data cleaning and the handling of time series, which can be complex. Finally, Pandas offers built-in plotting for visualization, giving ample opportunity to explore and interpret data.

With its straightforward syntax and extensive feature set, Pandas equips data scientists, statisticians, and researchers to handle preprocessing, cleaning, analyzing, and visualizing data, which supports well-informed decision-making across many kinds of datasets. Over time, you’ll find yourself exploring Pandas more deeply; it is a valuable tool for any data analysis enthusiast, professional, or student who wants to learn how to explore and manipulate data.