Features of Pandas


In data analytics, Pandas is a central tool: it provides the structures and functions needed to clean and analyse structured data with significant time savings. Created in response to the community's demand for a versatile data manipulation library, Pandas has, within a few years, become an industry standard and has reshaped many workflows. Its tight integration with Python and NumPy is the backbone of its user-friendly interface and its efficient data operations. Its wide adoption across the Python world reflects both the simplicity its designers built into it and the functionality it brings to the wider ecosystem.

At its core, Pandas revolves around two essential data structures: Series and DataFrame. This architecture lets users address a wide range of data problems, from cleansing and preprocessing error-prone data streams to high-level data analysis tasks.

Working with Pandas makes extracting insights from data far easier. Its functionality covers filling in missing values, grouping and aggregating data, working with time series, and more. It can also import and export data in many different file formats.

In this guide for data enthusiasts, we examine the library's rich feature set so that readers can appreciate the actual value of their data sets. Join us on a tour of what Pandas offers for data analysis.

Data Structures in Pandas: Unveiling Series and DataFrame

When working with Pandas to manipulate data, it is crucial to understand its primary data structures, Series and DataFrame. These flexible structures make it possible to handle and analyse data quickly and with little effort, and they cover a wide range of data processing needs.

Series: Unveiling the One-Dimensional Powerhouse

A Pandas Series is, at its core, a one-dimensional labelled array. It is like a column in a spreadsheet or a single variable in a dataset. A Series differs from a regular array in that it can hold any type of data, such as integers, floats, strings, or even Python objects. It also carries an index that lets you access the data by label in an easily readable way.

Calling pd.Series() with a list of values is all it takes to make a Series:

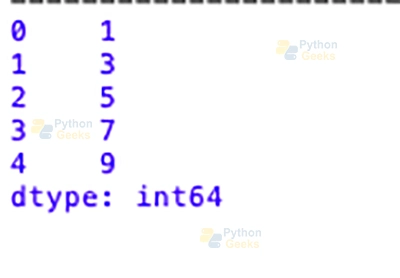

import pandas as pd

# Creating a Series

s = pd.Series([1, 3, 5, 7, 9])

print(s)

In this example, the Series s contains the odd numbers from 1 to 9, and the default index consists of the integers 0 to 4.
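The index does not have to consist of integers. As a minimal sketch (the labels 'a' to 'e' and the variable names are made up for illustration), a Series can be built with a custom index and then read either by label or by position:

```python
import pandas as pd

# Hypothetical labels chosen for illustration
s = pd.Series([1, 3, 5, 7, 9], index=['a', 'b', 'c', 'd', 'e'])

by_label = s['c']        # look up by index label
by_position = s.iloc[0]  # look up by integer position

# A Series can also hold mixed Python objects; its dtype is then `object`
mixed = pd.Series([1, 2.5, 'three'])

print(by_label, by_position, mixed.dtype)
```

Using .iloc for positional access keeps the intent explicit when the index labels are not integers.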

DataFrame: Navigating the Two-Dimensional Landscape

A Pandas DataFrame is a two-dimensional labelled data structure that builds on Series. It looks like a table or a spreadsheet: it has rows and columns, and every column is a Series. DataFrame is the most widely used structure for working with structured data, making it easy to inspect and analyse data in a table-like format.

It is straightforward to construct a DataFrame, and you can often use dictionaries or arrays to do it:

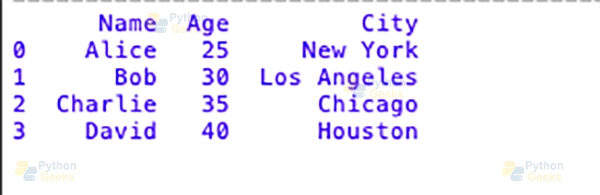

import pandas as pd

# Creating a DataFrame from a dictionary

data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'],

'Age': [25, 30, 35, 40],

'City': ['New York', 'Los Angeles', 'Chicago', 'Houston']}

df = pd.DataFrame(data)

print(df)


In this example, every key in the dictionary becomes a column in the DataFrame, and the rows are automatically numbered from 0 to n-1.
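The text also mentions building a DataFrame from arrays. The following sketch, with an illustrative two-row dataset, builds one from a list of rows and checks that a single column really is a Series:

```python
import pandas as pd

# Building a table from a list of rows instead of a dictionary
rows = [['Alice', 25, 'New York'],
        ['Bob', 30, 'Los Angeles']]
df = pd.DataFrame(rows, columns=['Name', 'Age', 'City'])

age_column = df['Age']      # a single column is a Series
n_rows, n_cols = df.shape   # (number of rows, number of columns)

print(type(age_column).__name__, n_rows, n_cols, list(df.index))
```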

Series and DataFrame are the most important parts of Pandas' data manipulation toolkit. They provide a robust, easy-to-use framework for working with structured data. Data enthusiasts who understand these data structures well can use Pandas to its fullest extent in their analysis projects.

Data Manipulation with Pandas: Unleashing the Power of Versatility

When it comes to manipulating data, Pandas provides a wealth of functions that make working with structured data quick and clean. It offers an extensive range of tools, from simple indexing and slicing to more involved merging and concatenating. Let's walk through data manipulation with Pandas and look at its main functions.

Indexing and Slicing: Navigating Data with Precision

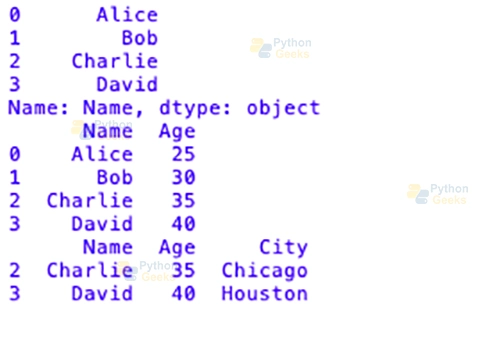

Pandas' indexing and slicing tools are at the heart of its ability to work with large amounts of data. It is easy to reach specific data with Pandas, whether rows, columns, or individual elements. Users can pull what they need from a DataFrame with label-based indexing via .loc[], position-based indexing via .iloc[], or boolean indexing.

# Indexing and Slicing Example

import pandas as pd

# Creating a DataFrame

data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'],

'Age': [25, 30, 35, 40],

'City': ['New York', 'Los Angeles', 'Chicago', 'Houston']}

df = pd.DataFrame(data)

# Selecting specific columns

print(df['Name']) # Selecting a single column

print(df[['Name', 'Age']]) # Selecting multiple columns

# Selecting rows based on condition

print(df[df['Age'] > 30]) # Selecting rows where Age is greater than 30
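The example above covers column selection and boolean filtering but not .loc[] or .iloc[]. The sketch below rebuilds the same DataFrame and shows both. The main difference to remember is that label slices include the end label, while position slices exclude the end position:

```python
import pandas as pd

df = pd.DataFrame({'Name': ['Alice', 'Bob', 'Charlie', 'David'],
                   'Age': [25, 30, 35, 40],
                   'City': ['New York', 'Los Angeles', 'Chicago', 'Houston']})

# Label-based: row label 1, column label 'Name'
name_at_1 = df.loc[1, 'Name']

# Position-based: first two rows, first two columns (end position excluded)
top_left = df.iloc[0:2, 0:2]

# Label slices include the end label, so this returns rows 0, 1 and 2
first_three = df.loc[0:2]

# Boolean mask combined with a column selection
over_30 = df.loc[df['Age'] > 30, ['Name', 'City']]

print(name_at_1)
print(top_left)
print(first_three)
print(over_30)
```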

Merging and Joining: Seamlessly Integrating Data

Because real-world analysis often draws on several sources, it is regularly necessary to combine data from different places. ‘merge()’ and ‘join()’ are two of the functions Pandas provides for combining datasets. Pandas lets users merge DataFrames on one or more shared key columns, using inner, outer, left, or right joins. This opens up the possibility of in-depth data exploration and analysis.

# Merging Example

df1 = pd.DataFrame({'key': ['A', 'B', 'C', 'D'],

'value': [1, 2, 3, 4]})

df2 = pd.DataFrame({'key': ['B', 'D', 'E', 'F'],

'value': [5, 6, 7, 8]})

merged_df = pd.merge(df1, df2, on='key', how='inner')

print(merged_df)
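To compare the join types mentioned above, the sketch below reuses the same two frames. The suffixes '_left' and '_right' are an illustrative choice; by default Pandas appends '_x' and '_y' when both frames contain a column with the same name.

```python
import pandas as pd

df1 = pd.DataFrame({'key': ['A', 'B', 'C', 'D'], 'value': [1, 2, 3, 4]})
df2 = pd.DataFrame({'key': ['B', 'D', 'E', 'F'], 'value': [5, 6, 7, 8]})

# Both frames have a 'value' column, so suffixes keep them apart
suffixes = ('_left', '_right')
inner = pd.merge(df1, df2, on='key', how='inner', suffixes=suffixes)  # keys in both
outer = pd.merge(df1, df2, on='key', how='outer', suffixes=suffixes)  # keys in either
left = pd.merge(df1, df2, on='key', how='left', suffixes=suffixes)    # all keys of df1

# join() aligns on the index, so the key column is moved into the index first
joined = df1.set_index('key').join(df2.set_index('key'),
                                   lsuffix='_left', rsuffix='_right')

print(inner)
print(outer)
print(left)
print(joined)
```

Rows without a match on the other side are kept in the outer and left results, with NaN in the columns that come from the missing side.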

Concatenating: Stitching Data Together

The ‘concat()’ function in Pandas is useful when you need to stack data either vertically or horizontally. Whether you combine multiple DataFrames row-wise or column-wise, the call looks the same, which makes combining data straightforward.

# Concatenating Example

df1 = pd.DataFrame({'A': ['A0', 'A1', 'A2'],

'B': ['B0', 'B1', 'B2']})

df2 = pd.DataFrame({'A': ['A3', 'A4', 'A5'],

'B': ['B3', 'B4', 'B5']})

concatenated_df = pd.concat([df1, df2], axis=0)

print(concatenated_df)
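Two details are worth a short sketch. Row-wise concatenation keeps the original index labels unless ignore_index=True is passed, and axis=1 places frames side by side. The third frame, df3, is a hypothetical addition for illustration.

```python
import pandas as pd

df1 = pd.DataFrame({'A': ['A0', 'A1', 'A2'], 'B': ['B0', 'B1', 'B2']})
df2 = pd.DataFrame({'A': ['A3', 'A4', 'A5'], 'B': ['B3', 'B4', 'B5']})
df3 = pd.DataFrame({'C': ['C0', 'C1', 'C2']})  # hypothetical extra column

# Row-wise: without ignore_index the result keeps index labels 0, 1, 2, 0, 1, 2
stacked = pd.concat([df1, df2], axis=0, ignore_index=True)

# Column-wise: frames are aligned on their index labels
side_by_side = pd.concat([df1, df3], axis=1)

print(stacked)
print(side_by_side)
```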

Simplifying Data Cleaning and Preprocessing: A Paradigm Shift

Pandas is not only good at slicing and combining data; it is also excellent at cleaning and preprocessing it. Pandas makes it simpler to prepare raw data for analysis by letting you handle missing data, convert data types, and apply transformations, so that insights can surface without avoidable obstacles.

# Data Cleaning and Preprocessing Example

# Handling Missing Data (both methods return a new DataFrame)

df_dropped = df.dropna() # Drop rows with missing values

df_filled = df.fillna(0) # Fill missing values with 0

# Data Type Conversions

df['Age'] = df['Age'].astype(str) # Convert Age column to string

# Applying Data Transformations

df['City'] = df['City'].apply(lambda x: x.upper()) # Convert City names to uppercase

As we learn more about manipulating data, Pandas stands out as a flexible and fast tool that lets users work with data with great ease. Once users fully understand Pandas' options for indexing, slicing, merging, joining, and concatenating, they can use their datasets to their fullest capacity.

Missing Data Handling with Pandas: Navigating the NaN Territory

In real datasets, missing or “NaN” (Not a Number) values regularly cause difficulty and can distort the results and insights of an analysis. Pandas provides several approaches to dealing with missing data, ranging from simple ones, like dropping missing values, to more involved ones, like interpolation.

Dropping Missing Values: Streamlining Data Cleanup

import pandas as pd

# Creating a DataFrame with missing values

data = {'A': [1, 2, None, 4],

'B': [None, 5, 6, 7]}

df = pd.DataFrame(data)

# Drop rows with any NaN values

clean_df = df.dropna()

print(clean_df)

One simple way to cope with missing data is to discard the rows or columns that contain NaN values. Pandas' ‘dropna()’ method lets users remove rows or columns that contain any NaN values, or only those that fall below a chosen threshold of non-missing values.
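The row, column, and threshold variants can be sketched with the same small DataFrame. The parameters axis, thresh, and subset belong to dropna(); the variable names are illustrative.

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, None, 4],
                   'B': [None, 5, 6, 7]})

rows_any = df.dropna()                 # drop rows containing any NaN
cols_any = df.dropna(axis=1)           # drop columns containing any NaN
rows_thresh = df.dropna(thresh=1)      # keep rows with at least 1 non-NaN value
rows_subset = df.dropna(subset=['A'])  # only consider NaN in column 'A'

print(rows_any)
print(cols_any.shape)
print(rows_thresh)
print(rows_subset)
```

In this dataset both columns contain a NaN, so dropping columns leaves no columns at all, which illustrates why the row-wise default is usually the safer starting point.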

Filling Missing Values: Bridging the Gaps

Alternatively, users can call the “fillna()” method to replace missing values with actual values. This approach keeps every row in the dataset while reducing the impact of missing values on subsequent analyses.

# Fill missing values with specified value

filled_df = df.fillna(0) # Fill NaN values with 0

print(filled_df)
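A constant such as 0 is not always a sensible replacement. As a sketch of two common alternatives (whether they suit a given dataset is a judgment call), missing values can be filled with each column's mean or with the last valid value above them:

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, None, 4],
                   'B': [None, 5, 6, 7]})

# Each column's NaN is replaced by that column's mean
mean_filled = df.fillna(df.mean())

# Carry the last valid value forward; a leading NaN has nothing to copy from
forward_filled = df.ffill()

print(mean_filled)
print(forward_filled)
```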

Interpolation: Inferring Missing Values

Interpolation is a useful tool in situations where the missing values follow a pattern or trend. ‘interpolate()’ in Pandas lets users fill in missing values by looking at neighbouring points and applying different interpolation methods, such as linear, polynomial, or spline interpolation.

# Interpolate missing values using linear interpolation

interpolated_df = df.interpolate(method='linear')

print(interpolated_df)
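One subtlety: by default, linear interpolation fills gaps in the forward direction only, so a NaN at the very start of a column stays missing. The sketch below uses the same data and shows the limit_direction parameter as one way to cover leading gaps as well:

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, None, 4],
                   'B': [None, 5, 6, 7]})

# Default direction: the gap in 'A' is filled, the leading NaN in 'B' is not
forward_only = df.interpolate(method='linear')

# Fill in both directions: the leading NaN takes the nearest valid value
both_ways = df.interpolate(method='linear', limit_direction='both')

print(forward_only)
print(both_ways)
```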

When analysing data, missing information is a common problem that threatens the accuracy of the results. Pandas offers several ways to cope with it, from dropping and filling missing values to interpolation. This means users can move through NaN territory with confidence, so that missing data does not stand in the way of valuable insights.

Unleashing the Power of GroupBy in Pandas: Harnessing the Essence of Data

In data analysis, it is vital to understand how data is structured. Pandas' GroupBy functionality lets users split datasets and examine them by specific criteria, which can reveal insights that are not visible otherwise. Let's look at what GroupBy can do for aggregating, transforming, and analysing data.

Introducing GroupBy: The Key to Data Segmentation

At its core, Pandas' GroupBy divides data into groups based on one or more keys, letting users group rows by what they have in common. You can organise data into groups based on categorical variables, time periods, or any other useful criterion, which makes more in-depth analysis and exploration possible.

import pandas as pd

# Creating a DataFrame

data = {'Category': ['A', 'B', 'A', 'B', 'A'],

'Value': [10, 20, 30, 40, 50]}

df = pd.DataFrame(data)

# Grouping by 'Category'

grouped = df.groupby('Category')

Unveiling the Power of Aggregation: Consolidating Insights

Once the data has been grouped, Pandas offers many aggregation functions that summarise the values within each group. GroupBy lets users turn complex datasets into usable summaries, whether they are computing the sum, mean, median, or another statistical metric.

# Computing sum within each group

sum_by_category = grouped.sum()

print(sum_by_category)
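Several statistics can be computed in one pass with agg(). The sketch below rebuilds the same data; the output column names total and average are illustrative.

```python
import pandas as pd

df = pd.DataFrame({'Category': ['A', 'B', 'A', 'B', 'A'],
                   'Value': [10, 20, 30, 40, 50]})
grouped = df.groupby('Category')

# Several aggregations at once; one result column per function
summary = grouped['Value'].agg(['sum', 'mean', 'count'])

# Named aggregation: choose the output column names explicitly
named = grouped.agg(total=('Value', 'sum'), average=('Value', 'mean'))

print(summary)
print(named)
```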

Harnessing the Magic of Transformation: Enriching Data

GroupBy does more than aggregate data; it also supports transforming data by letting users apply custom functions to each group individually. Within-group operations such as standardising or normalising add useful information to a dataset while keeping its original shape.

# Applying a custom transformation function

def normalize(group):

    return (group - group.mean()) / group.std()

normalized_data = grouped['Value'].transform(normalize)

print(normalized_data)
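Unlike an aggregation, transform() returns one value per original row, so its result can be stored as a new column. The sketch below uses the same data; the column names ShareOfGroup and ZScore are made up for illustration.

```python
import pandas as pd

df = pd.DataFrame({'Category': ['A', 'B', 'A', 'B', 'A'],
                   'Value': [10, 20, 30, 40, 50]})

# One group total per original row, aligned to df's index
group_total = df.groupby('Category')['Value'].transform('sum')
df['ShareOfGroup'] = df['Value'] / group_total

# Within-group z-score, the same idea as the normalize function above
df['ZScore'] = df.groupby('Category')['Value'].transform(
    lambda g: (g - g.mean()) / g.std())

print(df)
```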

Uncovering Patterns with Analysis: Illuminating Insights

GroupBy does more than aggregate and transform data. It can also help users analyse complex data by finding patterns, trends, and outliers within each group. Pandas' GroupBy is a key tool for getting useful statistics from segmented datasets, whether you are doing exploratory data analysis, hypothesis testing, or any other kind of analysis.

# Performing statistical analysis within each group

statistical_summary = grouped.describe()

print(statistical_summary)

GroupBy stands out for its flexibility and performance in data analysis. By using GroupBy to aggregate, transform, and analyse data, users can explore their datasets group by group and uncover insights that a flat view would hide.

Unlocking the Power of Time Series Analysis with Pandas

Time series data brings its own set of problems and possibilities to data analysis. Understanding temporal patterns is crucial for finding insights and making sound decisions, whether the data is financial information, sensor readings, or stock prices. Pandas is a flexible library with an extensive range of functions designed to work with time series data. Let's look at what Pandas can do for time series analysis, including how well it handles time zones, resampling, and indexing by dates and times.

Date/Time Indexing: Anchoring Insights in Time

Date/time indexing is one of the most important components of Pandas' time series capabilities. Using specialised data structures like DatetimeIndex, Pandas lets users index and manipulate time series data easily, making it simple to slice, filter, and combine data based on time parameters.

import pandas as pd

# Creating a time series DataFrame

dates = pd.date_range('2022-01-01', periods=5, freq='D')

data = {'Values': [10, 20, 30, 40, 50]}

ts_df = pd.DataFrame(data, index=dates)

# Accessing data based on date/time index (rows are selected with .loc)

print(ts_df.loc['2022-01-03'])
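Slicing and filtering by time work through the same index. The sketch below rebuilds the five-day series and selects a date range, a whole month via a partial date string, and every row after a given timestamp:

```python
import pandas as pd

dates = pd.date_range('2022-01-01', periods=5, freq='D')
ts_df = pd.DataFrame({'Values': [10, 20, 30, 40, 50]}, index=dates)

# Slicing by date strings includes both end points
window = ts_df.loc['2022-01-02':'2022-01-04']

# Partial-string indexing: every row in January 2022
january = ts_df.loc['2022-01']

# Boolean filtering on the index itself
after_third = ts_df[ts_df.index > pd.Timestamp('2022-01-03')]

print(window)
print(january)
print(after_third)
```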

Resampling: Unveiling Temporal Trends

The resampling feature in Pandas lets users convert time series data from one frequency to another, allowing them to see temporal trends more clearly. Pandas handles both upsampling (increasing the frequency) and downsampling (reducing it), so that temporal patterns are captured accurately.

# Resampling daily data to monthly frequency ('M' is month-end; pandas 2.2+ prefers the alias 'ME')

monthly_ts = ts_df.resample('M').mean()

print(monthly_ts)
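Both directions can be sketched on the same five-day series. The 2-day and 12-hour frequencies are illustrative choices: downsampling needs an aggregation such as sum(), while upsampling needs a rule for the newly created rows, here a forward fill.

```python
import pandas as pd

dates = pd.date_range('2022-01-01', periods=5, freq='D')
ts_df = pd.DataFrame({'Values': [10, 20, 30, 40, 50]}, index=dates)

# Downsampling: combine daily rows into 2-day bins and sum each bin
two_day = ts_df.resample('2D').sum()

# Upsampling: move to a 12-hour grid and carry the last known value forward
half_day = ts_df.resample('12h').ffill()

print(two_day)
print(half_day)
```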

Time Zone Handling: Navigating Temporal Realms

Time zone handling is essential for correctly analysing and interpreting time series data in a connected, international environment. Pandas' time zone support makes it easy to localise and convert time series data across multiple time zones, ensuring consistent and accurate temporal analyses.

# Converting time zone

ts_df_utc = ts_df.tz_localize('UTC')

ts_df_ny = ts_df_utc.tz_convert('America/New_York')

print(ts_df_ny)

For time series analysis, Pandas is a vital tool that offers users various ways of identifying patterns and trends over time. Whether through date/time indexing, resampling, or time zone handling, Pandas helps analysts work with temporal data correctly and with confidence, and use it to make sound decisions.

Seamless Data Interchange with Pandas: Exploring Input/Output Tools

Reading and writing data in different file formats with little effort is essential in data analysis. Pandas has a range of input/output (I/O) tools that work with many data sources. Let's look at how Pandas reads and writes data in standard formats like CSV, Excel, and SQL databases, and at how flexible and simple these I/O tools are to use.

Reading the Data: Bringing People Together

Pandas makes it easier to get data from outside sources by providing a consistent interface for reading many file formats. Its read_*() functions make it easy to import tabular data from CSV files, Excel spreadsheets, or relational databases into DataFrame objects, ready for later analysis and exploration.

import pandas as pd

# Reading data from CSV file

csv_df = pd.read_csv('data.csv')

# Reading data from Excel spreadsheet

excel_df = pd.read_excel('data.xlsx')

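# Reading data from SQL database

# (assumes `connection` is an already open database connection and that a

# table named my_table exists; the bare word "table" is reserved in SQL)

sql_df = pd.read_sql_query('SELECT * FROM my_table', connection)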

Writing Data: Making Insights Stick

In the same manner, Pandas lets users export DataFrame objects to a wide variety of file formats, which makes it easy to share and distribute the insights gained from an analysis. Pandas' to_*() methods offer a flexible, user-friendly way to export data, including saving it to CSV files, Excel spreadsheets, and SQL databases.

# Writing data to CSV file

csv_df.to_csv('output.csv', index=False)

# Writing data to Excel spreadsheet

excel_df.to_excel('output.xlsx', index=False)

# Writing data to SQL database

sql_df.to_sql('table', connection, if_exists='replace', index=False)
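The snippets above depend on files and a database connection that are not shown. As a self-contained sketch, the round trip below uses an in-memory text buffer and an in-memory SQLite database instead; the table name people and the sample data are assumptions made for illustration.

```python
import io
import sqlite3

import pandas as pd

df = pd.DataFrame({'name': ['Alice', 'Bob'], 'age': [25, 30]})

# CSV round trip through an in-memory text buffer instead of a file on disk
buffer = io.StringIO()
df.to_csv(buffer, index=False)
buffer.seek(0)
csv_df = pd.read_csv(buffer)

# SQL round trip through an in-memory SQLite database
connection = sqlite3.connect(':memory:')
df.to_sql('people', connection, if_exists='replace', index=False)
sql_df = pd.read_sql_query('SELECT * FROM people', connection)
connection.close()

print(csv_df)
print(sql_df)
```

Passing index=False on export keeps the row index out of the output, so reading the data back gives the same columns that were written.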

A Smooth Experience with Flexibility and Ease of Use

Pandas' I/O tools stand out because they are flexible and easy to use. They provide a unified, easy-to-understand interface that lets users concentrate on analysing data rather than on parsing it, even when working with big datasets, complicated data structures, or several file formats. Pandas also lets you customise delimiters, encodings, and date/time formats, which makes its I/O tools adaptable to many data processing needs.
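The customisation options mentioned above can be sketched with read_csv(). The input is a hypothetical export that uses semicolons as delimiters, Latin-1 encoding, and decimal commas; it is held in memory so the example runs without a file.

```python
import io

import pandas as pd

# Hypothetical export: semicolon-separated, Latin-1 encoded, decimal commas
raw = ('date;city;temp\n'
       '2022-01-01;Zürich;3,5\n'
       '2022-01-02;Málaga;17,0\n').encode('latin-1')

df = pd.read_csv(io.BytesIO(raw),
                 sep=';',               # custom delimiter
                 encoding='latin-1',    # encoding of the source bytes
                 decimal=',',           # decimal comma instead of a point
                 parse_dates=['date'])  # parse this column as datetimes

print(df)
print(df.dtypes)
```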

With its comprehensive set of I/O tools, Pandas connects different data sources. Its I/O functions make it possible to import data from CSV files, Excel spreadsheets, or SQL databases and to export results to the same formats. Because these functions are flexible and easy to use, users can get the most out of their datasets.

Perform Mathematical Operations on the Data

Pandas is also a powerful tool for data analysis because it lets you perform a variety of mathematical operations on your data. Let's look at some of the most important operations, along with a few code samples:

1. Element-wise Operations:

With Pandas, you can apply arithmetic to Series and DataFrame objects element by element, which makes calculations over complete datasets simple.

import pandas as pd

# Create a DataFrame

data = {'A': [1, 2, 3, 4],

'B': [5, 6, 7, 8]}

df = pd.DataFrame(data)

# Element-wise addition

result = df['A'] + df['B']

print(result)

2. Basic Arithmetic Operations:

Basic arithmetic operations like addition, subtraction, multiplication, and division can be performed directly on Series or DataFrame objects.

# Element-wise multiplication

result = df['A'] * 2

print(result)

3. Aggregation Functions:

‘sum’, ‘mean’, ‘min’, ‘max’, and other aggregation functions in Pandas let you compute summary statistics for your data.

# Compute the sum of each column

column_sums = df.sum()

print(column_sums)

4. Cumulative Operations:

Cumulative operations let you compute cumulative sums, products, maximums, and minimums along a given axis.

# Compute cumulative sum across the columns of each row (axis=1)

cumulative_sum = df.cumsum(axis=1)

print(cumulative_sum)

5. Handling Missing Values:

Pandas handles missing values (NaNs) automatically when operations are performed: in element-wise arithmetic, a NaN operand yields NaN instead of raising an error, so calculations proceed smoothly.

# Create a DataFrame with missing values

data_with_nan = {'A': [1, 2, None, 4],

'B': [5, None, 7, 8]}

df_with_nan = pd.DataFrame(data_with_nan)

# Element-wise addition with missing values

result_with_nan = df_with_nan['A'] + df_with_nan['B']

print(result_with_nan)
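How NaN behaves depends on the kind of operation. In the sketch below, which uses the same data, plain addition propagates NaN, the add() method with fill_value substitutes a value for a single missing operand, and aggregations such as sum() skip NaN by default:

```python
import pandas as pd

df_with_nan = pd.DataFrame({'A': [1, 2, None, 4],
                            'B': [5, None, 7, 8]})

# NaN wherever either operand is NaN
plain = df_with_nan['A'] + df_with_nan['B']

# Treat a missing operand as 0 when the other side is present
filled = df_with_nan['A'].add(df_with_nan['B'], fill_value=0)

# Aggregations skip NaN by default
total_a = df_with_nan['A'].sum()

print(plain)
print(filled)
print(total_a)
```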

6. Custom Functions:

The ‘apply()’ method lets you apply custom functions to your data, which helps you perform complex mathematical operations or transformations.

# Define a custom function

def custom_function(x):

    return x ** 2 + 1

# Apply the custom function to a column

result_custom = df['A'].apply(custom_function)

print(result_custom)

7. Vectorised Operations:

Vectorised operations, which Pandas uses throughout, are highly optimised and much faster than standard Python loops for calculations.

# Element-wise multiplication using vectorized operations

result_vectorized = df['A'] * df['B']

print(result_vectorized)

These examples show how Pandas simplifies mathematical work on data, letting you calculate quickly and efficiently as part of your data analysis workflow.
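To make the contrast concrete, the sketch below computes the same product three ways: with a Python loop, with a row-wise apply(), and with a vectorised expression. It checks only that the results agree and does not measure speed; the variable names are illustrative.

```python
import pandas as pd

df = pd.DataFrame({'A': [1, 2, 3, 4], 'B': [5, 6, 7, 8]})

# Plain Python loop over the values
looped = pd.Series([a * b for a, b in zip(df['A'], df['B'])])

# Row-wise apply: flexible, but still calls a Python function once per row
applied = df.apply(lambda row: row['A'] * row['B'], axis=1)

# Vectorised: one expression over whole columns
vectorised = df['A'] * df['B']

print(looped.tolist(), applied.tolist(), vectorised.tolist())
```

When a calculation can be written as column arithmetic, the vectorised form is usually both the shortest and the one that scales best to large datasets.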

Unleashing Insights with Pandas: Seamlessly Integrating Visualisation

Visualisations are a very effective way to communicate what you already know and to discover patterns in big datasets. Together with Matplotlib, Seaborn, and other Python visualisation libraries, Pandas offers tools for creating a variety of plots directly from DataFrame objects. Here we discuss how combining Pandas with Matplotlib and Seaborn makes it easier to create effective visualisations.

Combining Matplotlib and Seaborn: Making the Most of Visualisation Power

Pandas works well with Matplotlib and Seaborn, two well-known Python libraries for visualising data that are regarded as flexible and attractive. Pandas' own plotting methods use Matplotlib by default, and Seaborn's functions accept DataFrame objects directly, so you can make many different plots, from simple line plots to complex statistical visualisations.

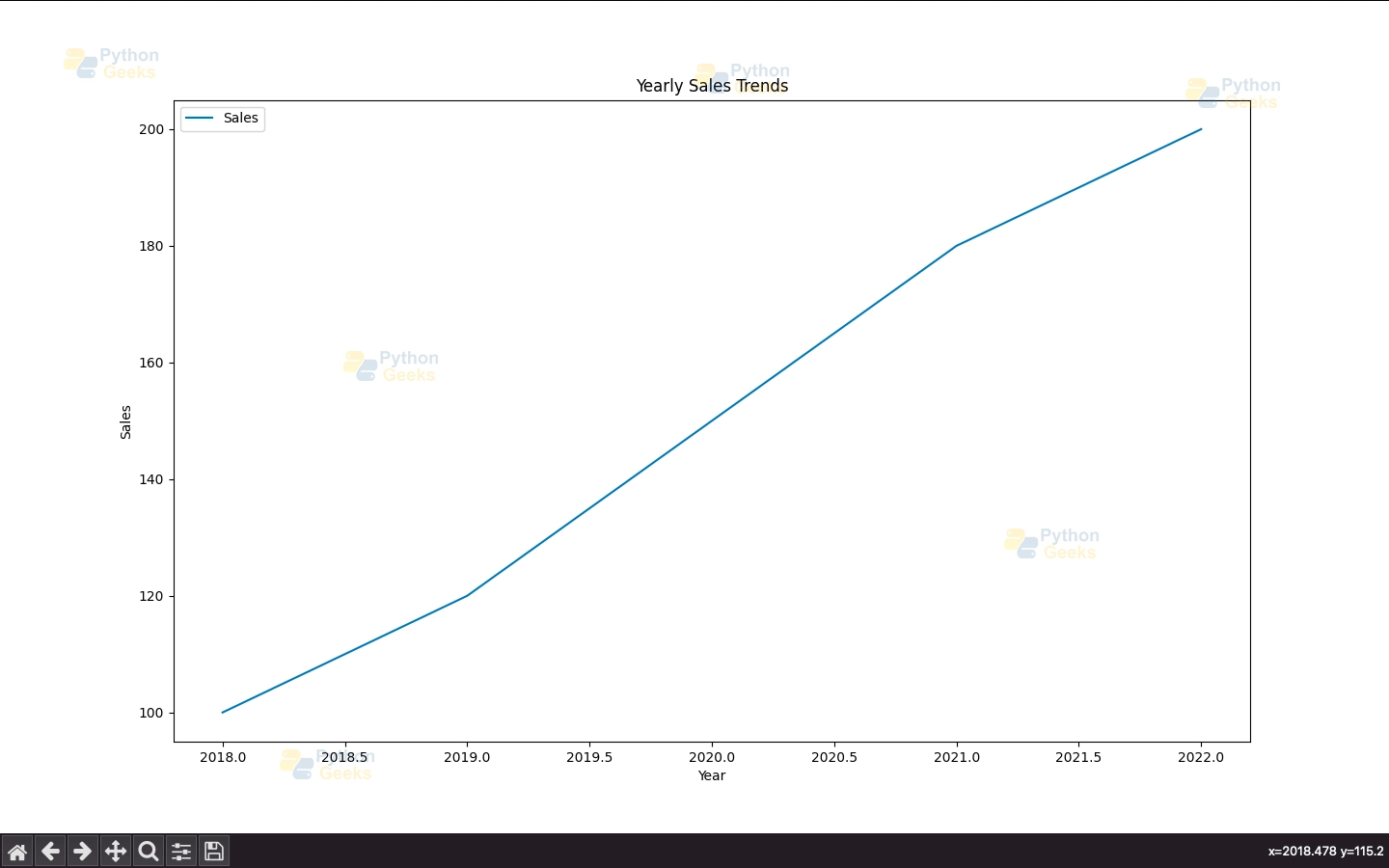

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Creating a DataFrame

data = {'Year': [2018, 2019, 2020, 2021, 2022],

'Sales': [100, 120, 150, 180, 200]}

df = pd.DataFrame(data)

# Plotting a line plot using Pandas with Matplotlib backend

df.plot(x='Year', y='Sales', kind='line')

plt.title('Yearly Sales Trends')

plt.xlabel('Year')

plt.ylabel('Sales')

plt.show()

# Plotting a scatter plot with Seaborn, passing the DataFrame directly

sns.scatterplot(data=df, x='Year', y='Sales')

plt.title('Yearly Sales Scatter Plot')

plt.xlabel('Year')

plt.ylabel('Sales')

plt.show()


Making Visualisation Less Complicated: Getting Insights Directly from DataFrames

One thing that makes Pandas stand out is how easily it creates insightful plots from DataFrame objects. By calling the ‘plot()’ method on a DataFrame, users can create line plots, scatter plots, bar plots, histograms, and more without complex data manipulation or preprocessing.

# Creating a DataFrame

data = {'Month': ['Jan', 'Feb', 'Mar', 'Apr', 'May'],

'Temperature': [10, 15, 20, 25, 30]}

df = pd.DataFrame(data)

# Plotting a bar plot directly from DataFrame

df.plot(x='Month', y='Temperature', kind='bar', color='skyblue')

plt.title('Monthly Temperature Trends')

plt.xlabel('Month')

plt.ylabel('Temperature (°C)')

plt.show()

Pandas works well with Matplotlib and Seaborn, making visualisation possible directly from DataFrame objects. Its intuitive plotting methods give users flexibility and ease of use, whether they are examining temporal trends, statistical distributions, or categorical data.

Summary


Pandas has emerged as a fast, in-demand library that empowers its users to deal effectively with a wide range of data challenges. It provides sound data structures and tools for managing data with little effort, along with extensive support for time series analysis, input/output operations, and data visualisation. All of this comes in one package that lets users unlock the value in structured data.

Equipped with Pandas' tools and functionality, data practitioners can start to understand underlying trends and draw well-founded conclusions. Whether cleaning messy datasets, conducting sophisticated analyses, or visualising complex trends, Pandas streamlines the data analysis workflow, so that practitioners can focus on what truly matters: gathering information carefully and reaching practical conclusions.

At the end of this tour of Pandas' features, it is worth remembering how broad the library's scope is. As data analysis continues to evolve, Pandas remains a steadfast ally for work that involves judgment, craft, and curiosity.