Python Pandas Ecosystem

Pandas is like Excel for Python, with a V8 engine under the hood. This powerful library is an integral part of most Python data analysis workflows today. Most people who work with data in Python have heard of Pandas, and for good reason: it changes how you work with tabular data.

Pandas has two main data structures: Series and DataFrames. A Series is a one-dimensional object, like a list of strings or numbers. A DataFrame is comparable to a database table or an Excel sheet, with rows and columns of data.

So why is Pandas so essential? Suppose you have a dataset with many rows and columns. Imagine how tedious it would be to rummage through this data manually. With Pandas, you need only a few short commands to carry out most such tasks.

Looking for a way to narrow your data down to certain rows or columns? Pandas has you covered. Want to sort or group your data according to certain rules? Not a problem. Pandas also provides tools for cleaning up messy data, such as outdated or duplicated records.

Pandas can do much more than tidy and sort data; it is powerful for analysis as well. Need basic statistics such as means and medians? Pandas can compute them. Prefer a visual representation to get a good picture of the data? Pandas can help with that too.

Core Features of Pandas

The two primary data structures in Pandas are Series and DataFrames. Let us look at each of them and see how they make it easy to work with tabular data.

1. Series

You can think of a Series as a one-dimensional array that can hold any type of data, such as strings, integers, floats, or even Python objects.

It’s like a column in a spreadsheet, or a one-dimensional array in NumPy or another programming language.

Using the ‘pd.Series()’ constructor, you can turn a list, array, or dictionary into a Series.

import pandas as pd

# Creating a Series from a list

data = [1, 2, 3, 4, 5]

series = pd.Series(data)

print(series)

Output :

0 1

1 2

2 3

3 4

4 5

dtype: int64
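The list example above covers only one of the inputs the constructor accepts. As a minimal sketch, here is how a dictionary and a NumPy array could be turned into a Series. The names `prices` and `scores` and their values are made up for illustration.

```python
import numpy as np
import pandas as pd

# From a dictionary: the keys become the index labels
prices = pd.Series({"apple": 1.2, "banana": 0.5, "cherry": 3.0})

# From a NumPy array, with explicit index labels
scores = pd.Series(np.array([90, 85, 77]), index=["a", "b", "c"])

# Values can then be looked up by label instead of by position
print(prices["banana"])
print(scores.loc["c"])
```

Looking values up by label rather than by position is what sets a Series apart from a plain list.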

2. DataFrame

A DataFrame is a two-dimensional, table-based data structure made of rows and columns, like a spreadsheet or a database table. It’s the most commonly used Pandas object and works well with structured data.

You can build a DataFrame from lists, dictionaries, NumPy arrays, or even other DataFrames.

import pandas as pd

# Creating a DataFrame from a dictionary

data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'],

'Age': [25, 30, 35, 40],

'Salary': [50000, 60000, 70000, 80000]}

df = pd.DataFrame(data)

print(df)

Output

Name Age Salary

0 Alice 25 50000

1 Bob 30 60000

2 Charlie 35 70000

3 David 40 80000
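Besides a dictionary of columns, a DataFrame can be built from other inputs. The sketch below, with made-up sample values, shows two of them: a list of row dictionaries and a NumPy array with column names supplied by hand.

```python
import numpy as np
import pandas as pd

# From a list of dictionaries: each dictionary becomes one row
records = [{"Name": "Alice", "Age": 25}, {"Name": "Bob", "Age": 30}]
df_from_records = pd.DataFrame(records)

# From a 2-D NumPy array: column names are passed explicitly
matrix = np.array([[1, 2], [3, 4], [5, 6]])
df_from_array = pd.DataFrame(matrix, columns=["x", "y"])

print(df_from_records.shape)         # (rows, columns)
print(df_from_array["y"].tolist())   # a single column as a Python list
```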

Efficient Handling of Tabular Data

These data structures make it easier to work with tabular data in several ways:

Flexibility: Series and DataFrames can hold different data types in the same structure, letting you represent data in many different ways.

Labeling and Indexing: Every Series and DataFrame has an index that gives each row or column a label. This makes it easy to access, modify, and align data.

Vectorized Operations: Because Pandas uses vectorized operations, you can operate on entire columns or rows at once without writing explicit loops. This makes computations faster.

Functionality: Pandas has many functions and methods for manipulating, analyzing, and displaying data. This makes it a go-to tool for Python users who need to work with tabular data.

Overall, Series and DataFrames let you work with tabular data in Python efficiently. They make cleaning, manipulating, and analyzing data easy and natural. Because they are so flexible and have so many uses, data scientists, analysts, and others working with data in Python rely on them heavily.
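The points about labels and vectorized operations can be sketched in a few lines. This is an illustrative example only; the product names, prices, and the `discount` Series are made up.

```python
import pandas as pd

df = pd.DataFrame(
    {"Price": [10.0, 20.0, 30.0], "Quantity": [3, 1, 2]},
    index=["pen", "book", "bag"],
)

# Label-based access: pick a cell by row label and column name
book_price = df.loc["book", "Price"]

# Vectorized operation: multiply two whole columns without a loop
df["Total"] = df["Price"] * df["Quantity"]

# Alignment: the discount Series has a different order and no "book" entry,
# so values are matched by label and the missing label is treated as 0
discount = pd.Series({"bag": 5.0, "pen": 1.0})
df["Discounted"] = df["Total"].sub(discount, fill_value=0)

print(df)
```

Because the subtraction is matched on labels, the order of the entries in `discount` does not matter.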

Data Manipulation with Pandas

Pandas has many functions for working with data, which make it easy to filter, sort, group, and merge your data. Let’s look at some key capabilities and see how they can be used in real life.

1. Filtering Data

Filtering lets you select subsets of your data based on certain criteria.

import pandas as pd

# Creating a DataFrame

data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'],

'Age': [25, 30, 35, 40],

'Department': ['HR', 'IT', 'Finance', 'IT']}

df = pd.DataFrame(data)

# Filter rows where Age is greater than 30

filtered_df = df[df['Age'] > 30]

print("Filtered DataFrame:")

print(filtered_df)

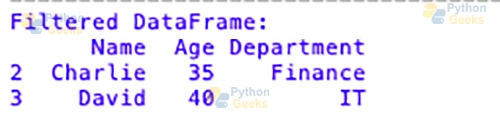

Output :
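Filters are often built from more than one condition. As a rough sketch on the same sample data, conditions can be combined with `&`, matched against a list of values with `isin`, and paired with a column selection through `loc`.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David"],
    "Age": [25, 30, 35, 40],
    "Department": ["HR", "IT", "Finance", "IT"],
})

# Combine conditions with & (and) or | (or); each condition needs parentheses
it_over_30 = df[(df["Age"] > 30) & (df["Department"] == "IT")]

# Keep rows whose value appears in a list
hr_or_finance = df[df["Department"].isin(["HR", "Finance"])]

# Filter rows and select columns in one step with .loc
names_and_ages = df.loc[df["Age"] >= 30, ["Name", "Age"]]

print(it_over_30)
print(hr_or_finance)
print(names_and_ages)
```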

2. Sorting Data

Sorting lets you change the order of rows based on the values in one or more columns.

# Sort DataFrame by Age in descending order

sorted_df = df.sort_values(by='Age', ascending=False)

print("Sorted DataFrame:")

print(sorted_df)
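Sorting by more than one column works the same way. The sketch below rebuilds the sample data so it runs on its own, then sorts by Department in ascending order and by Age in descending order within each department.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David"],
    "Age": [25, 30, 35, 40],
    "Department": ["HR", "IT", "Finance", "IT"],
})

# Pass lists to sort by several columns, each with its own direction
multi_sorted = df.sort_values(
    by=["Department", "Age"], ascending=[True, False]
).reset_index(drop=True)  # renumber the rows after sorting

print(multi_sorted)
```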

3. Grouping Data

You can divide the data into groups based on certain criteria and then apply aggregate functions to each group.

# Group DataFrame by Department and calculate average Age in each group

grouped_df = df.groupby('Department')['Age'].mean()

print("Grouped DataFrame:")

print(grouped_df)
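A group can be summarized with several aggregate functions at once. As a small sketch on the same sample data, `agg` takes a list of function names and returns one column per function.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David"],
    "Age": [25, 30, 35, 40],
    "Department": ["HR", "IT", "Finance", "IT"],
})

# One row per department, one column per aggregate
summary = df.groupby("Department")["Age"].agg(["mean", "min", "max", "count"])

print(summary)
```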

4. Merging Data

Merging combines two DataFrames based on a shared column, much like a join in SQL.

# Create another DataFrame

other_data = {'Name': ['Alice', 'Eve', 'Bob'],

'Salary': [50000, 55000, 60000]}

other_df = pd.DataFrame(other_data)

# Merge the two DataFrames based on 'Name' column

merged_df = pd.merge(df, other_df, on='Name', how='inner')

print("Merged DataFrame:")

print(merged_df)
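The `how` argument decides which rows survive a merge. The sketch below uses the same sample names to compare inner, left, and outer merges. The `indicator=True` option adds a `_merge` column that records where each row came from.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David"],
    "Age": [25, 30, 35, 40],
})
other_df = pd.DataFrame({
    "Name": ["Alice", "Eve", "Bob"],
    "Salary": [50000, 55000, 60000],
})

# inner: only names present in both DataFrames
inner = pd.merge(df, other_df, on="Name", how="inner")

# left: every row of df, with NaN where no salary is known
left = pd.merge(df, other_df, on="Name", how="left")

# outer: every name from either side, tagged with its origin
outer = pd.merge(df, other_df, on="Name", how="outer", indicator=True)

print(len(inner), len(left), len(outer))
print(outer)
```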

These are just a few examples of how powerful Pandas is for working with data. Pandas makes it quick and easy to work with your data in Python, whether you are cleaning up messy data, aggregating it, or combining multiple datasets.

Data Analysis with Pandas

Pandas is excellent at both manipulating and analyzing data. It’s particularly good at descriptive statistics, data aggregation, and dealing with missing data and outliers. Let’s look at some real-world examples of how Pandas makes these tasks easier.

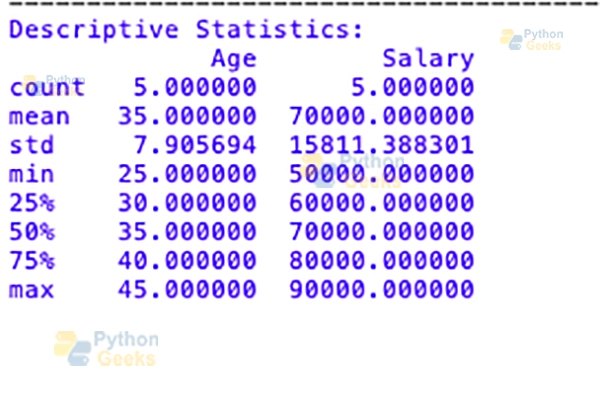

1. Descriptive statistics

Pandas has many descriptive statistics functions that help you understand your data’s shape, central tendency, and dispersion.

import pandas as pd

# Creating a DataFrame with sample data

data = {'Age': [25, 30, 35, 40, 45],

'Salary': [50000, 60000, 70000, 80000, 90000]}

df = pd.DataFrame(data)

# Compute descriptive statistics

print("Descriptive Statistics:")

print(df.describe())
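Each figure that `describe()` reports can also be computed on its own. The sketch below uses the same sample data to pull out a few of them, along with the correlation between the two columns.

```python
import pandas as pd

df = pd.DataFrame({
    "Age": [25, 30, 35, 40, 45],
    "Salary": [50000, 60000, 70000, 80000, 90000],
})

mean_age = df["Age"].mean()                   # central tendency
median_salary = df["Salary"].median()
salary_std = df["Salary"].std()               # sample standard deviation (ddof=1)
correlation = df["Age"].corr(df["Salary"])    # Pearson correlation

# describe() returns a DataFrame, so single rows can be selected by label
summary = df.describe()
print(summary.loc[["mean", "50%"]])
```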

2. Data Aggregation

You can easily aggregate data in Pandas by grouping it and applying functions like sum, mean, median, and so on to each group.

# Group DataFrame by Age and calculate average Salary for each age group

grouped_df = df.groupby('Age')['Salary'].mean()

print("Data Aggregation:")

print(grouped_df)

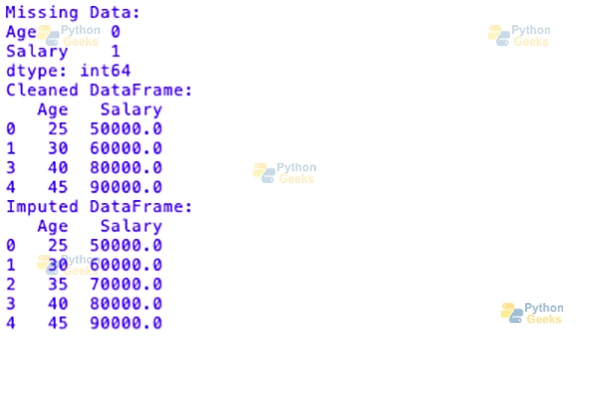
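In the sample data every age appears only once, so each group holds a single row. One way to get more meaningful groups is to bin the ages first. This is a sketch only: the bin edges and labels below are arbitrary choices for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    "Age": [25, 30, 35, 40, 45],
    "Salary": [50000, 60000, 70000, 80000, 90000],
})

# Bucket ages into bands; by default each interval includes its right edge
df["AgeBand"] = pd.cut(
    df["Age"], bins=[20, 30, 40, 50], labels=["21-30", "31-40", "41-50"]
)

# Apply several aggregate functions to each band
band_stats = df.groupby("AgeBand", observed=True)["Salary"].agg(
    ["sum", "mean", "median"]
)

print(band_stats)
```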

3. Dealing with Missing Data

Pandas has functions for detecting, removing, and imputing missing values, so you can handle missing data gracefully.

# Introducing missing data (cast to float first so the column can hold NaN)

df['Salary'] = df['Salary'].astype(float)

df.loc[2, 'Salary'] = None

# Check for missing values

print("Missing Data:")

print(df.isnull().sum())

# Drop rows with missing values

df_cleaned = df.dropna()

# Impute missing values with mean

df_imputed = df.fillna(df.mean())

print("Cleaned DataFrame:")

print(df_cleaned)

print("Imputed DataFrame:")

print(df_imputed)
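Different columns often call for different treatment. The sketch below uses made-up values with one gap in each column. It fills each column with its own statistic, and separately drops only the rows that lack a salary.

```python
import pandas as pd

df = pd.DataFrame({
    "Age": [25, None, 35, 40],
    "Salary": [50000.0, 60000.0, None, 80000.0],
})

# Count missing values per column
missing_counts = df.isna().sum()

# Fill each column with its own statistic by passing a dictionary
filled = df.fillna({"Age": df["Age"].median(), "Salary": df["Salary"].mean()})

# Drop rows only when a specific column is missing
salary_known = df.dropna(subset=["Salary"])

print(missing_counts)
print(filled)
print(salary_known)
```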

4. Dealing with Outliers

Outliers can throw off your analysis. However, Pandas gives you ways to detect and handle them, such as trimming or winsorization.

# Introducing outliers

df.loc[4, 'Salary'] = 150000

# Winsorization to cap outliers

Q1 = df['Salary'].quantile(0.25)

Q3 = df['Salary'].quantile(0.75)

IQR = Q3 - Q1

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

df['Salary'] = df['Salary'].clip(lower_bound, upper_bound)

print("DataFrame after Winsorization:")

print(df)
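With only a handful of rows, the IQR bounds can be wide enough that nothing is actually clipped. The sketch below repeats the same capping steps on a made-up set of salaries with one extreme value, so the effect is visible.

```python
import pandas as pd

# Hypothetical salaries with one extreme value at the end
salaries = pd.Series(
    [50000, 52000, 55000, 58000, 60000, 62000, 65000, 300000], dtype="float64"
)

q1 = salaries.quantile(0.25)
q3 = salaries.quantile(0.75)
iqr = q3 - q1

lower_bound = q1 - 1.5 * iqr
upper_bound = q3 + 1.5 * iqr

# Flag the values outside the bounds, then cap them
is_outlier = (salaries < lower_bound) | (salaries > upper_bound)
capped = salaries.clip(lower_bound, upper_bound)

print(salaries[is_outlier])
print(capped)
```

Here only the last value lies outside the bounds, so it is the only one replaced by the upper bound.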

These examples show how Pandas makes it easy for data analysts to compute descriptive statistics, aggregate data, and deal with missing data and outliers. By using Pandas’ functions, you can extract useful insights from your data while making sure it is accurate and reliable.

Integration with Other Libraries

Pandas works well with many other Python libraries, which increases its usefulness and makes it a strong tool for data analysis. Let’s look at how Pandas works with popular libraries like NumPy, Matplotlib, and Scikit-learn, with some examples of how they work together.

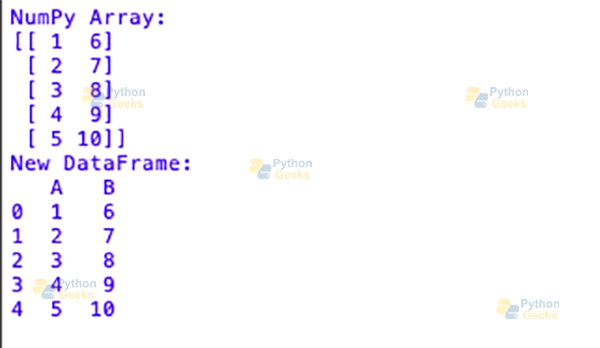

1. Integration with NumPy

Pandas and NumPy go together like peanut butter and jelly. Pandas provides higher-level data structures on top of NumPy arrays. Switching between Pandas DataFrames/Series and NumPy arrays is easy, so the two work together without trouble.

import pandas as pd

import numpy as np

# Create a Pandas DataFrame

data = {'A': [1, 2, 3, 4, 5],

'B': [6, 7, 8, 9, 10]}

df = pd.DataFrame(data)

# Convert DataFrame to NumPy array

np_array = df.to_numpy()

print("NumPy Array:")

print(np_array)

# Create a Pandas DataFrame from a NumPy array

new_df = pd.DataFrame(np_array, columns=['A', 'B'])

print("New DataFrame:")

print(new_df)
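Converting back and forth is often unnecessary, because NumPy functions can be applied to Pandas objects directly. A small sketch with made-up values:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"A": [1, 4, 9], "B": [16, 25, 36]})

# A NumPy universal function applied to a DataFrame returns a DataFrame,
# so the row and column labels are preserved
roots = np.sqrt(df)

# Dropping down to a plain array is still useful for pure NumPy routines
col_means = df.to_numpy().mean(axis=0)

print(roots)
print(col_means)
```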

2. Integration with Matplotlib

Many people use Matplotlib to make graphs and charts in Python. Pandas and Matplotlib work together seamlessly, so you can plot Pandas data directly.

import matplotlib.pyplot as plt

# Plot a DataFrame column using Matplotlib

df['A'].plot(kind='bar')

plt.title('Bar Plot of Column A')

plt.xlabel('Index')

plt.ylabel('Values')

plt.show()
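A whole DataFrame can also be drawn onto a Matplotlib axes that you create yourself, which gives more control over the figure. This is a sketch only: the `Agg` backend line is an assumption so that the script runs without a display, and it can be dropped in an interactive session.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: headless backend, no window is opened
import matplotlib.pyplot as plt
import pandas as pd

df = pd.DataFrame({"A": [1, 2, 3, 4, 5], "B": [6, 7, 8, 9, 10]})

# Create the figure and axes first, then let Pandas draw on them
fig, ax = plt.subplots()
df.plot(kind="line", ax=ax, marker="o")  # one line per column

ax.set_title("Columns A and B")
ax.set_xlabel("Index")
ax.set_ylabel("Values")
fig.tight_layout()
```

From here, `fig.savefig(...)` or `plt.show()` can be used to save or display the result.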

3. Integration with Scikit-learn

Scikit-learn is a very useful Python library for machine learning. Pandas can be used to clean up and prepare data for Scikit-learn’s machine learning tasks.

from sklearn.linear_model import LinearRegression

# Create a Pandas DataFrame with sample data

data = {'X': [1, 2, 3, 4, 5],

'Y': [2, 4, 6, 8, 10]}

df = pd.DataFrame(data)

# Extract features and target variable

X = df[['X']]

y = df['Y']

# Fit a linear regression model using Scikit-learn

model = LinearRegression()

model.fit(X, y)

# Make predictions

predictions = model.predict(X)

print("Predictions:")

print(predictions)
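A fitted model can then be applied to new rows held in another DataFrame. The sketch below uses the same toy data, where Y is exactly twice X. The `new_data` values are made up for illustration.

```python
import pandas as pd
from sklearn.linear_model import LinearRegression

df = pd.DataFrame({"X": [1, 2, 3, 4, 5], "Y": [2, 4, 6, 8, 10]})

# Fit on a DataFrame of features and a Series target
model = LinearRegression().fit(df[["X"]], df["Y"])

# Predict for unseen rows; the feature column keeps the same name
new_data = pd.DataFrame({"X": [6, 7]})
new_data["Predicted_Y"] = model.predict(new_data[["X"]])

print(model.coef_, model.intercept_)
print(new_data)
```

Storing the predictions as a new column keeps each prediction next to the input that produced it.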

As you can see, Pandas works well with many well-known Python libraries, including NumPy, Matplotlib, and Scikit-learn. You can use their capabilities to make your data analysis tasks easier. Whether you want to manipulate data, build visualizations, or carry out machine learning tasks, Pandas is a flexible and effective foundation for your data analysis work.

Pandas Ecosystem

Along with its core features, Pandas has a thriving ecosystem of extensions and libraries that make it even more useful and simplify many different data analysis tasks. Let’s look at some popular Pandas extensions and libraries:

1. Pandas-profiling

The pandas-profiling library (since renamed ydata-profiling) turns Pandas DataFrames into comprehensive reports that automate exploratory data analysis (EDA). These reports provide information about data types, missing values, how the data is distributed, correlations, and more. This saves a lot of time when first exploring a dataset.

2. Pandasql

With pandasql, Pandas DataFrames can be queried in a way that closely resembles SQL. Users familiar with SQL can write SQL queries directly against DataFrames, which lets them bring SQL syntax into their data analysis workflows with little effort.
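As a rough sketch, a pandasql query might look like the following. pandasql is a third-party package that is assumed here to be installed separately, so the import is guarded and the SQL part is skipped when the package is missing. The plain-Pandas version of the same query is shown alongside for comparison, and the sample data is made up.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "David"],
    "Age": [25, 30, 35, 40],
})

query = "SELECT Name, Age FROM df WHERE Age > 30 ORDER BY Age DESC"

# Plain-Pandas equivalent of the SQL query above
pandas_result = (
    df.query("Age > 30")[["Name", "Age"]]
    .sort_values("Age", ascending=False)
    .reset_index(drop=True)
)

# pandasql is optional: assumed installed with `pip install pandasql`
try:
    from pandasql import sqldf
except ImportError:
    sqldf = None

sql_result = None
if sqldf is not None:
    # The second argument maps table names used in the query to DataFrames
    sql_result = sqldf(query, {"df": df})

print(pandas_result)
print(sql_result)
```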

3. Pdvega

pdvega lets you use Vega and Altair to visualize Pandas DataFrames interactively. With it, users can make interactive plots from Pandas DataFrames without changing the data format first. You can build many different interactive visualizations with pdvega to help you explore and share your data more effectively.

4. Geopandas

Geopandas adds support for geospatial data analysis to Pandas. Users can read, write, and manipulate geospatial data with it, which lets them perform various spatial operations and visualizations right in Pandas DataFrames. Geopandas comes in handy when working with geographic information systems (GIS) and spatial analysis.

These extensions and libraries are just a small part of the large ecosystem that supports Pandas and meets the needs of the many people who work in data analysis. Many tools in the Pandas ecosystem can improve your data analysis workflows. They can make exploratory data analysis easier, let you use SQL-like querying, produce interactive visualizations, or work with geospatial data.

Summary

In short, the Python Pandas ecosystem offers a whole range of tools and libraries for data analysts and scientists, making data analysis and visualization simpler and more efficient.

From its core functions for data manipulation and exploratory analysis to extensions such as pandasql for SQL-like querying, pandas-profiling for automated EDA, pdvega for interactive visualization, and geopandas for geographic information systems, it covers a wide range of data-related tasks.

Because Pandas keeps data in a structured form, it integrates with other popular libraries such as NumPy, Matplotlib, and Scikit-learn. This lets data practitioners draw more insight from their data and shorten their analysis workflows. Whether you are a professional data scientist or a beginner, mastering Pandas and its wider ecosystem is well worth the effort, because so much of data science depends on it.