Python Pandas Ecosystem for Machine Learning


Working with data is a core skill in data science and machine learning; it underpins every later step of the journey. Pandas builds on NumPy to provide a comprehensive library for exactly this task. Its simple interface and rich functionality have made it a popular choice for data science and analytics.

This article introduces you to Python Pandas and highlights its importance in a data scientist’s daily workflow. From its beginnings as an open-source project to its current status as a foundation of the data science community, Pandas has kept evolving to meet the needs of data scientists.

We’ll guide you through the most important features and capabilities of Pandas so that you can make full use of your data. From data wrangling and complex transformations to in-depth analysis, Python Pandas is a trusted ally in the quest for data-driven insight.

Understanding the Pandas Data Structures: Series and DataFrames

In Pandas, Series and DataFrames are the two essential data structures that make it easy to work with and analyse data.

Series

A Pandas Series is a one-dimensional, array-like object that holds a sequence of values together with a corresponding array of labels. This array of labels is called the index.

import pandas as pd

# Creating a Series from a list

data = [10, 20, 30, 40, 50]

s = pd.Series(data)

print(s)
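As a small illustration of the index described above, the sketch below builds a Series with explicit labels instead of the default 0, 1, 2 positions. The weekday labels and temperature values are made up for the example.

```python
import pandas as pd

# Hypothetical data: temperatures in Celsius keyed by weekday label
temps = pd.Series([21.5, 23.0, 19.8], index=["mon", "tue", "wed"])

print(temps["tue"])           # look up a value by its index label
print(temps.index.tolist())   # the labels that make up the index

# Arithmetic is applied element-wise and the labels stay attached
temps_f = temps * 9 / 5 + 32
print(temps_f)
```

Because the labels travel with the values, later operations such as filtering or arithmetic keep each value tied to its label.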

DataFrame

A DataFrame is a labelled two-dimensional data structure whose columns can hold different types. You can think of it as either an Excel sheet or a SQL table.

# Creating a DataFrame from a dictionary

data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'],

'Age': [25, 30, 35, 40],

'City': ['New York', 'Los Angeles', 'Chicago', 'Houston']}

df = pd.DataFrame(data)

print(df)

How Indexing and Selection Work in Pandas

Indexing

# Label-based indexing

print(df.loc[1]) # Access row with index label 1

# Integer-based indexing

print(df.iloc[1]) # Access row at position 1

# Boolean indexing

print(df[df['Age'] > 30]) # Filter rows where Age > 30

Pandas offers several methods for indexing data, including boolean indexing, label-based indexing (‘.loc[]’), and integer-based indexing (‘.iloc[]’).
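The difference between label-based and integer-based indexing is easiest to see when the index labels do not match the row positions. The sketch below uses a made-up frame whose labels are 10, 20, and 30.

```python
import pandas as pd

people = pd.DataFrame(
    {"Name": ["Alice", "Bob", "Charlie"], "Age": [25, 30, 35]},
    index=[10, 20, 30],  # labels deliberately differ from positions 0, 1, 2
)

by_label = people.loc[20]              # row whose index label is 20
by_position = people.iloc[0]           # first row, regardless of its label
over_28 = people[people["Age"] > 28]   # boolean mask keeps matching rows

print(by_label["Name"], by_position["Name"], over_28["Name"].tolist())
```

With the default index the two accessors often return the same row, which is why mixing them up can go unnoticed until the index changes.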

Selection

In Pandas, selection is applied to elements, rows, or columns of a Series or DataFrame.

# Accessing specific elements

print(s[0]) # Access element at index 0

print(df['Name']) # Access column 'Name'

# Accessing rows and columns simultaneously

print(df.loc[0, 'Age']) # Access Age of row with index 0

print(df.iloc[0, 1]) # Access Age of row at position 0

# Adding new column

df['Gender'] = ['Female', 'Male', 'Male', 'Male']

print(df)

To manipulate and examine data correctly, you need to know how to use Pandas’ Series and DataFrames, as well as its indexing and selection techniques. Grasping these key ideas and methods lets you get the most out of Pandas in your data-driven projects.

Exploring Data with Pandas: Unveiling the Power of Data Handling

In the vast field of data analysis, Python Pandas is a crucial tool for quickly exploring, cleaning, and preprocessing data. In this section, we’ll look at several ways to explore data with Pandas. We’ll discuss how to read data from different sources, handle missing values, normalise data, and perform basic data exploration and descriptive statistics.

Reading Data from Various Sources

Pandas has many useful functions for reading data from various sources, such as databases, CSV files, and Excel spreadsheets.

import pandas as pd

# Reading from CSV

df_csv = pd.read_csv('data.csv')

# Reading from Excel

df_excel = pd.read_excel('data.xlsx')

# Reading from database

import sqlite3

conn = sqlite3.connect('example.db')

df_db = pd.read_sql_query("SELECT * FROM table_name", conn)
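The snippet above needs files such as 'data.csv' and 'example.db' to exist. As a self-contained sketch of the same reading functions, the example below uses an in-memory CSV string and an in-memory SQLite database instead; the table name "scores" and its contents are invented for the illustration.

```python
import io
import sqlite3

import pandas as pd

# In-memory CSV text stands in for a file such as 'data.csv'
csv_text = "name,score\nAda,90\nLin,85\n"
df_csv = pd.read_csv(io.StringIO(csv_text))

# An in-memory SQLite database stands in for 'example.db'
conn = sqlite3.connect(":memory:")
df_csv.to_sql("scores", conn, index=False)  # write the frame as a table
df_db = pd.read_sql_query("SELECT name, score FROM scores WHERE score > 86", conn)
conn.close()

print(df_csv.shape)
print(df_db)
```

Pushing the filter into the SQL query means only the matching rows are loaded into the DataFrame.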

Data Cleaning and Preprocessing

Pandas has practical tools for cleansing and preprocessing data, including tools for handling missing values, normalising data, and more.

# Handling missing values

df_dropped = df.dropna() # Drop rows with missing values (returns a new DataFrame)

df_filled = df.fillna(0) # Fill missing values with 0 (returns a new DataFrame)

df['column'] = df['column'].fillna(df['column'].mean()) # Fill missing values with column mean

# Data normalization (MinMaxScaler expects numeric columns only)

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()

df_normalized = pd.DataFrame(scaler.fit_transform(df), columns=df.columns)
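To make the effect of these calls concrete, here is a minimal sketch on a tiny made-up frame. It applies min-max scaling with plain Pandas arithmetic rather than scikit-learn's MinMaxScaler, purely so the formula is visible; the column names and values are assumptions.

```python
import numpy as np
import pandas as pd

raw = pd.DataFrame({"height": [1.0, np.nan, 3.0], "weight": [10.0, 20.0, np.nan]})

missing_per_column = raw.isna().sum()   # count missing values per column

dropped = raw.dropna()                  # new frame; raw itself is unchanged
filled = raw.fillna(raw.mean())         # fill each column with its own mean

# Min-max scaling written out by hand: (x - min) / (max - min)
scaled = (filled - filled.min()) / (filled.max() - filled.min())

print(missing_per_column)
print(filled)
print(scaled)
```

Note that `dropna` and `fillna` return new objects, so the result has to be assigned to a name to be kept.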

Basic Data Exploration and Descriptive Statistics

Pandas allows you to explore large quantities of data and calculate descriptive statistics that provide information about the dataset’s properties.

# Summary statistics

print(df.describe())

# Data visualization

import matplotlib.pyplot as plt

df['column'].plot.hist(bins=20)

plt.show()

# Correlation matrix (numeric columns only)

print(df.corr(numeric_only=True))
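Descriptive statistics are often more useful per group than for the whole table. The sketch below, on invented sales figures, compares `describe()` for one column with a grouped mean.

```python
import pandas as pd

# Hypothetical data for illustration
sales = pd.DataFrame({
    "region": ["north", "north", "south", "south"],
    "units": [10, 14, 7, 9],
})

overall = sales["units"].describe()                    # count, mean, std, min, quartiles, max
per_region = sales.groupby("region")["units"].mean()   # mean units within each region

print(overall)
print(per_region)
```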

Data scientists and analysts can gain a deeper understanding of their datasets by using Pandas’ practical features for data handling, exploration, and preprocessing. This enables them to make more informed choices and conduct more effective analyses. Pandas remains one of the most essential tools for data analysis because it is straightforward to use and has many powerful features that help users get the most out of their data.

Integrating Pandas with Machine Learning: Bridging Data Preparation and Model Training

Preparing data is an essential part of model training in machine learning. Python Pandas is a robust framework for connecting data preparation with model training. This section guides you through preparing data for machine learning tasks, using Pandas for feature engineering, and splitting data into training and testing sets, with code examples along the way.

Preparing Data for Machine Learning Tasks

import pandas as pd

from sklearn.datasets import load_iris

# Load Iris dataset

iris = load_iris()

df = pd.DataFrame(iris.data, columns=iris.feature_names)

df['target'] = iris.target

# Separate features and target variable

X = df.drop('target', axis=1)

y = df['target']

Feature Engineering with Pandas

# Create new features

df['sepal_ratio'] = df['sepal length (cm)'] / df['sepal width (cm)']

# Transform data

df['petal_area'] = df['petal length (cm)'] * df['petal width (cm)']

Splitting Data into Training and Testing Sets

from sklearn.model_selection import train_test_split

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
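To show what an 80/20 split does to the rows, the sketch below performs a comparable split with Pandas alone, using `DataFrame.sample` in place of scikit-learn's `train_test_split`. The ten-row frame is made up, and this is an illustration of the idea rather than a replacement for the scikit-learn function.

```python
import pandas as pd

# Hypothetical data: ten rows with one feature and a binary target
data = pd.DataFrame({"feature": range(10), "target": [0, 1] * 5})

# Hold out 20% of the rows at random; random_state makes the draw repeatable
test = data.sample(frac=0.2, random_state=42)
train = data.drop(test.index)   # everything that was not sampled

print(len(train), len(test))
```

The two parts never share a row, which is what keeps the test score an honest estimate of performance on unseen data.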

Example Code:

import pandas as pd

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# Load Iris dataset

iris = load_iris()

df = pd.DataFrame(iris.data, columns=iris.feature_names)

df['target'] = iris.target

# Create new features

df['sepal_ratio'] = df['sepal length (cm)'] / df['sepal width (cm)']

df['petal_area'] = df['petal length (cm)'] * df['petal width (cm)']

# Separate features and target variable (done after feature engineering so X includes the new columns)

X = df.drop('target', axis=1)

y = df['target']

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train Random Forest classifier

clf = RandomForestClassifier(random_state=42)

clf.fit(X_train, y_train)

# Predict on test data

y_pred = clf.predict(X_test)

# Calculate accuracy

accuracy = accuracy_score(y_test, y_pred)

print("Accuracy:", accuracy)

This example demonstrates how seamlessly Pandas fits into machine learning tasks. It’s easy to build robust machine learning pipelines when you use Pandas for data preprocessing and feature engineering, and scikit-learn for training and evaluating models.

API for Pandas

pandas-datareader: pandas-datareader makes it easier to access economic and financial data for machine learning tasks. Users can retrieve stock prices, financial indicators, and other economic metrics from online sources such as Google Finance and the Federal Reserve Economic Data (FRED) database, depending on which data readers the installed release supports. It’s a very useful tool for economists and those who study quantitative finance, as it makes analysing complex data much easier.

PandaSDMX: PandaSDMX makes it simpler to retrieve and exchange statistical data and metadata that comply with the SDMX 2.1 standard. Many statistical agencies and central banks use this standard. It’s mainly helpful for data scientists who work with official statistics or macroeconomic data, as it makes it easy to access structured datasets and metadata, which can be necessary for a comprehensive analysis.

FredAPI: fredapi provides a Python interface to the Federal Reserve Economic Data (FRED) from the Federal Reserve Bank of St. Louis. It gives you access to both the FRED and ALFRED databases. fredapi enables economists and researchers to retrieve and examine economic time series data, giving them insight into the financial trends and indicators that inform their decisions.

Geopandas: Geopandas extends Pandas data objects to include geographic information, letting users analyse and display spatial data. It’s vital for tasks that use geographical data, such as mapping, spatial clustering, and identifying spatial patterns. It also helps analysts who work with geospatial datasets conduct more accurate analyses.

Gurobipy-Pandas: Gurobipy-Pandas provides a way to connect Pandas to Gurobipy, a library for mathematical optimisation. This integration speeds up the process of building optimisation models directly from Pandas data structures. This makes it easier for operations researchers and analysts to use data-driven strategies to formulate and solve complex optimisation problems.

Staircase: Staircase is a data analysis package for modelling and manipulating mathematical step functions. It is built on Pandas and NumPy. It’s useful for analysing data that changes in steps, such as cumulative distributions and categorical variables. It has several operations that can be used to discover and understand patterns in this type of data.

Xarray: xarray brings Pandas’ labelled-data capabilities to N-dimensional arrays, giving you tools for working with the multidimensional datasets that are common in the physical sciences. It’s useful for exploring and analysing large datasets, such as climate records and simulation results. It comes with a wide range of tools that scientists and researchers can use on multidimensional arrays.

Machine Learning Workflows with Pandas: scikit-learn

Regression Analysis with Pandas and scikit-learn:

Regression analysis is a method for modelling how two or more variables are related. It is often used in machine learning to estimate a continuous target variable based on one or more input features.

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split # Importing train_test_split function

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

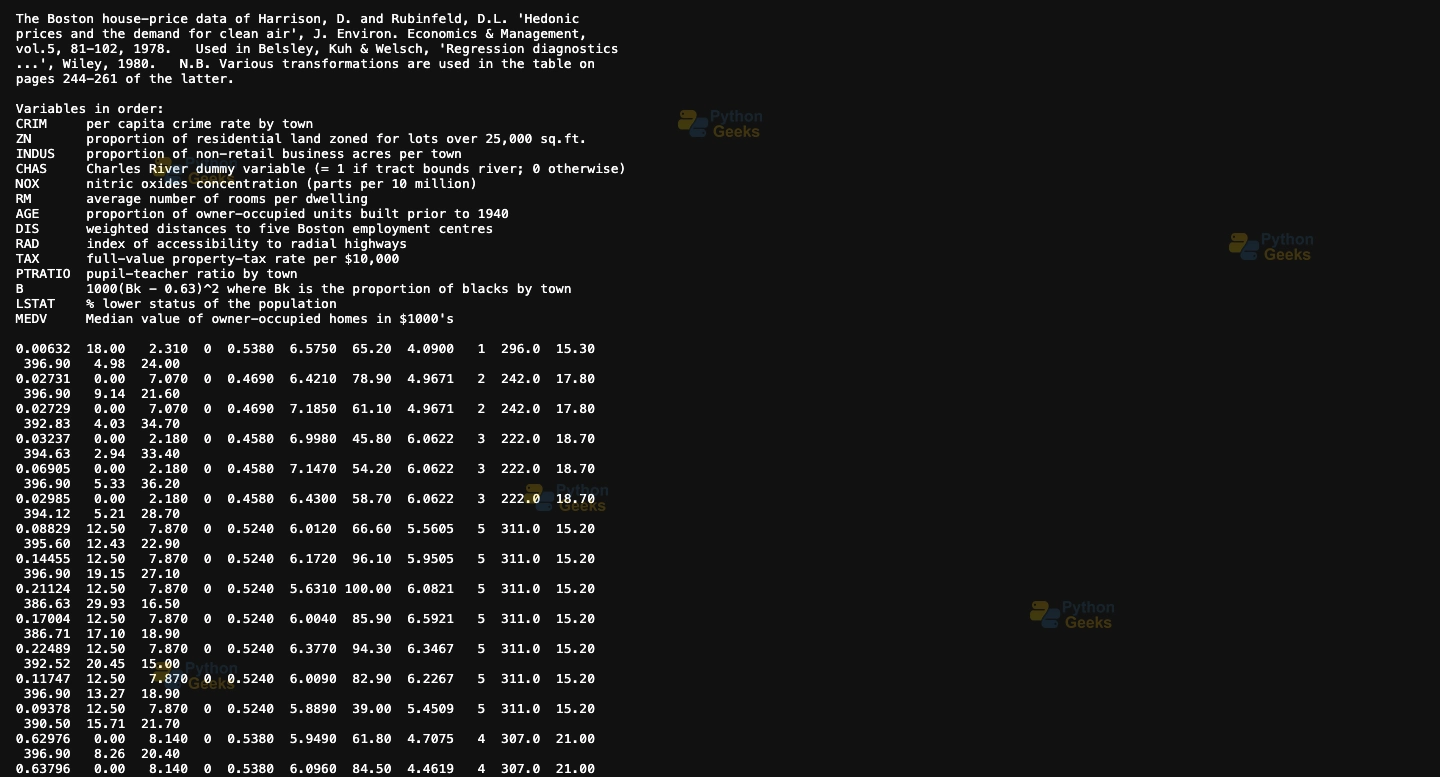

# Fetching Boston housing dataset from the original source

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

# Creating DataFrame

df = pd.DataFrame(data, columns=['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT'])

df['target'] = target

# Separate features and target variable

X = df.drop('target', axis=1)

y = df['target']

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train Linear Regression model

model = LinearRegression()

model.fit(X_train, y_train)

# Predict on test data

y_pred = model.predict(X_test)

# Calculate Mean Squared Error

mse = mean_squared_error(y_test, y_pred)

print("Mean Squared Error:", mse)

Sample Dataset:

Output:
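The regression example above reports a mean squared error. As a separate, minimal sketch of what that number measures, the code below computes it by hand with NumPy on made-up true and predicted values (these are not results from the housing model).

```python
import numpy as np

# Hypothetical true and predicted values, for illustration only
y_true = np.array([3.0, 5.0, 2.0, 7.0])
y_hat = np.array([2.5, 5.0, 4.0, 8.0])

# MSE is the average of the squared residuals
mse_manual = np.mean((y_true - y_hat) ** 2)

# The square root puts the error back in the units of the target
rmse = np.sqrt(mse_manual)

print(mse_manual, rmse)
```

Because the residuals are squared, a single large miss (here the third value) dominates the total.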

Classification Tasks using Pandas and scikit-learn:

Classification is a type of supervised learning where the goal is to predict the label of new data based on labelled examples seen before.

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# Load dataset using Pandas

df = pd.read_csv('/Users/satchit/Desktop/DataFlair/annual-enterprise-survey-2021-financial-year-provisional-csv.csv') # Replace this path with the path to your own dataset file

# Split data into features (X) and target variable (y)

X = pd.get_dummies(df.drop(columns=['Variable_code'])) # One-hot encode text columns, since scikit-learn estimators need numeric features

y = df['Variable_code']

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train the Random Forest classifier

clf = RandomForestClassifier(random_state=42)

clf.fit(X_train, y_train)

# Predict on test data

y_pred = clf.predict(X_test)

# Calculate accuracy to evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print("Accuracy:", accuracy)

Model Evaluation and Validation Using Pandas:

Model evaluation and validation are critical steps in the machine learning process, ensuring that the trained model performs well on data it hasn’t seen before.

Example Code (using cross-validation):

from sklearn.model_selection import cross_val_score

# Perform K-fold cross-validation on the Random Forest classifier

scores = cross_val_score(clf, X, y, cv=5)

print("Cross-Validation Scores:", scores)

print("Mean Accuracy:", scores.mean())

The “cross_val_score” function from scikit-learn performs K-fold cross-validation here. The model’s mean accuracy across all folds serves as the evaluation metric. Adjust the metric to suit your problem and model.
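One way to change the metric is the `scoring` parameter of `cross_val_score`. The sketch below is a self-contained illustration on the Iris dataset rather than the survey data used above; the choice of `"f1_macro"` and of 50 trees is an assumption made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Load features and target as Pandas objects
X_iris, y_iris = load_iris(return_X_y=True, as_frame=True)
forest = RandomForestClassifier(n_estimators=50, random_state=42)

# Same model and folds, scored with two different metrics
acc = cross_val_score(forest, X_iris, y_iris, cv=5, scoring="accuracy")
f1 = cross_val_score(forest, X_iris, y_iris, cv=5, scoring="f1_macro")

print("Mean accuracy:", acc.mean())
print("Mean macro F1:", f1.mean())
```

Macro-averaged F1 weights every class equally, which can matter more than accuracy when the classes are imbalanced.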

These examples demonstrate how Pandas and scikit-learn can be used together effortlessly for various data analysis tasks, including regression analysis, classification, and model assessment.

Statsmodels: Statistical Modelling with Pandas

Statsmodels is a Python library that lets you model and analyse data. It provides tools for testing hypotheses, estimating statistical models, and performing inferential statistics. Statsmodels complements Pandas by offering access to more advanced statistical methods for data analysis.

Features:

1. Statistical Models: It supports many models, including time-series models, generalised linear models, and linear regression models.

2. Hypothesis Testing: It runs hypothesis tests to assess the significance of model coefficients and to compare models.

3. Time-Series Analysis: It has tools for decomposing time series, analysing autocorrelation, and fitting ARIMA models.

4. Diagnostic Tools: It offers diagnostic plots and tests to check model assumptions and model fit.

Applications:

1. Econometrics: It is used to model the economy, evaluate policies, and analyse financial data.

2. Biostatistics: It is used to analyse biomedical data, observational studies, and clinical trials.

3. Social Sciences: It is used to model social networks, survey data, and cultural phenomena.

4. Data Science: It complements machine learning by supporting statistical inference and model interpretation.

Statsmodels is a versatile library for statistical modelling in Python that offers advanced tools for exploring data and drawing conclusions from it. Users can conduct rigorous statistical analyses and gain useful insights from their data by combining Statsmodels with Pandas and other Python libraries.

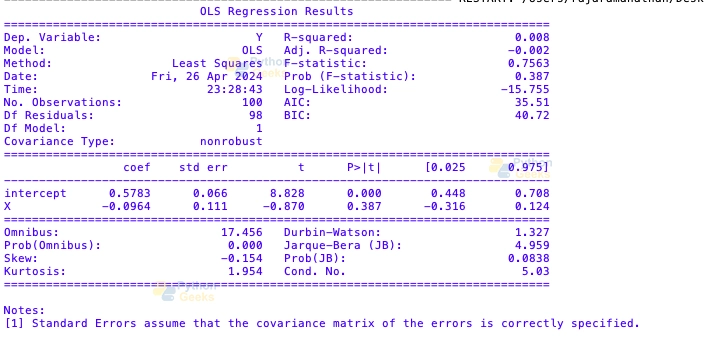

Here’s an example:

import pandas as pd

import numpy as np

import statsmodels.api as sm

# Create a sample DataFrame

data = {

'X': np.random.rand(100), # Independent variable

'Y': np.random.rand(100) # Dependent variable

}

df = pd.DataFrame(data)

# Add a constant term for the intercept

df['intercept'] = 1

# Perform ordinary least squares (OLS) regression

model = sm.OLS(df['Y'], df[['intercept', 'X']])

results = model.fit()

# Print regression results summary

print(results.summary())

Output:

In this example:

- We create a sample DataFrame with two columns, ‘X’ and ‘Y’, which serve as the independent and dependent variables, respectively.

- We add a constant column (‘intercept’) to the DataFrame. It represents the intercept of our model.

- sm.OLS() from the Statsmodels package sets up an ordinary least squares (OLS) regression. We pass it the ‘intercept’ and ‘X’ columns as regressors, so ‘Y’ is regressed on both the constant and ‘X’.

- We print the summary of the regression results by calling results.summary(), which reports the coefficients, standard errors, t-statistics, p-values, and goodness-of-fit measures (R-squared, F-statistic, etc.).
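To see where the coefficients and R-squared in such a summary come from, the sketch below computes a least-squares fit with NumPy instead of Statsmodels. It is an illustration of the estimate, not of how Statsmodels is implemented, and it uses a noise-free line (intercept 2, slope 3) chosen so the expected answer is known in advance.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)        # fixed seed so the run is repeatable
frame = pd.DataFrame({"X": rng.random(100)})
frame["Y"] = 2.0 + 3.0 * frame["X"]   # assumed relationship, no noise
frame["intercept"] = 1.0              # constant column, as in the example above

# Least-squares solution for Y = b0 * intercept + b1 * X
design = frame[["intercept", "X"]].to_numpy()
coef, *_ = np.linalg.lstsq(design, frame["Y"].to_numpy(), rcond=None)

# R-squared: share of the variance in Y explained by the fit
fitted = design @ coef
ss_res = np.sum((frame["Y"] - fitted) ** 2)
ss_tot = np.sum((frame["Y"] - frame["Y"].mean()) ** 2)
r_squared = 1 - ss_res / ss_tot

print(coef, r_squared)
```

In the Statsmodels example both columns are independent random draws, so a slope near zero and a low R-squared would be the expected outcome there.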

Advanced Topics in Pandas for Machine Learning

Time Series Analysis with Pandas

Time series analysis involves studying data collected over time to extract meaningful insights and patterns. Pandas provides powerful tools for efficiently handling time series data.

import pandas as pd

# Read time series data into DataFrame

df = pd.read_csv('time_series_data.csv', parse_dates=['date_column'], index_col='date_column')

# Resample data to daily frequency

daily_data = df.resample('D').sum()

# Compute rolling mean

rolling_mean = df['value_column'].rolling(window=30).mean()

# Plot time series data and rolling mean

import matplotlib.pyplot as plt

plt.plot(df.index, df['value_column'], label='Original Data')

plt.plot(rolling_mean.index, rolling_mean, label='Rolling Mean')

plt.xlabel('Date')

plt.ylabel('Value')

plt.title('Time Series Data with Rolling Mean')

plt.legend()

plt.show()
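The example above depends on a CSV file with particular column names. As a self-contained sketch of the same two operations, the code below builds a short daily series in memory, resamples it into two-day bins, and computes a three-day rolling mean. The dates and values are invented.

```python
import pandas as pd

# Hypothetical daily readings: values 1..6 on six consecutive days
idx = pd.date_range("2024-01-01", periods=6, freq="D")
ts = pd.DataFrame({"value": range(1, 7)}, index=idx)

two_day = ts.resample("2D").sum()               # aggregate into 2-day bins
rolling = ts["value"].rolling(window=3).mean()  # mean of the current and 2 previous days

print(two_day)
print(rolling)
```

The first `window - 1` entries of a rolling result are NaN because there are not yet enough observations to fill the window.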

Handling Big Data with Pandas and Dask

Pandas is highly efficient for working with moderately sized datasets, but it may struggle with larger datasets that exceed available memory. Dask, a parallel computing library, seamlessly integrates with Pandas to handle big data.

import dask.dataframe as dd

# Read large dataset using Dask

ddf = dd.read_csv('big_data.csv')

# Perform operations on Dask DataFrame

result = ddf.groupby('column_name').mean().compute()
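When Dask is not available, a related technique within Pandas itself is to read a file in chunks and combine partial results. The sketch below is that alternative, not a Dask example; it uses an in-memory CSV string and computes grouped sums, since sums (unlike means) can simply be added across chunks.

```python
import io

import pandas as pd

# In-memory CSV text stands in for a file too large to load at once
csv_text = "group,value\na,1\nb,2\na,3\nb,4\na,5\n"

partials = []
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2):
    # Each chunk is an ordinary DataFrame holding at most 2 rows
    partials.append(chunk.groupby("group")["value"].sum())

# Combine the per-chunk sums into one total per group
totals = pd.concat(partials).groupby(level=0).sum()
print(totals)
```

Only one chunk is held in memory at a time, at the cost of writing the combine step yourself.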

Parallel Computing and Optimisation Techniques

Pandas inherently performs operations sequentially, which may lead to performance bottlenecks on large datasets. Utilising parallel computing and optimisation techniques can significantly improve computation speed.

import pandas as pd

import swifter

# Read data into Pandas DataFrame

df = pd.read_csv('large_data.csv')

# Apply function in parallel using swifter; custom_function is a placeholder for your own function

result = df['column_name'].swifter.apply(custom_function)
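Before reaching for parallelism, it is often worth checking whether the operation can be vectorised, that is, expressed on whole columns rather than row by row. The sketch below contrasts the two styles on a made-up price table.

```python
import pandas as pd

# Hypothetical data for illustration
prices = pd.DataFrame({"price": [10.0, 20.0, 30.0], "qty": [1, 2, 3]})

# Row-by-row: calls a Python function once per row
slow = prices.apply(lambda row: row["price"] * row["qty"], axis=1)

# Vectorised: a single operation over whole columns
fast = prices["price"] * prices["qty"]

print(fast.tolist())
```

Both produce the same values, but the vectorised form avoids a Python-level function call per row and usually scales much better.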

These advanced topics demonstrate how Pandas can be extended to handle time series analysis, big data, and optimise performance using parallel computing techniques. Incorporating these techniques into your workflow can enhance productivity and efficiency when working with complex datasets in machine learning tasks.

Text Extensions

Text Extensions for Pandas provides extension types to cover common data structures for representing natural language data, as well as library integrations that convert the outputs of popular natural language processing libraries into Pandas DataFrames.

Accessors

A directory of projects providing extension accessors. This is for users to discover new accessors and for library authors to coordinate on the namespace.

| Library | Accessor | Classes |
| --- | --- | --- |
| awkward-pandas | ak | Series |
| pdvega | vgplot | Series, DataFrame |
| pandas-genomics | genomics | Series, DataFrame |
| pint-pandas | pint | Series, DataFrame |
| physipandas | physipy | Series, DataFrame |
| composeml | slice | DataFrame |
| gurobipy-pandas | gppd | Series, DataFrame |
| staircase | sc | Series, DataFrame |
| woodwork | slice | Series, DataFrame |
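Accessors like those in the table are registered through Pandas' extension API. The sketch below registers a toy DataFrame accessor; the namespace "demo" and the method `numeric_columns` are invented for the illustration and do not belong to any of the listed libraries.

```python
import pandas as pd


@pd.api.extensions.register_dataframe_accessor("demo")  # "demo" is a made-up namespace
class DemoAccessor:
    def __init__(self, pandas_obj):
        # Pandas passes in the DataFrame the accessor is called on
        self._obj = pandas_obj

    def numeric_columns(self):
        """Return the names of columns with a numeric dtype."""
        return self._obj.select_dtypes(include="number").columns.tolist()


table = pd.DataFrame({"a": [1, 2], "b": ["x", "y"], "c": [0.5, 1.5]})
print(table.demo.numeric_columns())
```

Since every accessor lives under its own attribute name, two libraries that pick the same name would clash, which is why a shared directory of names is useful.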

Summary

To conclude, Pandas has the capabilities needed to be a preferred library for data manipulation and analysis in machine learning. Its foundation is basic data handling.

At the simple end, it covers small tasks such as previewing data. At the advanced end, it extends to time series analysis, big data handling, and optimisation.

With Pandas, data scientists and analysts can streamline their work and draw relevant conclusions from datasets of varying complexity and size, once the data has been appropriately prepared. From data exploration and preprocessing to evaluating model performance, Pandas is a key tool in the hands of a data professional.

Over time, Pandas has become part of the machine learning workflow and continues to evolve to address new challenges. It offers an intuitive interface, extensive documentation, and active community support. The library thus brings users to the heart of data handling, helping them solve a wide range of real-world data problems efficiently and effectively.

In the fast-moving data science ecosystem, Pandas remains a faithful companion for data scientists who want to uncover what their data holds and obtain the insights that shape the future development of machine learning.