Kagent is the first open-source agentic AI framework purpose-built for Kubernetes environments. Developed by Solo.io and contributed to the Cloud Native Computing Foundation (CNCF), Kagent moves beyond traditional automation toward reasoning-capable agents that can diagnose, troubleshoot, and resolve complex operational problems with little or no human intervention.

Unlike conventional automation tools that execute predetermined scripts, Kagent leverages Large Language Models (LLMs) and the Model Context Protocol (MCP) to create intelligent agents capable of multi-step reasoning, dynamic problem-solving, and adaptive decision-making within your Kubernetes clusters.

The Critical Problems Kagent Solves

1. Exponential Operational Complexity in Cloud-Native Ecosystems

Modern cloud-native stacks have become increasingly complex, with organizations typically running:

- Kubernetes for container orchestration

- Istio or Cilium for service mesh management

- Prometheus and Grafana for observability

- Argo CD/Flux for GitOps deployments

- Helm for package management

- Multiple CNCF projects across different layers

Each component solves critical problems but introduces operational overhead. Teams spend countless hours context-switching between tools, correlating data across systems, and manually troubleshooting issues that span multiple infrastructure layers.

Kagent’s Solution: Provides autonomous agents with deep knowledge of the entire cloud-native ecosystem. Instead of engineers manually querying Prometheus, checking pod logs, examining Gateway configurations, and correlating service mesh traffic patterns, Kagent agents perform this reconnaissance autonomously and provide actionable insights or execute fixes directly.

2. The Context Loss Problem in DevOps Workflows

Traditional ChatGPT/LLM-based troubleshooting follows a frustrating pattern:

- Copy error message → Paste into ChatGPT

- Receive suggested fix → Apply in cluster

- New error appears → Return to step 1

- Repeat until resolution (with no memory of previous attempts)

This workflow suffers from:

- No cluster state awareness: The LLM cannot see your actual infrastructure

- No action capability: Engineers must manually execute every suggestion

- Context fragmentation: Each interaction starts from zero

- No validation loop: No way to verify if suggestions actually work

Kagent’s Solution: Runs inside your Kubernetes cluster with direct access to cluster state, APIs, and observability data. Agents maintain context across interactions, can execute commands, validate results, and iterate on solutions autonomously. This transforms passive AI assistance into active, autonomous operations.
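The difference between the copy-paste loop and an agent that keeps context and validates its own actions can be sketched in a few lines. This is a minimal illustration, not Kagent's implementation: `AgentSession`, `run_loop`, and the `fake_cluster` stand-in are hypothetical names, and the candidate fixes are made up for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Attempt:
    """One remediation attempt and whether validation passed."""
    action: str
    succeeded: bool


@dataclass
class AgentSession:
    """Keeps every attempt so later steps can see what was already tried."""
    history: List[Attempt] = field(default_factory=list)

    def tried(self, action: str) -> bool:
        return any(a.action == action for a in self.history)


def run_loop(
    candidates: List[str],
    apply_and_validate: Callable[[str], bool],
    session: Optional[AgentSession] = None,
    max_steps: int = 5,
) -> AgentSession:
    """Try candidate fixes in order, skip ones already attempted,
    and stop as soon as one passes validation."""
    if session is None:
        session = AgentSession()
    for action in candidates:
        if len(session.history) >= max_steps:
            break
        if session.tried(action):
            continue  # context is preserved: a failed fix is never repeated
        ok = apply_and_validate(action)
        session.history.append(Attempt(action, ok))
        if ok:
            break
    return session


# Hypothetical stand-in for "apply in cluster, then re-check cluster state".
def fake_cluster(action: str) -> bool:
    return action == "raise memory limit"


result = run_loop(
    ["restart pod", "restart pod", "raise memory limit", "roll back image"],
    fake_cluster,
)
print([(a.action, a.succeeded) for a in result.history])
```

The duplicate "restart pod" is skipped because the session remembers it, and the loop stops at the first fix that validates, which is exactly what the manual workflow cannot do.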

3. Tool Integration Hell for AI Agents

Building production-ready AI agents requires integrating with numerous external systems:

- Reading Kubernetes resources via kubectl

- Querying Prometheus metrics

- Parsing logs from multiple sources

- Interacting with Istio/Gateway APIs

- Managing Argo Rollouts

- Generating and validating YAML manifests

Each integration requires custom code, error handling, authentication, and ongoing maintenance. This “tool integration tax” prevents teams from rapidly building specialized agents for their unique operational needs.

Kagent’s Solution: Ships with production-ready MCP tools for the entire cloud-native ecosystem including Kubernetes, Istio, Helm, Argo, Prometheus, Grafana, and Cilium. All tools are implemented as Kubernetes Custom Resources (ToolServers), making them declaratively manageable and reusable across multiple agents. Teams can focus on agent logic rather than integration plumbing.

4. Lack of Observability in Agentic Systems

AI agents operate as black boxes, making it difficult to:

- Understand what decisions agents are making

- Debug unexpected behaviors

- Audit agent actions for compliance

- Measure agent effectiveness

- Identify performance bottlenecks

This opacity creates trust and security concerns in production environments.

Kagent’s Solution: Native OpenTelemetry tracing integration provides complete visibility into agent operations, including decision paths, tool invocations, LLM calls, and execution timelines. Platform teams can monitor agent behavior using existing observability stacks like Jaeger, Zipkin, or Grafana Tempo.

5. The “Infrastructure as Code” vs “Infrastructure as Agents” Gap

While Infrastructure as Code (IaC) revolutionized declarative infrastructure management, it remains fundamentally reactive. IaC tools ensure systems conform to declared state but cannot:

- Reason about why drift occurred

- Diagnose root causes of failures

- Predict and prevent issues proactively

- Handle novel situations outside predefined playbooks

Kagent’s Solution: Represents the evolution to Infrastructure as Agents – systems that don’t just enforce state but understand intent, reason about problems, and take autonomous corrective action. Agents can handle novel situations that would require human intervention in pure IaC workflows.

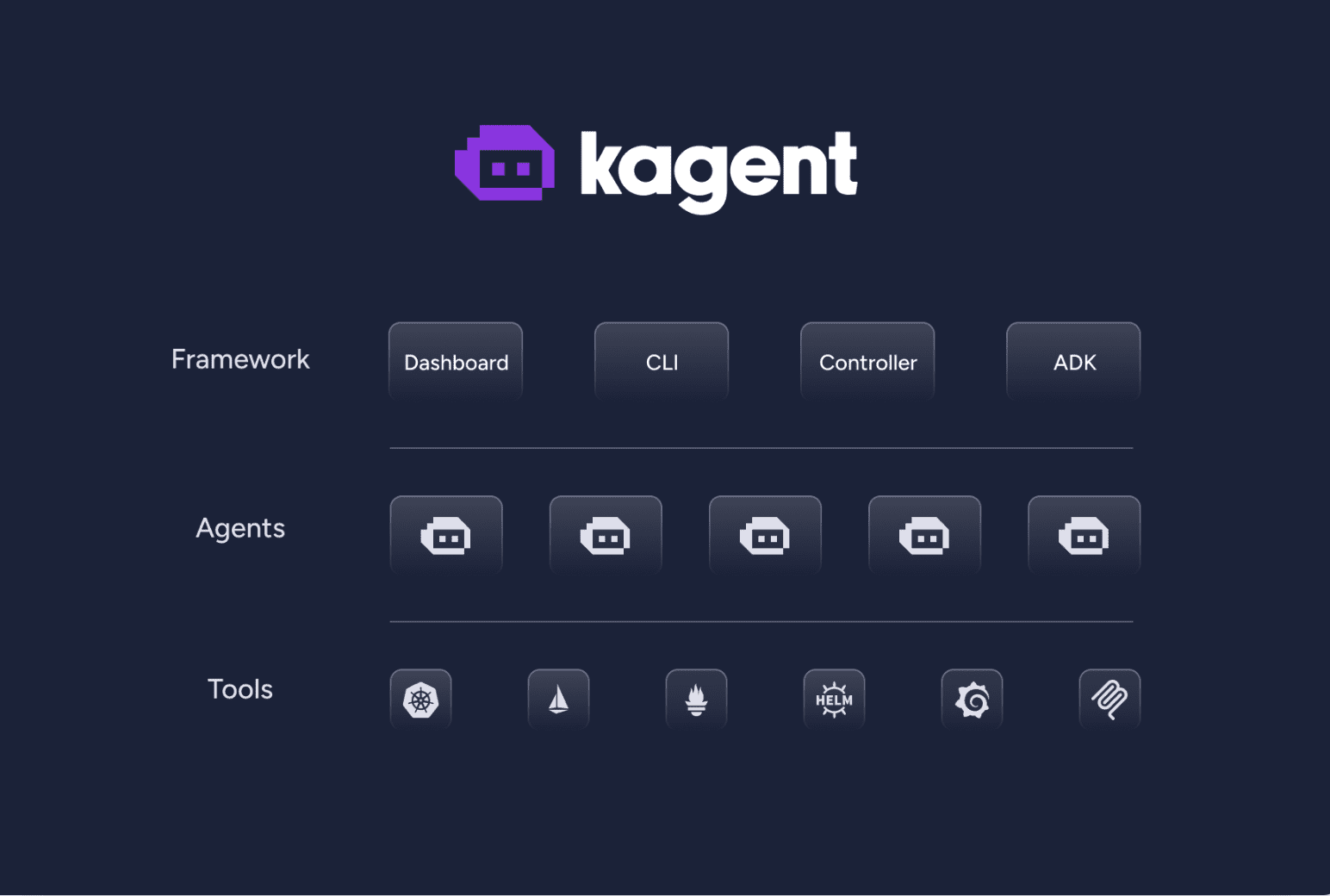

Kagent’s Technical Architecture: A Deep Dive

Kagent implements a three-layer architecture designed for extensibility, scalability, and Kubernetes-native operation:

Layer 1: Tools – The MCP Foundation

Tools in Kagent are MCP-compliant functions that agents invoke to interact with cloud-native systems. The framework provides:

Pre-Built Tool Categories:

- Kubernetes Operations: Get/Describe resources, retrieve logs, execute commands in pods, create resources from YAML

- Service Mesh Management: Analyze Gateway/HTTPRoute configurations, trace connection paths, debug traffic routing

- Observability: Execute PromQL queries, generate Grafana dashboards, analyze metrics trends

- GitOps Integration: Manage Argo CD applications, trigger rollouts, inspect deployment states

- Package Management: Query Helm charts, perform releases, rollback deployments

- Security: Implement Zero Trust policies, analyze RBAC configurations, scan for vulnerabilities

Tools as Kubernetes Resources:

apiVersion: kagent.dev/v1alpha2
kind: ToolServer
metadata:
  name: prometheus-tools
  namespace: kagent
spec:
  mcpServerUrl: "http://prometheus-mcp-server:8080"
  tools:
    - name: query_metrics
      description: "Execute PromQL queries against Prometheus"
    - name: analyze_trends
      description: "Analyze metric trends over time windows"

All tools are declaratively defined as Custom Resources, enabling:

- Version control: Store tool definitions in Git

- RBAC enforcement: Control which agents can access which tools

- Auditing: Track tool usage across the cluster

- Reusability: Share tools across multiple agents

Model Context Protocol (MCP) Integration:

Kagent leverages MCP for standardized tool communication, providing:

- Vendor independence: Tools work across different LLM providers

- Standardized schemas: Consistent tool descriptions for reliable agent reasoning

- Protocol flexibility: Support for Stdio, SSE, and HTTP transports

- Ecosystem compatibility: Use any MCP server from the growing ecosystem
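MCP tool invocations travel as JSON-RPC 2.0 messages using the `tools/call` method. The sketch below builds such a request; the helper name `build_tool_call` and the `query` argument are assumptions for illustration, while `query_metrics` is the tool name from the ToolServer example above.

```python
import json
from itertools import count
from typing import Any, Dict

# Monotonic request ids, as JSON-RPC expects each request to carry its own id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# The "query" argument name is hypothetical; a real server advertises
# its own input schema for each tool.
message = build_tool_call("query_metrics", {"query": "up"})
print(message)
```

Because every tool is called through the same envelope, an agent only needs the tool's name and input schema, regardless of which LLM provider drives it.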

Layer 2: Agents – Autonomous Reasoning Systems

Agents in Kagent are autonomous systems defined with natural language instructions and equipped with tools. Built on Microsoft AutoGen, they support:

Multi-Agent Collaboration:

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: network-troubleshooting-team
  namespace: kagent
spec:
  type: team
  agents:
    - name: diagnostics-agent
      role: "Diagnose network connectivity issues using service mesh data"
      tools:
        - istio-analyzer
        - prometheus-metrics
      modelConfig:
        name: claude-sonnet-4
    - name: remediation-agent
      role: "Implement fixes for identified network issues"
      tools:
        - kubernetes-manager
        - helm-controller
      modelConfig:
        name: claude-sonnet-4
  orchestration:
    pattern: sequential
    planningAgent: diagnostics-agent

Key Agent Capabilities:

- Planning and Execution: Agents decompose complex goals into executable steps

- Iterative Refinement: Validate results and adjust strategies based on outcomes

- Context Maintenance: Preserve state across multi-turn interactions

- Tool Selection: Dynamically choose appropriate tools for each sub-task

- Error Handling: Gracefully handle failures and retry with alternative approaches
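The error-handling behavior in the last bullet, retrying and then switching to an alternative approach, can be sketched as a fallback chain over tools. This is an illustrative sketch only: `invoke_with_fallback`, `metrics_tool`, and `logs_tool` are hypothetical names, not Kagent APIs.

```python
from typing import Callable, List, Tuple

Tool = Tuple[str, Callable[[str], str]]


def invoke_with_fallback(
    task: str, tools: List[Tool], retries_per_tool: int = 2
) -> Tuple[str, str, List[str]]:
    """Try each tool in order, retrying a few times before moving on.

    Returns (name of the tool that worked, its result, errors seen so far).
    """
    errors: List[str] = []
    for name, tool in tools:
        for attempt in range(1, retries_per_tool + 1):
            try:
                return name, tool(task), errors
            except Exception as exc:  # broad on purpose: any failure triggers fallback
                errors.append(f"{name} attempt {attempt}: {exc}")
    raise RuntimeError("all tools failed: " + "; ".join(errors))


# Hypothetical tools: the first always fails, the second succeeds.
def metrics_tool(task: str) -> str:
    raise TimeoutError("metrics backend unreachable")


def logs_tool(task: str) -> str:
    return f"log scan for {task!r}: OOMKilled"


used, result, errors = invoke_with_fallback(
    "checkout pod restarts",
    [("metrics", metrics_tool), ("logs", logs_tool)],
)
print(used, result, errors)
```

Collecting the errors rather than discarding them matters: they become context for the agent's next reasoning step.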

Agent Types:

- Diagnostic Agents: Analyze system state and identify issues

- Remediation Agents: Execute fixes and validate outcomes

- Observability Agents: Monitor systems and generate insights

- Security Agents: Enforce policies and detect threats

- Deployment Agents: Manage application lifecycles with canary/blue-green strategies

Layer 3: Framework – Declarative Control Plane

The framework layer provides multiple interfaces for managing agents:

1. Declarative YAML Manifests:

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: gateway-debugger
spec:
  description: "Debug Gateway and HTTPRoute configuration issues"
  tools:
    - gateway-analyzer
    - service-mesh-tracer
    - kubernetes-inspector
  systemPrompt: |
    You are an expert Istio Gateway troubleshooting agent.
    When investigating routing issues:
    1. Verify Gateway configuration for syntax errors
    2. Check HTTPRoute bindings to Gateways
    3. Validate backend Service endpoints
    4. Trace request path through service mesh
    5. Analyze VirtualService configurations
    Always provide actionable remediation steps.
  modelConfig:
    name: claude-sonnet-4-model
    provider: Anthropic

2. CLI Interface:

# Create agent from manifest
kagent agent create -f gateway-debugger.yaml

# Invoke agent with task
kagent agent run gateway-debugger \
  --task "My Gateway on example.com is returning 404 errors"

# Stream agent output
kagent agent logs gateway-debugger --follow

# List available tools
kagent tools list --server istio-mcp-server

3. Web UI Dashboard:

The Kagent UI provides:

- Visual agent builder with drag-and-drop tool assignment

- Real-time execution monitoring with streaming logs

- Tool catalog browser with inline documentation

- Agent performance metrics and execution history

- Model configuration management

4. Kubernetes Controller:

The Kagent controller watches Custom Resources and reconciles agent infrastructure:

- Deploys agent runtime environments

- Manages tool server connections

- Handles credential injection for LLM providers

- Implements retry logic and error recovery

- Collects telemetry and exposes Prometheus metrics
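The reconcile step a controller of this kind performs boils down to comparing declared resources with what is actually running and deriving the actions that close the gap. The sketch below shows that comparison under simplifying assumptions: resources are reduced to a name and a version string, and `reconcile` is a hypothetical function, not Kagent's controller code.

```python
from typing import Dict, List, Tuple


def reconcile(desired: Dict[str, str], observed: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return the (action, name) pairs needed to make `observed` match `desired`.

    Both maps go from resource name to a spec fingerprint (here, a version).
    """
    actions: List[Tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in observed:
            actions.append(("create", name))
        elif observed[name] != spec:
            actions.append(("update", name))
    for name in sorted(observed):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Agent names reuse examples from this article; "old-agent" is made up.
desired = {"gateway-debugger": "v2", "k8s-helper": "v1"}
observed = {"gateway-debugger": "v1", "old-agent": "v1"}
plan = reconcile(desired, observed)
print(plan)
```

Running the same function again after the plan is applied yields an empty list, which is the idempotence that makes reconciliation loops safe to repeat.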

Advanced Kagent Capabilities

1. Multi-Model Support

Kagent initially focused on OpenAI models; its roadmap adds support for:

- Claude Models: via Anthropic API

- Local Models: Ollama, LM Studio for air-gapped environments

- Azure OpenAI: For enterprise deployments

- Gemini: Google’s multimodal models

- Custom Models: Via OpenAI-compatible endpoints

Example: Local Ollama Integration:

apiVersion: kagent.dev/v1alpha2
kind: ModelConfig
metadata:
  name: llama3-local
  namespace: kagent
spec:
  model: llama3
  provider: Ollama
  ollama:
    host: http://ollama.ollama.svc.cluster.local:80

2. Canary Deployment Automation
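The health gate at the core of a canary rollout can be expressed as a small pure function. The sketch below is illustrative rather than Kagent's implementation: the `Metrics` type and `canary_decision` function are hypothetical, and the thresholds (error rate under 1%, p95 latency within 10% of baseline, no rise in the 5xx rate) are example values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    error_rate: float      # fraction of failed requests, 0.01 == 1%
    p95_latency_ms: float  # 95th percentile latency in milliseconds
    rate_5xx: float        # fraction of responses with a 5xx status


def canary_decision(baseline: Metrics, canary: Metrics) -> str:
    """Return "promote" only if every health check passes, else "rollback"."""
    healthy = (
        canary.error_rate < 0.01
        and canary.p95_latency_ms < baseline.p95_latency_ms * 1.10
        and canary.rate_5xx <= baseline.rate_5xx
    )
    return "promote" if healthy else "rollback"


baseline = Metrics(error_rate=0.002, p95_latency_ms=200.0, rate_5xx=0.001)
good_canary = Metrics(error_rate=0.004, p95_latency_ms=215.0, rate_5xx=0.001)
slow_canary = Metrics(error_rate=0.004, p95_latency_ms=230.0, rate_5xx=0.001)

print(canary_decision(baseline, good_canary))
print(canary_decision(baseline, slow_canary))
```

Keeping the gate deterministic, with the LLM deciding what to investigate rather than whether a threshold was crossed, makes rollback behavior easier to audit.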

Example agent workflow for safe production deployments:

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: canary-deployment-manager
spec:
  description: "Automate canary deployments with intelligent rollback"
  tools:
    - argo-rollouts
    - prometheus-analyzer
    - kubernetes-manager
  workflow:
    - stage: deploy
      instruction: "Deploy new version with 10% traffic split"
    - stage: validate
      instruction: |
        Monitor for 5 minutes checking:
        - Error rate < 1%
        - Latency p95 < baseline + 10%
        - No increase in 5xx errors
    - stage: decide
      instruction: |
        If all metrics healthy: Increase to 50% traffic
        If any metric unhealthy: Rollback immediately
    - stage: complete
      instruction: "Gradually increase to 100% or rollback based on continuous validation"

3. Zero Trust Security Implementation

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: zero-trust-enforcer
spec:
  description: "Implement Zero Trust security policies across service mesh"
  tools:
    - istio-policy-manager
    - kubernetes-rbac
    - mtls-validator
  schedule: "*/30 * * * *"  # Run every 30 minutes
  systemPrompt: |
    Enforce Zero Trust principles:
    1. Verify all service-to-service communication uses mTLS
    2. Ensure AuthorizationPolicy exists for every service
    3. Validate no default-allow rules in production namespaces
    4. Check RBAC policies follow least privilege
    Generate compliance report and auto-remediate violations where safe.

4. Intelligent Alerting and Incident Response

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: incident-responder
spec:
  description: "Autonomous incident response agent"
  triggers:
    - type: prometheus-alert
      alertname: HighErrorRate
    - type: prometheus-alert
      alertname: PodCrashLooping
  tools:
    - kubernetes-debugger
    - log-analyzer
    - prometheus-querier
    - slack-notifier
  systemPrompt: |
    When alert triggered:
    1. Gather context: recent deployments, config changes, related alerts
    2. Analyze logs for error patterns
    3. Check resource utilization (CPU, memory, network)
    4. Identify root cause candidates
    5. If safe remediation available: execute and validate
    6. Generate incident report to Slack with findings and actions taken

OpenTelemetry Tracing Integration

Kagent’s observability implementation:

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: traced-agent
spec:
  observability:
    tracing:
      enabled: true
      exporter: otlp
      endpoint: "tempo.observability.svc.cluster.local:4317"
      samplingRate: 1.0  # 100% sampling in dev, reduce in prod

Trace Data Captured:

- Agent decision trees and reasoning paths

- Tool invocation parameters and results

- LLM prompt/response pairs

- Execution timing and latency

- Error traces and retry attempts
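The parent/child structure behind this kind of trace data can be illustrated with a small stand-in tracer. The sketch uses only the Python standard library and is deliberately not the OpenTelemetry SDK; `MiniTracer` is a hypothetical class, and the span names mirror the ones used elsewhere in this article.

```python
import time
from contextlib import contextmanager
from typing import Any, Dict, Iterator, List


class MiniTracer:
    """Stdlib stand-in for a tracer; it is NOT the OpenTelemetry SDK."""

    def __init__(self) -> None:
        self.spans: List[Dict[str, Any]] = []   # all spans, in start order
        self._stack: List[Dict[str, Any]] = []  # spans that are currently open

    @contextmanager
    def span(self, name: str, **attrs: Any) -> Iterator[Dict[str, Any]]:
        record: Dict[str, Any] = {
            "name": name,
            "depth": len(self._stack),
            "parent": self._stack[-1]["name"] if self._stack else None,
            "attrs": attrs,
        }
        self.spans.append(record)
        self._stack.append(record)
        start = time.perf_counter()
        try:
            yield record
        finally:
            # Record timing even if the traced step raised an exception.
            record["duration_s"] = time.perf_counter() - start
            self._stack.pop()


tracer = MiniTracer()
with tracer.span("agent.execute"):
    with tracer.span("agent.planning"):
        with tracer.span("llm.call", model="claude-sonnet-4"):
            pass
    with tracer.span("tool.invoke", tool="prometheus-querier"):
        pass

for s in tracer.spans:
    print("  " * s["depth"] + s["name"], s["attrs"])
```

A real deployment would export these spans over OTLP instead of printing them, but the nesting and timing model is the same.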

Example Jaeger Trace View:

span: agent.execute
  span: agent.planning
    span: llm.call [model=claude-sonnet-4, tokens_in=1250, tokens_out=450]
  span: tool.invoke [tool=prometheus-querier]
    span: http.request [method=POST, url=http://prometheus:9090/api/v1/query]
  span: agent.reflection
    span: llm.call [model=claude-sonnet-4, tokens_in=2100, tokens_out=300]
  span: tool.invoke [tool=kubernetes-manager]

Real-World Use Cases

1. Multi-Cloud Kubernetes Management

Organization managing 50+ Kubernetes clusters across AWS EKS, Azure AKS, and GCP GKE.

Challenge: Different managed Kubernetes services have subtle API differences and require specialized knowledge.

Solution: Kagent agents with cloud-specific knowledge bases that abstract provider differences:

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: multi-cloud-manager
spec:
  tools:
    - eks-manager
    - aks-manager
    - gke-manager
    - kubernetes-universal
  knowledgeBase:
    - type: documentation
      source: eks-best-practices
    - type: documentation
      source: aks-operations-guide

2. Cost Optimization Through Intelligent Scaling

SaaS company needing dynamic resource optimization based on usage patterns.

Agent Workflow:

- Analyze Prometheus metrics for CPU/memory utilization trends

- Correlate with business metrics (active users, request volume)

- Identify over-provisioned workloads

- Generate scaling recommendations

- Execute approved recommendations via Horizontal Pod Autoscaler updates

- Monitor impact and adjust
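The "identify over-provisioned workloads" step in this workflow can be reduced to comparing what a workload requests with what it actually uses. The sketch below is one simple heuristic, not Kagent's logic: `Workload`, `recommend`, the 130% headroom, and the 100-millicore minimum saving are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Workload:
    name: str
    cpu_request_m: int  # requested CPU in millicores
    cpu_p95_m: int      # observed p95 CPU usage in millicores


def recommend(
    workloads: List[Workload], headroom_pct: int = 130, min_saving_m: int = 100
) -> Dict[str, int]:
    """Suggest a lower CPU request for workloads that request far more
    than p95 usage plus headroom. Returns {workload name: new request}."""
    recommendations: Dict[str, int] = {}
    for w in workloads:
        # Integer ceiling of p95 * headroom_pct / 100, avoiding float rounding.
        target = (w.cpu_p95_m * headroom_pct + 99) // 100
        if w.cpu_request_m - target >= min_saving_m:
            recommendations[w.name] = target
    return recommendations


# Made-up workloads for the example.
fleet = [
    Workload("checkout", cpu_request_m=2000, cpu_p95_m=400),
    Workload("api", cpu_request_m=500, cpu_p95_m=450),
    Workload("search", cpu_request_m=1000, cpu_p95_m=700),
]
recs = recommend(fleet)
print(recs)
```

Only `checkout` is flagged: `api` is already tight, and `search` would save less than the minimum, so changing it is not worth the churn.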

Result: 35% reduction in cloud costs without performance degradation.

3. Self-Healing Production Systems

E-commerce platform requiring 99.95% uptime during peak shopping periods.

Kagent Implementation:

- Health Monitoring Agent: Continuously validates critical path functionality

- Diagnostic Agent: Analyzes failures across logs, metrics, traces

- Remediation Agent: Executes fixes (pod restarts, cache clearing, circuit breaker resets)

- Escalation Agent: Pages on-call if automated remediation fails
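The hand-off between these agents, diagnose, attempt fixes, verify, and page a human only when automation fails, can be sketched as a single function. All names here (`handle_incident`, `restart_pods`, `reset_circuit_breaker`) and the simulated health state are hypothetical.

```python
from typing import Callable, Dict, List


def handle_incident(
    incident: str,
    diagnose: Callable[[str], str],
    remediations: Dict[str, List[Callable[[], None]]],
    is_healthy: Callable[[], bool],
    page_oncall: Callable[[str, str], None],
) -> str:
    """Diagnose, try each known fix for the cause, and escalate if none works."""
    cause = diagnose(incident)
    for fix in remediations.get(cause, []):
        fix()
        if is_healthy():  # validate after every fix before trying the next
            return f"resolved: {fix.__name__}"
    page_oncall(incident, cause)
    return "escalated"


# Simulated system state and paging log for the example.
state = {"healthy": False}
pages: List[str] = []


def restart_pods() -> None:
    pass  # has no effect in this simulation


def reset_circuit_breaker() -> None:
    state["healthy"] = True


outcome = handle_incident(
    "checkout 5xx spike",
    diagnose=lambda _incident: "stuck-circuit-breaker",
    remediations={"stuck-circuit-breaker": [restart_pods, reset_circuit_breaker]},
    is_healthy=lambda: state["healthy"],
    page_oncall=lambda inc, cause: pages.append(f"{inc} ({cause})"),
)
print(outcome, pages)
```

An unknown root cause has no registered fixes, so the function goes straight to paging, which keeps the automation from guessing in production.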

Result: 70% reduction in MTTR (Mean Time To Resolution) for common incidents.

Security Considerations and Best Practices

1. RBAC for Agents

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: diagnostic-agent-role
  namespace: production
rules:
  # Read-only access to pods and their logs
  - apiGroups: [""]
    resources: ["pods", "pods/log"]
    verbs: ["get", "list"]
  # RBAC is allow-only: pods/exec stays denied because no rule grants it.
  # A rule with an empty verbs list is rejected by the API server.

2. Tool Approval Workflows

For high-risk operations:

apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: production-deployment-agent
spec:
  approvalRequired: true
  approvers:
    - engineering-leads
    - sre-team
  tools:
    - kubernetes-manager  # Write operations require approval

3. Audit Logging

All agent actions are logged to immutable storage:

apiVersion: kagent.dev/v1alpha2
kind: KagentConfig
metadata:
  name: platform-config
spec:
  auditLog:
    enabled: true
    backend: s3
    bucket: kagent-audit-logs
    retention: 90d

4. Network Policies

Restrict agent network access:

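apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: kagent-agent-network-policy
spec:
  podSelector:
    matchLabels:
      app: kagent-agent
  policyTypes:
    - Egress
  egress:
    # Allow traffic to any pod in the kagent namespace.
    # kubernetes.io/metadata.name is set automatically on every namespace;
    # a plain "name" label exists only if someone added it.
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kagent
    # Allow traffic to Prometheus pods in the policy's own namespace
    - to:
        - podSelector:
            matchLabels:
              app: prometheus
    # Note: DNS egress (port 53) is not covered here and usually needs its own rule.

Getting Started with Kagent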

Prerequisites

- Kubernetes cluster (1.27+)

- Helm 3.x

- LLM API key (OpenAI, Anthropic, or local model)

Installation

1. Install Kagent CRDs:

helm install kagent-crds oci://ghcr.io/kagent-dev/kagent/helm/kagent-crds \
  --namespace kagent \
  --create-namespace

2. Configure LLM Provider:

export ANTHROPIC_API_KEY="your-api-key-here"

3. Install Kagent Platform:

helm upgrade --install kagent oci://ghcr.io/kagent-dev/kagent/helm/kagent \
  --namespace kagent \
  --set providers.default=anthropic \
  --set providers.anthropic.apiKey=$ANTHROPIC_API_KEY \
  --set ui.service.type=LoadBalancer

4. Access Kagent UI:

kubectl get service kagent-ui -n kagent
# Navigate to LoadBalancer IP in browser

Your First Agent

Create a simple Kubernetes diagnostic agent:

cat <<EOF | kubectl apply -f -
apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: k8s-helper
  namespace: kagent
spec:
  description: "Kubernetes troubleshooting assistant"
  tools:
    - kubernetes-inspector
    - log-analyzer
  systemPrompt: |
    You are a Kubernetes expert assistant.
    Help users diagnose pod issues by:
    1. Checking pod status and recent events
    2. Analyzing container logs for errors
    3. Verifying resource limits and requests
    4. Providing clear remediation steps
  modelConfig:
    name: claude-sonnet-4
EOF

Invoke the agent:

kagent agent run k8s-helper \
  --task "My pod nginx-deployment-abc123 is in CrashLoopBackOff"

Performance Tuning and Optimization

1. Model Selection Strategy

# Fast, cost-effective for simple tasks
modelConfig:
  name: gpt-4o-mini
  temperature: 0.3

# Maximum reasoning capability for complex diagnostics
modelConfig:
  name: claude-sonnet-4
  temperature: 0.7

# Local deployment for data sensitivity
modelConfig:
  name: llama3-70b
  provider: ollama

2. Prompt Engineering for Agents

systemPrompt: |
  # Role Definition
  You are a production Kubernetes SRE with 10 years experience.

  # Constraints
  - Never execute destructive operations without explicit approval
  - Always validate before modifying production resources
  - Provide step-by-step reasoning for all decisions

  # Output Format
  Always structure responses as:
  1. Problem Analysis
  2. Root Cause Identification
  3. Recommended Actions
  4. Risk Assessment
  5. Rollback Plan

3. Caching and Rate Limiting
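Response caching with a time-to-live and a per-minute request cap can each be sketched in a few lines. This is an in-memory illustration under stated assumptions, not Kagent's implementation: `TTLCache` and `MinuteRateLimiter` are hypothetical classes, the limiter uses a simple fixed window, and the clock is injectable so the example runs deterministically.

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache LLM responses keyed by a hash of (model, prompt)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()

    def put(self, model: str, prompt: str, response: str) -> None:
        self._store[self._key(model, prompt)] = (self._clock() + self._ttl, response)

    def get(self, model: str, prompt: str) -> Optional[str]:
        key = self._key(model, prompt)
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, response = entry
        if self._clock() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return response


class MinuteRateLimiter:
    """Fixed-window limiter: at most `limit` requests per 60-second window."""

    def __init__(self, limit: int, clock: Callable[[], float] = time.monotonic) -> None:
        self._limit = limit
        self._clock = clock
        self._window_start = clock()
        self._count = 0

    def allow(self) -> bool:
        now = self._clock()
        if now - self._window_start >= 60:
            self._window_start, self._count = now, 0
        if self._count >= self._limit:
            return False
        self._count += 1
        return True


now = [0.0]                      # fake clock so the example is deterministic
clock = lambda: now[0]
cache = TTLCache(ttl_seconds=3600, clock=clock)
cache.put("example-model", "why is pod X crashing?", "OOMKilled")
hit = cache.get("example-model", "why is pod X crashing?")
now[0] = 3601.0                  # just past the one-hour TTL
expired = cache.get("example-model", "why is pod X crashing?")
limiter = MinuteRateLimiter(limit=2, clock=clock)
decisions = [limiter.allow() for _ in range(3)]
print(hit, expired, decisions)
```

A shared backend such as Redis would replace the in-process dictionary when several agent replicas need to see the same cache.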

apiVersion: kagent.dev/v1alpha2
kind: KagentConfig
spec:
  llmCache:
    enabled: true
    ttl: 1h
    backend: redis
  rateLimits:
    requestsPerMinute: 100
    tokensPerMinute: 150000

Kagent Ecosystem and Community

Official Resources:

- GitHub: https://github.com/kagent-dev/kagent

- Documentation: https://kagent.dev/docs

- Discord: https://bit.ly/kagentdiscord

- CNCF Slack: #kagent channel

Contributing: Kagent is Apache 2.0 licensed and welcomes contributions:

- New agent templates

- Additional MCP tool servers

- Documentation improvements

- Integration examples

- Bug reports and feature requests

Roadmap Highlights:

- Multi-agent coordination and collaboration

- Enhanced feedback and testing frameworks

- Expanded LLM provider support

- Advanced graph-based workflow execution

- Deeper OpenTelemetry integration

- MCP Gateway for centralized tool registry

Kagent in the Broader AI Agent Landscape

Kagent’s Unique Value Proposition:

- Only framework designed specifically for Kubernetes operations

- Native integration with CNCF ecosystem tools

- Production-ready MCP servers for cloud-native stack

- Declarative, GitOps-friendly configuration

- Enterprise-grade observability and auditing

The Future: From AgentOps to Autonomous Operations

Kagent represents a fundamental shift in how we approach infrastructure management:

Traditional DevOps (2015-2023):

- Human operators → Automation scripts → Infrastructure

GitOps Era (2020-present):

- Git commits → CI/CD pipelines → Reconciliation loops

AgentOps Era (2024+):

- Intent declaration → Autonomous agents → Self-healing infrastructure

The evolution from “shepherding servers” to “orchestrating agents” mirrors past transitions like:

- Manual server provisioning → Infrastructure as Code

- Monoliths → Microservices → Service Mesh

- VMs → Containers → Serverless

Kagent positions organizations at the forefront of this transformation, enabling teams to move from reactive problem-solving to proactive, autonomous operations.

Conclusion: Why Kagent Matters for Production Kubernetes

Kagent solves the critical operational complexity problem facing every organization running Kubernetes at scale. By combining:

- Kubernetes-native architecture for seamless integration

- Model Context Protocol for standardized tool access

- AutoGen framework for sophisticated agent capabilities

- OpenTelemetry observability for production confidence

- Declarative APIs for GitOps workflows

Kagent delivers autonomous operations that reduce toil, accelerate incident response, and enable teams to focus on strategic initiatives rather than firefighting.

For platform engineering teams managing complex cloud-native environments, Kagent represents not just another tool, but a new operational paradigm where AI agents serve as intelligent teammates that never sleep, continuously monitor, proactively prevent issues, and autonomously resolve problems when they occur.

The question isn’t whether agentic AI will transform infrastructure operations – it’s whether your organization will be leading or following this transformation. Kagent provides the production-ready framework to lead.

Additional Resources

- Documentation: kagent.dev/docs

- GitHub Repository: github.com/kagent-dev/kagent

- CNCF Sandbox: cncf.io/projects/kagent

- Solo.io Blog: solo.io/blog

- Community Discord: bit.ly/kagentdiscord