Ardent Performance Computing

How Blocking-Lock Brownouts Can Escalate from Row-Level to Complete System Outages

This article is a shortened version. For the full writeup, go to https://github.com/ardentperf/pg-idle-test/tree/main/conn_exhaustion

This test suite demonstrates a failure mode that arises when application bugs that poison connection pools collide with multiple PgBouncers that sit behind a load balancer without peer configuration. PgBouncer’s peering feature (added with v1.19 in 2023) should be configured whenever multiple PgBouncers are used behind a load balancer; it prevents the escalation demonstrated here.

The failures described here are based on real-world experiences. While uncommon, this failure mode has been seen multiple times in the field.

Along the way, we discover unexpected behaviors (bugs?) in Go’s database/sql (or sqlx) connection pooler with the pgx client and in Postgres itself.

Sample output: https://github.com/ardentperf/pg-idle-test/actions/workflows/test.yml

The Problem in Brief

Go’s database/sql allows connection pools to become poisoned by returning connections with open transactions for re-use. Transactions opened with db.BeginTx() will be cleaned up, but a transaction opened with, for example, conn.ExecContext(..., "BEGIN") will not be cleaned up. PR #2481 proposes some cleanup logic in pgx for database/sql connection pools (not yet merged); I tested the PR with this test suite. The PR relies on the transaction status indicator in the ReadyForQuery message, which Postgres sends back to the client as part of its network protocol.
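To make the status indicator concrete: in the Postgres wire protocol, a ReadyForQuery message is the type byte 'Z', a 4-byte length of 5, and one status byte ('I' for idle, 'T' for inside a transaction block, 'E' for inside a failed transaction block). The following is a minimal sketch of reading that byte. It is not the code from pgx or from the PR, and the names parseReadyForQuery and safeToReuse are hypothetical.

```go
package main

import (
	"encoding/binary"
	"errors"
)

// Transaction status bytes carried by ReadyForQuery in the Postgres protocol.
const (
	txIdle   = 'I' // not in a transaction block
	txInTx   = 'T' // inside a transaction block
	txFailed = 'E' // inside a failed transaction block
)

// parseReadyForQuery (hypothetical helper) extracts the status byte from a raw
// ReadyForQuery message: type 'Z', int32 length 5, one status byte.
func parseReadyForQuery(msg []byte) (byte, error) {
	if len(msg) != 6 || msg[0] != 'Z' {
		return 0, errors.New("not a ReadyForQuery message")
	}
	if binary.BigEndian.Uint32(msg[1:5]) != 5 {
		return 0, errors.New("unexpected ReadyForQuery length")
	}
	switch msg[5] {
	case txIdle, txInTx, txFailed:
		return msg[5], nil
	}
	return 0, errors.New("unknown transaction status")
}

// safeToReuse (hypothetical) reports whether a connection whose last
// ReadyForQuery carried this status could return to a pool unchanged.
// Anything other than idle means a transaction is still open.
func safeToReuse(status byte) bool {
	return status == txIdle
}
```

A pool that checked this byte when a connection is released could roll back or discard any connection that is not idle, instead of handing the open transaction to the next caller.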

A poisoned connection pool can cause an application brownout because other sessions updating the same row wait indefinitely for the blocking transaction to commit or roll back its own update. On a high-activity or critical table, this can quickly lead to significant pile-ups of connections waiting to update the same locked row. With Go, this means context deadline timeouts, retries, and connection thrashing by all of the threads and processes that are trying to update the row. Backoff logic is often lacking in these code paths. When a SQL statement is still running (hung, waiting for a lock), pgx first tries to send a cancel request and then proceeds to a hard socket close.
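For the retry paths mentioned above, a minimal sketch of capped exponential backoff might look like the following. The helper names backoffDelay and retryWithBackoff are hypothetical, the delays are deterministic to keep the example short (real code would normally add jitter), and backoff only slows the thrashing; it does not release the lock held by the poisoned connection.

```go
package main

import (
	"context"
	"time"
)

// backoffDelay returns base doubled once per previous attempt, capped at limit.
func backoffDelay(attempt int, base, limit time.Duration) time.Duration {
	d := base
	for i := 0; i < attempt; i++ {
		if d >= limit/2 {
			return limit // doubling again would reach or pass the cap
		}
		d *= 2
	}
	if d > limit {
		return limit
	}
	return d
}

// retryWithBackoff calls op at least once and at most attempts times, waiting
// backoffDelay between failures. It stops early if ctx is cancelled.
func retryWithBackoff(ctx context.Context, attempts int, base, limit time.Duration,
	op func(context.Context) error) error {
	for attempt := 0; ; attempt++ {
		err := op(ctx)
		if err == nil || attempt+1 >= attempts {
			return err
		}
		timer := time.NewTimer(backoffDelay(attempt, base, limit))
		select {
		case <-ctx.Done():
			timer.Stop()
			return ctx.Err()
		case <-timer.C:
			// Delay elapsed; try again.
		}
	}
}
```

In this sketch, op would wrap the UPDATE against the contended row, so that each worker waits progressively longer before reconnecting rather than retrying immediately.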

If PgBouncer’s peering feature is not enabled, then cancel requests load-balanced across multiple PgBouncers will fail because the cancel key only exists on the PgBouncer that created the original connection. The peering feature solves the cancel routing problem by allowing PgBouncers to forward cancel requests to the correct peer that holds the cancel key. This feature should be enabled – the test suite demonstrates what happens when it is not.
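The routing problem can be illustrated with a toy model. This is only a simulation under simplifying assumptions: the types bouncer and loadBalancer are hypothetical, the load balancer is plain round-robin, and the way a real PgBouncer locates the owning peer is not modeled.

```go
package main

// bouncer is a toy stand-in for one PgBouncer process. It knows only the
// cancel keys of client connections that it accepted itself.
type bouncer struct {
	keys  map[uint32]bool
	peers []*bouncer // empty when peering is not configured
}

func newBouncer() *bouncer { return &bouncer{keys: map[uint32]bool{}} }

// accept registers a client connection and its cancel key on this bouncer.
func (b *bouncer) accept(key uint32) { b.keys[key] = true }

// cancel reports whether a cancel request arriving at this bouncer reaches
// the bouncer that owns the key, either locally or through a configured peer.
func (b *bouncer) cancel(key uint32) bool {
	if b.keys[key] {
		return true
	}
	for _, p := range b.peers {
		if p.keys[key] {
			return true // forwarded to the peer that owns the key
		}
	}
	return false // unknown key: the cancel is dropped
}

// loadBalancer picks bouncers round-robin with no knowledge of cancel keys.
type loadBalancer struct {
	pool []*bouncer
	next int
}

func (lb *loadBalancer) pick() *bouncer {
	b := lb.pool[lb.next%len(lb.pool)]
	lb.next++
	return b
}
```

With two bouncers and no peers, a connection accepted by the first and a cancel routed to the second returns false from cancel. Once each bouncer lists the other in peers, the same cancel succeeds.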

Postgres immediately cleans up connections when it receives a cancel request. However, Postgres does not clean up connections when their TCP sockets are hard closed, if the connection is waiting for a lock. As a result, Postgres connection usage climbs while PgBouncer continually opens new connections that block on the same row. The app’s poisoned connection pool quickly leads to complete connection exhaustion in the Postgres server.

Existing connections will continue to work, as long as they don’t try to update the locked row. But the row-level brownout now becomes a database-level brownout, or perhaps a complete system outage once the Go database/sql connection pool is exhausted, because Postgres rejects all new connection attempts from the application.

Result: Failed cancels → client closes socket → backends keep running → CLOSE_WAIT accumulates → Postgres hits max_connections → system outage

Table of Contents

- The Problem in Brief
- Table of Contents
- Architecture
- The Test Scenarios
- Test Results
- Detection and Prevention

Architecture

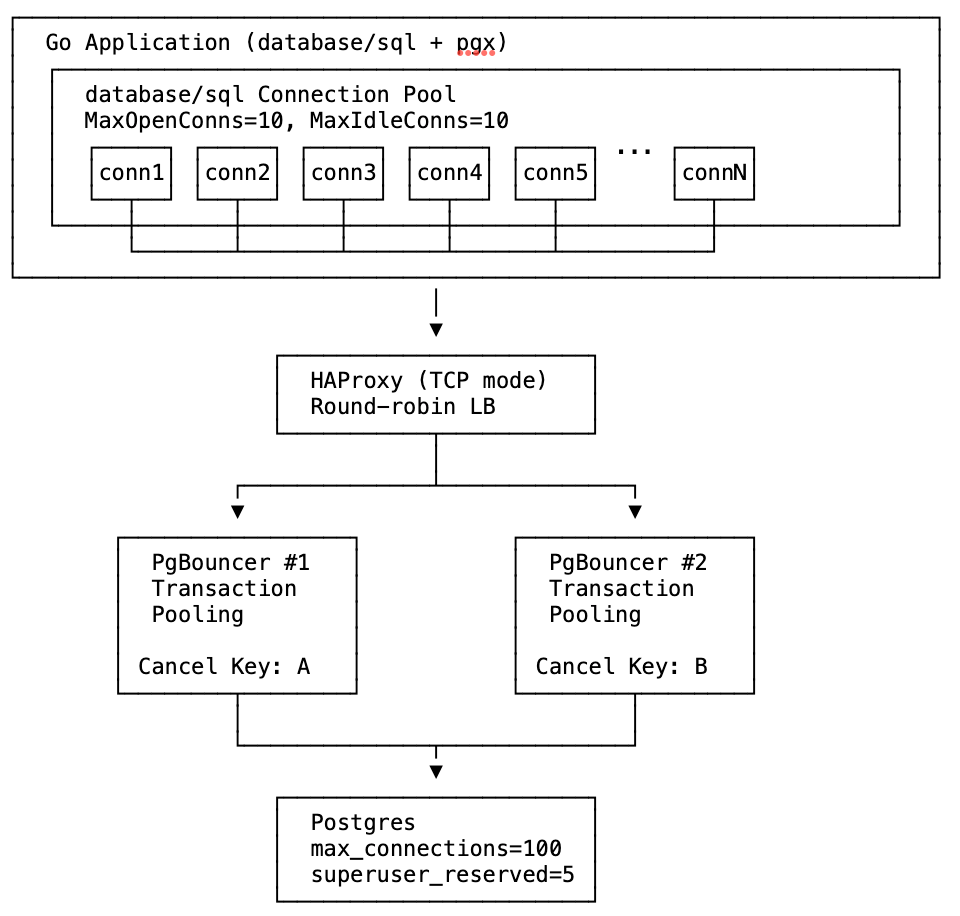

The test uses Docker Compose to create this infrastructure with a configurable number of PgBouncer instances.
