Advancing AI models

from frontier innovation to

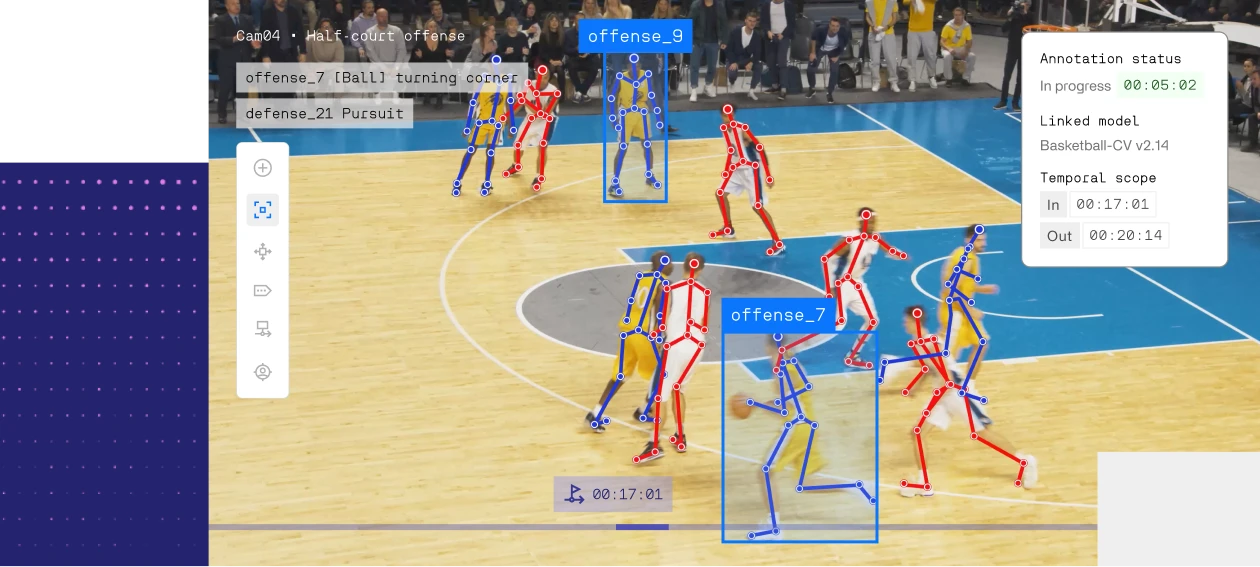

We've trained over 80% of the world's leading AI models and deploy them at enterprise scale from the NBA to the Navy.

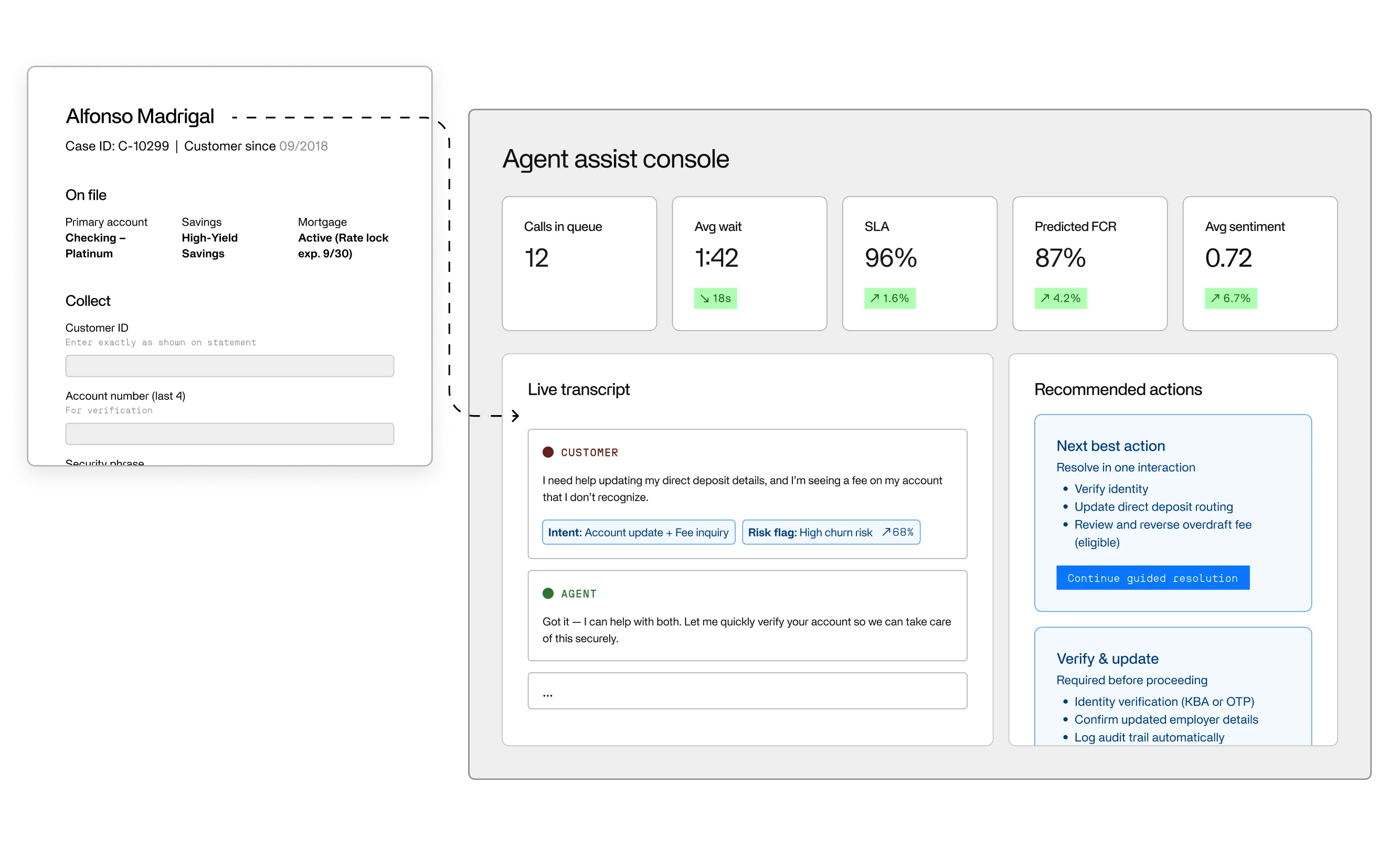

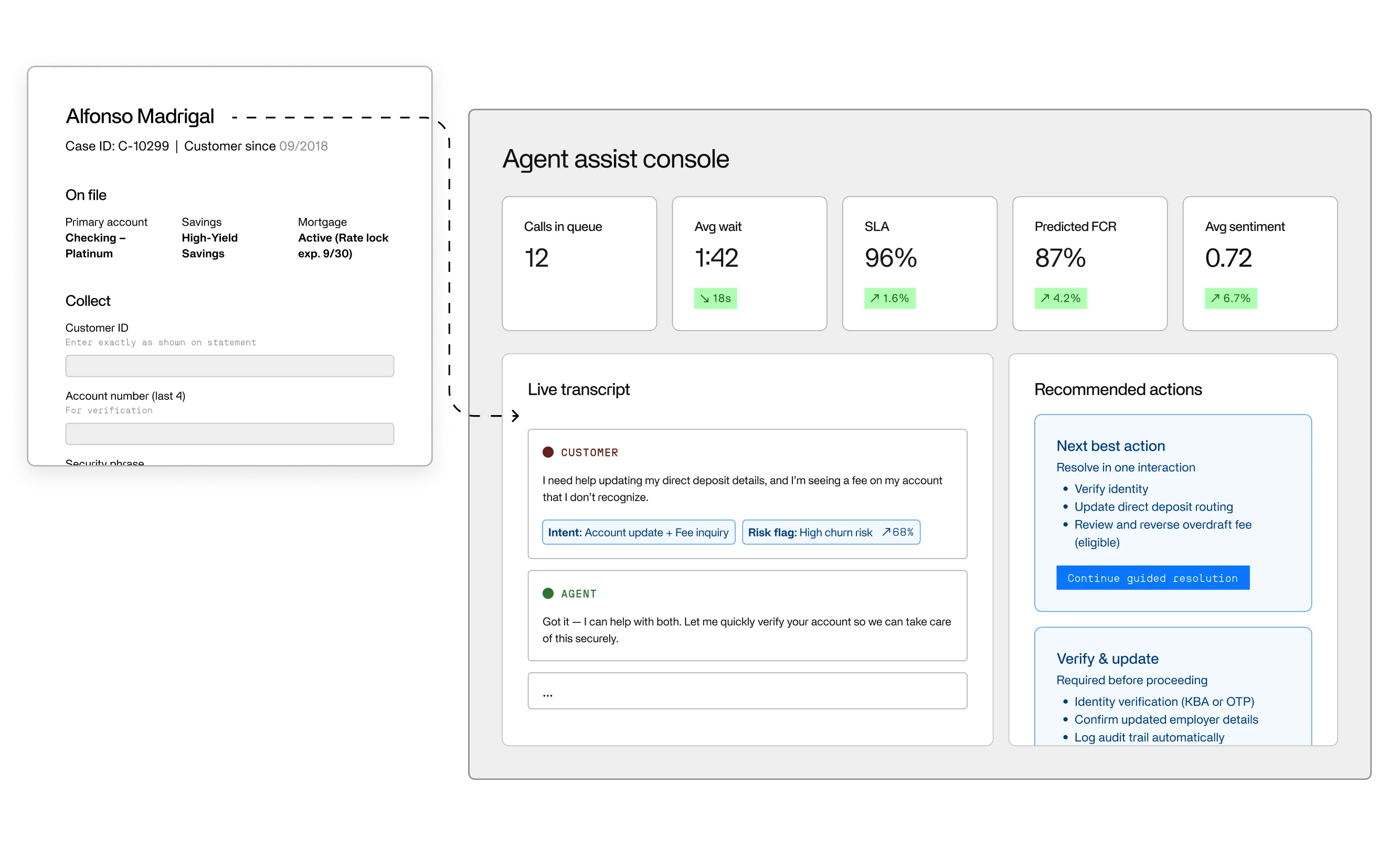

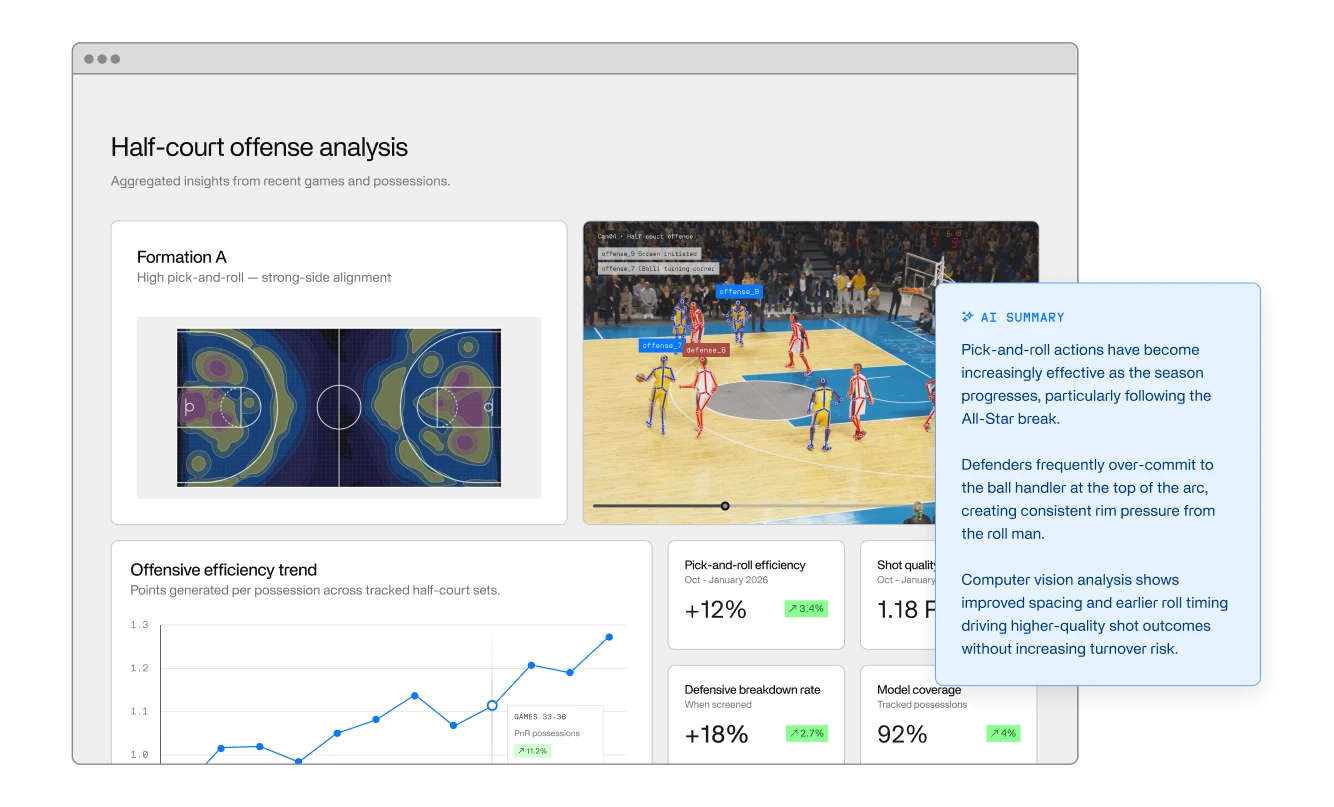

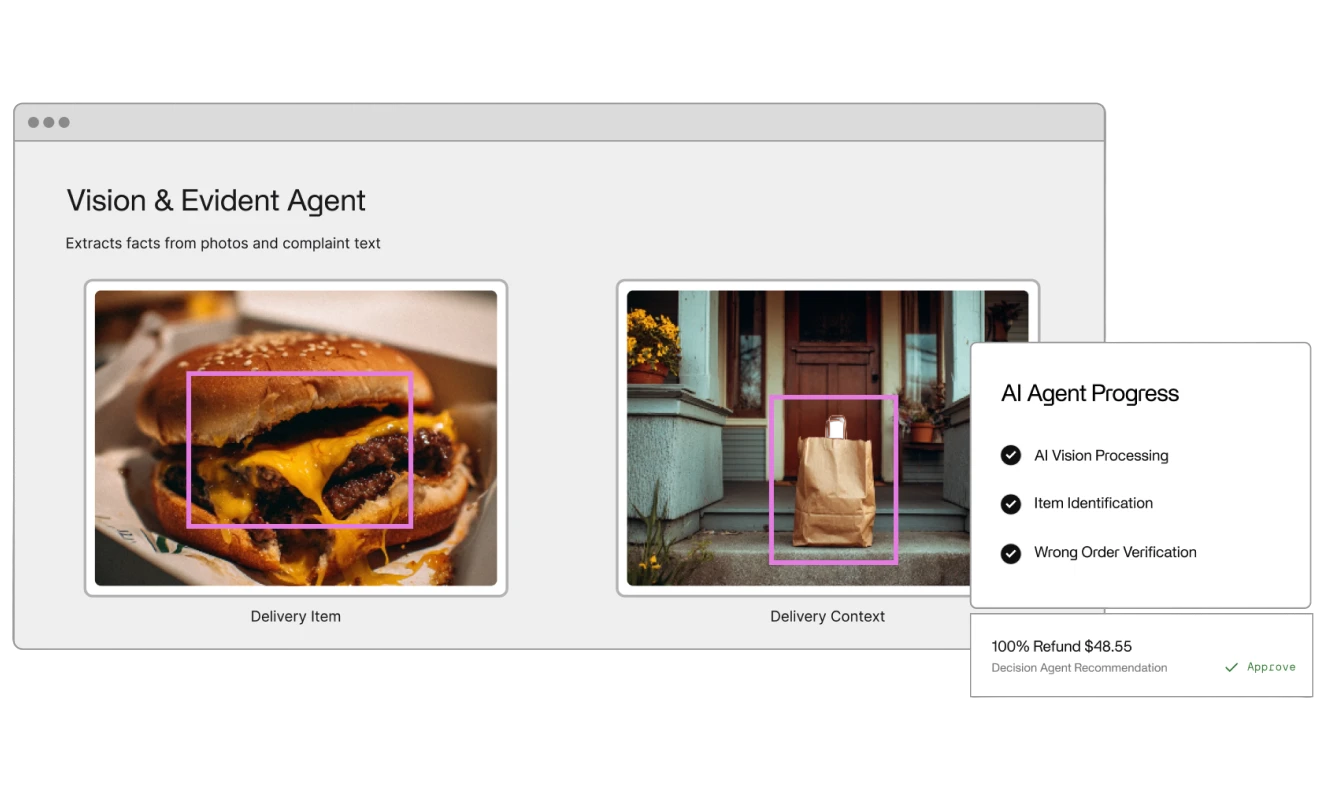

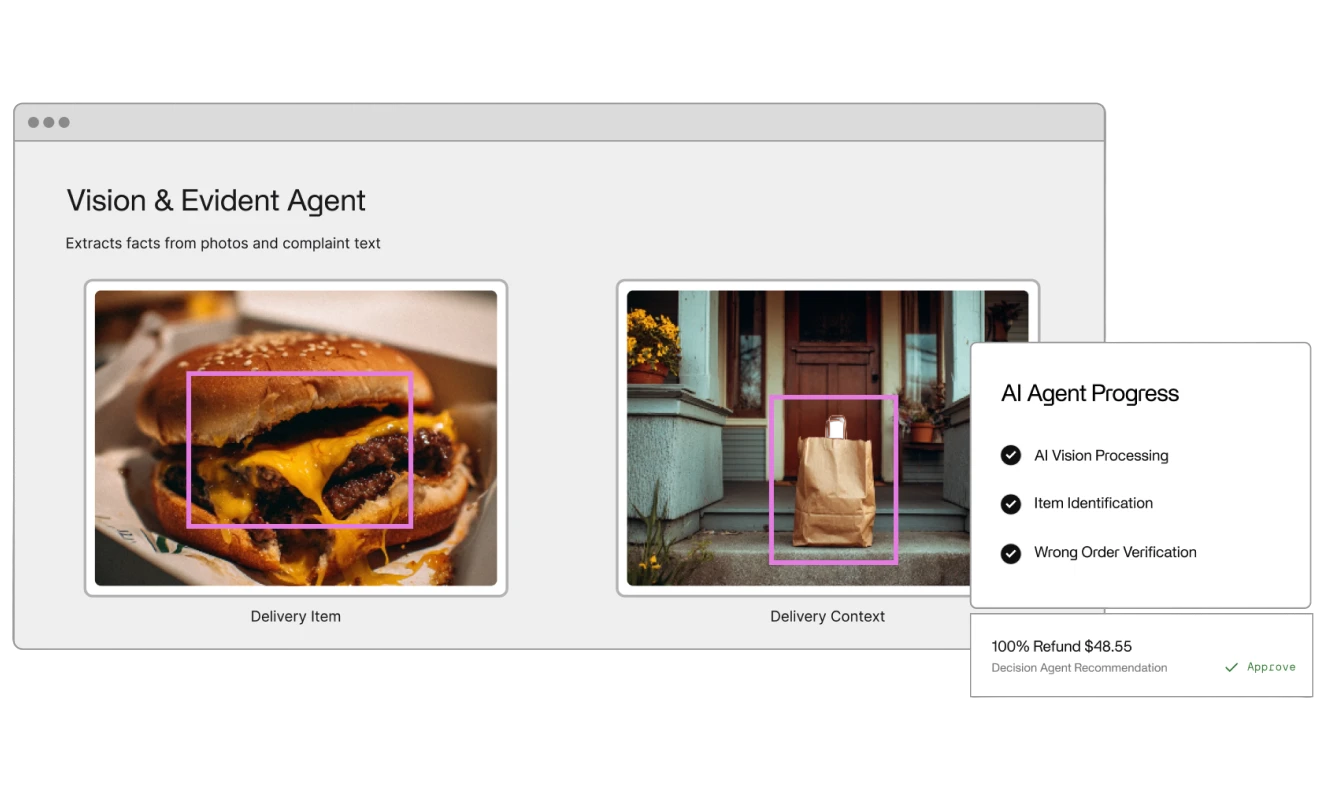

Deploy AI agents that can think through complex, multi-step work, use tools, and write code when needed.

Each workflow is versioned, trackable, and separated by customer, so you can test safely and promote what works.

From engineering reviews to compliance checks to back-office operations, Axon compresses weeks of human work into minutes of AI-plus-human collaboration, with the audit trail and controls you'd expect from critical infrastructure.

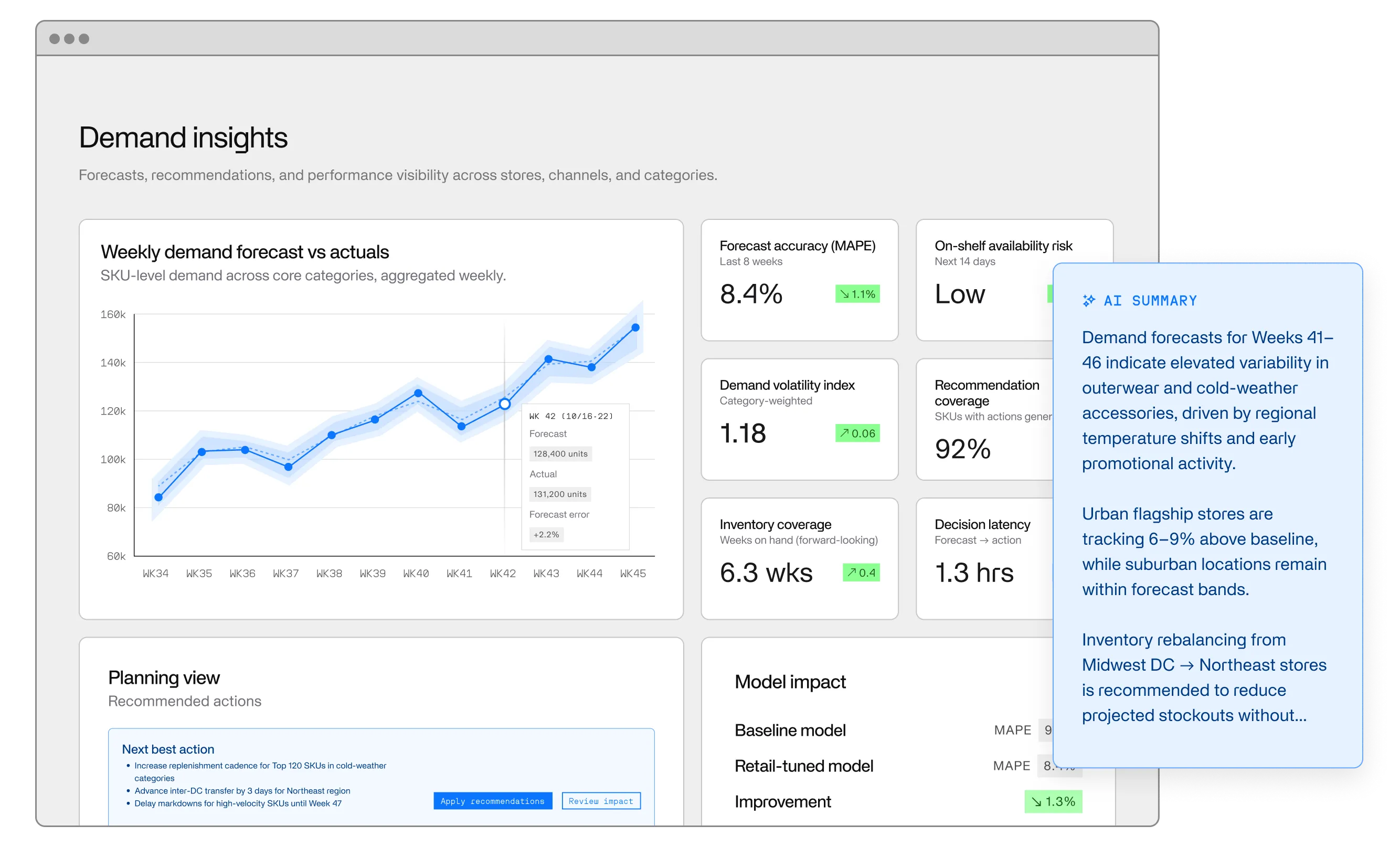

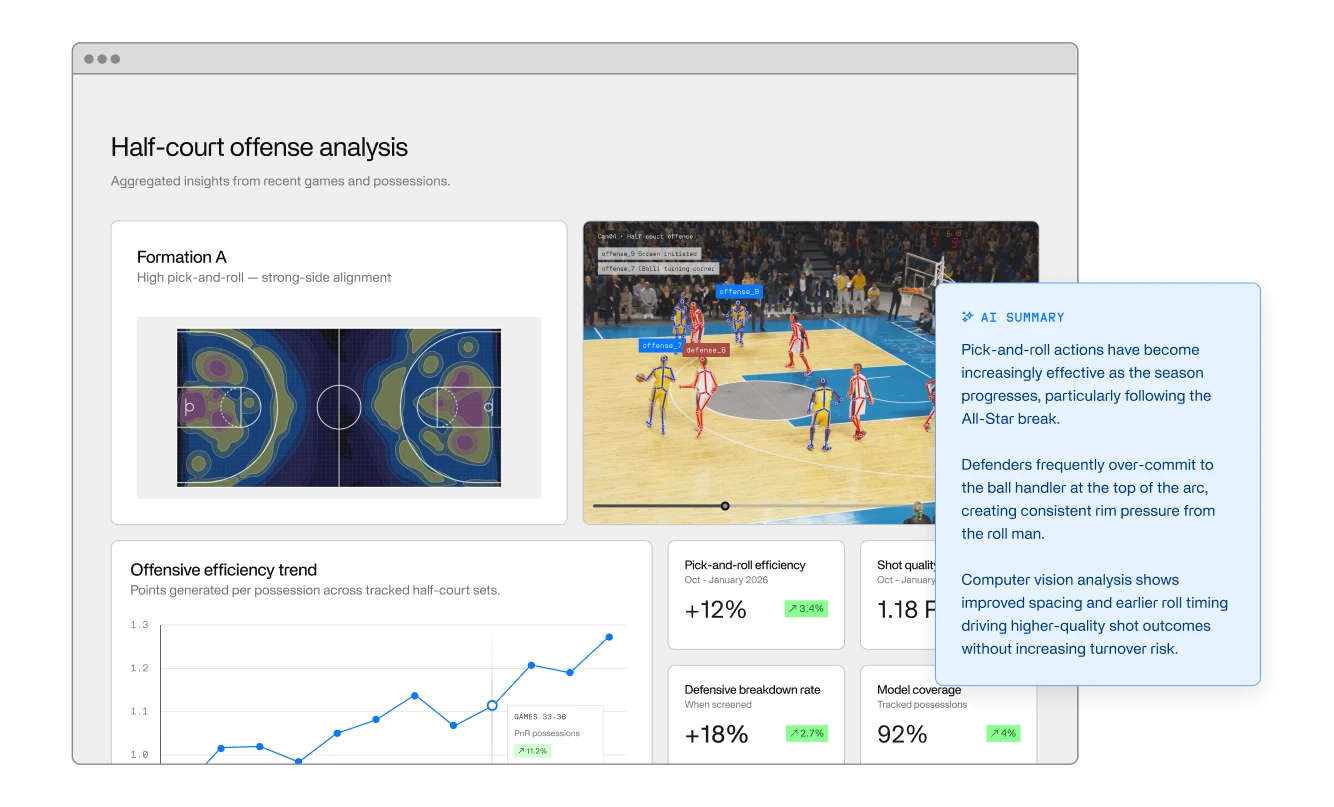

Whether you're building models from scratch or choosing between vendors, run any AI system through realistic work scenarios using the criteria that matter to you: accuracy, safety, tone, fit for your domain.

Automated checks compare every response against what "good" looks like, then show you how different models stack up side-by-side: where they pass, where they fail, and why.

The result: ongoing evaluation tied to your actual use cases, not a static benchmark score from someone else's test.
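As a rough illustration of the idea above, here is a minimal Python sketch of scenario-based evaluation. Everything in it is an assumption made for illustration: the `Scenario` type, the `evaluate` function, the toy models, and the keyword-based checks are hypothetical and do not represent the platform's actual API or scoring logic.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical types for illustration only; not a real product API.
Check = Callable[[str], bool]
Model = Callable[[str], str]


@dataclass
class Scenario:
    prompt: str
    checks: dict[str, Check]  # criterion name -> pass/fail check on a response


def evaluate(models: dict[str, Model], scenarios: list[Scenario]) -> dict[str, dict[str, float]]:
    """Run every model on every scenario and return per-criterion pass rates."""
    report: dict[str, dict[str, float]] = {}
    for name, model in models.items():
        passed: dict[str, int] = {}
        total: dict[str, int] = {}
        for scenario in scenarios:
            response = model(scenario.prompt)
            for criterion, check in scenario.checks.items():
                total[criterion] = total.get(criterion, 0) + 1
                passed[criterion] = passed.get(criterion, 0) + int(check(response))
        report[name] = {c: passed[c] / total[c] for c in total}
    return report


# Toy stand-ins for real models (assumed for the example).
def model_a(prompt: str) -> str:
    return "Your refund was issued. Please let us know if you need anything else."


def model_b(prompt: str) -> str:
    return "refund done"


scenarios = [
    Scenario(
        prompt="A customer asks about the status of their refund.",
        checks={
            "accuracy": lambda r: "refund" in r.lower(),  # mentions the refund
            "tone": lambda r: "please" in r.lower(),      # crude politeness proxy
        },
    )
]

report = evaluate({"model_a": model_a, "model_b": model_b}, scenarios)
for model_name, scores in report.items():
    print(model_name, scores)
```

In practice the checks would be far richer than keyword matches (rubrics, reference answers, human review), but the shape stays the same: your scenarios, your criteria, and a per-model breakdown of where each one passes or fails.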

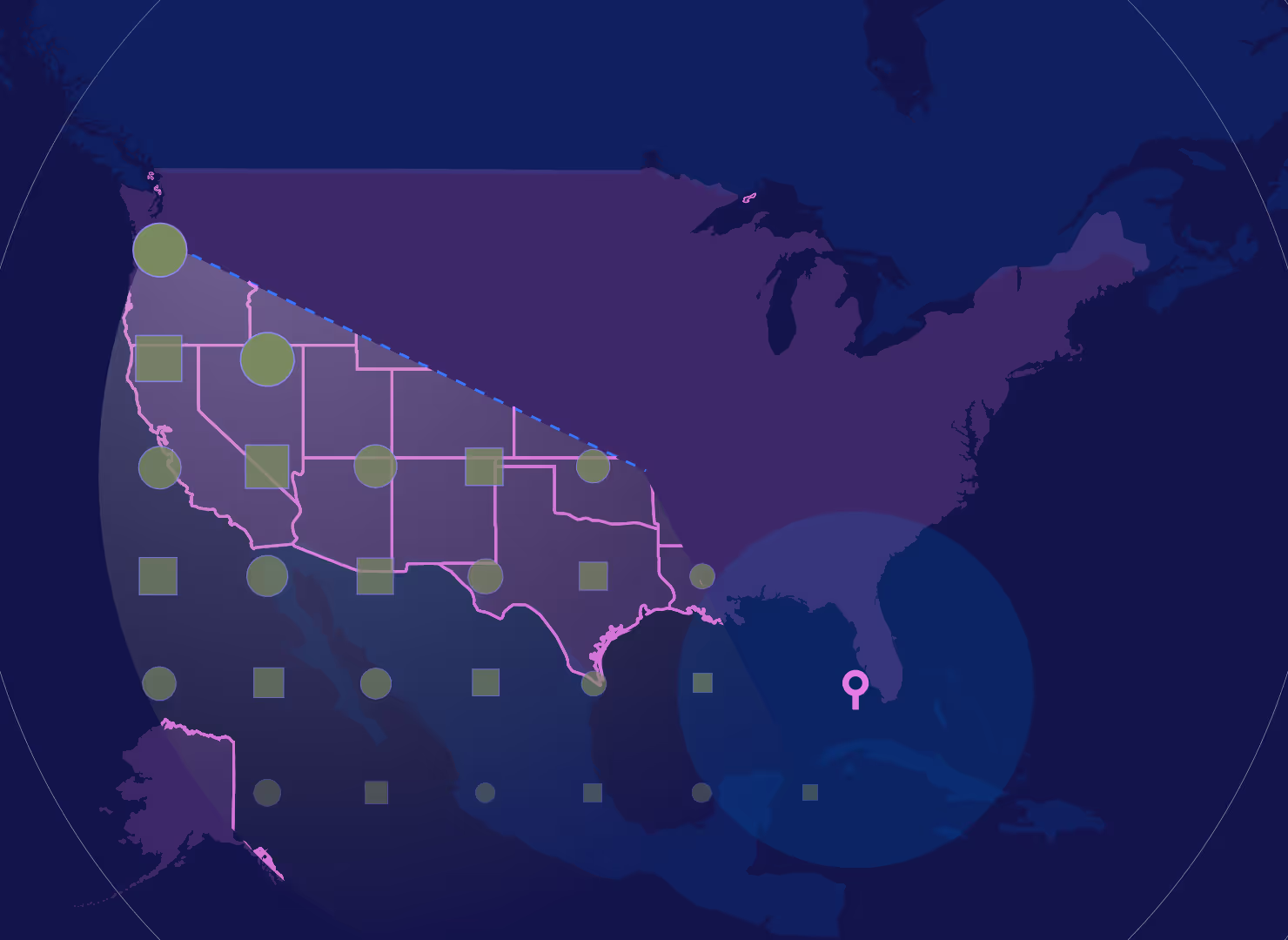

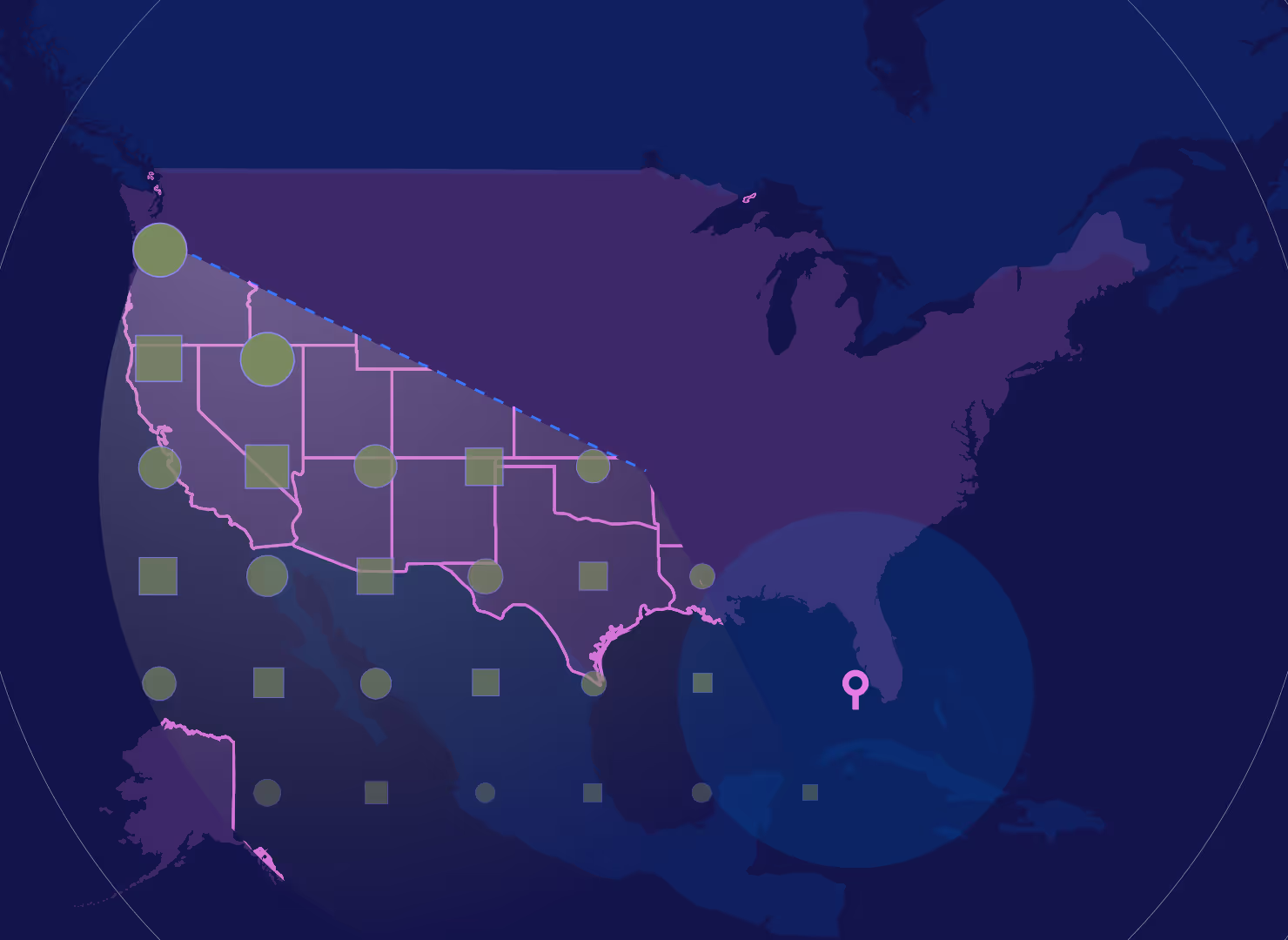

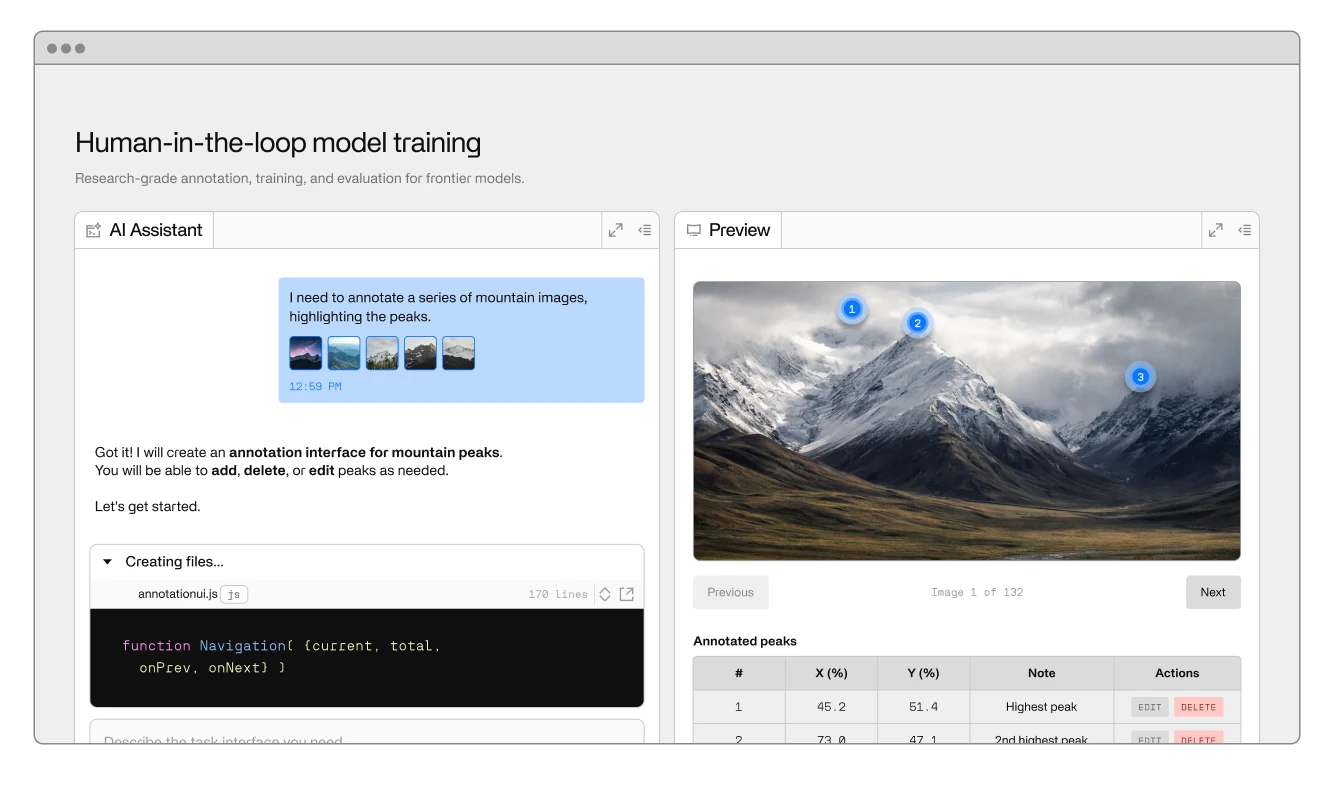

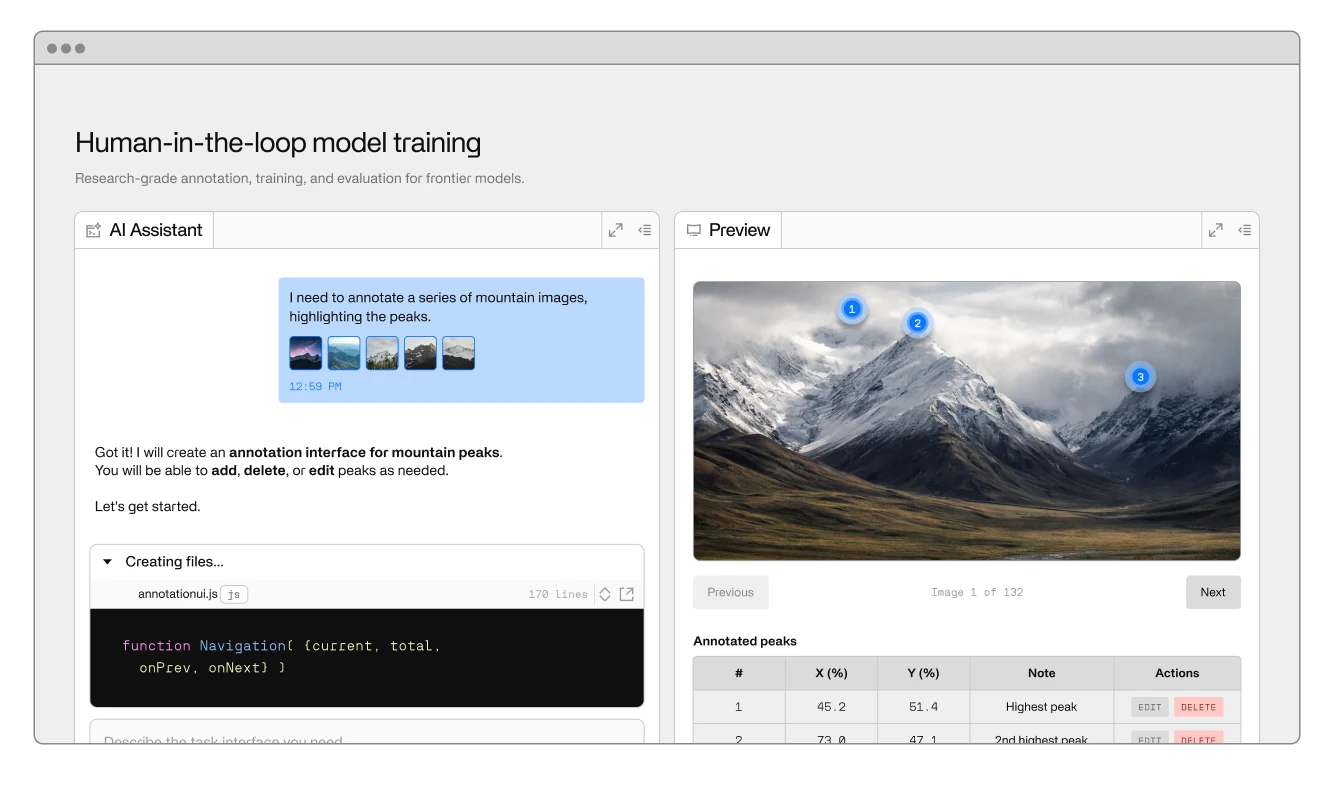

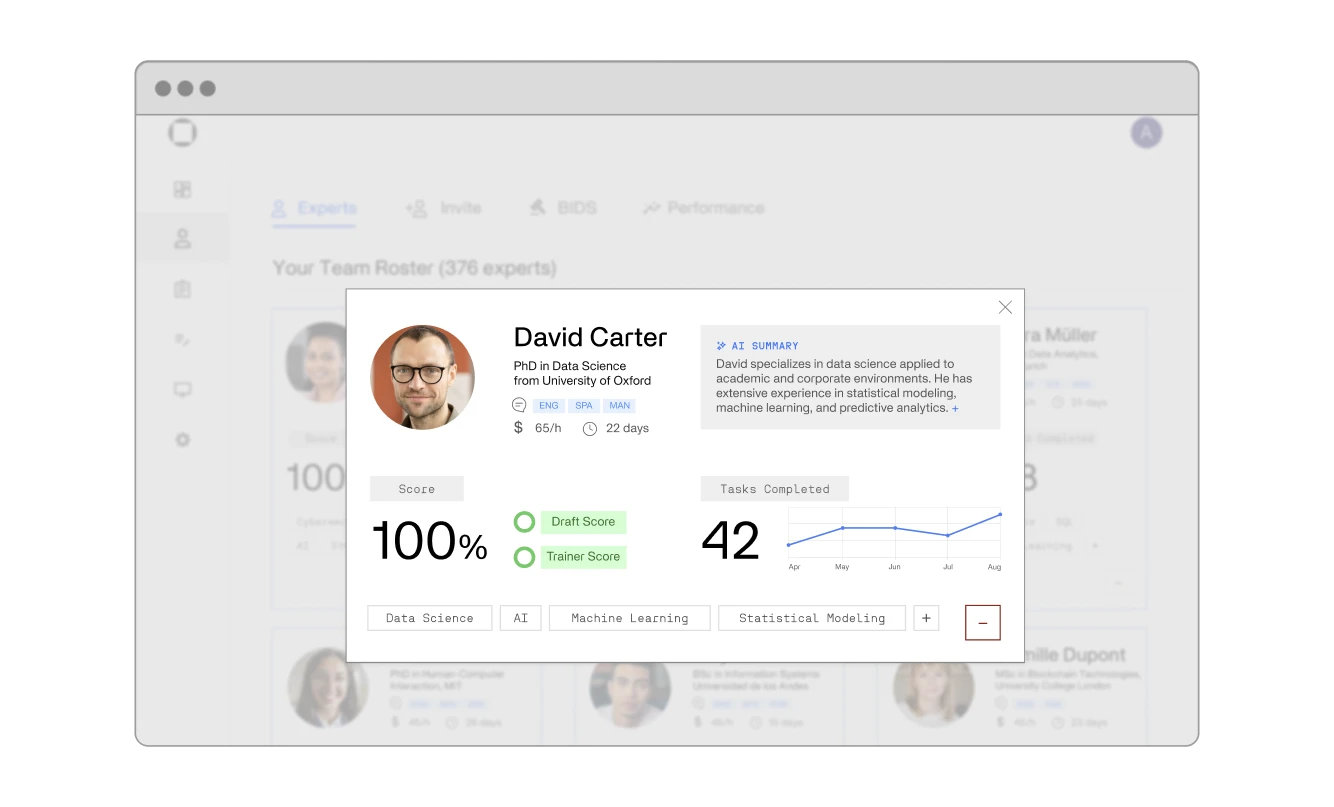

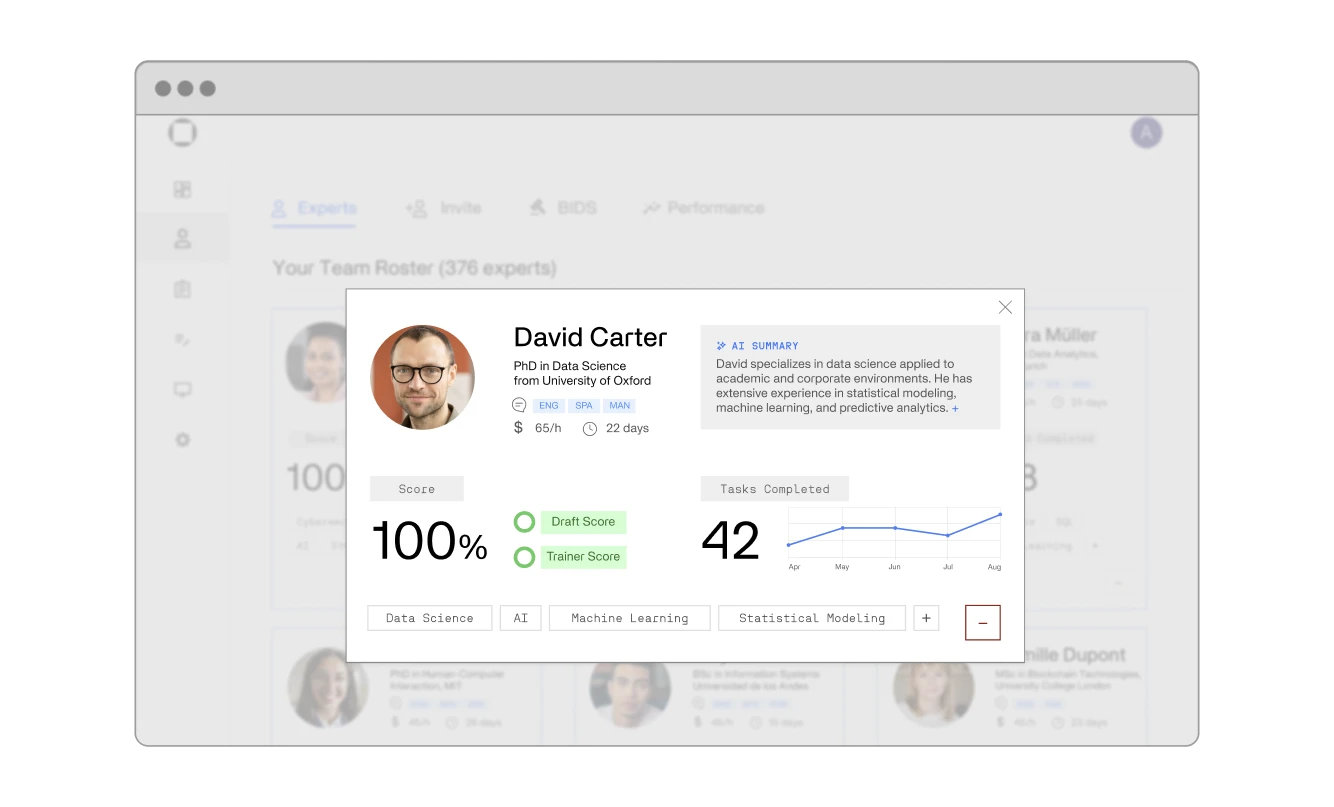

Our expert network can be deployed to your workflows.

Behind the scenes, we continuously screen, test, and organize talent by skill, language, and domain expertise, then match the right people to the right tasks, whether that's labeling training data for frontier models, stress-testing AI systems, or handling edge cases your agents aren't ready for yet.

The result: a flexible human layer that integrates tightly with our platform, so every AI system you deploy is backed by experts who keep it accurate, safe, and grounded in reality.

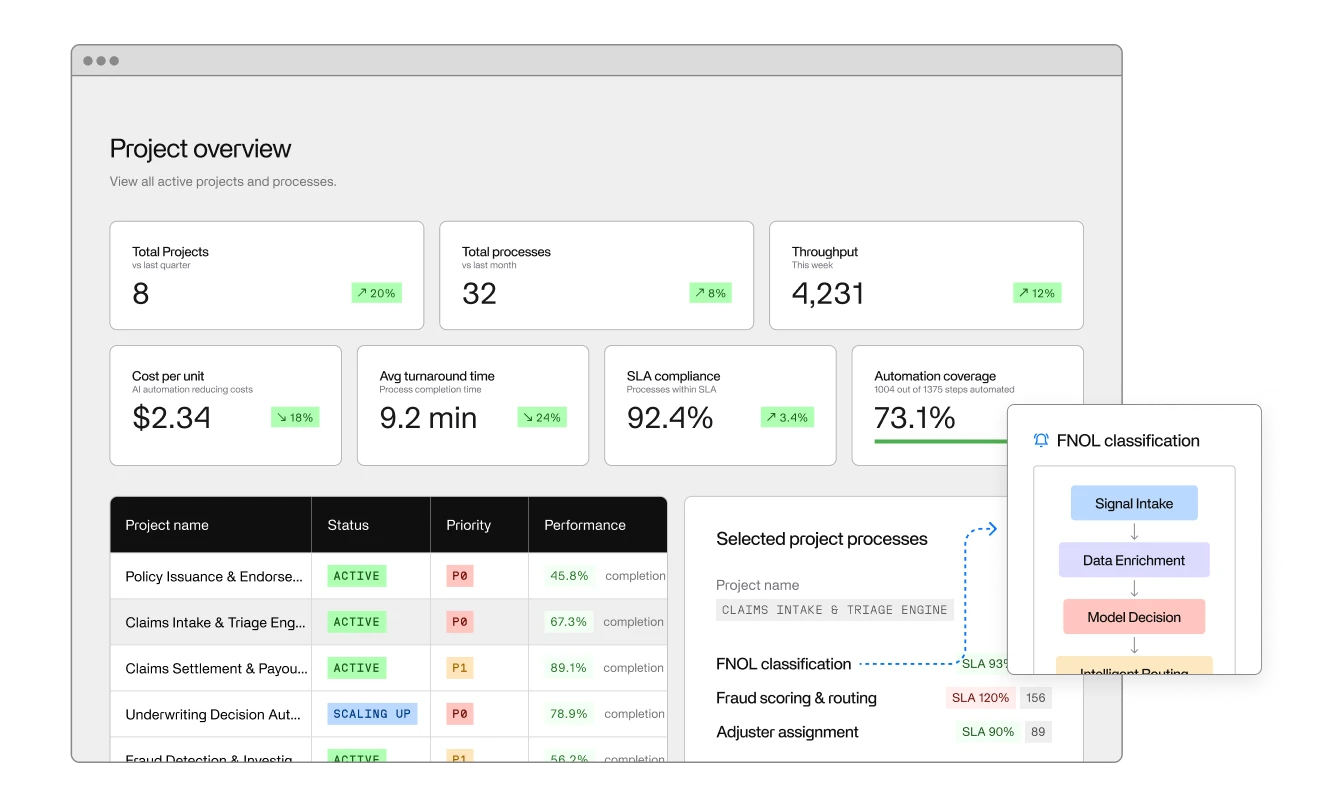

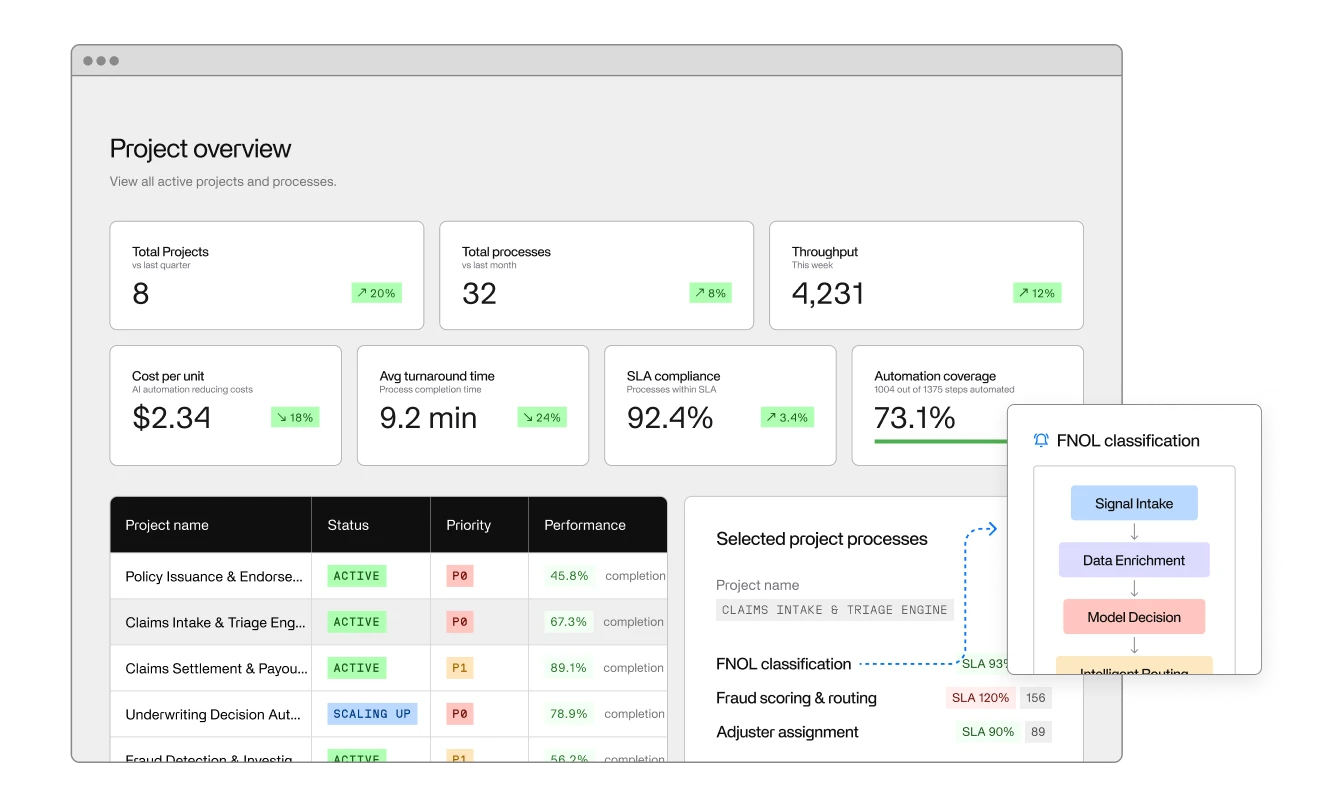

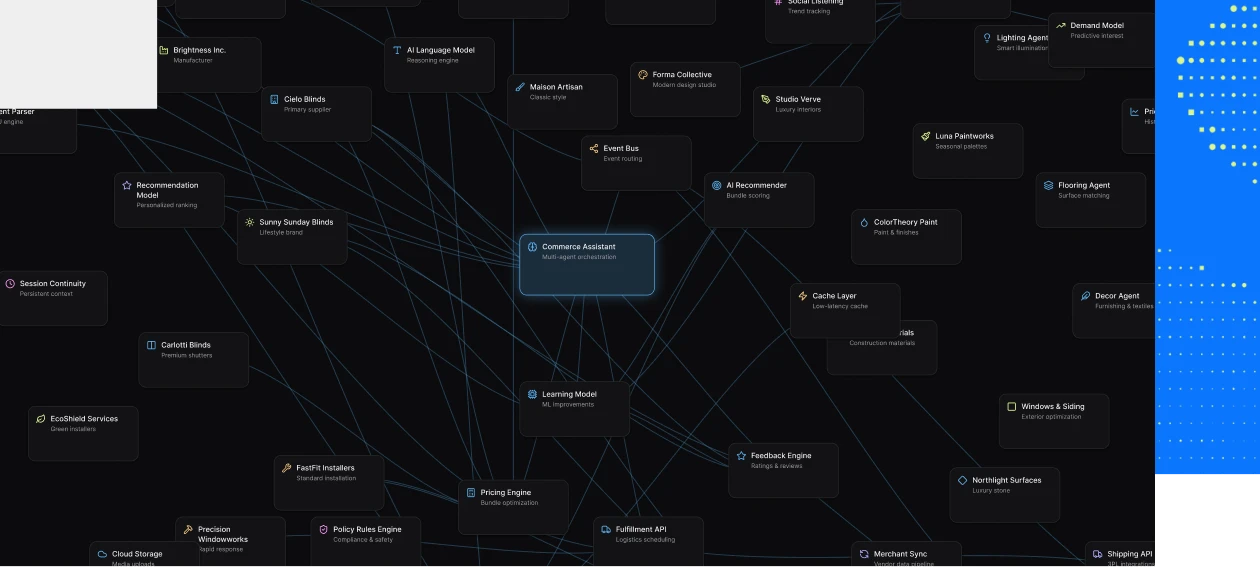

Instead of juggling disconnected tools, Atomic gives you a visual map of how work actually gets done, then runs every step automatically: pulling from your systems, routing to the right AI or person, enforcing quality checks, and sending clean results back where they need to go.

You can see and trace every piece of work, and you can start with your current process and gradually replace manual steps with AI without ripping everything out and starting over.
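The routing idea described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Atomic is implemented: the `WorkItem` type, the `confidence` score, the `0.8` threshold, and the handler functions are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class WorkItem:
    payload: str
    confidence: float  # hypothetical score: how well automation can handle this item
    trace: list[str] = field(default_factory=list)  # record of each step taken


def ai_handler(item: WorkItem) -> str:
    # Stand-in for an automated step (assumed for the example).
    return item.payload.strip().upper()


def human_handler(item: WorkItem) -> str:
    # Stand-in for a human review queue (assumed for the example).
    return item.payload.strip().upper()


def quality_check(result: str) -> bool:
    # Minimal check: the step must produce a non-empty result.
    return bool(result)


def run_step(item: WorkItem, threshold: float = 0.8) -> str:
    """Route an item to AI or a person, enforce a quality check, and log a trace."""
    route = "ai" if item.confidence >= threshold else "human"
    item.trace.append(f"routed:{route}")
    handler = ai_handler if route == "ai" else human_handler
    result = handler(item)
    if not quality_check(result):
        item.trace.append("qc:failed")
        raise ValueError("result failed quality check")
    item.trace.append("qc:passed")
    return result


easy = WorkItem(" invoice 42 ", confidence=0.95)
hard = WorkItem(" disputed claim ", confidence=0.40)
results = [run_step(easy), run_step(hard)]
print(results, easy.trace, hard.trace)
```

Moving a manual step to AI in this sketch is just a matter of lowering the threshold or swapping a handler, which mirrors the gradual replacement described above: the surrounding process and its trace stay in place.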

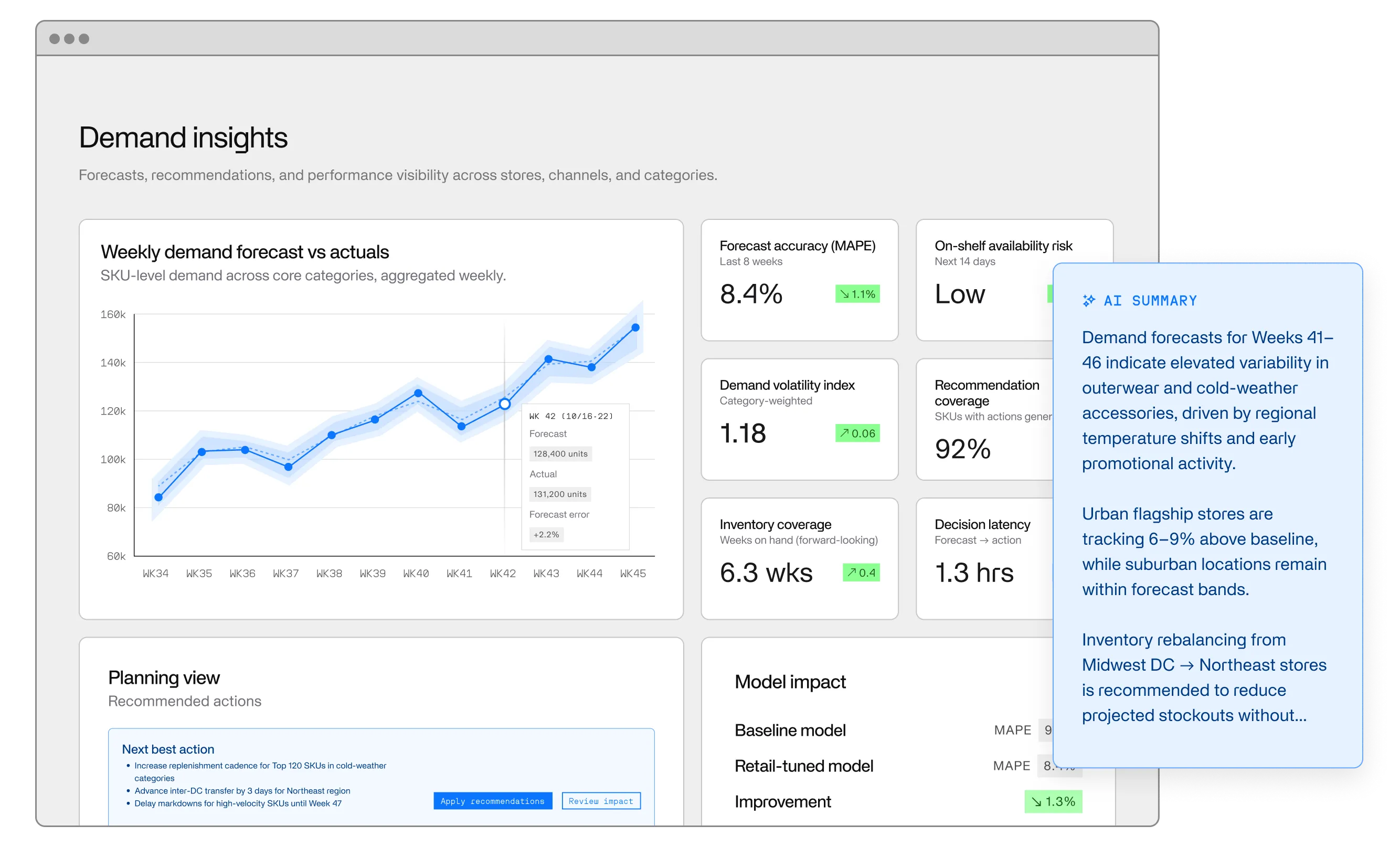

Neuron pulls messy, scattered data from across your systems into one governed place, automatically cleaning and organizing it so it's actually usable: first for your team, then for AI.

Behind the scenes, Neuron builds a living map of how your business pieces fit together (orders, inventory, customers, processes), so models and workflows know where to look and how to connect things without weeks of manual setup.

The result: production-grade, AI-ready data so you can finally build on your data instead of fighting it.

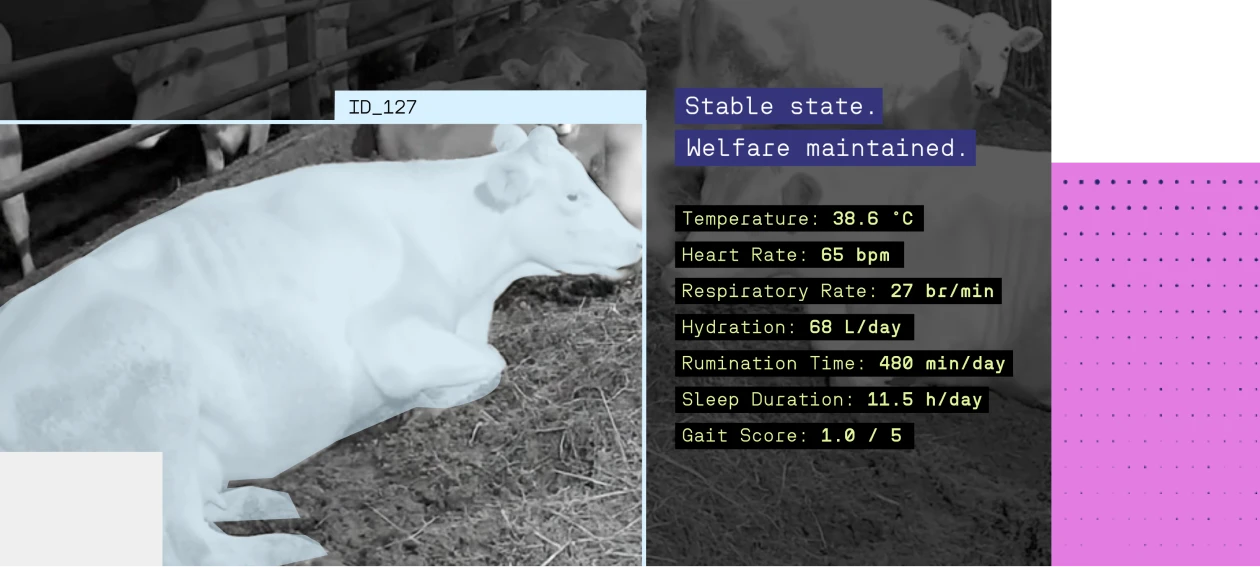

AI delivers impact when it’s embedded in core workflows, runs on your real operational data, and is governed by transparent metrics and SLAs, not just deployed for automation’s sake or as experimental pilots. With Invisible’s modular platform, you plug in only the pieces you need (data, agents, humans-in-the-loop, evaluations), and drive outcomes you can measure, fast.

Typical barriers include fragmented or unstructured data, challenges with legacy system integration, talent and skills gaps, lack of internal AI expertise, and uncertainty about how to measure ROI for large AI projects.

Begin with the workflow that will drive the most impact and set clear business goals for it. Track a few simple metrics (time saved, cost reduced, revenue or quality gains) in a monthly or quarterly review, assign a named owner to keep the effort on track, and keep scaling what moves the numbers while fixing or retiring what doesn’t.

AI initiatives often stall due to shallow or siloed data, poor alignment with business strategy, insufficient change management, and a lack of clear governance, resulting in high pilot rates but low operational adoption.

The key is to go beyond simply adopting AI “out of the box.” You need to plug the model into your organization’s data and workflows, align it with your goals and metrics, and involve internal domain experts so results stay relevant at scale.