Fallom

Fallom is the AI-native observability platform that gives you complete visibility into every LLM and agent call.


About Fallom

Fallom is an AI-native observability platform built for teams running intelligent agents in production. It gives engineering and product teams real-time visibility into every Large Language Model (LLM) call and agentic workflow. As AI applications grow more complex and more mission-critical, Fallom removes the black box: for every interaction you can see the prompt, the output, tool calls, token usage, latency, and cost. Because it is built on OpenTelemetry, the open observability standard, it integrates in minutes rather than months and avoids vendor lock-in.

Fallom is aimed at startups and enterprises that are scaling their AI capabilities. It provides the tools to debug complex agent chains, optimize performance, rein in rising costs, and meet compliance requirements, all from a single dashboard. Its core value proposition is turning AI operations from guesswork into a precise, data-driven discipline, so teams can ship reliable, efficient, and compliant AI features with confidence.

Features of Fallom

End-to-End LLM Tracing

Fallom captures every detail of your LLM interactions in a unified trace: the exact prompt sent, the model's raw output, every intermediate tool or function call with its arguments and results, token counts, latency breakdowns, and the per-call cost. This end-to-end visibility is the foundation for debugging complex issues, understanding model behavior, and optimizing your AI pipelines for both performance and cost.
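
To make the idea concrete, here is a minimal sketch in plain Python of the kind of record a single traced LLM call might carry. It is not Fallom's SDK or schema: the class names, fields, model name, and per-token prices are hypothetical assumptions chosen for illustration.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

# Hypothetical USD prices per 1,000 tokens; real prices vary by provider and model.
PRICES_PER_1K: Dict[str, Dict[str, float]] = {
    "example-model": {"input": 0.005, "output": 0.015},
}


@dataclass
class ToolCall:
    """A tool or function call made during the LLM interaction."""
    name: str
    arguments: Dict[str, Any]
    result: Any


@dataclass
class LLMSpan:
    """One traced LLM call (hypothetical structure, not Fallom's schema)."""
    model: str
    prompt: str
    output: str = ""
    input_tokens: int = 0
    output_tokens: int = 0
    tool_calls: List[ToolCall] = field(default_factory=list)
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    start: float = field(default_factory=time.perf_counter)
    end: Optional[float] = None

    def finish(self, output: str, input_tokens: int, output_tokens: int) -> None:
        """Record the model's raw output and token counts, and stop the clock."""
        self.output = output
        self.input_tokens = input_tokens
        self.output_tokens = output_tokens
        self.end = time.perf_counter()

    @property
    def latency_ms(self) -> float:
        if self.end is None:
            raise ValueError("span has not finished")
        return (self.end - self.start) * 1000.0

    @property
    def cost_usd(self) -> float:
        """Per-call cost derived from token counts and the price table."""
        price = PRICES_PER_1K[self.model]
        return (self.input_tokens * price["input"]
                + self.output_tokens * price["output"]) / 1000.0


# Usage: trace one call that made a single tool call.
span = LLMSpan(model="example-model", prompt="What is 2 + 2?")
span.tool_calls.append(ToolCall("calculator", {"expression": "2 + 2"}, 4))
span.finish("4", input_tokens=1000, output_tokens=2000)
print(f"{span.latency_ms:.2f} ms, ${span.cost_usd:.4f}")
```

Keeping prompt, output, tool calls, tokens, latency, and cost on one record is what lets a single trace view answer both "what happened" and "what did it cost".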

Enterprise-Grade Compliance & Audit Trails

Built for regulated industries, Fallom provides immutable, complete audit trails that support compliance with regulations and frameworks such as the EU AI Act, GDPR, and SOC 2. Features include full input/output logging with configurable redaction for privacy, detailed model versioning for reproducibility, and user consent tracking. The result is a verifiable record of every AI decision for security reviews, regulatory submissions, and internal governance.
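
The listing does not say how Fallom makes its audit trails immutable. One common, generic technique for making a log tamper-evident is hash chaining, sketched below with only the Python standard library. This is a swapped-in illustration, not Fallom's implementation, and the record fields (`model_version`, `user_consent`) are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so equal records hash equally.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, record: Dict[str, Any]) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = _digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; editing or reordering any entry, or deleting one
        from the middle, makes verification fail."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


# Usage: log two AI decisions with a model version and consent flag.
log = AuditLog()
log.append({"model": "example-model", "model_version": "v1", "input": "[REDACTED]",
            "output": "approved", "user_consent": True})
log.append({"model": "example-model", "model_version": "v2", "input": "[REDACTED]",
            "output": "declined", "user_consent": True})
print(log.verify())
```

Hash chaining alone cannot detect an entry deleted from the end of the log; systems that rely on it typically anchor the latest hash somewhere outside the log itself.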

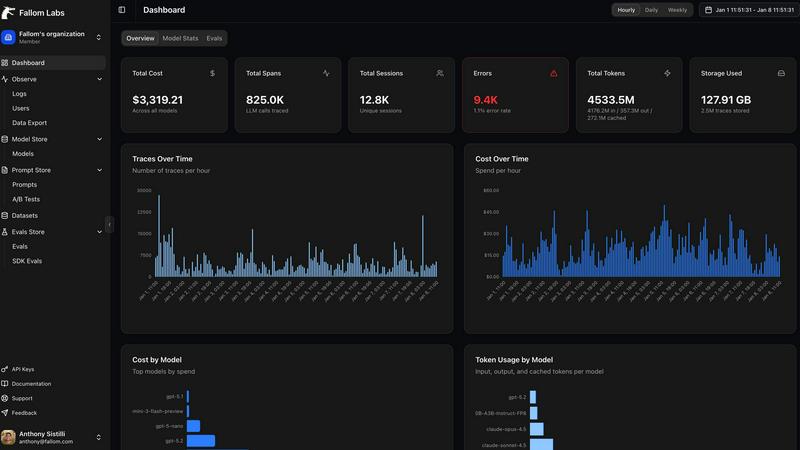

Real-Time Cost Attribution & Analytics

Gain full financial transparency over your AI spend. Fallom automatically attributes costs across dimensions like specific AI models (GPT-4, Claude, etc.), individual users, internal teams, or even external customers. This enables precise budgeting, accurate chargebacks, and data-driven decisions about model selection and optimization, helping you scale your AI usage without budget surprises.
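
Cost attribution boils down to summing per-call cost along a chosen dimension. The sketch below shows that aggregation in plain Python; the call records, model names, and dimension keys are hypothetical, and it is not Fallom's API.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical per-call records; in practice these would come from traced spans.
calls: List[Dict] = [
    {"model": "model-a", "user": "u1", "team": "search", "cost_usd": 0.25},
    {"model": "model-b", "user": "u2", "team": "support", "cost_usd": 0.50},
    {"model": "model-a", "user": "u2", "team": "support", "cost_usd": 0.125},
]


def attribute_costs(records: List[Dict], dimension: str) -> Dict[str, float]:
    """Sum per-call cost by one dimension such as model, user, team, or customer."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record[dimension]] += record["cost_usd"]
    # Largest spenders first.
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


for dim in ("model", "team", "user"):
    print(dim, attribute_costs(calls, dim))
```

The same records can be sliced by any attribute attached at trace time, which is why tagging calls with user, team, or customer IDs up front matters for chargebacks later.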

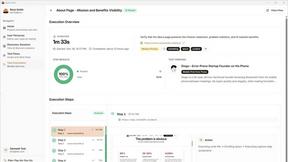

Advanced Debugging with Timing Waterfalls & Session Tracking

Debug multi-step agent workflows with visual timing waterfalls that break down latency across LLM calls, tool executions, and processing steps. You can also group related traces by user session, customer, or conversation to see the full context of an interaction. Together, these let you quickly pinpoint performance bottlenecks and follow the complete user journey through your AI application.
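
A timing waterfall places each span by its offset from the trace start and sizes it by its duration, and session tracking groups traces by a shared ID. The following is a minimal text-mode sketch of both ideas; the `Span` fields, span names, and session IDs are hypothetical and do not reflect Fallom's data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    trace_id: str
    session_id: str
    name: str
    start_ms: float
    end_ms: float
    parent: Optional[str] = None  # None marks the root span of a trace

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def waterfall(spans: List[Span], width: int = 40) -> List[str]:
    """Render one trace's spans as text bars placed by start offset and duration."""
    t0 = min(s.start_ms for s in spans)
    total = (max(s.end_ms for s in spans) - t0) or 1.0  # avoid dividing by zero
    rows = []
    for s in sorted(spans, key=lambda s: s.start_ms):
        offset = int((s.start_ms - t0) / total * width)
        length = max(1, int(s.duration_ms / total * width))
        rows.append(f"{s.name:<12}|{' ' * offset}{'#' * length}  {s.duration_ms:.0f} ms")
    return rows


def bottleneck(spans: List[Span]) -> Span:
    """Slowest non-root step (assumes the trace has at least one child span)."""
    return max((s for s in spans if s.parent is not None), key=lambda s: s.duration_ms)


def group_by_session(spans: List[Span]) -> Dict[str, List[str]]:
    """Map each session to the sorted IDs of the traces it contains."""
    sessions: Dict[str, set] = defaultdict(set)
    for s in spans:
        sessions[s.session_id].add(s.trace_id)
    return {sid: sorted(tids) for sid, tids in sessions.items()}


spans = [
    Span("t1", "s1", "agent", 0, 1000),
    Span("t1", "s1", "llm_call", 50, 650, parent="agent"),
    Span("t1", "s1", "tool:search", 700, 950, parent="agent"),
    Span("t2", "s1", "agent", 2000, 2300),
    Span("t3", "s2", "agent", 0, 100),
]
trace = [s for s in spans if s.trace_id == "t1"]
print("\n".join(waterfall(trace)))
print("bottleneck:", bottleneck(trace).name)
print(group_by_session(spans))
```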

Use Cases of Fallom

Monitoring and Optimizing Production AI Agents

Engineering teams use Fallom to monitor live AI agents handling tasks like customer support, data analysis, or content generation. By observing real-time traces, they can instantly detect failures in tool calls, identify latency spikes in specific workflow steps, and validate that agents are operating as intended, ensuring high reliability and a positive user experience for AI-powered features.
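
Detecting tool-call failures and latency spikes from trace data can be as simple as a failure rate and a percentile check against a baseline. The sketch below is a generic illustration, not Fallom's alerting logic; the step names, baseline values, and the 1.5x threshold are hypothetical assumptions.

```python
from typing import Dict, List


def p95(values: List[float]) -> float:
    """95th percentile by the nearest-rank method; `values` must be non-empty."""
    ordered = sorted(values)
    rank = -(-95 * len(ordered) // 100)  # ceil(0.95 * n) using integer arithmetic
    return ordered[rank - 1]


def latency_spikes(current: Dict[str, List[float]], baseline_p95: Dict[str, float],
                   factor: float = 1.5) -> List[str]:
    """Steps whose current p95 latency exceeds `factor` times their baseline p95."""
    return [step for step, values in current.items()
            if values and p95(values) > factor * baseline_p95[step]]


def tool_failure_rate(tool_calls: List[Dict]) -> float:
    """Fraction of tool calls that did not succeed."""
    if not tool_calls:
        return 0.0
    return sum(1 for c in tool_calls if not c["ok"]) / len(tool_calls)


current = {"llm_call": [380.0, 420.0, 410.0, 395.0], "tool:search": [48.0, 52.0, 50.0]}
baseline = {"llm_call": 200.0, "tool:search": 60.0}
print(latency_spikes(current, baseline))
print(tool_failure_rate([{"ok": True}, {"ok": False}, {"ok": True}, {"ok": True}]))
```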

Controlling and Attributing AI Infrastructure Spend

Finance and engineering leaders leverage Fallom's cost attribution dashboards to track monthly spend per model, team, or product feature. This visibility allows for accurate budgeting, prevents cost overruns, and facilitates internal chargeback models. Teams can identify underperforming or expensive model calls and optimize prompts or switch models to achieve better cost-efficiency at scale.

Ensuring Compliance and Preparing for AI Audits

Companies in finance, healthcare, and other regulated sectors use Fallom to build defensible audit trails. The platform logs every interaction, records which model version generated each output, and manages user consent, producing the documentation needed to demonstrate compliance with evolving AI regulations and internal ethical AI policies during security and legal reviews.

Improving AI Product Development with A/B Testing

Product and ML teams employ Fallom to safely roll out new prompts or upgraded models. They can run A/B tests, splitting traffic between different configurations while comparing key metrics like cost, latency, and quality scores (via integrated evals) directly within the platform. This data-driven approach allows for confident, incremental improvements to AI features.
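
A/B tests like this need a stable way to split traffic and a way to compare metrics per variant. Below is a minimal, generic sketch using deterministic hashing for assignment and per-variant means for comparison; the experiment name, result fields, and quality scores are hypothetical, and this is not Fallom's experiment API.

```python
import hashlib
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically send a fraction `split` of users to variant A, the rest to B."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # stable bucket in 0..9999
    return "A" if bucket < split * 10_000 else "B"


def summarize(results: List[Dict]) -> Dict[str, Dict[str, float]]:
    """Mean cost, latency, and quality score per variant."""
    by_variant: Dict[str, List[Dict]] = defaultdict(list)
    for r in results:
        by_variant[r["variant"]].append(r)
    return {
        v: {m: mean(r[m] for r in rows) for m in ("cost_usd", "latency_ms", "quality")}
        for v, rows in by_variant.items()
    }


users = [f"user-{i}" for i in range(1000)]
variants = [assign_variant(u, "prompt-v2") for u in users]
print(variants.count("A"), variants.count("B"))

results = [
    {"variant": "A", "cost_usd": 0.020, "latency_ms": 100.0, "quality": 0.75},
    {"variant": "A", "cost_usd": 0.030, "latency_ms": 300.0, "quality": 0.25},
    {"variant": "B", "cost_usd": 0.010, "latency_ms": 150.0, "quality": 1.0},
]
print(summarize(results))
```

Hashing the experiment name together with the user ID keeps each user in the same variant across requests while letting different experiments split users independently.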

Frequently Asked Questions

How quickly can I integrate Fallom into my application?

Integration is designed to be fast. With our OpenTelemetry-native SDK, most teams can instrument their applications and start seeing traces in the Fallom dashboard in under 5 minutes. There's no need to change your LLM provider or rewrite your application logic; you simply add our lightweight SDK.

Does Fallom support privacy-sensitive applications?

Absolutely. Fallom offers a Privacy Mode for handling sensitive data. You can configure it to redact specific data fields, log only metadata (such as token counts and latency, without the prompt and response content), or set a different privacy level for each environment (development, staging, and production).
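
The three options described above (field redaction, metadata-only logging, and per-environment levels) can be sketched as a single filtering function. This is a hypothetical illustration in plain Python: the level names, field names, and the email-masking regex are assumptions, not Fallom configuration keys.

```python
import re
from typing import Any, Dict, Iterable

# Hypothetical settings; the level names are illustrative, not Fallom configuration keys.
PRIVACY_LEVELS = {"development": "full", "staging": "redacted", "production": "metadata_only"}
METADATA_KEYS = {"model", "input_tokens", "output_tokens", "latency_ms"}
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def apply_privacy(record: Dict[str, Any], environment: str,
                  redact_fields: Iterable[str] = ("user_email",)) -> Dict[str, Any]:
    """Return the version of a trace record that may be stored in this environment."""
    level = PRIVACY_LEVELS[environment]
    if level == "full":
        return dict(record)
    if level == "metadata_only":
        # Keep token counts and latency; drop prompt and response content entirely.
        return {k: v for k, v in record.items() if k in METADATA_KEYS}
    # "redacted": blank out configured fields and mask emails inside free text.
    out = dict(record)
    for name in redact_fields:
        if name in out:
            out[name] = "[REDACTED]"
    for name in ("prompt", "output"):
        if isinstance(out.get(name), str):
            out[name] = EMAIL.sub("[EMAIL]", out[name])
    return out


record = {"model": "example-model", "prompt": "Contact jane@example.com", "output": "ok",
          "input_tokens": 10, "output_tokens": 2, "latency_ms": 120.0,
          "user_email": "jane@example.com"}
for env in ("development", "staging", "production"):
    print(env, apply_privacy(record, env))
```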

Which AI models and providers does Fallom work with?

Fallom is provider-agnostic and works with every major LLM provider, including OpenAI (GPT-4, GPT-4o, etc.), Anthropic (Claude), Google (Gemini), and open-source models. Our OpenTelemetry-based approach ensures you get unified observability across your entire AI stack without being locked into a single vendor's ecosystem.

Can I use Fallom for testing and evaluation before production?

Yes. Fallom includes capabilities for running evaluations on LLM outputs, allowing you to track metrics like accuracy, relevance, and hallucination rates. This is crucial for catching regressions during development and staging, enabling you to compare the performance of different prompts or model versions before a full production deployment.
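
As a rough illustration of catching a regression before deployment, the sketch below scores two prompt versions against a small labelled dataset and flags the candidate if it drops below the baseline. It uses exact-match accuracy as a simple stand-in for richer metrics like relevance or hallucination rate; the dataset, outputs, and tolerance are hypothetical, and this is not Fallom's evaluation API.

```python
from typing import Callable, List, Tuple


def exact_match(expected: str, actual: str) -> float:
    """1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return 1.0 if expected.strip().lower() == actual.strip().lower() else 0.0


def accuracy(dataset: List[Tuple[str, str]], outputs: List[str],
             scorer: Callable[[str, str], float] = exact_match) -> float:
    """Mean score of one prompt or model version's outputs over a labelled dataset."""
    if len(dataset) != len(outputs):
        raise ValueError("need exactly one output per dataset example")
    return sum(scorer(expected, out) for (_, expected), out in zip(dataset, outputs)) / len(dataset)


def is_regression(baseline: float, candidate: float, tolerance: float = 0.02) -> bool:
    """Flag the candidate if it scores more than `tolerance` below the baseline."""
    return candidate < baseline - tolerance


dataset = [("Capital of France?", "Paris"), ("2 + 2?", "4"),
           ("Largest planet?", "Jupiter"), ("Author of Hamlet?", "Shakespeare")]
baseline_outputs = ["Paris", "4", "Jupiter", "Marlowe"]
candidate_outputs = ["paris", "5", "Saturn", "Shakespeare"]
base, cand = accuracy(dataset, baseline_outputs), accuracy(dataset, candidate_outputs)
print(base, cand, is_regression(base, cand))
```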
