For all the rightful criticism that C gets, GLib does manage to alleviate at least some of it. If we can’t use a better language, we should at least make use of all the tools that C with GLib gives us.

This post looks at the topic of ownership, and also how it applies to libdex fibers.

Ownership

In normal C usage, it is often not obvious at all if an object that gets returned from a function (either as a real return value or as an out-parameter) is owned by the caller or the callee:

MyThing *thing = my_thing_new ();

If thing is owned by the caller, then the caller also has to release the object thing. If it is owned by the callee, then the lifetime of the object thing has to be checked against its usage.

At this point, the documentation is usually consulted in the hope that the developer of my_thing_new documented it somehow. With gobject-introspection, this documentation is standardized and you can usually read one of these:

The caller of the function takes ownership of the data, and is responsible for freeing it.

The returned data is owned by the instance.
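Those two sentences are generated from transfer annotations in the function's documentation comment. As a rough sketch, this is how such annotations could look on a small, purely hypothetical MyThing API written in plain C (the struct layout and my_thing_get_name are assumptions made for illustration, not part of any real library):

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical type, used only to illustrate the annotations. */
typedef struct {
  char name[16];
} MyThing;

/**
 * my_thing_new:
 *
 * Returns: (transfer full): a new #MyThing, release with my_thing_release()
 */
MyThing *
my_thing_new (void)
{
  MyThing *thing = calloc (1, sizeof *thing);

  if (thing != NULL)
    strcpy (thing->name, "default");

  return thing;
}

/**
 * my_thing_get_name:
 * @thing: a #MyThing
 *
 * Returns: (transfer none): the name, owned by @thing
 */
const char *
my_thing_get_name (MyThing *thing)
{
  return thing->name;
}

/**
 * my_thing_release:
 * @thing: (transfer full): the #MyThing to release
 */
void
my_thing_release (MyThing *thing)
{
  free (thing);
}
```

With (transfer full) the caller has to release the result; with (transfer none) the returned pointer is only valid for as long as the owning instance is.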

If thing is owned by the caller, the caller now has to release the object or transfer ownership to another place. In normal C usage, both of those are hard issues. For releasing the object, one of two techniques is usually employed:

- single exit

    MyThing *thing = my_thing_new ();
    gboolean c;

    c = my_thing_a (thing);
    if (c)
      c = my_thing_b (thing);
    if (c)
      my_thing_c (thing);
    my_thing_release (thing); /* release thing */

- goto cleanup

    MyThing *thing = my_thing_new ();

    if (!my_thing_a (thing))
      goto out;
    if (!my_thing_b (thing))
      goto out;
    my_thing_c (thing);

    out:
    my_thing_release (thing); /* release thing */

Ownership Transfer

GLib provides automatic cleanup helpers (g_auto, g_autoptr, g_autofd, g_autolist). A macro (e.g. G_DEFINE_AUTOPTR_CLEANUP_FUNC) associates the function that releases the object with the type of the object. When these helpers are used, the single exit and goto cleanup approaches become unnecessary:

    g_autoptr(MyThing) thing = my_thing_new ();

    if (!my_thing_a (thing))
      return;
    if (!my_thing_b (thing))
      return;
    my_thing_c (thing);

The nice side effect of using automatic cleanup is that, for a reader of the code, the g_auto helpers become a definite mark that the variable they are applied to owns the object!
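To make the mechanism less magical: on GCC and Clang, the g_auto helpers build on the cleanup variable attribute. The following is a minimal sketch of that idea in plain C, not GLib's actual implementation. The Thing type, the auto_thing macro and the released_count counter are all made up for illustration.

```c
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical type standing in for MyThing. */
typedef struct {
  int id;
} Thing;

/* Counts releases so the effect of the cleanup can be observed. */
static int released_count = 0;

static Thing *
thing_new (int id)
{
  Thing *thing = malloc (sizeof *thing);

  if (thing != NULL)
    thing->id = id;

  return thing;
}

static void
thing_release (Thing *thing)
{
  if (thing == NULL)
    return;

  free (thing);
  released_count++;
}

/* The cleanup attribute passes a pointer to the variable going out of scope. */
static void
thing_autoptr_cleanup (Thing **thing_ptr)
{
  thing_release (*thing_ptr);
}

/* Ties the release function to the declaration, similar in spirit to g_autoptr. */
#define auto_thing __attribute__ ((cleanup (thing_autoptr_cleanup))) Thing *

static bool
use_thing (bool fail_early)
{
  auto_thing thing = thing_new (1);

  if (fail_early)
    return false; /* thing is released on this path... */

  return thing != NULL; /* ...and on this one */
}
```

Every return path of use_thing releases the object exactly once, without a single exit or a goto label.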

If we have a function which takes ownership over an object passed in (i.e. the called function will eventually release the resource itself) then in normal C usage this is indistinguishable from a function call which does not take ownership:

    MyThing *thing = my_thing_new ();
    my_thing_finish_thing (thing);

If my_thing_finish_thing takes ownership, then the code is correct; otherwise it leaks the object thing.

On the other hand, if automatic cleanup is used, there is only one correct way to handle either case.

A function call which does not take ownership is just a normal function call and the variable thing is not modified, so it keeps ownership:

    g_autoptr(MyThing) thing = my_thing_new ();
    my_thing_finish_thing (thing);

A function call which takes ownership on the other hand has to unset the variable thing to remove ownership from the variable and ensure the cleanup function is not called. This is done by “stealing” the object from the variable:

    g_autoptr(MyThing) thing = my_thing_new ();
    my_thing_finish_thing (g_steal_pointer (&thing));

By using g_steal_pointer and friends, the ownership transfer becomes obvious in the code, just like ownership of an object by a variable becomes obvious with g_autoptr.
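Stealing itself is a tiny operation: hand out the pointer and set the variable to NULL, so the automatic cleanup has nothing left to release. Here is a hand-rolled sketch of that in plain C, again with hypothetical names (Thing, thing_steal, thing_finish, auto_thing) rather than the real GLib macros:

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical type standing in for MyThing. */
typedef struct {
  int id;
} Thing;

static int released_count = 0;

static void
thing_release (Thing *thing)
{
  if (thing == NULL)
    return;

  free (thing);
  released_count++;
}

static void
thing_autoptr_cleanup (Thing **thing_ptr)
{
  thing_release (*thing_ptr);
}

#define auto_thing __attribute__ ((cleanup (thing_autoptr_cleanup))) Thing *

/* Hand-rolled equivalent of g_steal_pointer for this one type:
 * return the pointer and leave NULL behind in the variable. */
static Thing *
thing_steal (Thing **thing_ptr)
{
  Thing *stolen = *thing_ptr;

  *thing_ptr = NULL;
  return stolen;
}

/* Takes ownership of thing and releases it. */
static void
thing_finish (Thing *thing)
{
  thing_release (thing);
}

static void
transfer_example (void)
{
  auto_thing thing = malloc (sizeof (Thing));

  thing_finish (thing_steal (&thing));
  /* thing is NULL now, so the cleanup at scope exit does nothing */
}
```

Passing thing without stealing it would release the object twice here: once in thing_finish and once in the cleanup.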

Ownership Annotations

Now you could argue that the g_autoptr and g_steal_pointer combination without any conditional early exit is functionally exactly the same as the example with the normal C usage, and you would be right. We also need more code and it adds a tiny bit of runtime overhead.

I would still argue that it helps readers of the code immensely, which makes it an acceptable trade-off in almost all situations. As long as you haven’t profiled and determined the overhead to be problematic, you should always use g_auto and g_steal!

The way I like to look at g_auto and g_steal is that they are not only a mechanism to release objects and unset variables, but also annotations about ownership and ownership transfers.

Scoping

One pattern that is still fairly common in older code using GLib is the declaration of all variables at the top of a function:

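    static void
    foobar (void)
    {
      MyThing *thing = NULL;
      size_t i;

      for (i = 0; i < len; i++) {
        /* g_clear_pointer needs the release function as second argument */
        g_clear_pointer (&thing, my_thing_release);
        thing = my_thing_new (i);
        my_thing_bar (thing);
      }

      /* release the thing from the last iteration */
      g_clear_pointer (&thing, my_thing_release);
    }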

We can still avoid mixing declarations and code; we just do it at the granularity of natural scopes rather than of the whole function:

    static void
    foobar (void)
    {
      for (size_t i = 0; i < len; i++) {
        g_autoptr(MyThing) thing = NULL;

        thing = my_thing_new (i);
        my_thing_bar (thing);
      }
    }

Similarly, we can introduce our own scopes to limit how long variables, and thus objects, are alive:

    static void
    foobar (void)
    {
      g_autoptr(MyOtherThing) other = NULL;

      {
        /* we only need `thing` to get `other` */
        g_autoptr(MyThing) thing = NULL;

        thing = my_thing_new ();
        other = my_thing_bar (thing);
      }

      my_other_thing_bar (other);
    }
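To see when exactly a scoped variable is released, here is a small plain C sketch that records the order of events. It uses the GCC/Clang cleanup attribute directly on a stack-allocated struct (closer to g_auto than to g_autoptr). Resource, auto_resource and event_log are hypothetical names for this illustration only.

```c
#include <string.h>

/* Hypothetical stand-in for a type with a cleanup function. */
typedef struct {
  const char *name;
} Resource;

/* Records what happened, in order, as "action name;" entries. */
static char event_log[128];

static void
log_event (const char *action, const Resource *resource)
{
  strncat (event_log, action, sizeof event_log - strlen (event_log) - 1);
  strncat (event_log, resource->name, sizeof event_log - strlen (event_log) - 1);
  strncat (event_log, ";", sizeof event_log - strlen (event_log) - 1);
}

/* Called automatically when an auto_resource variable goes out of scope. */
static void
resource_cleanup (Resource *resource)
{
  log_event ("release ", resource);
}

#define auto_resource __attribute__ ((cleanup (resource_cleanup))) Resource

static void
scoped_lifetimes (void)
{
  auto_resource other = { "other" };

  {
    auto_resource thing = { "thing" };

    log_event ("use ", &thing);
  } /* thing is released here, before other is used */

  log_event ("use ", &other);
} /* other is released here */
```

After one call to scoped_lifetimes, event_log reads "use thing;release thing;use other;release other;", which shows that the inner scope ends the lifetime of thing before the rest of the function runs.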

Fibers

When somewhat complex asynchronous patterns are required in a piece of GLib software, it becomes extremely advantageous to use libdex and the system of fibers it provides. They allow writing what looks like synchronous code, which suspends on await points:

    g_autoptr(MyThing) thing = NULL;

    thing = dex_await_object (my_thing_new_future (), NULL);

If this piece of code doesn’t make much sense to you, I suggest reading the libdex Additional Documentation.

Unfortunately, the await points can also be a bit of a pitfall: the call to dex_await is semantically like calling g_main_loop_run on the thread default main context. If you use an object that you do not own across an await point, the lifetime of that object becomes critical. Often the lifetime is bound to another object which you might not control in that particular function. In that case, the pointer can point to an already released object when dex_await returns:

    static DexFuture *
    foobar (gpointer user_data)
    {
      /* foo is owned by the context, so we do not use an autoptr */
      MyFoo *foo = context_get_foo ();
      g_autoptr(MyOtherThing) other = NULL;
      g_autoptr(MyThing) thing = NULL;

      thing = my_thing_new ();

      /* side effect of running g_main_loop_run */
      other = dex_await_object (my_thing_bar (thing, foo), NULL);
      if (!other)
        return dex_future_new_false ();

      /* foo here is not owned, and depending on the lifetime
       * (context might recreate foo in some circumstances),
       * foo might point to an already released object
       */
      dex_await (my_other_thing_bar (other, foo), NULL);

      return dex_future_new_true ();
    }

If we assume that context_get_foo returns a different object after the main loop has run, and the old object has been released in the meantime, the code above will not work.

The fix is simple: own the objects that are being used across await points, or re-acquire them after the await point. The correct choice depends on which semantics are required.

We can also combine this with improved scoping to only keep the objects alive for as long as required. Unnecessarily keeping objects alive across await points can keep resource usage high and might have unintended consequences.

    static DexFuture *
    foobar (gpointer user_data)
    {
      /* we now own foo */
      g_autoptr(MyFoo) foo = g_object_ref (context_get_foo ());
      g_autoptr(MyOtherThing) other = NULL;

      {
        g_autoptr(MyThing) thing = NULL;

        thing = my_thing_new ();

        /* side effect of running g_main_loop_run */
        other = dex_await_object (my_thing_bar (thing, foo), NULL);
        if (!other)
          return dex_future_new_false ();
      }

      /* we own foo, so this always points to a valid object */
      dex_await (my_other_thing_bar (other, foo), NULL);

      return dex_future_new_true ();
    }

Alternatively, if the semantics call for whatever foo the context currently has, re-acquire it after the await point:

    static DexFuture *
    foobar (gpointer user_data)
    {
      g_autoptr(MyOtherThing) other = NULL;

      {
        /* We do not own foo, but we only use it before an
         * await point.
         * The scope ensures it is not being used afterwards.
         */
        MyFoo *foo = context_get_foo ();
        g_autoptr(MyThing) thing = NULL;

        thing = my_thing_new ();

        /* side effect of running g_main_loop_run */
        other = dex_await_object (my_thing_bar (thing, foo), NULL);
        if (!other)
          return dex_future_new_false ();
      }

      {
        /* re-acquire foo after the await point */
        MyFoo *foo = context_get_foo ();

        dex_await (my_other_thing_bar (other, foo), NULL);
      }

      return dex_future_new_true ();
    }

One scenario where re-acquiring an object is necessary is a worker fiber which operates continuously until the object gets disposed. If this fiber owns the object (i.e. holds a reference to it), the object will never get disposed: the fiber only finishes once the object is disposed, and the object can only be disposed once the fiber releases its reference. The naive code also suspiciously doesn’t have any exit condition.

    static DexFuture *
    foobar (gpointer user_data)
    {
      g_autoptr(MyThing) self = g_object_ref (MY_THING (user_data));

      for (;;)
        {
          g_autoptr(GBytes) bytes = NULL;

          bytes = dex_await_boxed (my_other_thing_bar (other, foo), NULL);
          my_thing_write_bytes (self, bytes);
        }
    }

So instead of owning the object, we need a way to re-acquire it. A weak-ref is perfect for this.

    static DexFuture *
    foobar (gpointer user_data)
    {
      /* g_weak_ref_init in the caller somewhere */
      GWeakRef *self_wr = user_data;

      for (;;)
        {
          g_autoptr(GBytes) bytes = NULL;

          bytes = dex_await_boxed (my_other_thing_bar (other, foo), NULL);

          {
            /* self_wr already is a GWeakRef *, so pass it as is;
             * we get a strong reference, or NULL if the object is gone */
            g_autoptr(MyThing) self = g_weak_ref_get (self_wr);

            if (!self)
              return dex_future_new_true ();

            my_thing_write_bytes (self, bytes);
          }
        }
    }

Conclusion

- Always use g_auto/g_steal helpers to mark ownership and ownership transfers (exceptions do apply)

- Use scopes to limit the lifetime of objects

- In fibers, always own objects you need across await points, or re-acquire them

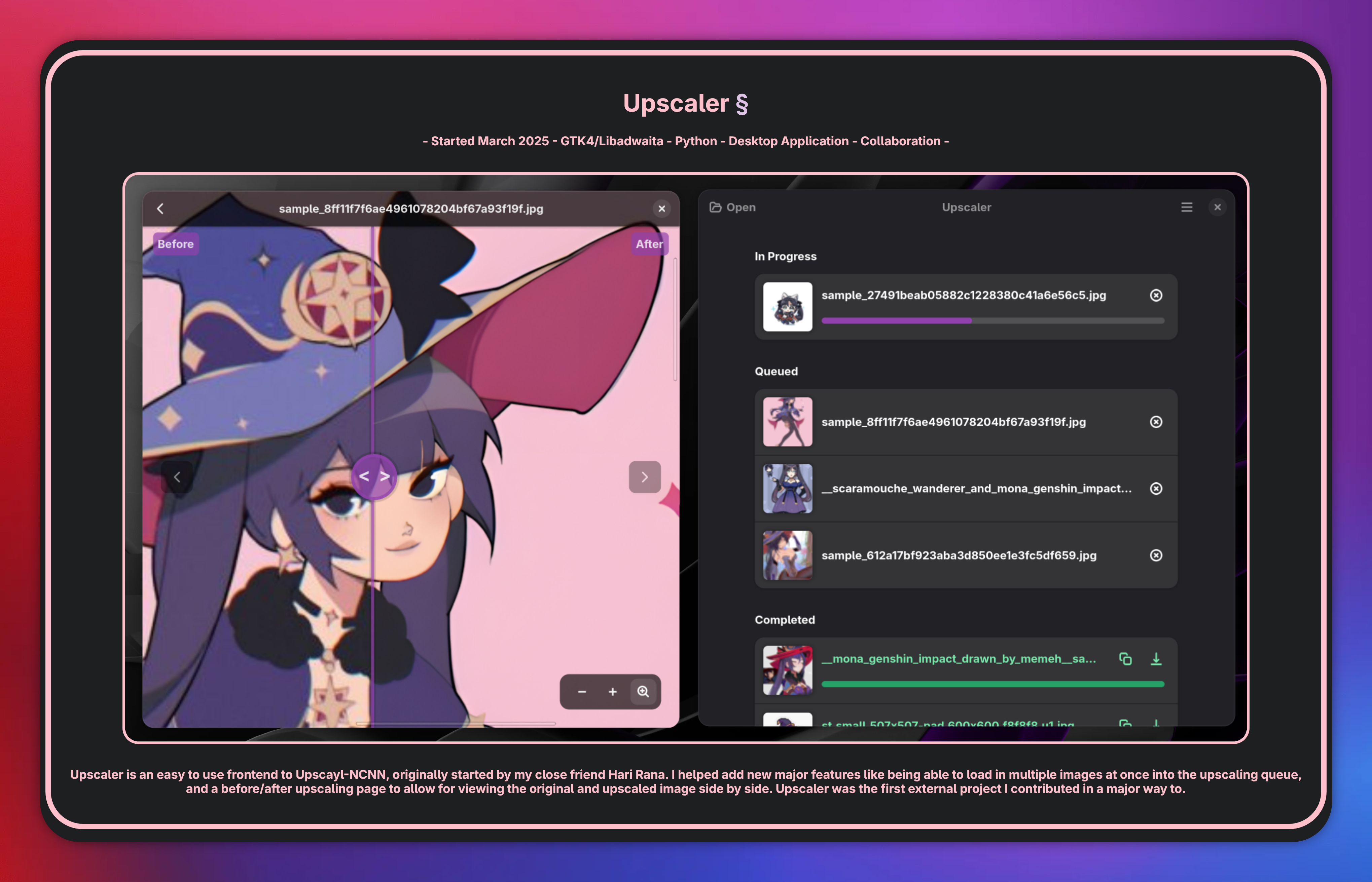

Coding is fun and making computers do cool stuff can be very addictive. That story starts long before 2026 as well. Have you heard of the demoscene?

Coding is fun and making computers do cool stuff can be very addictive. That story starts long before 2026 as well. Have you heard of the demoscene?