Overview

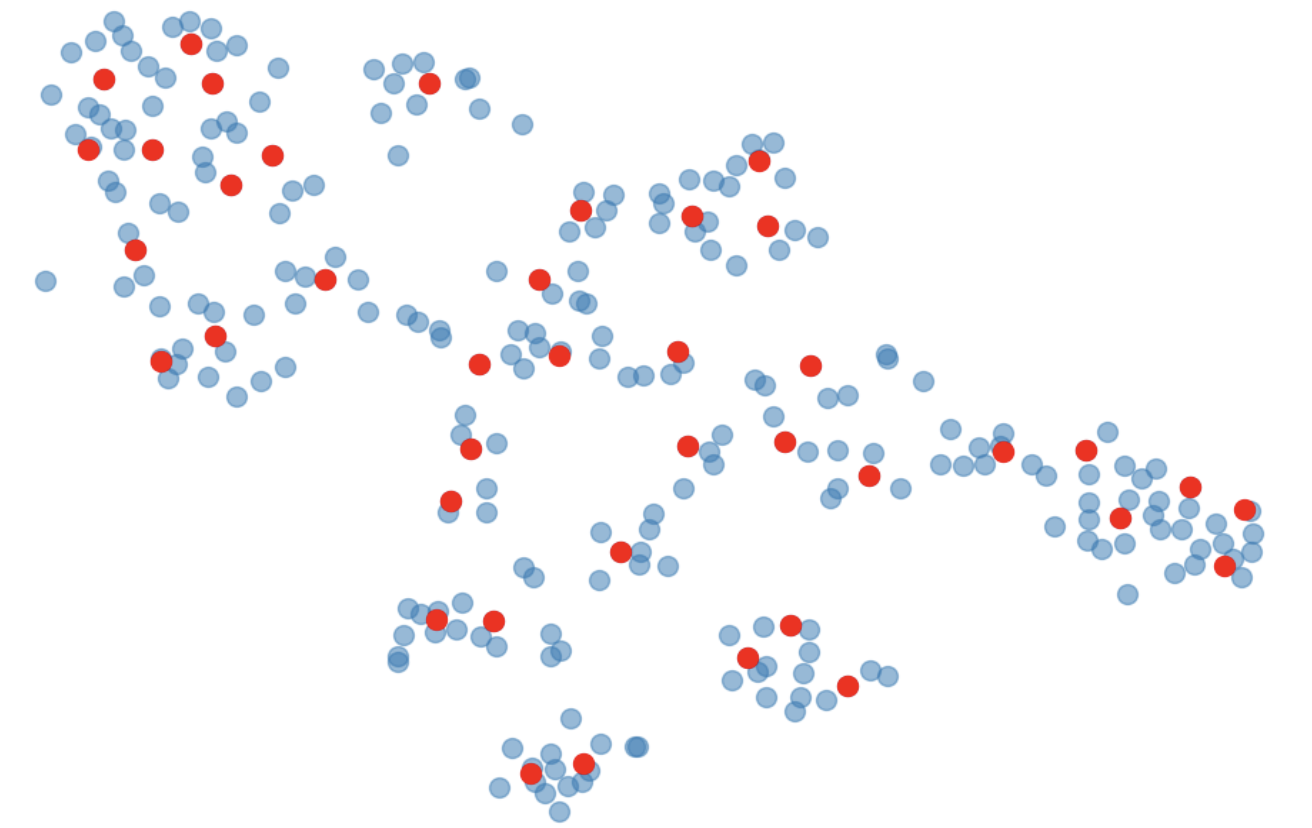

AI is no longer a research curiosity. It is reshaping how we live and work. To fully exploit its benefits, we must address critical gaps in trustworthiness.

Current foundational models like LLMs have critical trustworthiness problems: they hallucinate false information, fail at continual learning, resist knowledge editing (making GDPR compliance impractical), leak private information embedded in parameters, and require prohibitive compute for training and personalisation. These issues are blocking the widespread adoption of AI and the productivity revolution it promises.

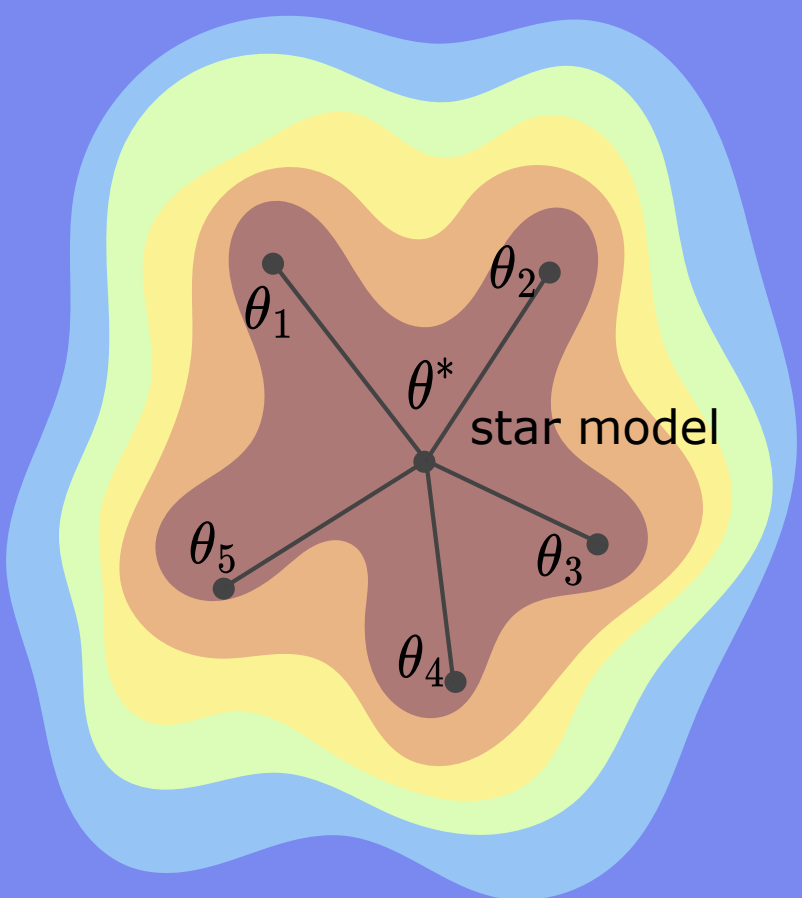

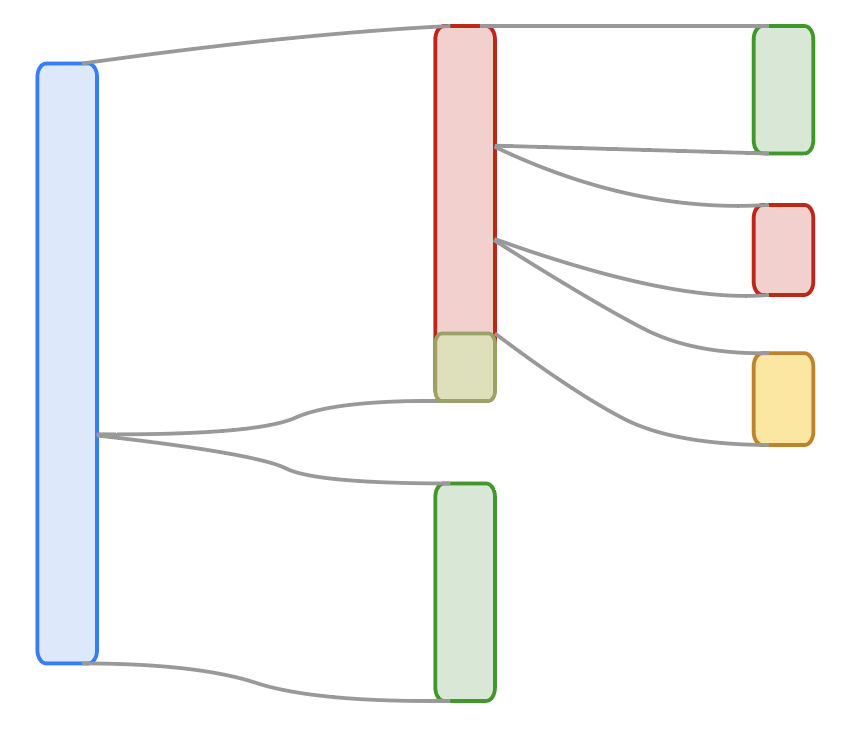

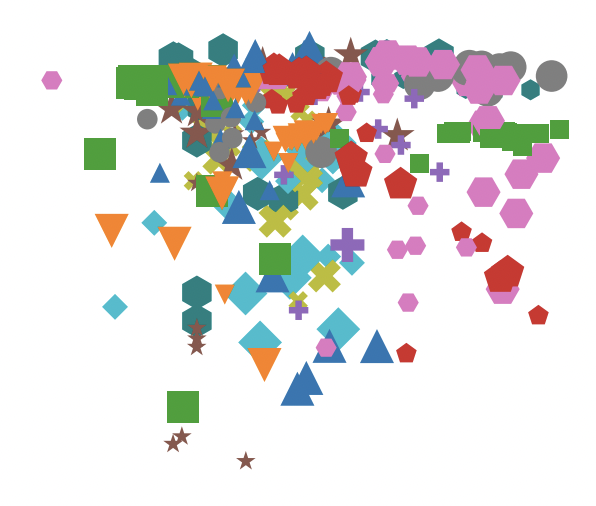

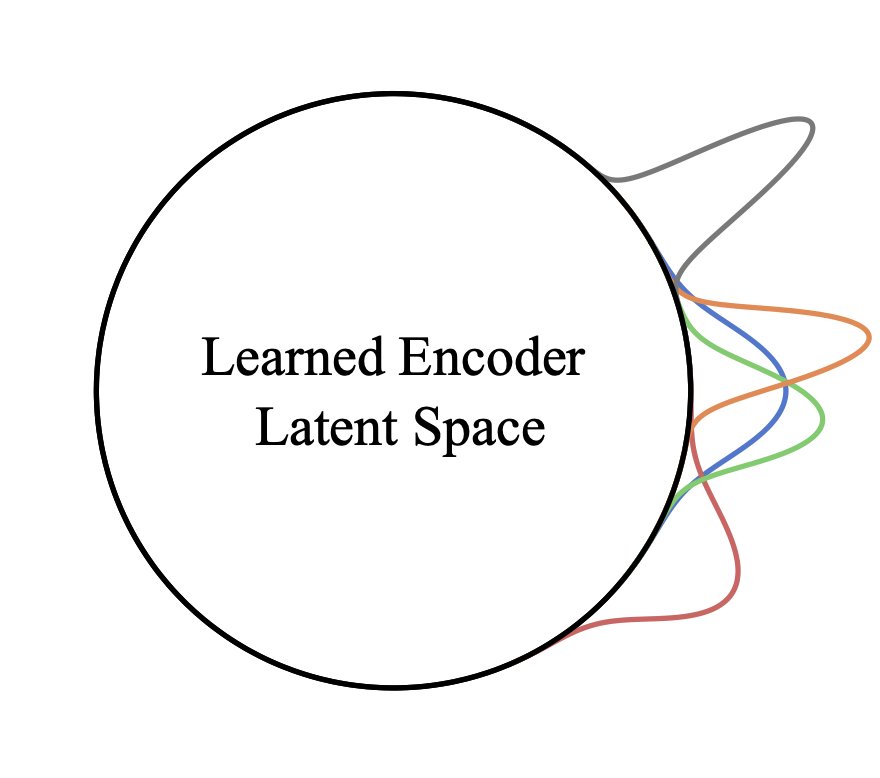

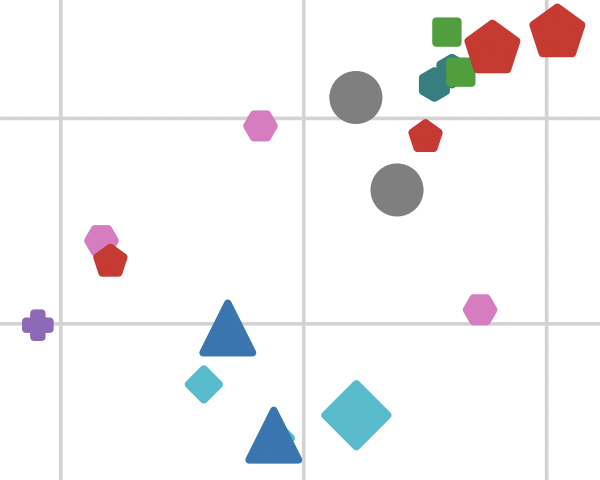

Our approach: Knowledge-Intelligence Separation. Just as the code-data separation in the 1960s enabled the modern software industry, we believe this separation is the key to unlocking AI’s full potential. When knowledge is stored in interpretable, editable external modules while intelligence (reasoning, generalisation) remains in the model, we enable faster customisation, training data attribution by design, and knowledge editing and unlearning .

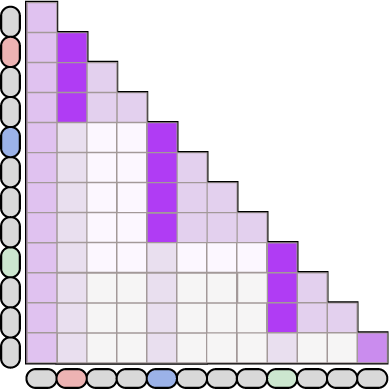

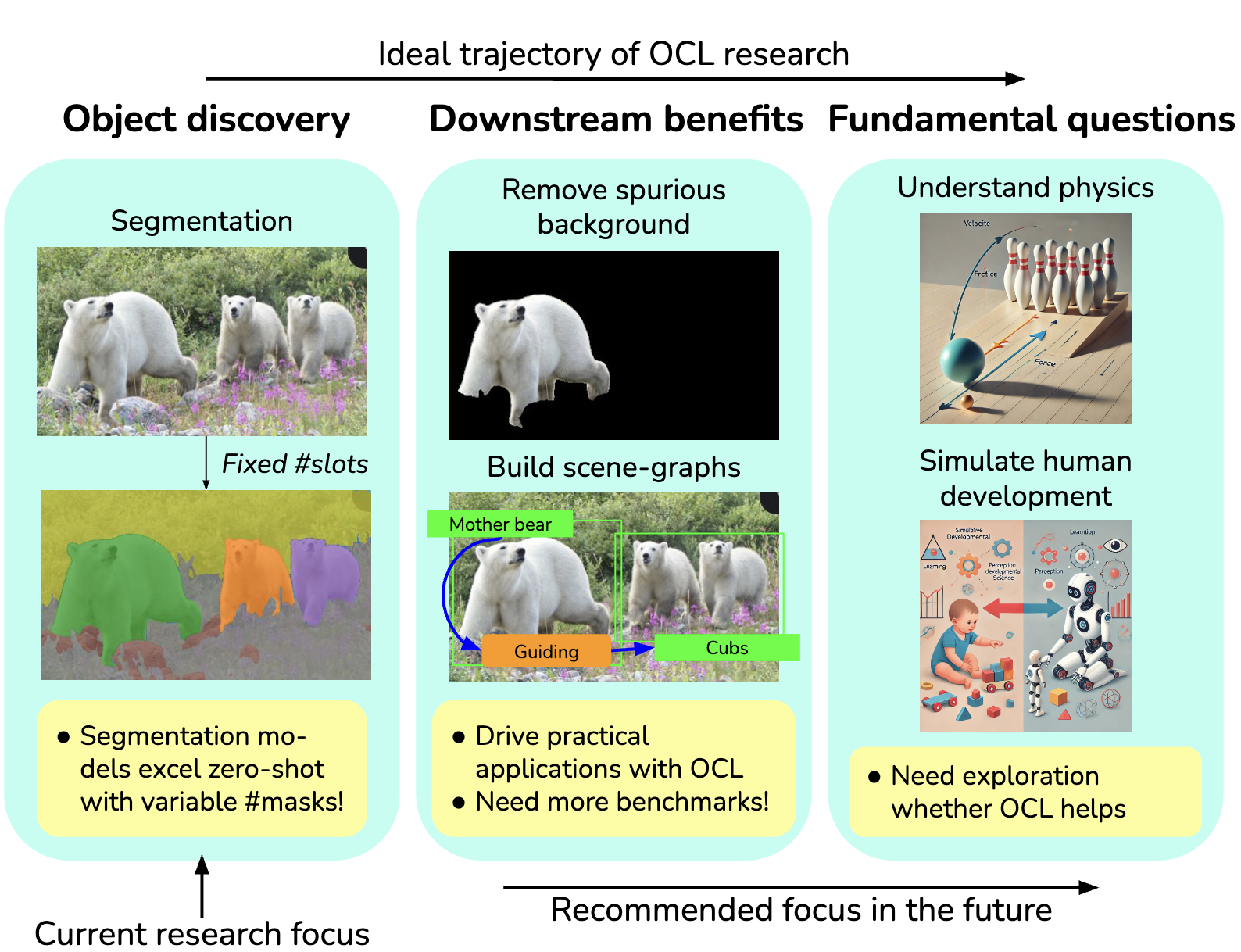

Our work spans a range of interconnected areas:

- Information retrieval and search, vector databases, RAG

- Memory-augmented architectures

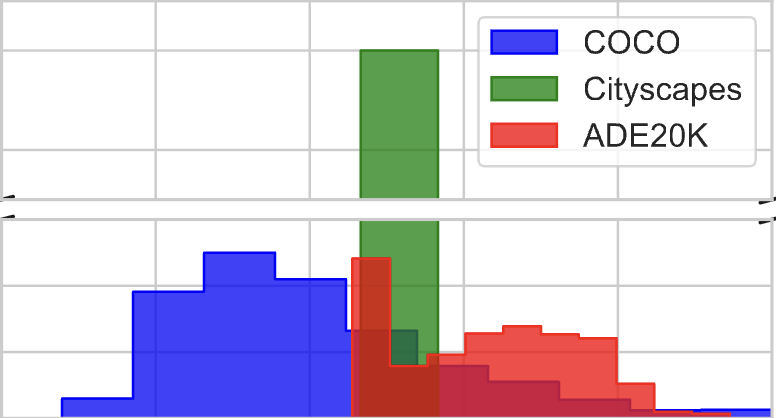

- Multimodal models

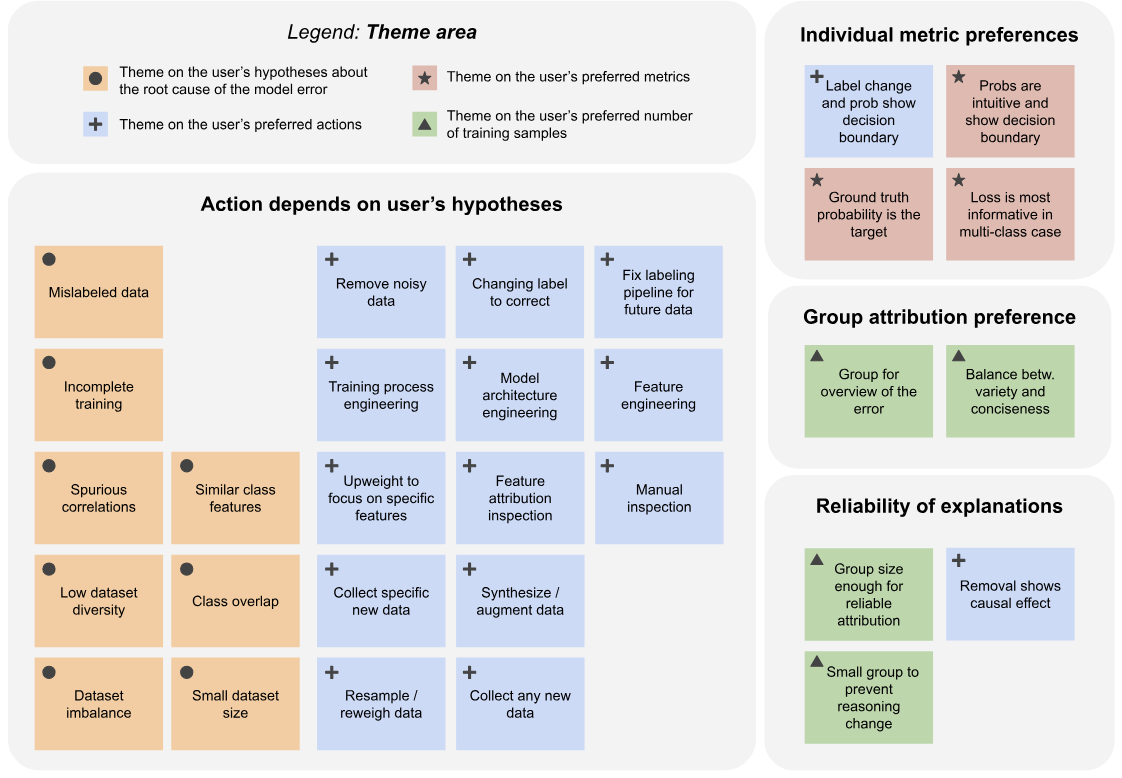

- Human-AI interaction

- Expert-in-the-loop systems

- Agentic AI

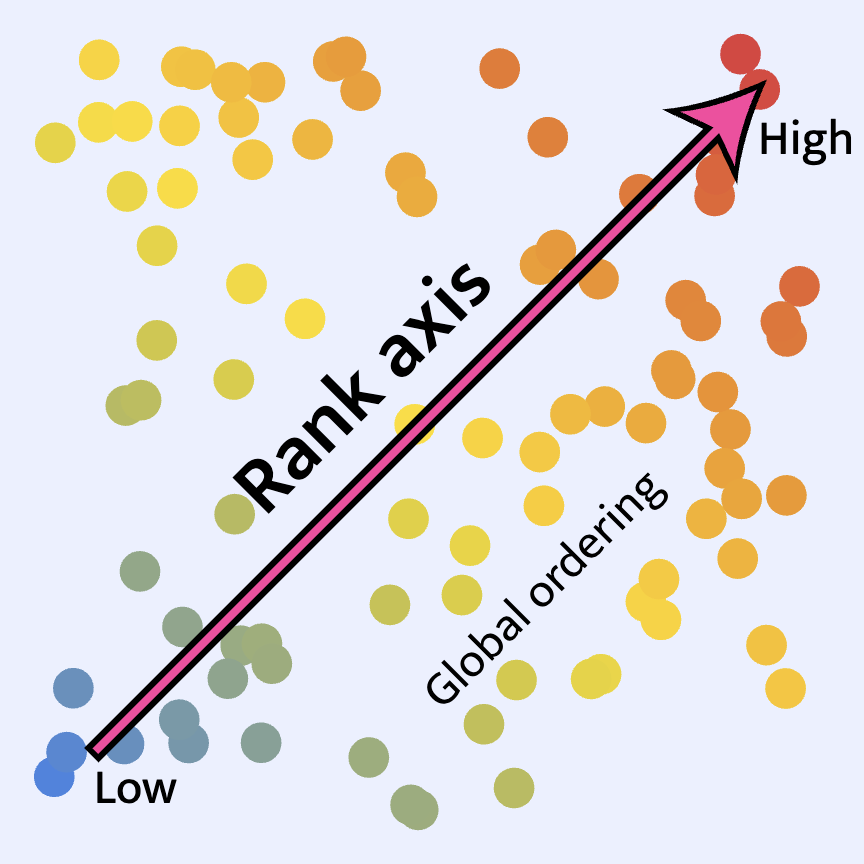

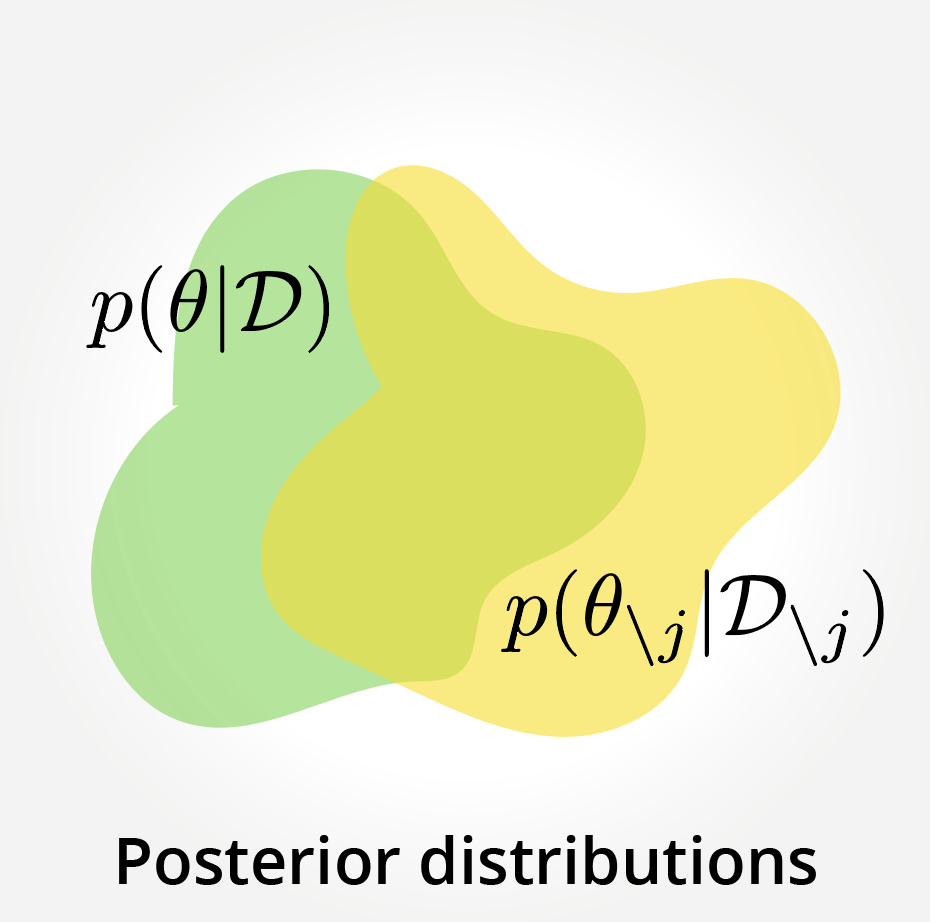

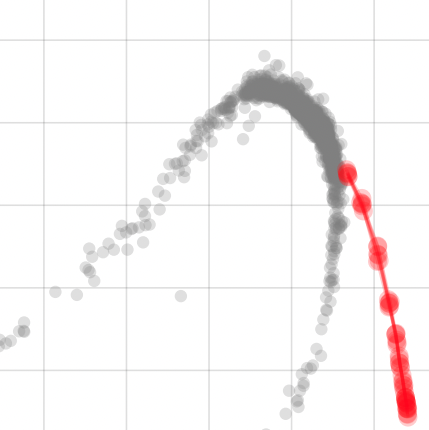

- Training data attribution

- Privacy and security

- Data and model ownership

We are not alone in this effort. Many research labs worldwide contribute to Trustworthy AI. Our group finds its uniqueness by striving for working solutions that are widely applicable and can be deployed at scale. We thus name our group Scalable Trustworthy AI. For impact at scale, we commit ourselves to the following principles:

- Simple is better than complex. Scalability and applicability are inversely correlated with complexity.

- Understand and then solve. You can only solve a problem when you understand it.

- Do not follow a dead end. When an approach is fundamentally limited in the long run, don’t take it.

For prospective students: You might be interested in our internal curriculum and guidelines for a PhD program: Principles for a PhD Program.