-

Notifications

You must be signed in to change notification settings - Fork 5.3k

SocketAsyncContext.Unix: remove Lock from IsReady #36705

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Tagging subscribers to this area: @dotnet/ncl |

| if (_tail == null) | ||

| { | ||

| _state = QueueState.Ready; | ||

| _sequenceNumber++; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This change is unrelated. All code paths that set _state to QueueState.Ready increment _sequenceNumber. I'm not sure why it is not done at this location, so I've added it.

src/libraries/System.Net.Sockets/src/System/Net/Sockets/SocketAsyncContext.Unix.cs

Show resolved

Hide resolved

|

@adamsitnik I hope to see at least the 3k rps you measured in #36214. And I hope for some more because this is now used for sends and receives. |

src/libraries/System.Net.Sockets/src/System/Net/Sockets/SocketAsyncContext.Unix.cs

Show resolved

Hide resolved

|

@adamsitnik for benchmarking: System.Net.Sockets.dll.tar.gz |

|

The CI fails with following error: |

|

@adamsitnik not sure if you saw the change to make CI pass? Here is the dll: System.Net.Sockets.dll.tar.gz |

|

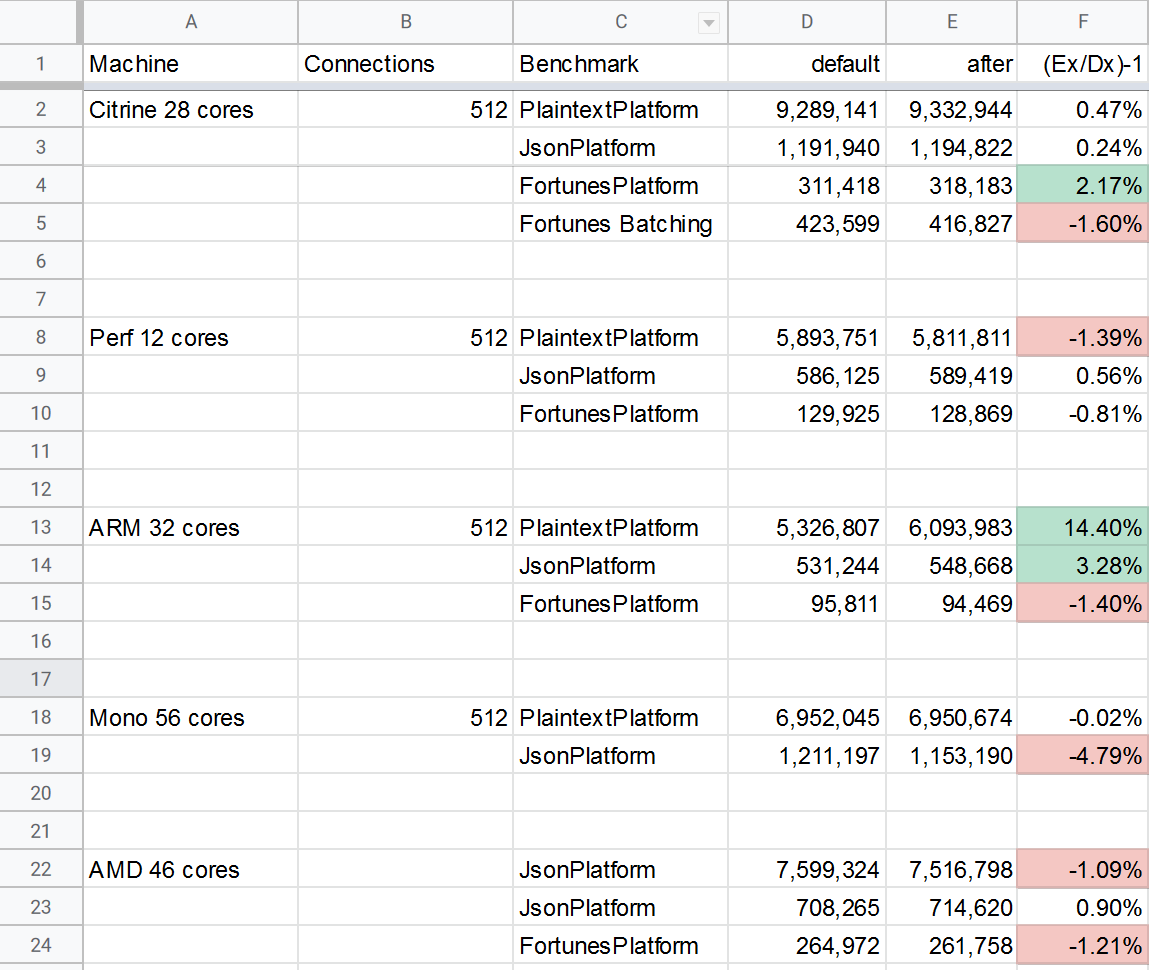

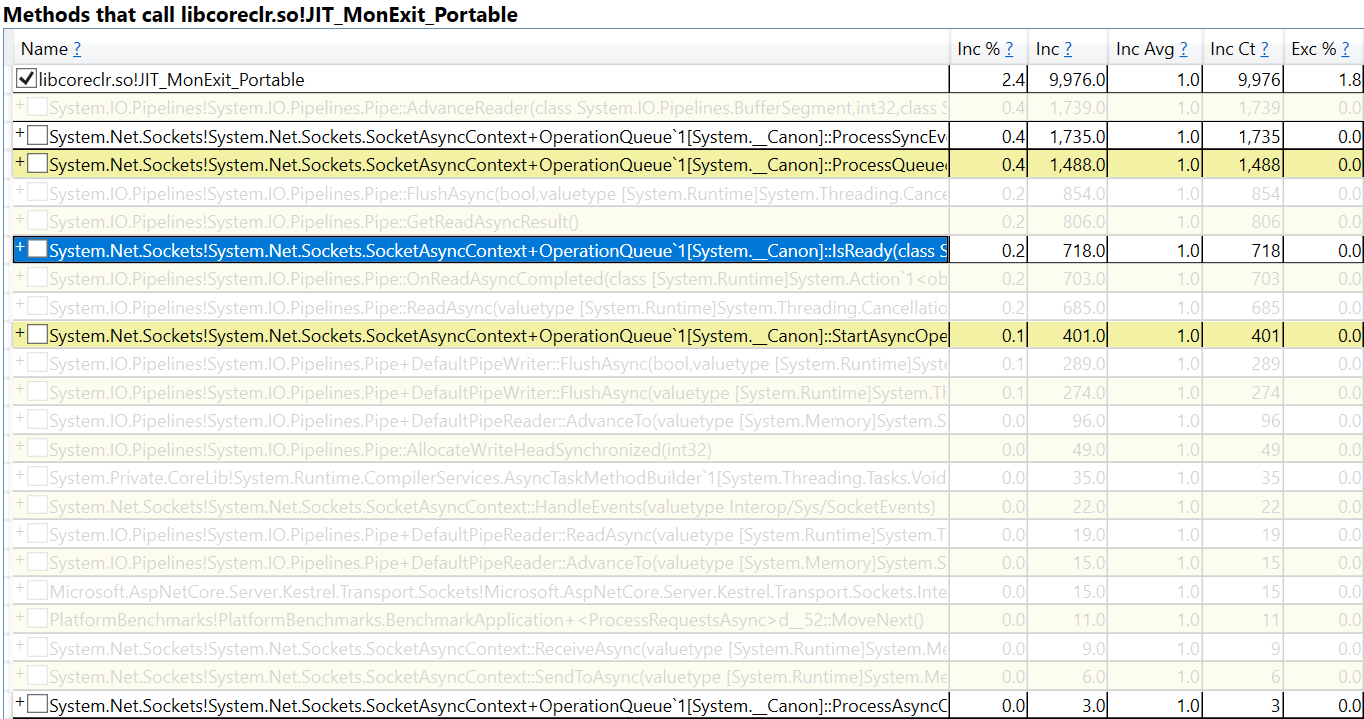

@tmds the results I've uploaded jsonplatform trace to |

|

Thanks for benchmarking Adam. For Citrine JSON platform, the improvements seems to be round the expected 3k (0.24%). Notable better improvement on ARM 32-core: 3.28%. About the measured regressions, it doesn't make sense why a lock (2 Interlocked) can perform better than two Volatile.Reads to me. I'm in for this change because the complexity is not so high. The tiny improvement applies to every socket operation. |

If you are talking about the |

|

Is this good to merge? |

In Adam's results, there are a bunch of regressions (not just on the mono machines) that are larger than the improvement in json on citrine. That's not a concern? |

From the benchmark results it doesn't look like it's an every scenario wins change. Though I don't understand why two volatile reads wouldn't always be cheaper than two compareexchanges. |

I'm struggling to see that. Can you elaborate? There was a notable increase in one benchmark on ARM, but two of the three shown regressed on AMD, two of the three regressed on Perf 12 cores, and while there was a measurable improvement in Fortunes Platform on citrine, there was an almost commensurate regression in Fortunes Batching on citrine. Is it possible this was just a bad run of the metrics? While I agree in theory removing a lock should be helpful, at the same time lock-freedom generally makes code more complex and harder to reason about and maintain, and so I'd only want to take such a change if we can prove to ourselves it really moves things in the right direction meaningfully. |

|

@stephentoub I'm basing myself mostly on ARM results and time spent in traces inside @adamsitnik what do you think about these regressions? To we have an idea on how much the same benchmark varies across successive runs? Are we confident these are regressions? I'm fine if you want to close this because the gain (0.24% on jsonplatform) is not worth the investigation. Though the gain on ARM seems worthwhile. And it will apply to any socket operation, not just TE 100% load cases. |

I don't; I want it to be successful :) I just want to make sure we're only taking it when we can prove that it actually is. |

| public bool IsReady(SocketAsyncContext context, out int observedSequenceNumber) | ||

| // IsReady returns whether an operation can be executed immediately. | ||

| // observedSequenceNumber must be passed to StartAsyncOperation. | ||

| public bool IsReady(SocketAsyncContext context, out int? observedSequenceNumber) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we keep observedSequenceNumber as a non-nullable int? It's only used when IsReady returns true, so we should be able to just return 0 or whatever when that's not the case. Seems like a non-nullable int would be slightly more efficient here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it's only used when IsReady returns true

When IsReady returns false, the null is used to ensure that StartAsyncOperation will try the operation in case the queue becomes ready in the meanwhile. To not use a nullable int here, the best value to use is _sequenceNumber - 1 (when returning false), because that will fail the sequence number equals check in StartAsyncOperation.

src/libraries/System.Net.Sockets/src/System/Net/Sockets/SocketAsyncContext.Unix.cs

Show resolved

Hide resolved

| { | ||

| observedSequenceNumber = _sequenceNumber; | ||

| bool isReady = (_state == QueueState.Ready) || (_state == QueueState.Stopped); | ||

| observedSequenceNumber--; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is there an enforced cap on the sequence number somewhere? I'm wondering what happens if, for example, this wraps around such that observedSequenceNumber is int.MinValue and observedSequenceNumber - 1 is int.MaxValue.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There is no cap on sequence number. The observed sequence number gets checked for equality in StartAsyncOperation. It is a wrapping counter for the nr of times the queue becomes ready. Decrementing it is the same as pretending we have 'observed' the previous time the queue became ready, so StartAsyncOperation considers IsReady state as a new thing.

I think there is nothing special at int.{Min,Max}Value because we're doing ++, -- and != operations only.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok, I wasn't sure if it was ever compared with <. If it's only ever compared with == seems fine. Out of curiosity, what would happen if 4B operations occurred between the time we read the value and compare it such that it fully wrapped and was again the same? I assume if that's a (super rare) problem, it's already a problem.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Out of curiosity, what would happen if 4B operations occurred between the time we read the value and compare it such that it fully wrapped and was again the same?

We'd not be aware events happened, and the socket operation will never complete. Since there is a limited nr of cases that cause increments, we don't need to worry about reaching 4B increments.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Again, not actually concerned, but trying to understand... re "Since there is a limited nr of cases that cause increments, we don't need to worry about reaching 4B increments", what would prevent one thread from stalling in between the get and the check, and other threads processing 4B operations in between?

triggered by #36214

@adamsitnik @stephentoub ptal