Artificial Intelligence: Latest News and Knowledge Hub

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. The term may also be applied to any machine that exhibits traits associated with a human mind such as learning and problem-solving. In the modern era, AI has evolved from simple rule-based systems to complex neural networks capable of generative creativity and autonomous reasoning.

What Is Artificial Intelligence?

At its core, Artificial Intelligence is a broad branch of computer science concerned with building smart machines capable of performing tasks that typically require human intelligence. These tasks include perception (interpreting sensory data), reasoning (logical deduction), learning (improving from experience), and decision-making.

Key Definitions and Hierarchy

To understand the landscape, it is essential to distinguish between the nested layers of AI technologies. Each layer builds upon the previous, creating an increasingly sophisticated capability stack that has evolved over decades of research and development.

Artificial Intelligence (AI)

Artificial Intelligence represents the overarching discipline covering all intelligent systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.

The term encompasses everything from simple rule-based systems to the most advanced neural networks. AI draws upon multiple disciplines including computer science, data analytics, statistics, hardware and software engineering, linguistics, neuroscience, and even philosophy and psychology.

The field’s breadth means that “AI” serves as an umbrella term for many distinct technologies and approaches, unified by the goal of creating systems that can perform tasks typically requiring human intelligence.

Machine Learning (ML)

Machine Learning (ML) is a subset of AI where computers learn from data without being explicitly programmed for specific rules. Instead of writing code for every decision, engineers feed data into algorithms that identify patterns. This approach fundamentally changed AI by allowing systems to improve through experience rather than requiring humans to anticipate every possible scenario.

Machine learning comes in several varieties: supervised learning requires labeled training data with expected answers; unsupervised learning analyzes unlabeled data to find hidden patterns; and reinforcement learning involves agents learning through rewards and penalties. Common algorithms include linear regression, k-nearest neighbors, naive Bayes classifiers, decision trees, and support vector machines. The power of ML lies in its ability to handle problems too complex for hand-coded rules, from spam filtering to medical diagnosis.

Deep Learning (DL)

Deep Learning (DL) is a specialized subset of ML inspired by the structure of the human brain. It uses multi-layered artificial neural networks to model complex patterns in massive datasets, powering breakthroughs in image recognition and natural language processing (NLP).

The “deep” in deep learning refers to the multiple hidden layers between input and output, with each layer progressively extracting higher-level features. For example, in image processing, lower layers may identify edges while higher layers identify concepts like faces or objects.
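As a rough illustration of what stacked layers mean in practice, the sketch below passes an input through two hidden layers and an output layer. The weights, layer sizes, and the helper names `dense` and `forward` are invented for this example; real networks learn their weights from data and are far larger.

```python
def dense(inputs, weights, biases):
    """One fully connected layer followed by a ReLU activation."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(max(0.0, z))  # ReLU: negative sums become 0
    return outputs


def forward(x, layers):
    """Run x through every layer, keeping each intermediate activation."""
    activations = [x]
    for weights, biases in layers:
        activations.append(dense(activations[-1], weights, biases))
    return activations


# Hypothetical hand-picked weights: two hidden layers, then one output unit.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 1
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.0]),   # hidden layer 2
    ([[0.5, 0.5]], [0.0]),                     # output layer
]

activations = forward([2.0, 1.0], layers)
print(activations)
```

Each entry of `activations` is the representation produced by one layer, which is the sense in which later layers build on features extracted by earlier ones.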

Deep learning’s sudden success in 2012-2015 was not due to theoretical breakthroughs but rather the availability of GPUs for parallel processing and the explosion of available training data. This architecture powers modern AI systems from autonomous vehicles to voice assistants, and its ability to learn representations directly from raw data eliminated much of the manual feature engineering that previous approaches required.

Generative AI

Generative AI represents a recent evolution of Deep Learning that focuses on creating new content, such as text, images, code, and video, rather than just analyzing existing data. Unlike discriminative models that classify or predict based on input, generative models learn the underlying patterns and structures within vast amounts of training data and use that knowledge to produce entirely new, original content based on prompts. This capability emerged primarily through advances in transformer architectures and large language models (LLMs). Tools like DALL-E for images, ChatGPT’s underlying LLMs for text, and Sora for video exemplify how generative AI has moved from research curiosity to mainstream application. The technology raises novel questions about creativity, authorship, and intellectual property while simultaneously democratizing content creation and enabling new forms of human-AI collaboration.

Narrow vs. General AI

The industry categorizes AI into three primary types based on capability, each representing a fundamentally different level of machine intelligence. Understanding these distinctions is crucial for assessing both the current state of AI and the ambitious goals driving major research organizations.

Artificial Narrow Intelligence (ANI)

Artificial Narrow Intelligence represents the current state of all deployed AI systems. These systems excel at specific tasks, such as playing chess, recommending products, or driving a car, but lack consciousness and cannot perform tasks outside their defined domain.

A world-champion-defeating chess engine cannot play tic-tac-toe unless separately programmed. Despite the “narrow” label, ANI systems can achieve superhuman performance within their domains. Modern examples include image recognition systems that outperform radiologists at detecting certain cancers, language models that can translate between hundreds of languages, and game-playing AI that has mastered Go, StarCraft, and poker.

The commercial AI industry is entirely built on ANI, generating hundreds of billions of dollars in value through targeted advertising, recommendation engines, autonomous systems, and enterprise automation.

Artificial General Intelligence (AGI)

Artificial General Intelligence represents a theoretical future state where an AI system possesses the ability to understand, learn, and apply knowledge across a wide variety of tasks at a level equal to or exceeding human capability. Unlike narrow AI, an AGI system would demonstrate flexible intelligence, transferring knowledge between domains, reasoning about novel situations, and adapting to challenges it was never explicitly trained for.

Companies like OpenAI and Google DeepMind have explicitly stated their mission is to achieve AGI, investing billions of dollars in pursuit of this goal. The path to AGI remains deeply uncertain, with researchers debating whether current approaches like scaling large language models will eventually yield AGI or whether fundamental breakthroughs in architecture and training are required. Timeline estimates range from “within this decade” to “possibly never achievable.”

Artificial Superintelligence (ASI)

Artificial Superintelligence describes a hypothetical level beyond AGI where AI surpasses the cognitive performance of humans in virtually all domains, including scientific creativity, general wisdom, and social skills. The concept, popularized by philosopher Nick Bostrom and others, raises profound questions about humanity’s future.

Proponents of the “intelligence explosion” hypothesis argue that once AGI is achieved, a superintelligent system could emerge rapidly through recursive self-improvement. This scenario motivates significant investment in AI safety research, with organizations like the Machine Intelligence Research Institute and the Center for AI Safety working to ensure future superintelligent systems remain aligned with human values.

Critics contend that ASI discussions distract from more immediate AI harms and that the concept may be fundamentally incoherent. Regardless, the possibility of ASI shapes policy discussions, corporate strategies, and research priorities throughout the AI field.

Types by Functionality

Beyond capability levels, AI systems can also be classified by how they process and retain information. This taxonomy, proposed by AI researcher Arend Hintze, provides a framework for understanding the progression from simple reactive systems to hypothetical self-aware machines.

Reactive Machines

Reactive machines represent the simplest form of AI, responding to current inputs without memory of past interactions. These systems cannot learn or adapt; they simply apply fixed rules or patterns to immediate stimuli. IBM’s Deep Blue, which defeated chess champion Garry Kasparov in 1997, serves as a classic example.

Deep Blue evaluated millions of positions per second using sophisticated heuristics but retained no memory between games and could not learn from experience. Each game started from scratch, with the system applying the same evaluation functions regardless of previous outcomes.

While limited, reactive machines excel in domains where optimal responses can be computed from current state alone. Modern spam filters and simple recommendation systems often operate as reactive machines, matching patterns without building user models.

Limited Memory

Limited memory systems can look into the past to inform current decisions, storing observations temporarily to make better predictions. Self-driving cars exemplify this category, using limited memory to observe other vehicles’ speed and trajectory over time, enabling them to navigate traffic safely by predicting where other cars will be moments in the future.

Most contemporary AI applications fall into this category, including chatbots that maintain conversation context, recommendation engines that track recent user behavior, and predictive maintenance systems that monitor equipment trends.

The “limited” aspect refers to the constrained time horizon and selective nature of what gets remembered. These systems do not build comprehensive world models but rather maintain task-relevant short-term memories that improve immediate decision-making.
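The contrast between a reactive rule and a limited-memory system can be sketched in a few lines. This is a toy illustration, not how any production driving stack works: the class name `LimitedMemoryTracker`, the `reactive_brake` rule, the three-observation horizon, and the constant-velocity assumption are all hypothetical.

```python
from collections import deque


def reactive_brake(gap_m, threshold_m=10.0):
    """Reactive rule: decides from the current reading only, with no stored state."""
    return gap_m < threshold_m


class LimitedMemoryTracker:
    """Keeps only the most recent observations of another vehicle."""

    def __init__(self, horizon=3):
        # deque(maxlen=...) silently drops the oldest entry when full
        self.observations = deque(maxlen=horizon)

    def observe(self, t, position):
        self.observations.append((t, position))

    def predict(self, t_future):
        """Extrapolate position assuming constant velocity over the window."""
        if len(self.observations) < 2:
            return None  # not enough history to estimate a velocity
        (t0, p0), (t1, p1) = self.observations[0], self.observations[-1]
        velocity = (p1 - p0) / (t1 - t0)
        return p1 + velocity * (t_future - t1)


tracker = LimitedMemoryTracker(horizon=3)
for t, position in [(0, 0.0), (1, 10.0), (2, 20.0), (3, 30.0)]:
    tracker.observe(t, position)

print(len(tracker.observations))  # only the last 3 observations are kept
print(tracker.predict(5))
```

The bounded `deque` captures the "limited" part: older observations are discarded automatically, so predictions depend only on a short, task-relevant window.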

Theory of Mind

Theory of mind represents a theoretical category where AI would understand emotions, beliefs, and intentions of other agents. Named after the cognitive science concept describing how humans model others’ mental states, such AI would recognize that different agents have different knowledge, desires, and plans.

This would enable more natural human-AI interaction, as the system could infer user intent, anticipate misunderstandings, and adapt communication style. Current AI systems lack genuine theory of mind, though some exhibit superficial behaviors that mimic it.

Large language models can discuss mental states and simulate perspective-taking in conversation, but whether they truly model other minds or merely pattern-match remains contested. Achieving robust theory of mind would transform applications from customer service to education to healthcare, where understanding human psychology is essential.

Self-Aware AI

Self-aware AI represents the most advanced theoretical type, where AI would possess consciousness and self-awareness. Such a system would not merely process information but would have subjective experience, understanding its own existence as a distinct entity with internal states, goals, and a sense of self. This category remains firmly in the realm of science fiction and philosophy, raising profound questions that science cannot yet answer.

What would it mean for an AI to be conscious? How would we recognize machine consciousness if it emerged? Would self-aware AI have moral status and rights? These questions intersect with some of philosophy’s deepest puzzles about the nature of mind and consciousness.

While some researchers believe consciousness could emerge from sufficiently complex information processing, others argue that subjective experience requires biological substrates or fundamentally different architectures than current AI approaches.

Philosophical Foundations

The question of whether machines can truly “think” has been debated since AI’s inception. These philosophical frameworks continue to shape how researchers, policymakers, and the public understand artificial intelligence and its implications.

The Turing Test

Proposed by Alan Turing in his seminal 1950 paper “Computing Machinery and Intelligence,” the Turing Test evaluates a machine’s ability to exhibit intelligent behavior indistinguishable from a human. In the test, a human evaluator engages in natural language conversations with both a human and a machine, without knowing which is which.

If the evaluator cannot reliably distinguish between machine and human responses, the machine is said to have passed the test. Turing’s insight was to sidestep the philosophically fraught question of whether machines can “think” by proposing an operational test based on observable behavior.

The test has been criticized for focusing on deception rather than intelligence, and for being susceptible to tricks that exploit human psychology rather than demonstrate genuine understanding. Nevertheless, it remains a cultural touchstone in AI discourse. Modern large language models have arguably passed informal versions of the test, prompting renewed debate about whether the test measures what Turing intended.

The Chinese Room

Philosopher John Searle’s 1980 thought experiment argues that a computer executing a program can process symbols (syntax) without understanding their meaning (semantics). Searle imagines a person locked in a room, receiving Chinese characters through a slot and using a comprehensive rulebook to produce appropriate Chinese responses.

To outside observers, the room appears to understand Chinese, yet the person inside comprehends nothing, merely manipulating symbols according to rules. The Chinese Room challenges the notion that AI can ever truly “understand” rather than merely simulate understanding.

It draws a distinction between strong AI (machines that genuinely think) and weak AI (machines that merely behave as if they think). Critics have proposed numerous responses: the Systems Reply argues that understanding emerges from the system as a whole; the Robot Reply suggests embodiment might provide grounding; others question whether the thought experiment’s premises are coherent. The debate remains unresolved and has gained renewed relevance as large language models demonstrate increasingly sophisticated linguistic behavior.

The Singularity

The technological singularity describes a hypothetical future point where AI triggers runaway technological growth, resulting in unfathomable changes to civilization. The concept, developed by mathematician Vernor Vinge and popularized by futurist Ray Kurzweil, envisions a moment when artificial intelligence becomes capable of recursive self-improvement, rapidly exceeding human intelligence and transforming the world in ways we cannot predict.

Kurzweil predicts this could occur around 2045, based on extrapolations of exponential technological progress. The singularity concept influences both AI optimists, who see it as a path to solving humanity’s greatest challenges, and pessimists, who worry about existential risk from superintelligent systems.

Critics argue that the singularity relies on questionable assumptions about intelligence, technological progress, and the feasibility of recursive self-improvement. Nevertheless, the concept shapes public discourse about AI’s long-term trajectory and motivates significant investment in AI safety research aimed at ensuring beneficial outcomes.

The AI Effect

A curious phenomenon known as the “AI effect” describes how technologies tend to lose their “AI” label once they become commonplace and well understood. When a capability once considered the hallmark of intelligence becomes routine, the public and even researchers tend to discount it as “not really AI.”

Consider: in the 1960s, a program that could play checkers or translate simple sentences was hailed as artificial intelligence. Today, spell checkers, search engines, and recommendation algorithms are rarely thought of as AI despite performing tasks that once seemed to require human cognition. This moving goalpost effect means that “AI” often refers to whatever intelligent behavior machines cannot yet achieve, creating a perpetually receding horizon.

The AI effect reveals something important about human psychology: we tend to define intelligence by what remains mysterious. Once we understand how a system works, it feels mechanical rather than intelligent. This has practical implications for AI researchers, who must continually push boundaries to maintain relevance, and for businesses, which may undersell the AI capabilities already embedded in their products.

History and Evolution

The journey of AI has been characterized by cycles of immense optimism followed by periods of stagnation known as “AI Winters.”

Ancient Precursors and Philosophical Roots

The dream of creating artificial beings predates modern computing by millennia. Greek myths described Hephaestus crafting intelligent automata like Talos, the bronze giant who guarded Crete, and Pygmalion’s statue Galatea coming to life. These stories reflect humanity’s enduring fascination with creating intelligence from inanimate matter.

Formal reasoning, the foundation of symbolic AI, has ancient roots. Aristotle’s syllogism, developed in the 4th century BC, described mechanical rules for logical deduction. In the 13th century, Ramon Llull created the Ars Magna, a mechanical device for combining concepts to generate new knowledge, an early precursor to computational reasoning. Gottfried Wilhelm Leibniz extended this vision in the 17th century, proposing a “calculus of reasoning” that could mechanically resolve all disputes.

Foundations and Symbolic AI (1950s-1980s)

The field was formally founded in 1956 at a workshop at Dartmouth College, attended by pioneers like John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. Early research focused on “Symbolic AI,” also known as Good Old-Fashioned AI (GOFAI), which used logic and rules to solve problems.

Symbolic AI treated intelligence as symbol manipulation. Programs like the General Problem Solver (1959) could prove mathematical theorems, while SHRDLU (1970) could reason about blocks in a virtual world. Expert systems emerged in the 1970s and 1980s, encoding human expertise into rule-based systems for medical diagnosis (MYCIN), mineral exploration (PROSPECTOR), and computer configuration (XCON/R1).

While successful in controlled environments, these systems struggled with the ambiguity and vastness of the real world. They required hand-crafted rules and couldn’t learn from data. The difficulty of encoding all necessary knowledge, the “commonsense knowledge problem,” became a fundamental barrier. This led to funding cuts and the first “AI Winter” in the mid-1970s, followed by another in the late 1980s when expert systems failed to deliver on commercial promises.

Key Historical Milestones

| Year | Milestone | Significance |
|---|---|---|
| 1950 | Alan Turing publishes “Computing Machinery and Intelligence” | Poses the question “Can machines think?” and proposes the Turing Test |
| 1956 | Dartmouth Workshop | The term “Artificial Intelligence” is coined; field formally established |
| 1966 | ELIZA chatbot created | Early chatbot that mimics a psychotherapist through simple pattern matching, leading some users to attribute understanding to it |
| 1966 | Shakey the Robot | First mobile robot to reason about its actions |
| 1986 | Backpropagation popularized | Enables efficient training of multi-layer neural networks |
| 1997 | IBM Deep Blue defeats Garry Kasparov | First computer to beat a reigning world chess champion |
| 2011 | IBM Watson wins Jeopardy! | Demonstrates natural language understanding at scale |
| 2016 | DeepMind AlphaGo defeats Lee Sedol | Masters the ancient game of Go, previously thought too complex for AI |
| 2017 | “Attention Is All You Need” paper published | Introduces the Transformer architecture, revolutionizing NLP |
| 2022 | ChatGPT launches | Brings generative AI to the mainstream, sparking the “AI boom” |

The Deep Learning Revolution (2010s)

The modern AI boom began around 2012, driven by three converging factors: the availability of massive datasets (Big Data), the repurposing of GPUs for parallel processing, and improved training algorithms built on backpropagation. This era saw deep networks win the 2012 ImageNet competition by a wide margin, later match or exceed reported human accuracy on that benchmark, and defeat a top professional at the complex game of Go (AlphaGo, 2016).

The rise of connectionism, neural networks that learn from data rather than following explicit rules, marked a paradigm shift from symbolic AI. Deep learning proved remarkably effective at tasks that had stymied rule-based systems for decades: recognizing faces, understanding speech, and translating languages.

AI Performance Benchmarks: Games and Beyond

Games have served as crucial benchmarks for measuring AI progress because they provide well-defined rules and measurable outcomes. AI systems have achieved superhuman performance across an expanding range of challenges:

| Game/Task | AI Achievement Year | Significance |
|---|---|---|
| Checkers (Draughts) | 1994 | Chinook became world champion; game weakly solved in 2007 |
| Chess | 1997 | Deep Blue defeats Garry Kasparov |
| Othello | 1997 | Logistello defeats world champion |
| Scrabble | 2006 | AI achieves superhuman performance |
| Jeopardy! | 2011 | IBM Watson defeats human champions |
| Heads-up Limit Poker | 2015 | Statistically optimal play achieved |
| Go | 2016-2017 | AlphaGo defeats Lee Sedol and Ke Jie |
| No-Limit Texas Hold’em | 2017 | Libratus defeats top professionals |
| Dota 2 | 2018 | OpenAI Five defeats professional teams |
| StarCraft II | 2019 | AlphaStar reaches Grandmaster level |
| Gran Turismo Sport | 2022 | GT Sophy achieves superhuman racing |
| Diplomacy | 2022 | Cicero achieves human-level play in negotiation game |

The Agentic Shift (2025)

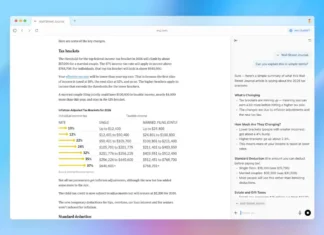

By late 2025, the industry began shifting from “Generative AI” (chatbots that create text) to “Agentic AI” (systems that execute workflows). This transition was marked by the release of Google’s Gemini 3 Pro and OpenAI’s GPT-5 in November 2025. Unlike their predecessors, which acted more as passive assistants, these models feature complex “reasoning engines” capable of planning multi-step tasks, correcting their own errors, and operating autonomously for extended periods.

For instance, GPT-5.1 Codex Max introduced “compaction” technology, allowing it to maintain context over 24-hour coding sessions, effectively turning the AI from a copilot into a virtual employee.

Architecture and Core Components

Modern AI systems are built upon sophisticated mathematical frameworks that allow them to process information similarly to biological neurons. However, AI encompasses far more than neural networks alone.

Machine Learning Paradigms

Machine learning approaches are categorized by how they learn from data. Each paradigm suits different problem types and data availability scenarios, and understanding these distinctions is essential for selecting appropriate solutions.

Supervised Learning

Supervised learning represents the most common form of machine learning, where models are trained on labeled data with known correct outputs. For example, showing a computer millions of images labeled “cat” or “dog” teaches it to classify new images.

The “supervision” comes from these labels guiding the learning process. Common algorithms include linear regression for continuous outputs, logistic regression for classification, polynomial regression for non-linear relationships, k-nearest neighbors for instance-based learning, naive Bayes for probabilistic classification, decision trees for interpretable rules, and support vector machines for finding optimal decision boundaries.

Supervised learning powers applications from email spam detection to medical diagnosis to credit scoring, anywhere historical data with known outcomes exists.
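To make the idea of learning from labeled examples concrete, here is a minimal k-nearest neighbors classifier, one of the algorithms listed above. The two-dimensional feature vectors and the `knn_predict` helper are invented for illustration; real image classifiers work on far richer features.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label a query point by majority vote among its k closest training points."""
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical labeled data: (feature vector, label)
train = [
    ((1.0, 1.0), "cat"), ((1.2, 0.8), "cat"), ((0.9, 1.1), "cat"),
    ((4.0, 4.2), "dog"), ((4.1, 3.9), "dog"), ((3.8, 4.0), "dog"),
]

print(knn_predict(train, (1.1, 1.0)))
print(knn_predict(train, (4.0, 4.0)))
```

The labels in `train` are the "supervision": the classifier never receives a rule for telling the classes apart, only examples with known answers.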

Unsupervised Learning

Unsupervised learning analyzes unlabeled data to find hidden structures or patterns without predetermined categories. The algorithm must discover organization in the data on its own, making it valuable for exploratory analysis when you don’t know what you’re looking for.

Common techniques include k-means clustering, which groups similar data points together; hierarchical clustering, which builds trees of nested groups; fuzzy c-means, which allows partial membership in multiple clusters; and principal component analysis (PCA), which reduces dimensionality while preserving variance.

Applications include customer segmentation for marketing, anomaly detection for fraud or intrusion detection, topic modeling for document analysis, and recommendation systems that identify users with similar preferences.
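The following sketch shows k-means clustering, the first technique mentioned above, in plain Python. The sample points and the choice of initial centroids are assumptions made to keep the example small and deterministic; practical implementations usually pick starting centroids at random and run several restarts.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroid  # keep an empty cluster's centroid in place
            for cluster, centroid in zip(clusters, centroids)
        ]
    # clusters holds the assignments from the last assignment pass
    return centroids, clusters


points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0),
          (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]

# Hypothetical initialization: one seed point from each visible group
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(centroids)
print(clusters)
```

No labels appear anywhere in the input; the two groups emerge only from distances between points.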

Semi-supervised Learning

Semi-supervised learning, sometimes classed as a form of “weak supervision,” combines a small amount of labeled data with a large amount of unlabeled data, proving particularly useful when labeling is expensive or time-consuming. For instance, medical imaging might have millions of scans but only thousands with expert annotations.

The approach leverages the structure of unlabeled data to improve learning from limited labels. Techniques include self-training (using model predictions as pseudo-labels), co-training (using multiple views of data), and graph-based methods (propagating labels through similarity networks). Semi-supervised learning has become increasingly important as organizations accumulate vast unlabeled datasets while struggling to annotate them comprehensively.
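Self-training, the first technique named above, can be sketched with a deliberately simple model. Everything here is an illustrative assumption: the one-dimensional data, the nearest-centroid classifier, the `min_margin` confidence threshold, and the function names. The sketch also assumes at least two classes are present in the labeled data.

```python
def fit_centroids(labeled):
    """Nearest-centroid 'model': the mean feature value of each class."""
    sums = {}
    for x, y in labeled:
        total, count = sums.get(y, (0.0, 0))
        sums[y] = (total + x, count + 1)
    return {y: total / count for y, (total, count) in sums.items()}


def predict(centroids, x):
    """Return the closest class and a confidence margin over the runner-up."""
    ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
    best, runner_up = ranked[0], ranked[1]
    margin = abs(x - centroids[runner_up]) - abs(x - centroids[best])
    return best, margin


def self_train(labeled, unlabeled, min_margin=2.0, rounds=5):
    """Repeatedly add confident predictions to the labeled set as pseudo-labels."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        centroids = fit_centroids(labeled)
        scored = [(x, *predict(centroids, x)) for x in pool]
        accepted = [(x, y) for x, y, margin in scored if margin >= min_margin]
        if not accepted:
            break  # nothing confident enough is left to pseudo-label
        labeled.extend(accepted)
        accepted_x = {x for x, _ in accepted}
        pool = [x for x in pool if x not in accepted_x]
    return fit_centroids(labeled), pool


labeled = [(1.0, "low"), (9.0, "high")]
unlabeled = [0.5, 2.0, 8.0, 10.0, 5.2]
model, still_unlabeled = self_train(labeled, unlabeled)
print(model)
print(still_unlabeled)
```

The ambiguous point near the midpoint is never pseudo-labeled, which reflects the usual design choice in self-training: only confident predictions are trusted as new labels.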

Self-supervised Learning

Self-supervised learning enables models to learn from the structure of the data itself, predicting parts of the input from other parts without external labels. This approach powers modern large language models (LLMs), which learn by predicting masked words or next tokens in vast text corpora.

The “labels” come from the data itself: predicting a hidden word from surrounding context, reconstructing corrupted images, or forecasting future frames in video. Self-supervised learning has proven remarkably effective at learning general representations that transfer to downstream tasks.

For example, Google’s Bidirectional Encoder Representations from Transformers (BERT) model learns bidirectional language representations by predicting masked words; GPT learns by predicting next tokens; contrastive learning methods like SimCLR learn visual representations by comparing augmented views of the same image.
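The sketch below shows how training examples can be derived from raw text with no human labeling, in the spirit of the two objectives just described. The helper names and the whitespace tokenizer are assumptions; real models use subword tokenizers, and masked-language models mask only a random subset of positions rather than every position in turn.

```python
def next_token_pairs(tokens):
    """GPT-style objective: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_examples(tokens, mask="[MASK]"):
    """BERT-style objective: hide one token and predict it from both sides.
    For illustration every position is masked once."""
    examples = []
    for i, target in enumerate(tokens):
        corrupted = tokens[:i] + [mask] + tokens[i + 1:]
        examples.append((corrupted, target))
    return examples


tokens = "the cat sat".split()

for context, target in next_token_pairs(tokens):
    print(context, "->", target)

for corrupted, target in masked_examples(tokens):
    print(corrupted, "->", target)
```

In both cases the target comes from the text itself, which is why such corpora can be used at very large scale without annotation.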

Reinforcement Learning (RL)

Reinforcement Learning trains agents to make sequential decisions by performing actions and receiving rewards or penalties. Unlike supervised learning with immediate feedback on each prediction, RL agents must discover which actions lead to long-term reward through exploration and exploitation. The agent learns a policy mapping states to actions that maximizes cumulative reward.

RL was crucial for training AlphaGo to defeat world Go champions, teaching robots to walk and manipulate objects, and developing the reasoning capabilities of GPT-5 through reinforcement learning from human feedback (RLHF). Challenges include the credit assignment problem (determining which actions led to rewards), exploration-exploitation tradeoffs, and sample efficiency (RL often requires millions of interactions to learn).
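A minimal sketch of the reward-driven loop described above is tabular Q-learning on a toy corridor. The environment (five cells with a reward only at the right end), the hyperparameters, and all names are assumptions chosen for illustration; systems like AlphaGo use far more elaborate methods.

```python
import random

N_STATES = 5        # cells 0..4; reaching cell 4 ends the episode with reward 1
ACTIONS = (-1, 1)   # move left or move right


def step(state, action):
    """Apply an action; walls clamp the agent inside the corridor."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))           # explore
            else:
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])  # exploit, random tie-break
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward plus discounted best future value
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q_table[s][i])]
          for s in range(N_STATES - 1)]
print(policy)
```

The reward arrives only at the final step, so earlier states learn their value indirectly through the discounted `target`, which is a small-scale view of the credit assignment problem mentioned above.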

Transfer Learning

Transfer learning applies knowledge gained from one task to a different but related task, dramatically reducing training time and data requirements. Instead of training from scratch, practitioners start with a model pre-trained on a large dataset and fine-tune it for their specific application.

This approach revolutionized computer vision (ImageNet pre-training) and NLP (BERT, GPT pre-training), making state-of-the-art results accessible to organizations without massive computational resources. Transfer learning works because low-level features (edge detectors in vision, grammatical patterns in language) are broadly useful across tasks.

The pre-training/fine-tuning paradigm has become the dominant approach in modern AI, with foundation models serving as general-purpose starting points for diverse applications.

Neural Network Architectures

Different network architectures are optimized for different types of data. The choice of architecture profoundly affects what patterns a model can learn and how efficiently it processes information.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks are specialized for processing grid-like data such as images. CNNs use convolutional layers that slide small filters across the input, detecting features like edges, textures, and shapes at various positions. Because the same filter weights are reused at every location, CNNs are robust to shifts in position: a learned edge detector works regardless of where the edge appears.

The architecture typically alternates convolutional layers (feature detection) with pooling layers (dimensionality reduction), building hierarchical representations where lower layers detect simple features and higher layers combine them into complex concepts. CNNs power applications from facial recognition and autonomous vehicle perception to medical imaging analysis and satellite imagery interpretation.
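The convolution and pooling steps can be sketched directly. This is a simplified illustration with a hand-written vertical-edge filter and a tiny binary image, both invented here; in a trained CNN the filter values are learned, and libraries implement these operations far more efficiently.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    sum the element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j]
             for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [max(feature_map[r + i][c + j]
             for i in range(size) for j in range(size))
         for c in range(0, len(feature_map[0]) - size + 1, size)]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


edge_filter = [[-1, 1],
               [-1, 1]]  # responds where brightness increases left to right

image = [[0, 0, 1, 1, 1] for _ in range(5)]     # dark-to-bright edge after column 1
shifted = [[0, 0, 0, 1, 1] for _ in range(5)]   # same edge, one column to the right

features = conv2d(image, edge_filter)
print(features)
print(max_pool(features))
print(max_pool(conv2d(shifted, edge_filter)))
```

The same filter responds to the edge in both images, only at a different location in the output, which is the position robustness that weight sharing provides.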

The 2012 ImageNet breakthrough, when AlexNet dramatically outperformed traditional methods, launched the deep learning revolution and established CNNs as the dominant approach for visual tasks.

Recurrent Neural Networks (RNNs) and LSTMs

Recurrent Neural Networks are designed for sequential data where context matters, maintaining a “memory” of previous inputs through recurrent connections. Unlike feedforward networks that process each input independently, RNNs pass information from one step to the next, making them suitable for speech recognition, language modeling, time-series prediction, and any task where sequence order is meaningful.

However, standard RNNs suffer from the “vanishing gradient” problem: gradients shrink exponentially over long sequences, preventing learning of long-range dependencies.

Long Short-Term Memory (LSTM) networks solve this through gating mechanisms that control information flow, enabling learning over hundreds of time steps. Gated Recurrent Units (GRUs) offer a simplified alternative with similar capabilities. While largely superseded by Transformers for NLP, RNNs remain relevant for real-time streaming applications and resource-constrained environments.
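The vanishing-gradient effect described above can be observed numerically in a one-unit recurrent network. This is a simplified sketch: the scalar recurrence `h_t = tanh(w * h_{t-1} + x_t)`, the weight `w = 0.5`, and the constant inputs are assumptions chosen so the arithmetic is easy to follow.

```python
import math


def gradient_through_time(w, inputs):
    """Track |d h_t / d h_0| for h_t = tanh(w * h_{t-1} + x_t).
    By the chain rule each step multiplies the gradient by w * (1 - h_t**2)."""
    h = 0.0
    grad = 1.0
    magnitudes = []
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)
        magnitudes.append(abs(grad))
    return magnitudes


magnitudes = gradient_through_time(w=0.5, inputs=[0.1] * 50)
print(magnitudes[0], magnitudes[9], magnitudes[-1])
```

Every factor in the product is at most 0.5 in magnitude here, so after 50 steps the influence of the initial state is below `0.5 ** 50`. Gated architectures are designed to keep a path through time where this product does not collapse.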

Transformers

The Transformer architecture, introduced in the 2017 paper “Attention Is All You Need,” revolutionized AI by enabling massive parallelization and superior performance on language tasks. Unlike RNNs that process sequences step by step, Transformers process all elements simultaneously using an “attention mechanism” that weighs the relevance of each input element to every other element.

This self-attention allows the model to capture relationships between distant words without information passing through intermediate steps. The architecture consists of stacked encoder and/or decoder blocks, each containing multi-head attention and feedforward layers.
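Scaled dot-product self-attention, the core operation described above, can be written out in plain Python. As a simplifying assumption, the token vectors below serve directly as queries, keys, and values; real Transformers first apply learned projection matrices and run several attention heads in parallel.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Each output is a weighted average of all value vectors, with weights
    given by softmax(q . k / sqrt(d_k)) over every key."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values))
                        for j in range(len(values[0]))])
    return outputs, weights


# Hypothetical 2-dimensional embeddings for a three-token sequence
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Every token attends to every other token in a single step, with no dependence on how far apart they are in the sequence, and each row of `weights` can be computed independently of the others.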

Generative pre-trained transformer (GPT) models use decoder-only Transformers for autoregressive text generation; BERT uses encoder-only Transformers for bidirectional understanding; models like T5 use full encoder-decoder Transformers. The Transformer’s parallelizability enabled scaling to hundreds of billions of parameters, and its flexibility has extended beyond NLP to vision (Vision Transformer), audio (Whisper), and multimodal applications (GPT-4V, Gemini).

Classical AI Techniques

While deep learning dominates headlines, classical AI techniques remain essential for many applications and continue to influence modern systems.

Knowledge Representation and Reasoning

Knowledge representation involves encoding information about the world so AI systems can reason about it. These techniques bridge the gap between raw data and meaningful inference, enabling systems to answer questions, make decisions, and explain their reasoning.

Ontologies

Ontologies provide formal specifications of concepts and their relationships within a domain. They define what entities exist, their properties, and the logical constraints governing them.

The Semantic Web uses ontologies expressed in languages like OWL (Web Ontology Language) to enable machine-readable data across the internet. In healthcare, SNOMED CT provides a comprehensive ontology of medical terms; in e-commerce, product taxonomies organize items into browsable categories. Ontologies enable AI systems to understand that a “laptop” is a type of “computer,” which is a type of “electronic device,” supporting inference and interoperability across systems.

Building ontologies requires careful domain analysis and expert input, making them expensive to create but valuable for applications requiring precise, structured knowledge.

Knowledge Graphs

Knowledge graphs represent networks of entities (nodes) and relationships (edges) that capture real-world knowledge. Google’s Knowledge Graph, launched in 2012, powers enhanced search results by connecting billions of facts about people, places, and things.

Wikidata provides a free, community-maintained knowledge base with over 100 million items. Enterprise knowledge graphs help organizations integrate information across siloed databases, enabling sophisticated question-answering and analytics.

Knowledge graphs support multi-hop reasoning: given “Who founded the company that makes the iPhone?” the system traverses iPhone → made by → Apple → founded by → Steve Jobs.
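A multi-hop query of this kind can be sketched as a chain of lookups over subject-relation-object triples. The snippet below is a toy illustration, not how any production knowledge graph is implemented; the relation names `made_by` and `founded_by` and the helper functions are assumptions chosen for the example.

```python
from collections import defaultdict

# Toy triples: (subject, relation, object).
triples = [
    ("iPhone", "made_by", "Apple"),
    ("Apple", "founded_by", "Steve Jobs"),
    ("Apple", "founded_by", "Steve Wozniak"),
    ("Pixel", "made_by", "Google"),
]

def build_index(triples):
    """Index objects by (subject, relation) for fast edge lookups."""
    index = defaultdict(set)
    for subject, relation, obj in triples:
        index[(subject, relation)].add(obj)
    return index

def follow(index, start, relations):
    """Follow a sequence of relations from a start entity, one hop at a time."""
    frontier = {start}
    for relation in relations:
        frontier = {obj for entity in frontier
                    for obj in index.get((entity, relation), set())}
    return frontier

index = build_index(triples)
founders = follow(index, "iPhone", ["made_by", "founded_by"])
```

Here `founders` holds every entity reachable by the two hops, so a question with several valid answers returns all of them.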

The combination of knowledge graphs with neural networks, known as neuro-symbolic AI, aims to blend the reasoning capabilities of structured knowledge with the pattern recognition of deep learning.

Semantic Networks

Semantic networks are graph structures representing concepts and their semantic relationships, predating modern knowledge graphs by decades. Nodes represent concepts (like “bird” or “flight”), while labeled edges represent relationships (“bird” → “can” → “fly”).

These networks support spreading activation, where activating one concept primes related concepts, mimicking human associative memory.

WordNet, developed at Princeton, organizes English words into synonym sets linked by semantic relations, supporting natural language processing applications. Semantic networks influenced both cognitive science models of human memory and practical AI systems for natural language understanding. While simpler than full ontologies, they capture intuitive relationships that support common-sense reasoning.

The Commonsense Knowledge Problem

A fundamental challenge in AI is encoding the vast implicit knowledge humans take for granted: that water is wet, objects fall when dropped, people have beliefs and desires, and countless other facts we learn through experience. This commonsense knowledge, though obvious to humans, is difficult to enumerate and even harder to apply appropriately in context.

The Cyc project, started in 1984, attempted to hand-code millions of commonsense rules, but progress proved slower than anticipated. ConceptNet provides a crowd-sourced commonsense knowledge base with millions of assertions.

Modern large language models appear to capture some commonsense knowledge implicitly through training on vast text corpora, but they still make striking errors that reveal gaps in understanding. The commonsense knowledge problem remains one of AI’s most persistent challenges, limiting systems’ ability to reason about everyday situations.

Search and Optimization Algorithms

Many AI problems can be framed as searching for optimal solutions in vast possibility spaces. These algorithms provide systematic methods for finding good solutions when exhaustive enumeration is impossible.

State Space Search

State space search explores trees of possible states to find a goal, modeling problems as initial states, actions that transition between states, and goal conditions. Uninformed search methods like breadth-first and depth-first search need no domain knowledge but may be inefficient; breadth-first search is guaranteed to find a solution when one exists, while depth-first search can get lost in infinite or cyclic state spaces.

Informed search algorithms like A* use heuristics to estimate distance to the goal, dramatically reducing the search space by prioritizing promising paths. A* search powers route planning in navigation applications, finding shortest paths through road networks with millions of intersections.

In puzzle solving and game playing, state space search evaluates millions of positions to find winning strategies. The key challenge is designing admissible heuristics that guide search without overestimating costs, ensuring optimal solutions while maintaining efficiency.
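To make the role of the heuristic concrete, here is a minimal sketch of A* on a small grid, assuming four-directional movement with unit step cost. The Manhattan distance used as the heuristic never overestimates the remaining cost under those assumptions, so it is admissible. The grid layout and function name are illustrative.

```python
import heapq

def a_star(grid, start, goal):
    """Return the length of the shortest path on a grid (0 = free, 1 = wall), or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: admissible for 4-directional unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    heapq.heappush(frontier, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None

grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
steps = a_star(grid, (0, 0), (3, 0))  # 7: the only gap in the wall row is at column 2
```

The straight-line estimate from start to goal is 3, but the wall forces a detour, so the true cost is 7. The heuristic only orders the exploration; it does not change the answer.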

Adversarial Search

Adversarial search techniques handle competitive scenarios where opponents try to thwart your goals, as in games where players take alternating turns. The minimax algorithm evaluates game trees by assuming both players play optimally: maximizing one’s own score while expecting the opponent to minimize it.

Alpha-beta pruning eliminates branches that cannot affect the final decision, enabling chess engines to evaluate millions of positions per second. Monte Carlo Tree Search (MCTS), used by AlphaGo, combines tree search with random simulations to estimate position values without exhaustive evaluation.

Adversarial search extends beyond games to security (anticipating attacker strategies), economics (modeling competitor responses), and robotics (planning in the presence of unpredictable agents).

Local Search and Gradient Descent

Local search algorithms iteratively improve solutions by making small changes, navigating the landscape of possible solutions toward better regions.

Hill climbing moves to neighboring states with better evaluations; simulated annealing adds randomness to escape local optima. Gradient descent, the foundation of neural network training, adjusts model parameters in the direction that reduces prediction errors, following the gradient of the loss function.
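As a minimal sketch of the update rule, the snippet below runs gradient descent on the one-dimensional loss f(w) = (w - 3)^2, whose gradient is 2(w - 3). The function, learning rate, and step count are illustrative choices, not values from any particular training setup.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the loss."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w

# Loss f(w) = (w - 3)^2 has gradient 2 * (w - 3) and its minimum at w = 3.
w_star = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
```

Each step shrinks the distance to the minimum by a constant factor here (0.8 with these settings), so `w_star` ends up very close to 3. Neural network training applies the same rule to millions or billions of parameters at once.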

Stochastic gradient descent (SGD) processes random mini-batches of data, enabling efficient training on massive datasets. Advanced optimizers like Adam combine momentum (remembering past gradients) with adaptive learning rates (adjusting step sizes per parameter). The success of deep learning depends critically on gradient descent’s ability to navigate loss landscapes with billions of dimensions, finding good solutions despite the theoretical possibility of getting stuck in poor local minima.

Evolutionary Computation

Evolutionary computation algorithms are inspired by biological evolution, evolving solutions through selection, mutation, and recombination. Genetic algorithms represent solutions as “chromosomes” (often bit strings), evaluate their “fitness,” and create new generations by combining successful individuals. Evolutionary strategies operate on continuous parameters, using mutation and selection to optimize functions where gradients are unavailable.
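The selection, recombination, and mutation loop can be sketched on the classic "OneMax" toy problem, where fitness is simply the number of 1 bits in a chromosome. All parameter values below (population size, mutation rate, seed) are arbitrary assumptions for illustration, and keeping the single best individual unchanged each generation (elitism) is one common design choice among many.

```python
import random

def evolve(bits=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Genetic algorithm on OneMax: maximize the number of 1 bits."""
    rng = random.Random(seed)
    fitness = sum  # fitness of a bit list is its count of ones
    population = [[rng.randint(0, 1) for _ in range(bits)] for _ in range(pop_size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        # Selection: keep the fitter half as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = [parents[0][:]]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, bits)  # single-point crossover
            child = mom[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
        history.append(max(map(fitness, population)))
    return max(population, key=fitness), history

best_individual, best_per_generation = evolve()
```

Because of elitism, the best fitness in `best_per_generation` can never decrease from one generation to the next, which makes progress easy to inspect.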

Genetic programming evolves programs or mathematical expressions, discovering novel solutions humans might not design. Applications include neural architecture search (evolving network designs), robot morphology optimization, and solving combinatorial optimization problems.

The field connects to artificial life research exploring how complex behaviors emerge from simple evolutionary rules. While often slower than gradient-based methods for differentiable problems, evolutionary approaches excel when dealing with discrete choices, multi-objective optimization, or highly irregular fitness landscapes.

Logic-Based Systems

Formal logic provides rigorous foundations for AI reasoning, enabling systems to draw valid conclusions from premises and explain their inferences in human-understandable terms.

Propositional Logic

Propositional logic represents facts as true/false propositions connected by AND, OR, NOT operators. A knowledge base might contain propositions like “It is raining” and “If it is raining, then the street is wet,” from which the system can infer “The street is wet.”
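The inference in this example can be sketched as forward chaining over simple "if premises then conclusion" rules. The rule encoding and the extra `streets_slippery` rule are assumptions added to show a second inference step.

```python
def forward_chain(facts, rules):
    """Apply rules repeatedly until no new propositions can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known

# Each rule is (premises, conclusion). The second rule is hypothetical.
rules = [
    (("raining",), "street_wet"),
    (("street_wet",), "streets_slippery"),
]
derived = forward_chain({"raining"}, rules)
```

Starting from the single fact `raining`, the loop derives `street_wet` and then `streets_slippery`; with no starting facts, nothing is derived.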

While simple and computationally tractable, propositional logic is limited in expressiveness: it cannot easily represent statements about categories of objects or relationships between entities. Satisfiability (SAT) solvers, which determine whether a propositional formula can be made true, have become remarkably efficient, solving problems with millions of variables.

SAT solvers power applications from hardware verification to planning to cryptography. The theoretical significance of propositional logic extends to computational complexity theory, where SAT serves as the canonical NP-complete problem.

Predicate Logic (First-Order Logic)

Predicate logic extends propositional logic with variables, quantifiers (for all, there exists), and relations, enabling more complex reasoning about objects and their properties.

Instead of individual propositions, predicate logic can express “All humans are mortal” and “Socrates is human,” deriving “Socrates is mortal” through logical inference.

First-order logic (FOL) provides sufficient expressiveness for most mathematical and scientific reasoning. Automated theorem provers implement FOL inference, assisting mathematicians in verifying proofs and finding new results.

Prolog, a logic programming language based on FOL, represents knowledge as facts and rules, with the interpreter performing automatic inference. While more powerful than propositional logic, FOL inference is semi-decidable: proofs can be found for valid conclusions, but the search may never terminate for invalid queries.

Fuzzy Logic

Fuzzy logic handles degrees of truth rather than binary true/false, modeling the imprecision inherent in human concepts. A temperature might be “somewhat hot” with truth value 0.7 rather than strictly hot or cold. Fuzzy sets extend classical set membership to continuous values between 0 and 1, enabling smooth transitions between categories.

Fuzzy inference systems apply rules to fuzzy inputs, producing fuzzy outputs that can be “defuzzified” into crisp decisions. Applications include control systems (washing machines adjusting cycles based on load conditions), consumer electronics (autofocus cameras), and decision support systems handling vague or uncertain criteria.

Fuzzy logic bridges the gap between human linguistic descriptions and precise computational requirements, though critics argue that probability theory provides a more principled approach to uncertainty.
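A small sketch can show how membership degrees and defuzzification fit together. It assumes triangular membership functions and a simple weighted-average defuzzifier for a hypothetical fan controller; the temperature ranges and fan speeds are invented for illustration.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0 to 1) in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

def fan_speed(temp_c):
    """Fuzzy rules: cold -> 0% fan, warm -> 50% fan, hot -> 100% fan."""
    memberships = {
        "cold": triangular(temp_c, -5, 10, 25),
        "warm": triangular(temp_c, 10, 22.5, 35),
        "hot": triangular(temp_c, 20, 35, 50),
    }
    rule_outputs = {"cold": 0.0, "warm": 50.0, "hot": 100.0}
    total = sum(memberships.values())
    if total == 0:
        return 0.0  # temperature outside every fuzzy set
    # Defuzzify: average the rule outputs, weighted by how strongly each rule fires.
    return sum(memberships[k] * rule_outputs[k] for k in memberships) / total

hot_degree = triangular(30.5, 20, 35, 50)  # about 0.7, i.e. "somewhat hot"
speed = fan_speed(30.5)
```

At 30.5 degrees the input is partly "warm" and mostly "hot", so the resulting fan speed lands between the two rule outputs instead of jumping abruptly from one to the other.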

Expert Systems

Expert systems encode domain expertise as IF-THEN rules, enabling computer systems to reason like human specialists. A medical diagnosis system might contain rules like “IF patient has fever AND sore throat AND swollen glands THEN consider mononucleosis with certainty 0.7.”

The rule base captures knowledge from domain experts; an inference engine applies rules to specific cases; and an explanation facility shows which rules led to conclusions.

MYCIN, developed in the 1970s for bacterial infection diagnosis, demonstrated that expert systems could match human specialists in narrow domains. While less fashionable than machine learning, expert systems remain valuable in regulated industries (healthcare, finance, law) where decisions must be explainable and auditable.

The knowledge engineering process of extracting and formalizing expert knowledge remains challenging, limiting the domains where expert systems are practical.

Probabilistic Methods

Real-world AI must reason under uncertainty, making decisions based on incomplete information and noisy observations. Probabilistic methods provide principled frameworks for representing and computing with uncertainty.

Bayesian Networks

Bayesian networks are graphical models representing probabilistic relationships between variables as directed acyclic graphs. Nodes represent random variables; edges encode conditional dependencies; and associated probability tables quantify the relationships.

Given evidence about some variables, Bayesian inference computes updated probabilities for others. The foundations were laid by Thomas Bayes in the 18th century with his theorem relating conditional probabilities. Medical diagnosis systems use Bayesian networks to reason about diseases given symptoms, accounting for the base rates of conditions and the reliability of tests.
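The importance of base rates is easiest to see with a single application of Bayes' theorem. The numbers below (1% prevalence, 95% sensitivity, 5% false-positive rate) are hypothetical values chosen for the example, not figures for any real test.

```python
def posterior_given_positive(prior, sensitivity, false_positive_rate):
    """P(disease | positive test) via Bayes' theorem."""
    true_positives = sensitivity * prior
    false_positives = false_positive_rate * (1 - prior)
    return true_positives / (true_positives + false_positives)

# Hypothetical test: 1% of patients have the condition.
p = posterior_given_positive(prior=0.01, sensitivity=0.95, false_positive_rate=0.05)
```

With these inputs, `p` comes out at roughly 0.16: even after a positive result from a fairly accurate test, the condition remains unlikely because it is rare to begin with. A full Bayesian network chains many such updates across connected variables.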

Spam filters apply Bayesian methods to classify emails based on word frequencies. Risk assessment in finance and insurance relies on Bayesian models to estimate probabilities of adverse events. The explicit representation of uncertainty and the ability to update beliefs with new evidence make Bayesian networks particularly valuable for decision support in uncertain domains.

Hidden Markov Models (HMMs)

Hidden Markov Models are statistical models for sequential data where observed outputs depend on hidden states that evolve over time according to transition probabilities. The “hidden” states cannot be directly observed; only their emissions (outputs) are visible.

The underlying theory was developed by Leonard Baum and colleagues in the late 1960s, and speech recognition became its first major application in the 1970s, modeling spoken words as sequences of phonemes with observable acoustic features. The Viterbi algorithm efficiently finds the most likely sequence of hidden states given observations; the forward-backward algorithm computes state probabilities; and the Baum-Welch algorithm learns model parameters from data.
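As an illustration of decoding, the following is a compact sketch of the Viterbi algorithm on a widely used textbook-style toy model with two hidden states and three possible observations. The probabilities are illustrative, and a practical implementation would work with log probabilities to avoid numerical underflow on long sequences.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most likely hidden-state sequence for the observations, with its probability."""
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back = [{}]
    for obs in observations[1:]:
        scores, pointers = {}, {}
        for s in states:
            # Best previous state from which to arrive at s.
            prev = max(states, key=lambda p: best[-1][p] * trans_p[p][s])
            scores[s] = best[-1][prev] * trans_p[prev][s] * emit_p[s][obs]
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Trace back from the most probable final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for pointers in reversed(back[1:]):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[-1][last]

states = ["Healthy", "Fever"]
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
emit_p = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}
path, prob = viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p)
# path == ["Healthy", "Healthy", "Fever"], prob is about 0.01512
```

The algorithm keeps only the best-scoring route into each state at each step, so its cost grows linearly with sequence length instead of exponentially.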

Beyond speech, HMMs analyze biological sequences (gene finding, protein structure), model financial markets (regime detection, trading signals), and recognize gestures and activities from sensor data. While largely superseded by neural approaches for speech recognition, HMMs remain valuable for their interpretability and efficiency on small datasets.

Kalman Filters

Kalman filters are algorithms for estimating the state of a dynamic system from noisy observations, optimally combining predictions from a system model with measurements to minimize estimation error. Developed by Rudolf Kalman in 1960, the filter recursively updates state estimates as new observations arrive, maintaining estimates of both the state and its uncertainty.

The algorithm is computationally efficient and provides optimal estimates for linear systems with Gaussian noise. Extended Kalman filters and unscented Kalman filters handle nonlinear systems. Applications pervade engineering: navigation systems fuse GPS with inertial sensors; robotics combines odometry with sensor readings; autonomous vehicles track surrounding objects; and spacecraft maintain attitude estimates. Kalman filters exemplify the power of probabilistic reasoning for real-world systems where perfect information is impossible.
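The predict-and-update cycle can be sketched for the simplest case: a single scalar quantity that is assumed to stay roughly constant while being measured with noise. The variances and readings below are invented for illustration; real systems track multi-dimensional states with matrix forms of the same equations.

```python
def kalman_1d(measurements, process_var, measurement_var,
              initial_estimate=0.0, initial_var=1000.0):
    """Scalar Kalman filter for a (nearly) constant hidden value."""
    estimate, variance = initial_estimate, initial_var
    history = []
    for z in measurements:
        # Predict: the state model is "stay the same", so only uncertainty grows.
        variance += process_var
        # Update: blend prediction and measurement according to their uncertainties.
        gain = variance / (variance + measurement_var)
        estimate += gain * (z - estimate)
        variance *= (1 - gain)
        history.append((estimate, variance))
    return history

# Noisy readings of a value near 5.0 (hypothetical sensor data).
readings = [5.2, 4.8, 5.1, 4.9, 5.0]
history = kalman_1d(readings, process_var=1e-4, measurement_var=0.25)
final_estimate, final_variance = history[-1]
```

The gain starts near 1, so the filter trusts the first measurement almost entirely, then falls as confidence accumulates; the estimate settles near 5.0 while its variance shrinks with every reading.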

Probabilistic Programming

Probabilistic programming languages combine traditional programming with probabilistic inference, enabling flexible modeling of uncertainty without manually deriving inference algorithms. Programs specify generative models describing how data might have been produced; the inference engine automatically computes posterior distributions over unobserved variables given observed data.

Languages like Stan, PyMC, and Pyro allow practitioners to express complex Bayesian models in familiar programming syntax. Probabilistic programming democratizes sophisticated statistical modeling, making it accessible to researchers without deep expertise in inference algorithms.

Applications range from A/B testing and causal inference in tech companies to scientific modeling and machine learning research. The field connects to the broader goal of automating statistical reasoning, with ongoing research on more efficient inference algorithms and richer modeling languages.

Perception and Affective Computing

AI perception extends beyond visual recognition to encompass the full range of sensory modalities and even the detection of emotional states. These capabilities enable richer human-AI interaction and more sophisticated environmental understanding.

Multimodal Perception

Modern AI systems increasingly integrate multiple sensory modalities, including vision, audio, text, and touch, to build richer world models than any single modality could provide. Robots combine camera feeds with LIDAR (laser-based distance sensing) and tactile sensors to navigate environments and manipulate objects.

Virtual assistants process speech alongside contextual cues from user history and environmental data. Self-driving cars fuse camera, radar, and ultrasonic sensor data to perceive their surroundings redundantly, compensating for the weaknesses of each individual sensor.

Multimodal foundation models accept both images and text as input, enabling visual question answering and image-grounded conversation. The technical challenge lies in aligning representations across modalities: learning that a spoken word, its written form, and an image of the concept all refer to the same thing. Multimodal AI promises more natural interaction, as humans seamlessly combine senses when perceiving the world.

Speech Recognition and Synthesis

Converting spoken language to text (automatic speech recognition, ASR) and vice versa (text-to-speech, TTS) has reached near-human accuracy for many languages, enabling voice interfaces from Siri and Alexa to real-time transcription services.

Modern ASR systems, like OpenAI’s Whisper, use neural networks trained on hundreds of thousands of hours of audio to handle diverse accents, background noise, and domain-specific vocabulary. Real-time transcription enables live captioning for the deaf and hard of hearing, meeting transcription for business productivity, and voice-controlled interfaces for hands-free operation.

TTS systems have progressed from robotic monotone to naturalistic speech with appropriate prosody and emotion, powered by neural vocoders that generate high-fidelity audio. Voice cloning raises ethical concerns: synthesizing convincing speech from minutes of samples enables both accessibility applications (preserving the voices of those losing their ability to speak) and potential for fraud and misinformation.

Affective Computing

Affective computing, a field pioneered by MIT’s Rosalind Picard, focuses on detecting and simulating human emotions. Systems analyze facial expressions (detecting smiles, frowns, or surprise), voice tone (identifying stress, excitement, or sadness), physiological signals (heart rate variability, skin conductance), and text sentiment to infer emotional states.

Applications range from customer service sentiment analysis and market research to mental health monitoring and educational systems that adapt to student frustration. Automotive systems monitor driver drowsiness and attention; therapeutic applications help autistic individuals recognize emotions in others. However, the field faces significant criticism.

The science of emotion recognition from facial expressions is contested, with studies showing substantial individual and cultural variation that undermines universal claims. Ethical concerns about manipulation and surveillance persist: should advertisers know our emotional vulnerabilities? Should employers monitor worker stress?

The technology’s potential benefits must be weighed against risks of misuse and the fundamental question of whether current approaches genuinely understand emotion or merely correlate surface features.

Efficiency and Optimization Techniques

After years of scaling up models, the industry is now focused on doing more with less. These techniques are making powerful AI accessible on consumer hardware and mobile devices.

Quantization

Quantization reduces the precision of model weights (e.g., from 32-bit floating point to 4-bit integers) to shrink model size and speed inference, often with minimal accuracy loss. A model quantized from 16-bit to 4-bit becomes roughly four times smaller and typically runs faster because less data has to move through memory, enabling deployment on resource-constrained devices.
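A minimal sketch of the idea, assuming simple symmetric quantization with one scale factor for the whole weight list: each weight is mapped to a small signed integer and later multiplied back by the scale. Production schemes are more elaborate (per-channel or per-group scales, calibration data), and the example weights are made up.

```python
def quantize(weights, bits=4):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]

weights = [0.82, -0.31, 0.05, -0.77, 0.44]  # illustrative values
codes, scale = quantize(weights, bits=4)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Every weight is now stored as one of 15 integer levels plus a single shared scale, and the rounding error per weight is bounded by half the scale.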

Research has shown that neural networks are surprisingly robust to reduced precision, particularly during inference. Quantization-aware training produces models specifically optimized for low-precision deployment. The technique has enabled large language models to run on consumer GPUs and even smartphones, democratizing access to powerful AI capabilities.

Knowledge Distillation

Knowledge distillation trains smaller “student” models to mimic larger “teacher” models, transferring capabilities to more efficient architectures. The student learns not just the teacher’s final predictions but the full probability distribution over outputs, capturing nuances that hard labels would miss.
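One common way to express "learning the full probability distribution" is to soften both models' outputs with a temperature and penalize the divergence between them. The sketch below assumes that formulation; the temperature value and the logits are illustrative, and practical training usually combines this term with an ordinary hard-label loss.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a flatter distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the student's soft predictions to the teacher's soft targets."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

teacher = [4.0, 1.5, 0.2]   # hypothetical logits for classes such as cat, dog, car
student = [2.0, 2.0, 0.5]
loss = distillation_loss(teacher, student)
```

The soft targets tell the student not only that the first class is correct but also that the second is a more plausible mistake than the third, information a hard label would discard.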

Distilled models can achieve 90%+ of the teacher’s performance with a fraction of the parameters and compute. The technique enables deploying powerful AI on edge devices, mobile phones, and in latency-sensitive applications where large models would be impractical. Many production AI systems use distilled models optimized for their specific deployment constraints.

Mixture of Experts (MoE)

Mixture of Experts architectures activate only a subset of parameters for each input, achieving the capacity of large models with the inference cost of smaller ones. A MoE model might have 100 billion parameters but only activate 10 billion for any given input, routing through a learned gating mechanism to the most relevant expert subnetworks. This approach provides the knowledge diversity of large models while maintaining reasonable inference costs. GPT-4 and Gemini reportedly use MoE architectures. The technique represents a fundamental shift from dense models where every parameter is used for every input to sparse models that adaptively allocate computation.
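The routing step can be sketched with top-k gating: pick the k highest-scoring experts, normalize their scores, and combine only those experts' outputs. In a real model the gate scores come from a learned layer applied to the input and the experts are neural subnetworks; here both are stand-ins (fixed scores and tiny functions) assumed for illustration.

```python
import math

def top_k_route(gate_logits, k=2):
    """Select the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    routing = top_k_route(gate_logits, k)
    output = sum(weight * experts[i](x) for i, weight in routing.items())
    return output, routing

# Stand-in experts and gate scores (hypothetical).
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
gate_logits = [0.1, 2.0, -1.0, 1.5]
output, routing = moe_forward(3.0, experts, gate_logits, k=2)
```

Only experts 1 and 3 are executed for this input; the other two contribute parameters to the model's total capacity but no compute to this particular forward pass.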

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation combines LLMs with external knowledge bases, allowing models to access up-to-date information without retraining. Instead of storing all knowledge in model weights, RAG systems retrieve relevant documents from a knowledge base and provide them as context for generation.

This approach addresses several LLM limitations: knowledge cutoff dates matter less when the model can access current information; hallucinations are reduced when answers are grounded in retrieved documents; and domain adaptation is simplified by updating the knowledge base rather than retraining the model. RAG has become a standard pattern for enterprise AI applications requiring accurate, current information.
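The retrieve-then-generate flow can be sketched without any model at all. The example below ranks documents by simple word overlap and assembles a prompt; production systems typically use embedding similarity and a vector index instead, and the documents, function names, and prompt wording here are all hypothetical.

```python
def tokenize(text):
    """Lowercase and split into a set of words, ignoring basic punctuation."""
    for mark in "?.,":
        text = text.replace(mark, " ")
    return set(text.lower().split())

def retrieve(query, documents, top_k=1):
    """Rank documents by how many query words they share (a stand-in for vector search)."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(query, documents, top_k=1):
    """Place the retrieved passages ahead of the question for the LLM to use."""
    context = "\n".join(retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

knowledge_base = [
    "The return window for laptops is 30 days.",
    "Shipping to Canada takes 5 business days.",
    "Gift cards never expire.",
]
question = "What is the return window for laptops?"
prompt = build_prompt(question, knowledge_base)
```

The resulting `prompt` would then be sent to a language model. Updating the system's knowledge means editing `knowledge_base`, with no change to the model itself.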

Sparse Attention

Sparse attention mechanisms process only relevant portions of long contexts, reducing computational requirements from quadratic to linear or near-linear in sequence length. Standard transformer attention scales quadratically with input length, making long contexts computationally prohibitive.

Sparse variants like Longformer, BigBird, and various linear attention mechanisms attend only to local windows, specific patterns, or learned important positions. These techniques enable processing of document-length or book-length inputs that would be impossible with standard attention. Sparse attention is particularly important for applications involving long documents, code repositories, or extended conversations.
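The saving from local windows can be shown by counting which position pairs are allowed to attend to each other. This sketch covers only the sliding-window pattern; models such as Longformer and BigBird combine it with additional global or random connections, which are omitted here.

```python
def sliding_window_mask(n, window):
    """mask[i][j] is True when position i may attend to position j."""
    return [[abs(i - j) <= window for j in range(n)] for i in range(n)]

def count_pairs(mask):
    """Number of attention scores that must be computed."""
    return sum(sum(row) for row in mask)

n = 8
full_pairs = n * n                                      # standard attention: 64
local_pairs = count_pairs(sliding_window_mask(n, 1))    # window of 1 neighbor: 22
```

Full attention grows as n squared, while the windowed pattern needs at most n * (2 * window + 1) scores, which is linear in sequence length for a fixed window.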

Hardware and Scaling

AI progress is tightly coupled with hardware advancement. The computational demands of modern AI systems have driven innovation in processor design and raised fundamental questions about the economics of intelligence.

GPUs (Graphics Processing Units)

Graphics Processing Units, originally designed for rendering video games, proved ideal for the parallel matrix operations required by neural networks. A GPU contains thousands of smaller cores optimized for performing the same operation on many data elements simultaneously, exactly what neural network training requires.

NVIDIA’s CUDA platform, released in 2007, made GPU programming accessible to researchers outside graphics, enabling the deep learning revolution. The 2012 ImageNet breakthrough used two GPUs to train AlexNet in days rather than weeks. Today, AI training clusters contain thousands of GPUs, with NVIDIA’s H100 and subsequent generations commanding premium prices and extended wait times.

The company’s market capitalization has grown to rival the largest tech firms, reflecting AI’s dependence on specialized hardware. AMD and Intel compete with alternative GPU architectures, while cloud providers offer GPU access on demand, democratizing access to AI compute.

TPUs (Tensor Processing Units)

Google’s Tensor Processing Units are custom Application-Specific Integrated Circuits (ASICs) designed specifically for neural network workloads. First deployed in 2015 for internal Google services, TPUs offer superior efficiency for large-scale training and inference compared to general-purpose GPUs. The architecture optimizes for the reduced-precision arithmetic common in neural networks, packing more computation into each chip.

Google’s TPU pods, containing thousands of interconnected TPUs, train the largest models, including Gemini. Google Cloud offers TPU access to external customers, and the TPU Research Cloud provides free access for academic projects. The TPU’s tight integration with TensorFlow (Google’s machine learning framework) creates a vertically integrated AI stack.

Other companies have pursued similar custom silicon: Amazon’s Trainium and Inferentia, Tesla’s Dojo, and numerous AI chip startups seeking to capture value in the hardware layer.

Huang’s Law

Named after NVIDIA CEO Jensen Huang, this postulate notes that GPU performance for AI workloads has been improving faster than Moore’s Law would predict: more than doubling every two years, with the pace accelerating recently.

The H100 GPU offered roughly 6x the AI performance of its predecessor, the A100, in just two years. This performance growth comes from multiple sources: more transistors (still following Moore’s Law for now), architectural improvements specific to AI workloads, better memory bandwidth, and software optimizations.

Huang’s Law has profound implications for AI capabilities: if compute costs for a given performance level decline rapidly, then today’s impossibly expensive AI systems become affordable within years. However, the law faces potential headwinds from the end of Moore’s Law scaling and the physical limits of energy consumption. How long Huang’s Law can continue will significantly influence AI’s trajectory.

Scaling Laws

Research has shown that model performance improves predictably with increases in parameters, data, and compute, following mathematical relationships known as scaling laws.

OpenAI’s 2020 paper demonstrated that language model loss decreases as a power law of these factors, enabling researchers to predict capabilities before training. Perhaps more surprisingly, “emergent capabilities” (abilities not present in smaller models) appear once models cross certain size thresholds.
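A power law has a simple practical consequence: every doubling of a resource multiplies the loss by the same fixed factor. The sketch below uses the general form L(N) = (N_c / N)^alpha for parameter count N; the constants `n_c` and `alpha` are placeholder values chosen for illustration, not the fitted values from any paper.

```python
def power_law_loss(n_params, n_c=1e14, alpha=0.08):
    """Loss predicted by a power law in parameter count (placeholder constants)."""
    return (n_c / n_params) ** alpha

small = power_law_loss(1e9)
large = power_law_loss(2e9)
ratio = large / small          # equals 2 ** -alpha regardless of the starting size

# Extrapolation: predict the loss of a model 100x larger before training it.
predicted = power_law_loss(1e11)
```

With an exponent this small, each doubling trims the loss by only a few percent, which helps explain why large gains have required orders-of-magnitude increases in scale.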

A model might fail at arithmetic below some parameter count, then suddenly succeed above it. These observations have driven a compute arms race, with leading labs investing billions in ever-larger training runs.

The strategy of throwing more resources at proven architectures has dominated recent AI progress, though some researchers argue that algorithmic improvements offer better returns. Whether scaling alone will lead to AGI, or whether qualitative breakthroughs are needed, remains the field’s central debate. Evidence of scaling law slowdowns would reshape investment strategies and research priorities throughout the industry.

Emerging Technologies

As of late 2025, several new architectural innovations have emerged to solve the limitations of traditional Large Language Models (LLMs). These techniques address challenges in context management, reasoning depth, and training efficiency.

Compaction (OpenAI)

Introduced with GPT-5.1, compaction technology acts as a “garbage collector” for the model’s attention span. Traditional transformers process a fixed context window, but as conversations or tasks extend beyond that window, older information is simply truncated, potentially losing critical context. Compaction actively summarizes and prunes the context window, preserving essential information while discarding irrelevant details.

This prevents the model from getting “confused” by too much information during long tasks and enables sustained operation over extended periods. For coding tasks, GPT-5.1 Codex Max can maintain context over 24-hour sessions by compacting completed code sections while keeping active working memory fresh.

The technique represents a shift from passive context windows to active memory management, bringing AI systems closer to how humans selectively remember and forget.

Deep Think (Google)

Deep Think is a reasoning engine in Gemini 3 that moves beyond simple token prediction to deliberate, multi-step reasoning. Rather than generating each token based primarily on immediate context, Deep Think allows the model to “think” before answering, simulating System 2 human thinking (slow, logical, deliberate) rather than System 1 (fast, intuitive, pattern-matching).

The system constructs internal reasoning chains, considers multiple approaches, and verifies conclusions before committing to outputs. This proves particularly valuable for mathematical problems, coding challenges, and complex logical reasoning where quick pattern matching fails.

The architecture involves dedicated reasoning modules that can iterate on problems, backtrack when hitting dead ends, and allocate more computation to harder questions. Deep Think represents the industry’s broader shift toward reasoning-focused models that can handle tasks requiring genuine thought rather than mere information retrieval.

Cold Start Self-Training (DeepSeek)

Cold Start Self-Training, a method pioneered by Chinese AI lab DeepSeek, enables models to iteratively generate their own training data to improve reasoning capabilities, bypassing the need for expensive external datasets. The process begins with a base model that can solve some problems; the model generates solutions to harder problems, filters for correct answers, and trains on its own successful outputs.

This bootstrapping cycle continues, with each iteration producing a more capable model that can tackle more difficult challenges. DeepSeek’s approach proved remarkably effective for mathematical reasoning, enabling their DeepSeekMath-V2 model to achieve IMO Gold Medal performance. The technique challenges assumptions that frontier AI requires massive human-labeled datasets, potentially democratizing advanced model development.

Cold Start Self-Training relates to broader research on self-improvement and recursive self-enhancement, raising questions about whether models might eventually improve themselves without human oversight.

Use Cases

AI has permeated virtually every sector, but the rise of autonomous agents has unlocked new categories of utility.

Autonomous Coding

Autonomous Coding has revolutionized software development. Unlike previous systems that suggested code snippets, AI coding agents can be assigned a complex refactoring ticket and work on it autonomously for 24 hours or longer. They write code, run tests, interpret error messages, fix bugs, and submit a final pull request without human intervention.

Research and Commerce

OpenAI’s Shopping Research Agent (powered by GPT-5 Mini) demonstrates how AI is changing e-commerce. Instead of providing a list of links, the agent conducts deep research to generate structured “buyer’s guides” tailored to the user’s specific criteria. Notably, it prioritizes privacy by refusing to share user intent data with retailers, positioning the AI as a neutral advocate for the consumer.

Multimodal Content Creation

AI platforms like ElevenLabs have pivoted from audio-only to full multimodal production. By integrating models like OpenAI’s Sora 2 Pro and Google’s Veo 3.1, these tools allow creators to edit video, generate voiceovers, and modify scripts in a single unified timeline, streamlining the creative workflow.

Computer Vision

Computer vision enables machines to interpret and understand visual information from the world. This field has advanced dramatically with deep learning, enabling applications that seemed like science fiction just a decade ago.

Object Detection and Recognition

Object detection and recognition systems identify and locate objects within images or video streams, drawing bounding boxes around detected items and classifying them.
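A predicted bounding box is commonly compared with a reference box using intersection over union (IoU), the overlap area divided by the combined area. The sketch below is a minimal illustration that assumes boxes are given as (x1, y1, x2, y2) corner coordinates; the function name iou and the box format are choices made for this example, not the API of any particular detector.

```python
def iou(box_a, box_b):
    """Intersection over union for two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region; zero if the boxes do not overlap.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0
```

An IoU of 1.0 means the boxes coincide and 0.0 means they do not overlap; evaluation protocols typically count a detection as correct when IoU exceeds a chosen threshold such as 0.5.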

Modern architectures like YOLO (You Only Look Once) and Faster R-CNN achieve real-time detection of hundreds of object categories. Security surveillance systems use object detection to identify people, vehicles, and suspicious activities.

Autonomous vehicles detect pedestrians, traffic signs, and other cars. Retail stores use visual AI for inventory management, automatically tracking stock levels. The technology has matured to the point where consumer smartphones can identify thousands of objects, enabling visual search and accessibility features for the visually impaired.

Optical Character Recognition (OCR)

Optical Character Recognition converts images of text into machine-readable text, enabling digitization of documents at scale. Modern OCR powered by deep learning handles diverse fonts, handwriting, and degraded historical documents that defeated earlier approaches. Google has digitized millions of books using OCR; businesses process billions of pages of invoices, receipts, and contracts annually.

The technology enables searchable archives of historical records, accessibility for visually impaired users through text-to-speech, and automation of data entry tasks. Advanced OCR systems handle multiple languages, detect text orientation, and correct for image distortions, achieving accuracy rates exceeding 99% on clean printed text.

Face Detection and Recognition

Face detection and recognition systems identify human faces in images and match them against databases of known individuals. The technology has become remarkably accurate, with error rates below 0.1% on benchmark datasets. Consumer applications include smartphone unlocking (Face ID), photo organization (grouping photos by person), and social media tagging suggestions.

Enterprise and government applications include identity verification, access control, and law enforcement investigations. However, the technology remains highly controversial. Studies have documented higher error rates for women and people with darker skin tones, raising fairness concerns. Civil liberties organizations oppose mass surveillance applications. Several cities have banned government use of facial recognition, while the EU has debated comprehensive restrictions.

Medical Imaging

AI assists radiologists and pathologists by detecting anomalies in X-rays, MRIs, CT scans, and microscope slides, often catching patterns that humans might miss. Deep learning models trained on millions of images can identify early-stage cancers, diabetic retinopathy, and cardiovascular disease markers.

FDA-cleared AI systems now assist with mammography screening, detecting breast cancer with accuracy matching or exceeding human radiologists. The technology promises to extend specialist capabilities to underserved regions and reduce diagnostic delays. However, deployment requires careful validation, as models trained on one population may perform poorly on others. The field continues to grapple with questions of liability, workflow integration, and appropriate human oversight.

Document AI

Document AI combines OCR with natural language processing to extract structured data from invoices, contracts, forms, and other business documents automatically. Rather than simply converting images to text, these systems understand document structure, identifying fields like “vendor name,” “invoice total,” or “contract date” and extracting their values. Enterprise applications process millions of documents daily, automating accounts payable, contract analysis, and compliance monitoring.

The technology handles semi-structured documents where layouts vary across vendors or formats. Advances in layout understanding and entity extraction have reduced manual data entry by orders of magnitude, though human review remains necessary for edge cases and high-stakes decisions.
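To make the idea of field extraction concrete, the sketch below pulls the three fields named above out of OCR output using fixed regular expressions. It is a deliberately simplified stand-in: the labels "Vendor:", "Date:", and "Total:" and the sample text are assumptions made for this example, and production Document AI systems rely on learned layout and entity models precisely because real documents do not follow one fixed pattern.

```python
import re

# Hypothetical label patterns; real invoices vary widely in wording and layout.
FIELD_PATTERNS = {
    "vendor_name": re.compile(r"^Vendor:[ \t]*(.+)$", re.MULTILINE),
    "invoice_total": re.compile(r"^Total:[ \t]*\$?([\d,]+\.\d{2})$", re.MULTILINE),
    "contract_date": re.compile(r"^Date:[ \t]*(\d{4}-\d{2}-\d{2})$", re.MULTILINE),
}


def extract_fields(ocr_text):
    """Return a dict of field name to extracted value, or None if not found."""
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        fields[name] = match.group(1).strip() if match else None
    return fields


sample = "Vendor: Acme Supplies\nDate: 2024-03-15\nTotal: $1,250.00\n"
```

Calling extract_fields(sample) yields a structured record; a field that returns None is a natural candidate for routing to human review.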

Visual Inspection AI

Visual inspection AI automates quality control in manufacturing by detecting defects, anomalies, and assembly errors in products on the production line. Camera systems capture images of each item; AI models trained on examples of good and defective products classify items in milliseconds.

Applications range from detecting scratches on smartphone screens to identifying weld defects in automotive assembly to spotting contamination in food packaging. The technology enables 100% inspection at production speeds impossible for human inspectors, improving quality while reducing costs. Modern systems use anomaly detection approaches that can identify novel defects not present in training data, adapting to new failure modes as they emerge.
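The anomaly detection idea can be sketched in a few lines: learn what "normal" looks like from good items only, then flag anything that deviates too far. The example below is a toy under stated assumptions. It uses a single hypothetical scalar measurement per item (for instance a surface-roughness score) rather than camera images, and a simple three-standard-deviation rule rather than a trained vision model.

```python
from statistics import mean, stdev


def fit(good_measurements):
    """Summarize known-good items as (mean, standard deviation)."""
    return mean(good_measurements), stdev(good_measurements)


def is_anomalous(value, model, threshold=3.0):
    """Flag a measurement more than `threshold` standard deviations from the mean."""
    mu, sigma = model
    return abs(value - mu) > threshold * sigma


# Hypothetical measurements taken from items known to be good.
model = fit([10.0, 10.1, 9.9, 10.2, 9.8])
```

Because the model is built only from good examples, it can flag a defect type it has never seen, which is the property that distinguishes this approach from a classifier trained on labeled defects.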

Natural Language Processing (NLP)

Natural language processing enables machines to understand, interpret, and generate human language. Once the province of specialized research, NLP has become ubiquitous through large language models and conversational AI.

Natural Language Understanding (NLU)

Natural Language Understanding focuses on comprehending the meaning and intent behind text, extracting structured information from unstructured language. NLU systems identify entities (people, organizations, locations, dates), recognize relationships between entities, and classify intent (is this email a complaint, a question, or a request?).

Sentiment analysis determines whether text expresses positive, negative, or neutral opinions. Named entity recognition tags mentions of specific things. Coreference resolution determines that “she” refers to “Marie Curie” mentioned earlier.

These capabilities underpin applications from email routing to customer feedback analysis to intelligence gathering. Modern approaches using transformers have dramatically improved accuracy, though understanding nuance, sarcasm, and context-dependent meaning remains challenging.

Natural Language Generation (NLG)

Natural Language Generation produces human-like text, from simple chatbot responses to full articles, reports, and creative writing. Template-based systems filled slots with data; modern neural approaches generate fluent prose from scratch. Large language models can produce coherent long-form content, summarize documents, translate between languages, and adapt writing style to different audiences.
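The template-based approach mentioned above is simple enough to show directly. The sketch below fills slots in a fixed sentence with data; the template wording, the function name render_report, and the revenue figures are all invented for illustration.

```python
TEMPLATE = (
    "{region} revenue was ${revenue:,.0f} in {quarter}, "
    "{direction} {change:.1f}% from the prior quarter."
)


def render_report(region, quarter, revenue, prior_revenue):
    """Fill the template slots from structured data."""
    change = (revenue - prior_revenue) / prior_revenue * 100
    direction = "up" if change >= 0 else "down"
    return TEMPLATE.format(
        region=region,
        quarter=quarter,
        revenue=revenue,
        direction=direction,
        change=abs(change),
    )
```

Every output follows the same sentence shape, which makes template systems predictable and easy to audit but unable to vary phrasing the way neural generators can.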

Business applications include automated report generation (converting data into narrative summaries), personalized marketing content, and customer service responses. The technology raises questions about authenticity and attribution: should AI-generated text be labeled? When does assistance become authorship? NLG capabilities have sparked both enthusiasm about productivity gains and concern about potential misuse for disinformation.

Machine Translation

Machine translation systems convert text between languages, now approaching human quality for many language pairs. Google Translate serves over 500 million users daily, handling translations across 130+ languages. Neural machine translation, introduced around 2016, dramatically improved fluency by considering full sentences rather than phrase-by-phrase translation.

Modern systems handle idiomatic expressions, preserve document formatting, and adapt to domain-specific terminology. Real-time translation enables cross-language communication in meetings and video calls. However, quality varies significantly across language pairs: high-resource pairs like English-Spanish achieve near-professional quality, while low-resource languages lag behind. Challenges remain with highly contextual language, cultural references, and specialized domains where training data is scarce.

Sentiment Analysis

Sentiment analysis, also known as opinion mining or emotion AI, automatically determines whether text expresses positive, negative, or neutral opinions, enabling organizations to process customer feedback at scale. Brand monitoring services track millions of social media posts, reviews, and news articles to gauge public sentiment toward products and companies.

Customer service teams route complaints based on detected frustration levels. Financial analysts gauge market sentiment from news and social media. The technology has evolved from simple word-counting (positive words minus negative words) to deep learning models that understand context, negation, and nuance.

Fine-grained sentiment analysis identifies specific aspects being praised or criticized: “great camera but terrible battery life.” Despite improvements, detecting sarcasm, cultural context, and mixed sentiments remains challenging.
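The early word-counting method (positive words minus negative words) fits in a few lines, and running it shows why the field moved on. The sketch below uses tiny hand-picked word lists that are assumptions for this example, not a published lexicon.

```python
import re

# Tiny illustrative lexicons; real lexicons contain thousands of entries.
POSITIVE = {"great", "good", "excellent", "love"}
NEGATIVE = {"terrible", "bad", "poor", "hate"}


def lexicon_score(text):
    """Count positive words minus negative words; above zero reads as positive."""
    tokens = re.findall(r"[a-z']+", text.lower())
    positives = sum(token in POSITIVE for token in tokens)
    negatives = sum(token in NEGATIVE for token in tokens)
    return positives - negatives
```

On "great camera but terrible battery life" the score is 0, hiding the fact that two aspects received opposite opinions, and "not good at all" scores +1 because the counter ignores negation. These are the kinds of failures that context-aware models and aspect-level analysis aim to address.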

Chatbots and Virtual Assistants

NLP powers conversational interfaces that handle millions of queries daily, from chatbots built on ChatGPT and Google Gemini to customer service bots and voice assistants such as Siri, Alexa, and Google Assistant. Virtual assistants combine speech recognition, natural language understanding, dialogue management, and natural language generation to conduct multi-turn conversations.

Consumer applications handle tasks like setting reminders, playing music, controlling smart home devices, and answering questions. Enterprise chatbots automate customer support, reducing wait times and enabling 24/7 availability.

The latest generation of AI assistants, powered by large language models, can engage in open-ended conversation, explain concepts, help with writing tasks, and even assist with coding. Challenges include handling ambiguous requests, managing conversation context over long interactions, knowing when to escalate to human agents, and avoiding inappropriate or harmful responses.

Healthcare and Scientific Discovery

AI is accelerating breakthroughs in medicine and science, transforming how we discover drugs, understand biology, and deliver care. These applications represent some of AI’s most profound potential benefits for humanity.

Drug Discovery

Machine learning models like Google’s TxGemma or Microsoft’s BioEmu aim to predict how molecules will interact with biological targets, dramatically reducing the time and cost of identifying promising drug candidates. Traditional drug discovery screens millions of compounds through expensive wet-lab experiments; AI can virtually screen billions of molecules, prioritizing those most likely to succeed.

Deep learning models predict molecular properties like toxicity, solubility, and binding affinity from chemical structures. Generative models design entirely novel molecules optimized for desired characteristics. Companies like Insilico Medicine and Recursion Pharmaceuticals have advanced AI-designed drugs to clinical trials.

The COVID-19 pandemic accelerated adoption, with AI contributing to vaccine development and therapeutic discovery. However, drug development remains challenging: AI improves early-stage candidate identification but cannot shortcut the years of clinical trials required to establish safety and efficacy.

Protein Structure Prediction

DeepMind’s AlphaFold solved a 50-year-old grand challenge by accurately predicting protein structures from amino acid sequences, revolutionizing structural biology. Proteins fold into complex 3D shapes that determine their function, but predicting these shapes from sequences had defeated computational approaches for decades. AlphaFold’s deep learning architecture, trained on known protein structures, achieved accuracy comparable to experimental methods in a fraction of the time and at a fraction of the cost.

DeepMind released predicted structures for nearly every protein known to science, transforming fields from drug design to enzyme engineering. AlphaFold2’s success demonstrated that AI could crack problems that had resisted decades of expert effort, inspiring similar approaches to other scientific grand challenges. The work earned Demis Hassabis and John Jumper a share of the 2024 Nobel Prize in Chemistry.

Clinical Decision Support

AI assists doctors by analyzing patient data, suggesting diagnoses, and recommending treatments based on vast medical literature that no human could fully absorb. Clinical decision support systems alert clinicians to potential drug interactions, flag abnormal lab results, and suggest differential diagnoses based on symptoms.

Sepsis prediction models identify patients at risk hours before clinical deterioration, enabling early intervention. Treatment recommendation systems match patients to clinical trials or suggest therapies based on similar cases.

However, deployment challenges are significant: AI must integrate with complex healthcare IT systems, earn clinician trust, and demonstrate real-world benefit beyond benchmark performance. Concerns about bias, liability, and appropriate human oversight continue to shape regulatory approaches.

Personalized Medicine

By analyzing genetic data, health records, lifestyle factors, and treatment outcomes, AI can tailor treatments to individual patients rather than relying on one-size-fits-all protocols. Pharmacogenomics predicts how patients will respond to medications based on genetic variants, reducing adverse reactions and improving efficacy.

Oncology increasingly uses AI to match cancer patients to targeted therapies based on tumor genetics. Digital twins, computational models of individual patients, simulate treatment responses before administering drugs. Wearable devices generate continuous health data that AI analyzes for personalized insights and early warning signs.